As after installing http://support.microsoft.com/kb/2770917 on Windows Server 2012 Hyper-V hosts the integration services components are upgraded from 6.2.9200.16384 to 6.2.9200.16433. Windows Server 2012 guest get that same upgrade and as such also the newer integration services components. The guest with older OS version needed a different approach. So I turned to all the great PowerShell support now available for Hyper-V to automate this. Pretty pleased with the results of our adventures in PowerShell scripting I let the script go on Hyper-V cluster dedicated to test & development. As such there are some virtual machines on there running Windows 2003 SP2 (X64) and Windows XP SP3 (x86). Guess what, after running my script and verifying the integration services version I see that those VM still report version 6.2.9200.16384 . No update. Didn’t my new scripting achievement “take” on those older guests?

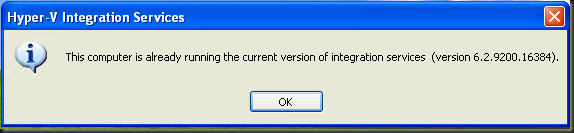

So I try the install manually and this is what I get:

Why is there no upgrade for these guests? Are they not needed or do I have an issue? So I mount the ISO and dig around in the files to find a clue in the date:

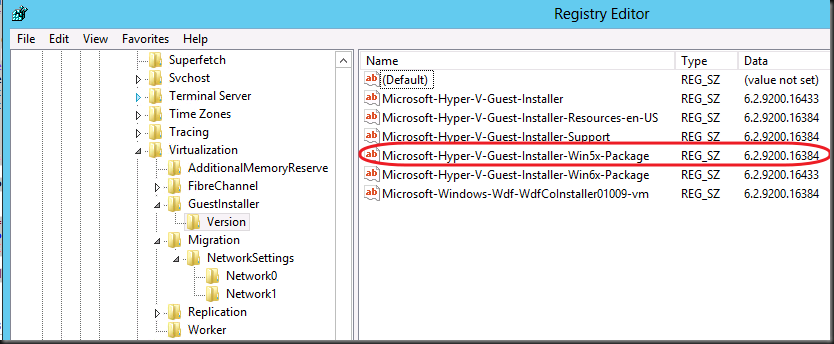

It looks like there are indeed no update components in there for Windows XP/ W2K3. So then I look at the following registry key on the host where I normally use the Microsoft-Hyper-V-Guest-Installer-Win6x-Package value to find out what integration services version my hosts are running:

Bingo, there it seems indicated that we indeed need version for XP/W2K3 and version for W2K8(R2)/W2K12 and Vista/Windows 7/Windows 8. Cool, but I had to check if this was indeed as it should be and I’m happy to confirm all is well. Ben Armstrong (http://blogs.msdn.com/b/virtual_pc_guy/) confirmed that this is how it should be. There was a update needed for backup that only applied to Windows 8 / Windows Server 2012 guests. As this fix was in a common component for Windows Server 2008 and later they all got the update. But for the older OS versions this was not the case and hence no update is need. Which is reflected in all the above. In short, this means your XP SP3 & W2K3SP2 VMs are just fine running the version of the integration services and are not in any kind of trouble.

This does leave me with an another task. I was planning to do enhancements to my script like feedback on progress, some logging, some better logic for clustered and non clustered environments, but now I have to also address this possibility and verify using the registry keys on the host which IC version I should check against per OS version. Checking against just for the one related to the host isn’t good enough ![]() .

.

![clip_image001[10] clip_image001[10]](https://blog.workinghardinit.work/wp-content/uploads/2012/11/clip_image00110_thumb.png)