Introduction

In Veeam Backup & Replication (VBR) v11 we have the new hardened repository host. I have also shared some other findings in previous blogs. You add this host to the Veeam managed servers via single-use credentials, which are not stored on the VBR server. After you add the repository server to the VBR managed servers, that account only needs permissions on the repository volumes. Let’s investigate how to change the service account on a Veeam hardened repository in case we ever need to. Warning: this is early info and not official guidance.

Where is that account configured for use with Veeam?

Naturally, we created the account for adding the hardened repository to the Veeam managed servers. We also set the correct permission on the backup repository volumes. After adding the repository host to Veeam managed servers, with the single-use credential method, we removed this account from the sudo group and that’s it.

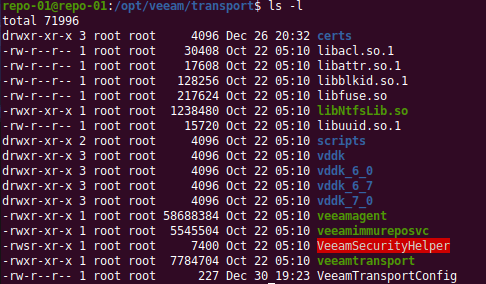

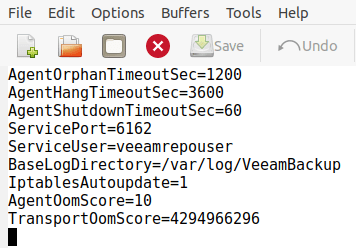
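If you want to double-check that the account really is out of the sudo group, a small helper like the one below can do it. This is only a sketch: the function name `in_group` and the account name `veeamrepouser` are placeholders of mine, not something Veeam provides.

```shell
# Hypothetical helper: succeeds (exit code 0) if the user is a member of the group.
in_group() {
    user="$1"
    group="$2"
    # id -nG prints the user's group names separated by spaces;
    # put one name per line and look for an exact match.
    id -nG "$user" | tr ' ' '\n' | grep -qx "$group"
}

# Example with a placeholder account name:
# if in_group veeamrepouser sudo; then echo "still in sudo"; else echo "not in sudo"; fi
```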

The VBR server itself does not hold the credentials. The credentials only live on the repository host. But where is that configured? Under /opt/veeam/transport you will find a config file named VeeamTransportConfig.

Open it up in your favorite editor and take a look.

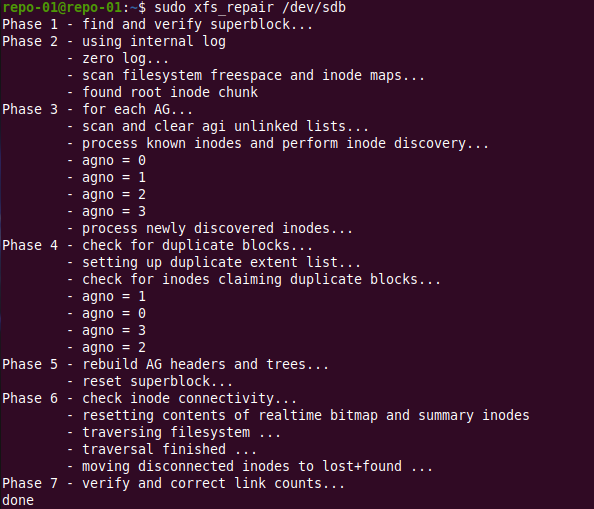
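If you plan to edit this file, keeping a copy first gives you something to roll back to. The helper below is a minimal sketch. The name `backup_config` and the `.bak.<timestamp>` suffix are my own choices. The default path is the one mentioned above.

```shell
# Hypothetical helper: copy the config file next to itself with a timestamp suffix
# and print the name of the copy.
backup_config() {
    cfg="${1:-/opt/veeam/transport/VeeamTransportConfig}"
    backup="$cfg.bak.$(date +%Y%m%d%H%M%S)"
    # -p preserves mode and timestamps (and ownership when run as root)
    cp -p "$cfg" "$backup" && echo "$backup"
}

# Example (run with enough rights to read the file and write to its folder):
# backup_config
```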

Change the service account on a Veeam hardened repository

From my testing, you can simply change the user account in the VeeamTransportConfig file if you ever need to. When you do, save the file and restart the veeamtransport service so it takes effect.

sudo service veeamtransport restart

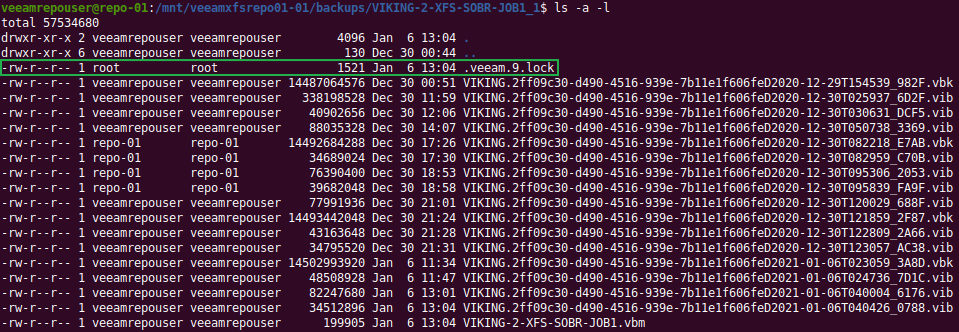

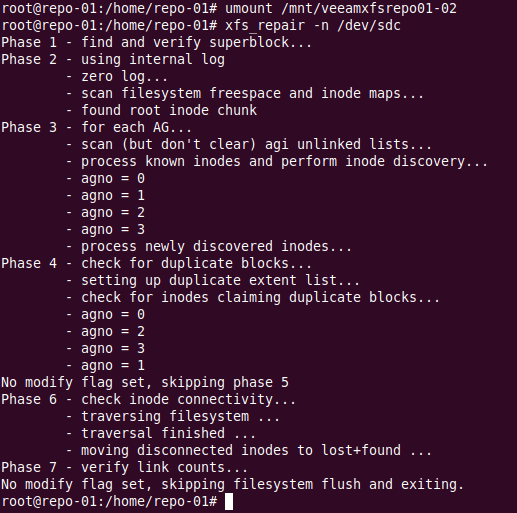

When you change this service user you must also take care of the permissions on the repository folder(s).

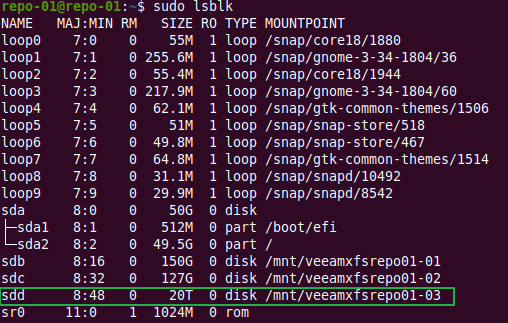

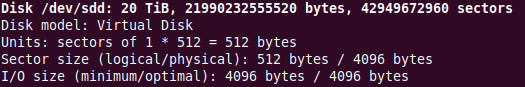

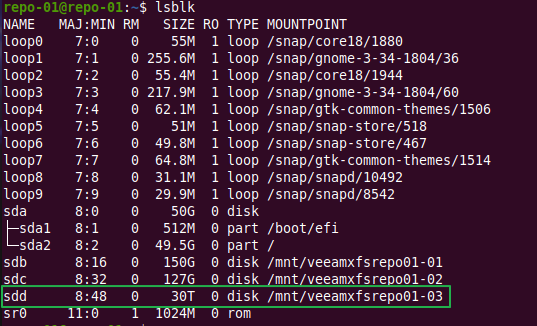

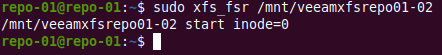

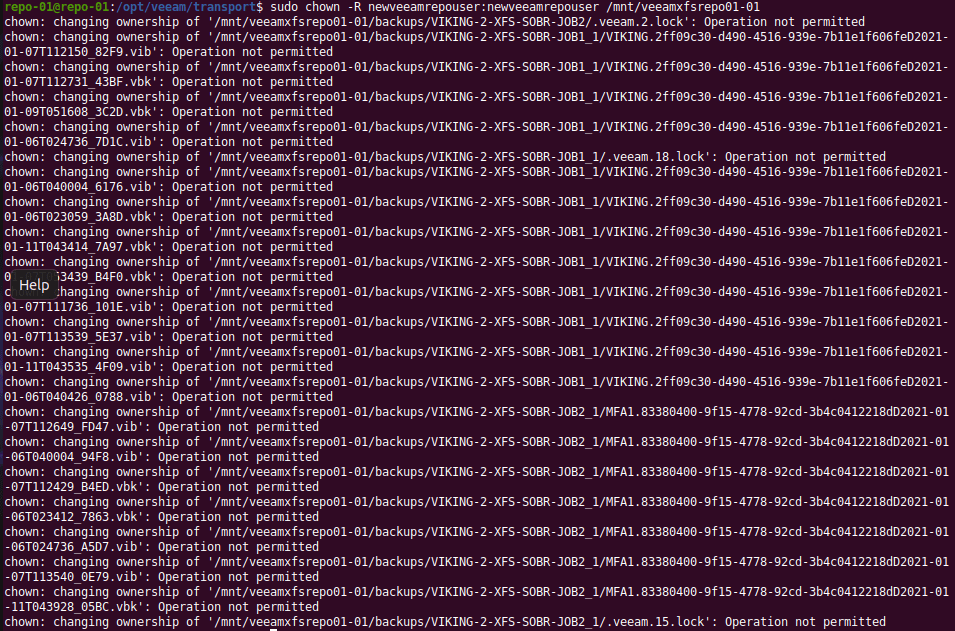

We change the ownership recursively for the drive mount to that user.

sudo chown -R newveeamrepouser:newveeamrepouser /mnt/veeamxfsrepo01-02

The permissions for the user should still be what they need to be (chmod 700).

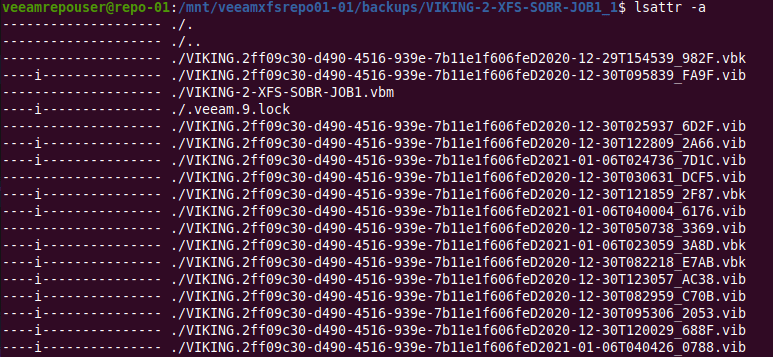

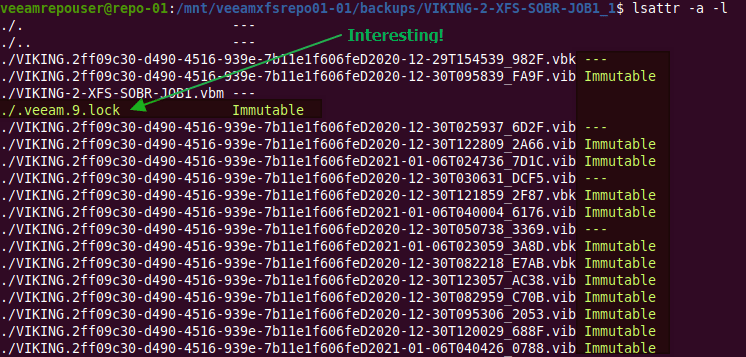

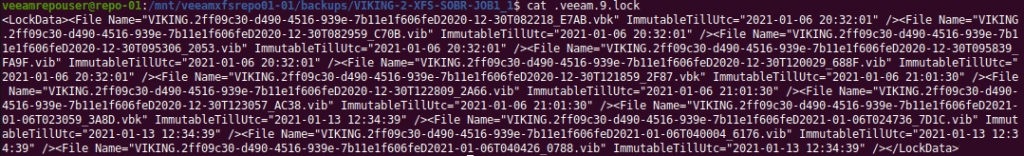

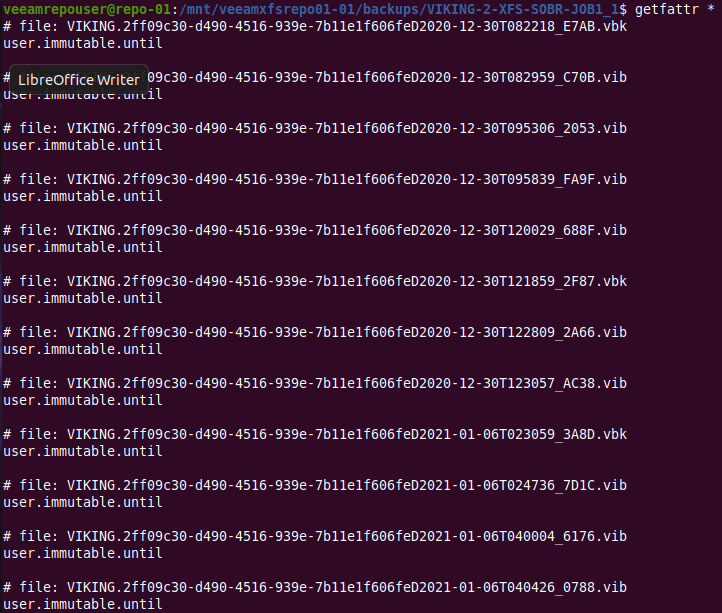

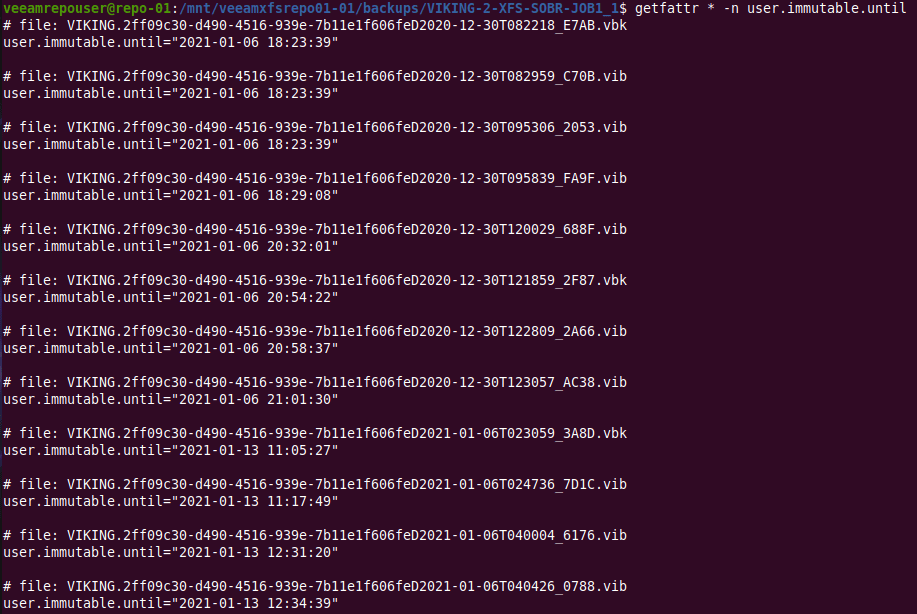
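To confirm the mode and owner on the mount point from the shell, something like the following should work. It is a sketch that assumes GNU `stat`, as found on common Linux distributions. The function name `show_owner_mode` is made up for this example.

```shell
# Hypothetical helper: print "<octal mode> <owner>:<group>" for a path (GNU stat).
show_owner_mode() {
    stat -c '%a %U:%G' "$1"
}

# Example with the mount point used in this post:
# show_owner_mode /mnt/veeamxfsrepo01-02
```

If the change went through, I would expect the mode to read 700 and both owner and group to be the new service account.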

Now, due to the immutability of the backup files, changing the owner will fail on those files, but that is OK.
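If you are curious which files kept their old owner, you can list everything under the mount point that does not belong to the new account. This is just a sketch, and the function name `not_owned_by` is mine. In my understanding the output should only contain the immutable backup files, but treat that as an assumption to verify in your own lab.

```shell
# Hypothetical helper: list everything under a directory not owned by the given user.
not_owned_by() {
    dir="$1"
    user="$2"
    # ! -user matches files whose owner differs from the named user
    find "$dir" ! -user "$user" -print
}

# Example (needs rights to traverse the repository folder, e.g. a root shell):
# not_owned_by /mnt/veeamxfsrepo01-02 newveeamrepouser
```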

My tests show that reading and restoring still work, and backups run just fine. It is all pretty transparent from the Veeam Backup & Replication side of things. I used to think I would need to run a full backup afterward, but Veeam seems to handle this like a champ, so it seems I do not have to take care of that. We’ll try to figure out more when V11 is generally available.

Now, you still might want to give Veeam support a call when you want or need to do this. Remember, this is just informational and sharing what I learn in the lab.

Conclusion

Normally you will not need to change this service account. But it might happen, for example because a policy mandates it. That is the reason I wanted to find out how to do this and whether you can do it without breaking the backup cycles. I also think this is why you can convert an existing Linux repository to a hardened one.