Introduction

Is it true that Veeam Backup & Replication leverages SMB Multichannel? That is a question that I was asked recently. The answer is yes, when you have a backup design and configuration that allows for this. If that’s the case it will even happen automatically when possible. That’s how SMB 3 works. That means it’s a good idea to pay attention to the network design so that you’re not surprised by the route your backup traffic flows. Mind you that this could be a good surprise, but you might want to plan for it.

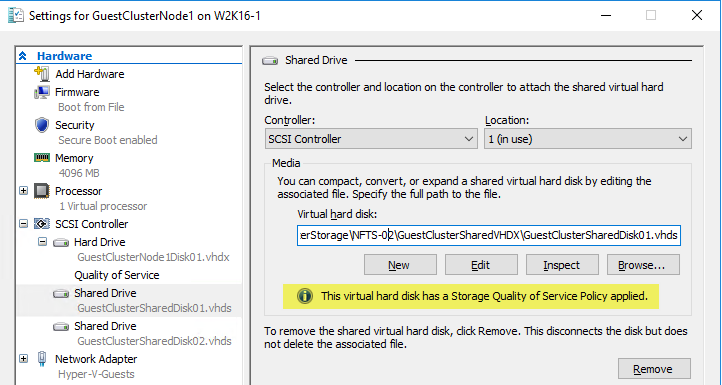

I’ll share a quick lab setup where SMB 3 Multichannel kicks in. Please don’t consider this a reference guide for your backup architectural design but as a demo of how SMB multichannel can be leveraged to your advantage.

Proving Veeam Backup & Replication leverages SMB Multichannel

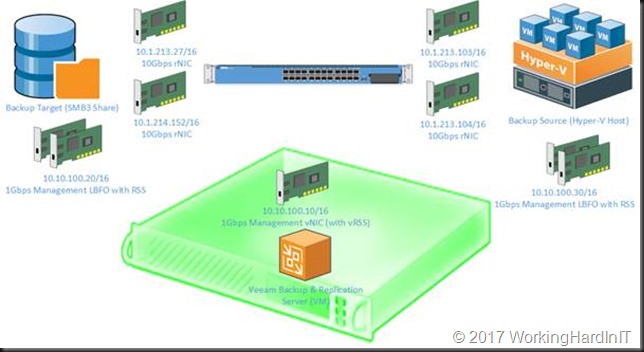

Here’s a figure of a quick lab setup I threw together.

There are a couple of significant things to note here when it comes to the automatic selection of the best possible network path.

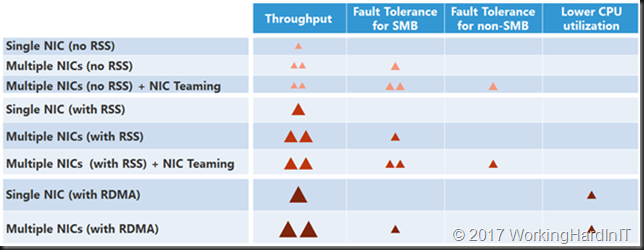

SMB 3 Multichannel picks the best solution based on its logic. You can read more about that here. I’ve included the figure with the overview below.

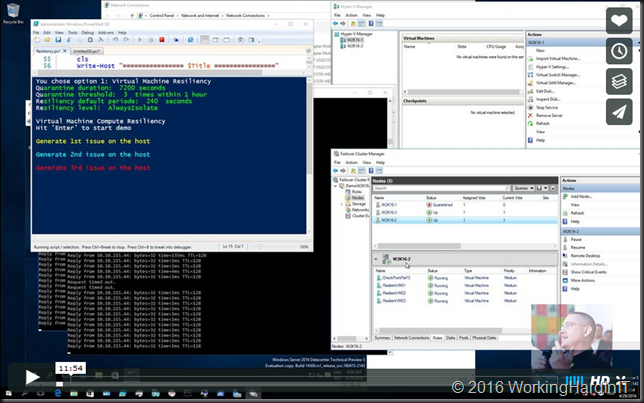

The figure nicely show the capabilities of the NIC situation. To select the best possible network path SMB 3 uses the following logic:

1. RDMA capable NICs (rNICs) are preferred and chosen first. rNICs combine the highest throughput, the lowest latency and bring CPU offloading. on the processor when pushing through large amounts of data.

2. RSS capable NICs: NIcs with Receive Side Scaling (RSS) improve scalability by not being limited to core zero on the server. Configured correctly RSS offers the second-best capabilities.

3. The speed of the NICs is the 3rd evaluation criteria: a 10 Gbps NIC offers way more throughput than a 1 Gbps NIC.

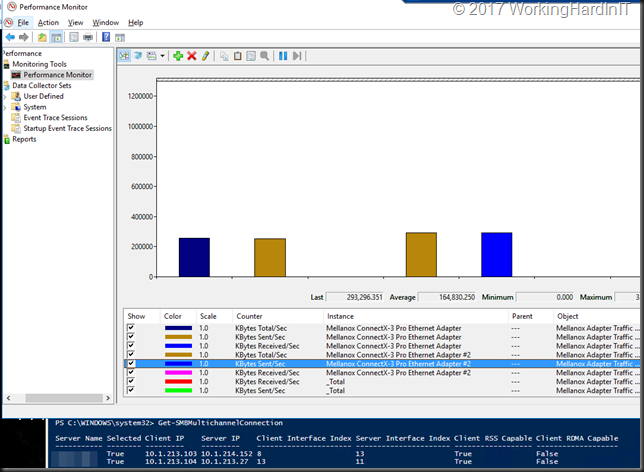

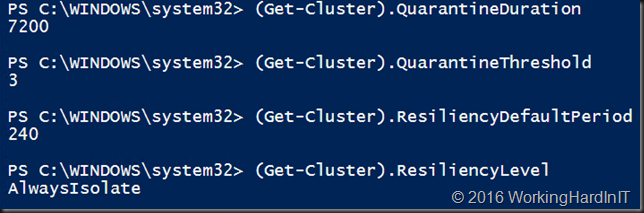

Following this logic it is clear that Multichannel will select our 2 RDMA capable 10Gbps NICs over the management LBFO interface which does not support RDMA and while supporting RSS can only deliver 2Gbps throughput at best. That’s exactly what you see in the screenshot below.

Conclusion

So yes, Veeam Backup & Replication leverages SMB Multichannel! Please note that this did not require us to set SMB 3 Multichannel constraints or a preferred network for backups in Veeam Backup & Replication. It’s possible to do so when needed but ideally you design your solution to have no need for this and let automatic detection chose the best network path correctly. This is the case in our little lab setup. The backup traffic flows over 10.1.0.0/16 network even when our Veeam Backup & Replication VM, the Hyper-V host and the backup target have 10.10.0.0/16 as their management subnet. That’s the one they exist on the Active Directory domain they belong to for standard functionality. But as both the source and the target can be reached via 2*10Gbps RDMA capable NICs on the 10.1.0.0/16 subnet SMB3 will select those according to its selection criteria. No intervention needed.

SMB Direct Support

Now that we have shown that Veeam Backup & Replication backups in certain configurations can and will leverage SMB Multichannel to your benefit another question pops up. Can and does Veeam Backup & Replication leverage SMB Direct? The answer to that is also, yes. If SMB Direct is correctly configured on all the hosts and switches their networks paths in between it will. Multichannel is the mechanism used to detect SMB Direct capabilities, so if multichannel works and sees SMB Direct is possible it will leverage that. That’s why when SMB Direct or RDMA is enabled on your NICs it’s important that it is configured correctly throughout the entire network path used. Badly configured SMB Direct leads to very bad experiences.

Now think about that. High throughput, low latency and CPU offloading, minimizing the CPU impact on your Hyper-V hosts, SOFS nodes, S2D nodes and backup targets. Not bad at all, especially not since you’re probably already implementing SMB Direct in many of these deployments. It’s certainly something that could and should be considered when design solutions or optimizing existing ones.

More SMB3 and Windows Server 2016 Goodness

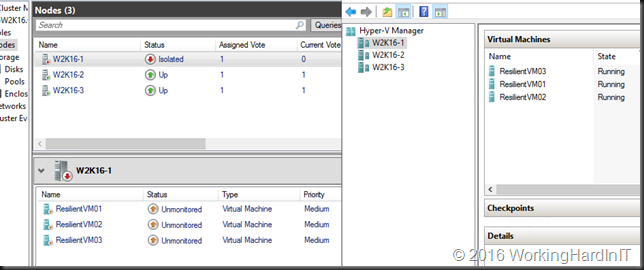

When you put your SMB3 file share continuously available on a Windows 2012 (R2) or Windows Server 2016 cluster (it doesn’t need to be on a CSV disk) you’ll gain high availability trough transparent failover with SMB3 and except for a short pause your backups will keep running even when the backup target node reboots or crashes after the File Server role has failed over. Now, start combing that with ReFSv3 in Windows Server 2016 and the Veeam Backup & Replication v9.5 support of this and you can see a lot of potential here to optimize many aspects of your backup design delivering effective and efficient solutions.

Things to investigate further

One question that pops up in my mind is what happens if we configure a preferred back-up network in Veeam Backup & Replication. Will this affect the operation of SMB multichannel at all? By that I means would enabling a preferred network in Veeam prevent multichannel from using more than one NIC?

I my opinion it should allow for multiple scenarios actually. When you have equally capable NICs that are on different subnets you might want to make sure it uses only one. After all, Veeam uses the subnet to configure a preferred path, or multiple subnets for that matter. Now multichannel will kick in with multiple equally capable NICs whether they are on the same subnet or not and if they are on the same subnet you might want them both to be leveraged even when setting a preferred path in Veeam. Remember that 1 IP / NIC is used to set up an SMB session and then it detects capabilities available, i.e. multiple paths, SMB Direct, RSS, speed, within 1 or across multiple subnets.

I’ll leave the combination of Veeam Backup & Replication and SMB multichannel for a future blog post.