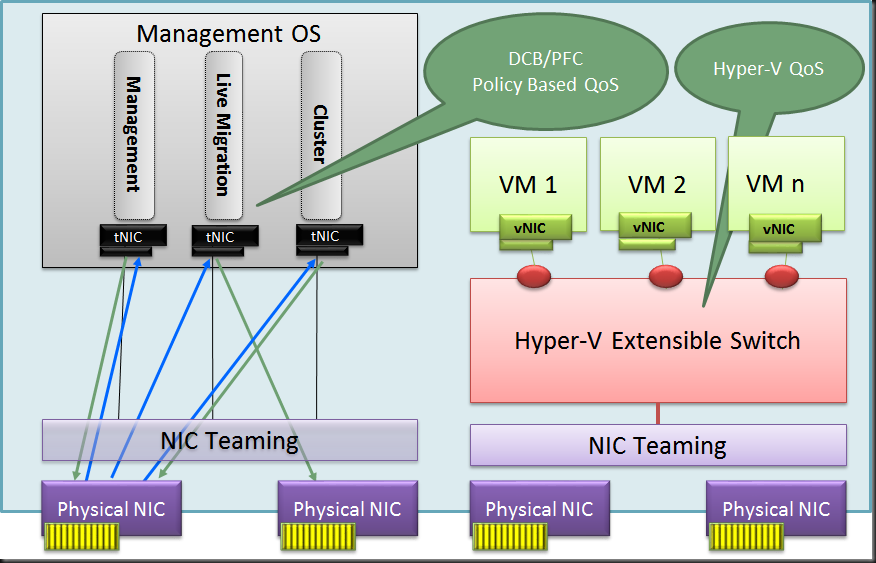

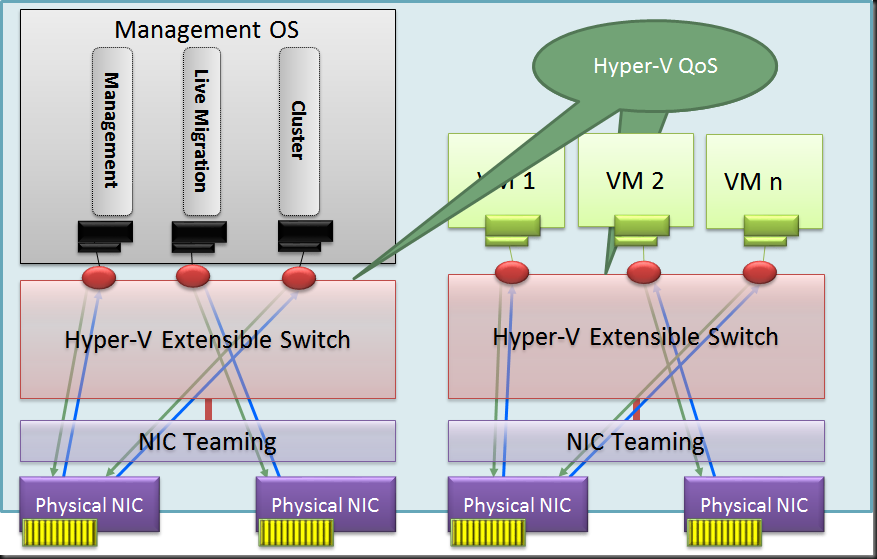

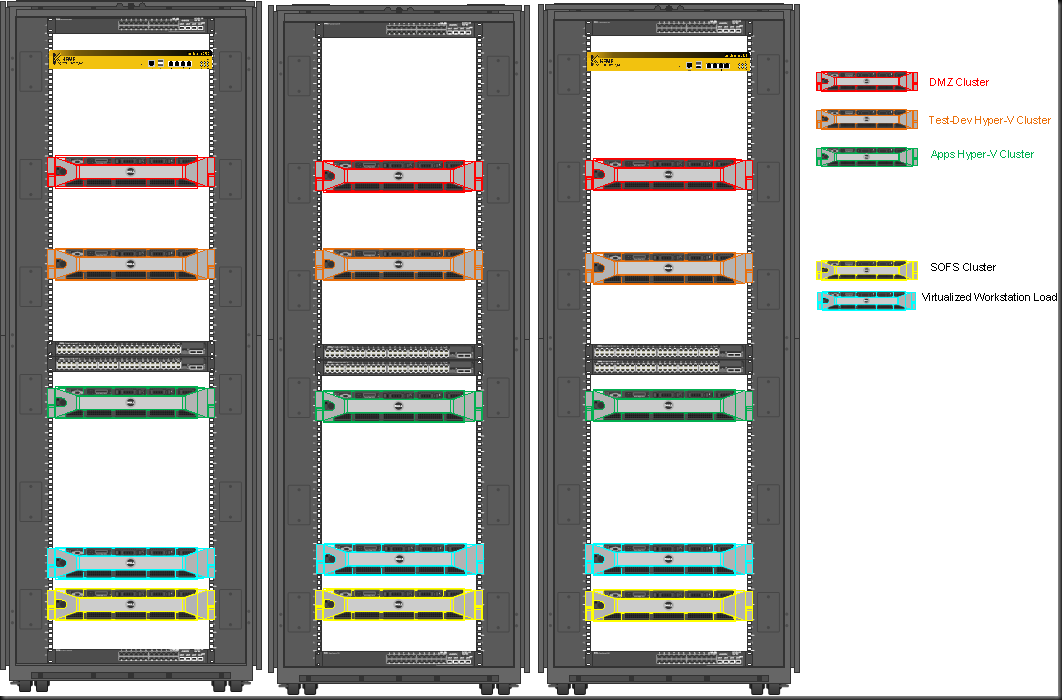

I’m testing & playing different Windows Server 2012 & Hyper-V networking scenarios with 10Gbps, Multichannel, RDAM, Converged networking etc. Partially this is to find out what works best for us in regards to speed, reliability, complexity, supportability and cost.

Basically you have for basic resources in IT around which the eternal struggle for the prefect balance finds place. These are:

- CPU

- Memory

- Networking

- Storage

We need both the correct balance in capabilities, capacities and speed for these in well designed system. For many years now, but especially the last 2 years it very save to say that, while the sky is the limit, it’s become ever easier and cheaper to get what we need when it comes to CPU, Memory. These have become very powerful, fast and affordable relative to the entire cost of a solution.

Networking in the 10Gbps era is also showing it’s potential in quantity (bandwidth), speed (latency) and cost (well it’s getting there) without reducing the CPU or memory to trash thanks to a bunch of modern off load technologies. And basically in this case it’s these qualities we want to put to the test.

The most trouble some resource has been storage and it has been for quite a while. While SSD do wonders for many applications the balance between speed, capacity & cost isn’t that sweet as for our other resources.

In some environments were I’m active they have a need for both capacity and IOPS and as such they are in luck as next to caching a lot of spindles still equate to more IOPS. For testing the boundaries of one resource one needs to make sure non of the others hit theirs. That’s not easy as for performance testing can’t always have a truck load of spindles on a modern high speed SAN available.

RAMDisk to ease the IOPS bottleneck

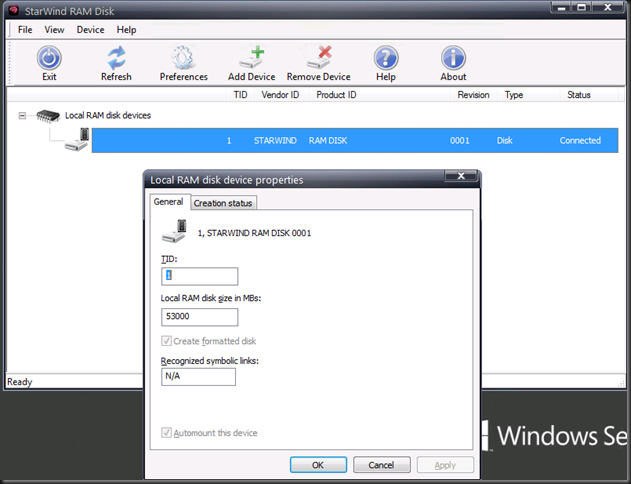

To see how well the 10Gbps cards with and without Teaming, Multichannel, RDMA are behaving and what these configuration are capable of I wanted to take as much of the disk IOPS bottle neck out of the equation as possible. Apart from buying a Violin system capable of doing +1 million IOPS, which isn’t going to happen for some lab work, you can perhaps get the best possible IOPS by combining some local SSD and RAMDisk. RAMDisk is spare memory used as a virtual disk. It’s very fast and cost effective per IOPS. But capacity wise it’s not the worlds best, let alone most cost effective solution.

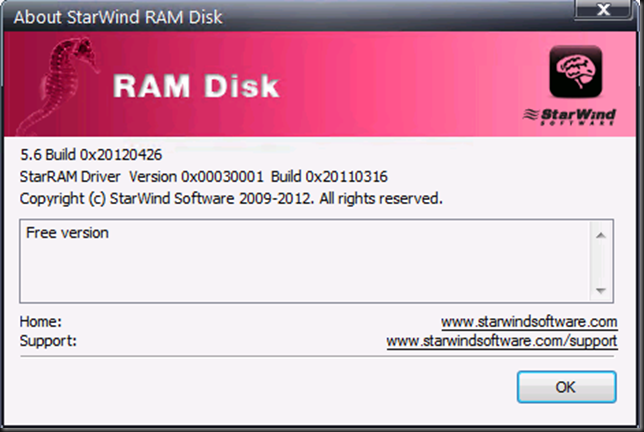

I’m using free RAMDisk software provided by StarWind. I chose this as they allow for large sized RAMDisks. I’m using the ones of 54GB right now to speed test copying fixed sized VHDX files. It install flawlessly on Windows Server 2012 and it hasn’t caused me any issues. Throw in some SSDs on the servers for where you need persistence and you’re in business for some very nice lab work.

You also need to be aware it doesn’t persist data when you reboot the system or lose power. This is not an issue if all we are doing is speed testing as we don’t care. Otherwise you’ll need to find a workaround and realize those ‘”flush the data to persistent storage” isn’t full proof or super fast, the SSDs do help here.

You have to register but the good news is that they don’t spam you to death at all, which I find cool. As said the tool is free, works with Windows Server 2012 and allows for larger RAMDisks where other free ones are often way to limited in size.

It has allowed me to do some really nice testing. Perhaps you want to check this out as well. WARNING: The below picture is a lab setup … I’m not a magician and it’s not the kind of IOPS I have all over the datacenters with 4 Cheapo SATA disks I touched my special magic pixie dust.

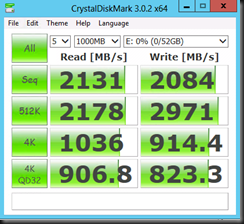

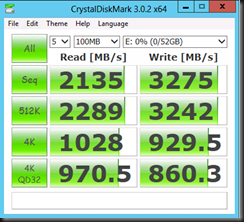

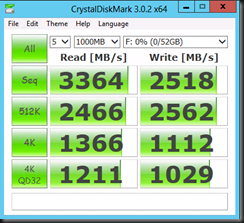

Some quick tests with a 52GB NTFS RAMDisk formatted with a 64K NTFS Allocation unit size.

|

|

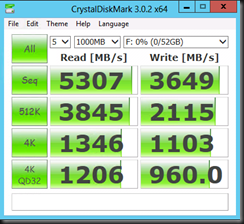

I also tested with another free tool from SoftPerfect ® RAM Disk FREE. It performs well but I don’t get to see the RAMDisk in the Windows Disk Management GUI, at least not on Windows Server 2012. I have not tested with W2K8R2.

| NTFS Allocation unit size 4K | NTFS Allocation unit size 64K |

|

|