You’re wrong

There, I said it. Sure you can. Don’t think you need to be a big data center to make this happen. You just need to think and work outside the box a bit, and when you’re not a large enterprise that’s a bit easier to do. Don’t do it the way a big-name, traditionalist partner would (strip & refit the entire structural cabling in the server room, high-end gear with big margins everywhere). You’re going for maximum results & value, not sales margins and bonuses.

I would even say you can’t afford to stay on 1Gbps much longer or you’ll be dealing with the fallout of being stuck in the past. Really, some of us are already looking at > 10Gbps connections to the servers. You need to move on from 1Gbps or you’ll be micromanaging your way around issues, sucking all the fun out of your work, with ever diminishing results and rising costs for both you and the business.

Give your Windows Server 2012 R2 Hyper-V environment the bandwidth it needs to shine and make the company some money. If all you want to do is spend as little money as possible, I’m not quite sure what your goal is. Either you need it or you don’t. I’m convinced we need it. So we must get it. Do what it takes. Let me show you one way to get what you need.

Sounds great, what do I do?

Take heart, be brave and of good courage! Combine it with skills, knowledge & experience to deliver a 10Gbps infrastructure as part of ongoing maintenance & projects. I just have to emphasize that some skills are indeed needed, pure guts alone won’t do it.

First of all you need to realize that you do not need to rip and replace your existing network infrastructure. That’s very hard to get approval for, takes too much time and rapidly becomes very expensive in both dollars and effort. Also, to be honest, quite often you don’t have that kind of pull. I for one certainly do not. And if I tried to do it that way it would take way too many meetings, diplomacy, politics, ITIL, ITML & Change Approval Board actions to make it happen. This adds to the cost even more, both in time and money. So leave what you have in place. For this exercise we assume it’s working fine, but you can’t afford to wait for many hours while every host in a 6 node cluster drains, and you need to drain all of them to add memory. So we have a need (OK, you’ll need a better business case than this, but don’t make too big a deal of it or you’ll draw unwanted attention) and we’ve taken away the fear factor of forklift replacing the existing network, which is a big risk & cost.
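To put a rough number on that drain time, here is a minimal back-of-the-envelope sketch in Python. The 256 GB of VM memory per host and the 80% usable link efficiency are assumptions for illustration only, not measurements from a real cluster. It also ignores the re-copying of dirty memory pages and any parallel migrations, so treat the result as an order of magnitude, nothing more.

```python
def drain_hours(host_count, vm_memory_gb_per_host, link_gbps, efficiency=0.8):
    """Rough time to live migrate all VM memory off each host, one host at a time.

    vm_memory_gb_per_host and efficiency are hypothetical inputs; plug in your own.
    """
    bits_per_host = vm_memory_gb_per_host * 8 * 1e9       # GB -> bits (decimal units)
    usable_bits_per_second = link_gbps * 1e9 * efficiency  # assumed usable throughput
    seconds_per_host = bits_per_host / usable_bits_per_second
    return host_count * seconds_per_host / 3600


# 6 node cluster, assumed 256 GB of running VM memory per host
for gbps in (1, 10):
    print(f"{gbps:>2} Gbps: {drain_hours(6, 256, gbps):.1f} hours")
```

With these assumed numbers the full drain cycle drops from a bit over four hours on 1Gbps to under half an hour on 10Gbps. That is the kind of figure that fits in a modest business case.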

So how do I go about it?

Start out as part of regular upgrades, replacements or new deployments. The money is there for those projects. Make sure to add some networking budget and leverage the other projects’ needs to support the networking needs.

Get a starter budget for a POC of some sort. It will get you started on acquiring some of the essential missing bits.

Buy reasonably cheap switches of reasonable port count that do all you need. If they’re readily available in a framework contract, great. You can get them as part of the normal procedures. But if you want to knock another 6% to 8% off the cost, order them directly from the vendor. Cut out the middle man.

Buy some gear as part of your normal refresh cycle. Adapt that life cycle a bit to suit your needs where possible. Funding for operational maintenance & replacement should already be in place, right?

Negotiate hard with your vendor. Listen, just like in the storage world, the network world has arrived at a point where they’re not going to be making tons of money just because they are essential. They have lots of competition and it’s only increasing. There are deals to be made, and if you choose the right hardware it’s gear that won’t lock you into proprietary cabling, SFP+ modules and such. Or not too much anyway.

Design options and choices

Small but effective

If you’re really on a minimal budget just introduce redundant (independent) stand-alone 10Gbps switches for the East-West traffic that only runs between the nodes in the data center: CSV, Live Migration, backup. You don’t even need to hook them up to the network for data traffic, you only need to be able to manage them remotely and that’s what they invented Out Of Band (OOB) ports for. See also an old post of mine, Introducing 10Gbps With A Dedicated CSV & Live Migration Network (Part 2/4). In the smallest, cheapest scenario I use just 2 independent switches. In the other scenario I build a 2 node spine and the leaves. In my examples I use DELL network gear. But use whatever works best for your needs and your environment. Just don’t go the “nobody ever got fired for buying XXX” route, that’s fear, not courage! Use cheaper NetGear switches if that fits your needs. Your call, see my recent blog post on this, 10Gbps Cheap & Without Risk In Even The Smallest Environments.

Medium sized excellence

First of all a disclaimer: medium sized isn’t a standardized way of measuring businesses and their IT needs. There will be large differences depending on your neck of the woods.

Build your 10Gbps infrastructure the way you want it and aim it at where it might evolve. Keep it simple and shallow. Go wide where you need to. Use the Spine/Leaf design as a basis, even if what you’re building is smaller than what it’s normally used for. Borrow the concept. All 10Gbps traffic will be moving within that Spine/Leaf setup. Only client/server traffic will be going outside of it and it’s a small part of all traffic. This is how you get VM mobility and great network speeds in the server room while keeping the existing core from becoming a bandwidth bottleneck.

You might even consider doing InfiniBand, where the cost/Gbps is very attractive and which will serve you well for a long time. But it can be a hard sell as it’s “another technology”.

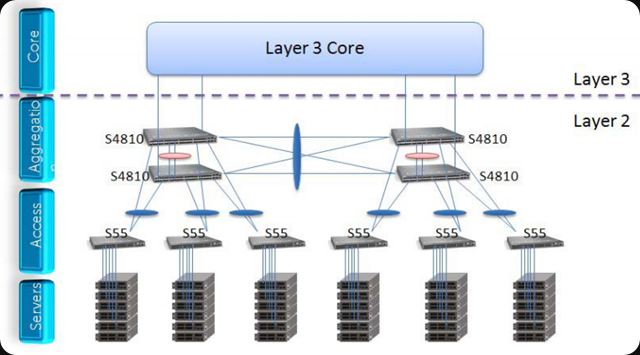

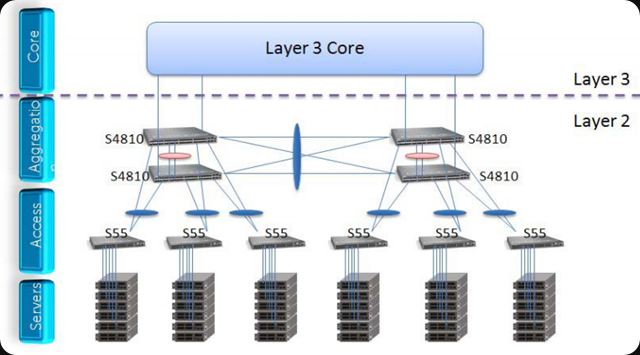

Don’t panic, you don’t need to buy a bunch of Nexus 7000s or Force10 Z9000s to do this in your moderately sized server room. In a medium sized environment I try to follow the “Spine/Leaf” concept even if it’s not true ECMP/CLOS, it’s the principle that counts. For the spine choose the switches that fit your size, environment & growth. I’ve used the Force10 S4810 with great success and you can negotiate hard on the price. The reasons I went for the higher priced Force10 S4810 are:

- It’s the spine so I need best performance in that layer so that’s where I spend my money.

- I wanted VLT, stacking is a big no no here. With VLT I can do firmware upgrades without down time.

- It scales out reasonably by leveraging eVLT if ever needed.

For the ToR switches I normally go with the PowerConnect 81XX F series or the N40XXF series, which is the current model. These provide great value for money and I can negotiate hard on price here while still getting 10Gbps with the features I need. I don’t need VLT as we do switch independent NIC teaming with Windows. That gives me the best scalability with DVMQ & vRSS and allows for firmware upgrades without any network down time in the rack. I do sacrifice true redundant LACP within the rack, but for the few times I might really need that I could go cross rack & still maintain the rack as a failure domain, as the ToRs are redundant. I avoid stacking, it’s a single point of failure during firmware upgrades and I don’t like that. Sure, I could leverage the rack as a failure domain to work around that, but that’s not very practical for ordinary routine maintenance. The N40XXF also gives me the DCB capabilities I need for SMB Direct.

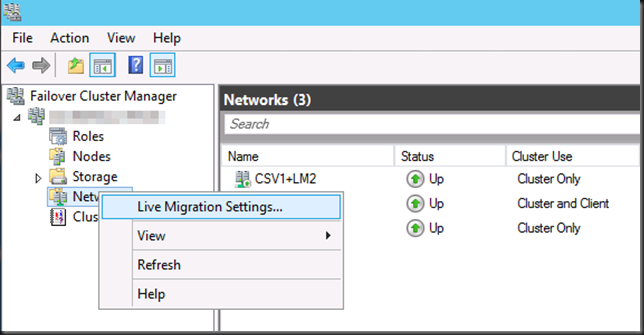

Hook it up to the normal core switch of the existing network, for just the client/server (North/South) traffic. I make sure that any VLANs used for CSV and live migration can’t even reach that part of the network. Even data traffic (between virtual machines, physical servers) goes East-West within your Spine/Leaf and never goes out anyway, unless you did something really weird and bad.

As said, you can scale out VLT using eVLT, which creates a port channel between 2 VLT domains. That’s nice. So as a medium sized business you’re pretty safe for growth. If you grow beyond this, we’ll be talking about a way larger deployment anyway, with true ECMP/CLOS, and that’s not the scale I’m dealing with here. For most medium sized businesses, or small ones with bigger needs, this will do the job. ECMP/CLOS Spine/Leaf actually requires layer 3 in the design and as you might have noticed I kind of avoid that. Again, to get to a good solution today instead of a really good solution next year, which won’t happen because really good is risky and expensive. Words they don’t like to hear above your pay grade.

The picture below is just for illustration of the concept. Basically I normally have only one VLT domain and have two 10Gbps switches per rack. This gives me racks as failure domains and it allows me to forgo a lot of extra structural cabling work to neatly provide connectivity from the switches to the server racks.
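To sanity-check the idea of redundant ToRs and the rack as a failure domain, here is a small Python sketch that models such a fabric as a set of links and verifies that every host can still reach every other host when any single switch is down (for a firmware upgrade, say). The topology is an assumption for illustration: two spine switches, two ToRs per rack that each uplink to both spines, and one teamed NIC port from every host to each ToR in its rack. All names and sizes are hypothetical.

```python
from collections import deque


def build_fabric(racks=2, hosts_per_rack=3, tors_per_rack=2):
    """Return (links, hosts, switches) for a small, hypothetical two-spine fabric."""
    spines = ["spine1", "spine2"]
    links = {frozenset(spines)}  # link between the two spine switches
    hosts, switches = [], list(spines)
    for r in range(1, racks + 1):
        tors = [f"rack{r}-tor{t}" for t in range(1, tors_per_rack + 1)]
        switches += tors
        for tor in tors:
            for spine in spines:  # assumption: every ToR uplinks to both spines
                links.add(frozenset((tor, spine)))
        for h in range(1, hosts_per_rack + 1):
            host = f"rack{r}-host{h}"
            hosts.append(host)
            for tor in tors:  # one teamed NIC port per ToR in the rack
                links.add(frozenset((host, tor)))
    return links, hosts, switches


def reachable(links, start, switches, failed):
    """Nodes reachable from start when only working switches forward traffic."""
    working = set(switches) - set(failed)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node != start and node not in working:
            continue  # other hosts are endpoints, they do not forward
        for link in links:
            if node in link:
                (other,) = link - {node}
                if other not in seen and other not in failed:
                    seen.add(other)
                    queue.append(other)
    return seen


def survives_any_single_switch_failure(links, hosts, switches):
    """True if all hosts stay connected to each other with any one switch down."""
    for failed in switches:
        for host in hosts:
            if not set(hosts) <= reachable(links, host, switches, {failed}):
                return False
    return True


links, hosts, switches = build_fabric()
print(survives_any_single_switch_failure(links, hosts, switches))
```

Calling `build_fabric(tors_per_rack=1)` instead makes the check fail, because a single ToR per rack isolates its hosts whenever that one switch goes down.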

You have a scalable, capable & affordable 10Gbps or better infrastructure that will run any workload in style. After testing you simply start new deployments in the Spine/Leaf and slowly move over existing workloads. If you do all this as part of upgrades, the network renewal itself won’t cause any downtime; the only downtime comes from upgrading or replacing the current workloads.

The layer 3 core in the picture above is the uplink to your existing network and you don’t touch that. Just let it run until there’s nothing left in there and you can clean it up or take it out. Easy transition. The core can be left in place or replaced when needed due to age or capabilities.

To keep things extra affordable

While today the issues with (structural) 10Gbps copper CAT6A and NICs/switches seem solved, when I started doing 10Gbps, fibre cabling or Copper Twinax Direct Attach was the only way to go. 10GBASE-T wasn’t an option yet, and I still love the flexibility of fibre: it consumes less space and weighs less than CAT6A. Fibre also fits easily in existing cable infrastructure. Less hassle. But CAT6A will work fine today, no worries.

If you decide to do fibre, buy OM3. You can get decent, affordable cabling online. Order it as consumable supplies.

Spend some time on the internet and find the SFP+ modules that work with your switches to save a significant amount of money. Yup, some vendor switches work with compatible, non OEM branded SFP+ modules. Order them as consumable supplies, but buy a few first to TEST! Save money but do it smart, don’t be silly.

For patch cabling, 10Gbps Copper Twinax Direct Attach works great for short ranges and isn’t expensive, but the length is limited and the cables get thicker, stiffer and thus more unwieldy as they get longer. It does have its place and I use it where appropriate.

Isn’t this dangerous?

Nope. Technology wise it’s perfectly sound and nothing new. Project wise it delivers results: fast, effective and without breaking the bank. Functionally you now have all the bandwidth you need to stop worrying and micromanaging stuff to work around those pesky bandwidth issues, and you can focus on better ways of doing things. You’ve given yourself options & possibilities. Yay!

You might say the approach to achieve this isn’t very conventional. I disagree. Look, anyone who’s been running projects & delivering results knows the world isn’t that black and white. We’ve been doing 10Gbps this way for 4 years now, with (repeated) great success, while others have to wait for the 1Gbps structural cabling to be replaced some day in the future … probably by 10Gbps copper in a 100Gbps world by the time it happens. You have to get the job done. Do you want results, improvements, progress and success, or just to avoid risk and cover your ass? Well then, choose & just make it happen. Remember, the business demands everything at the speed of light, delivered yesterday, at no cost, with 99.999% uptime. So this approach is what they want, albeit perhaps not what they say.

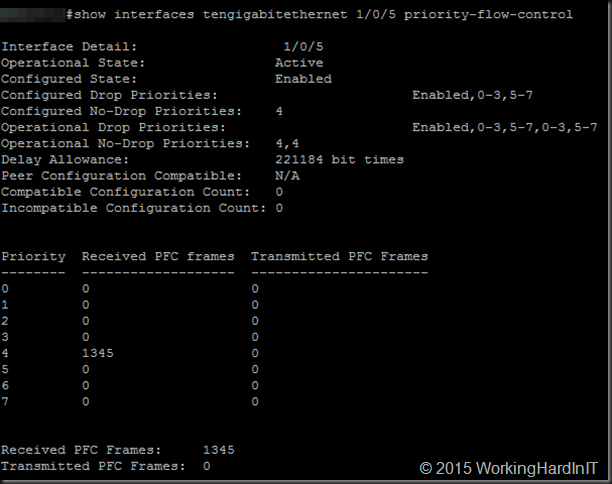

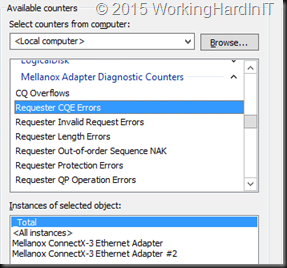

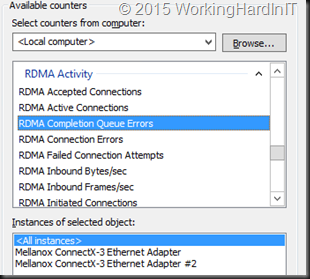

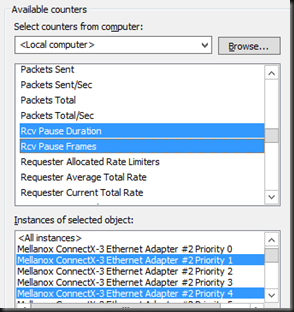

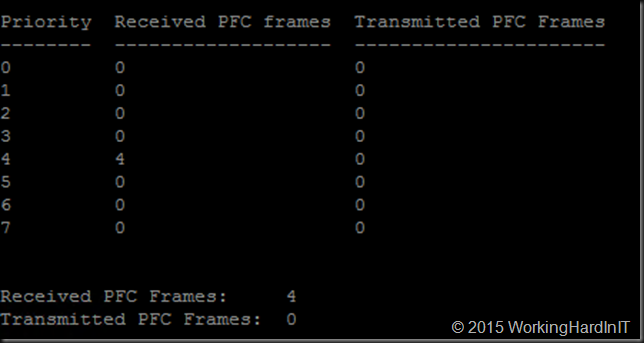

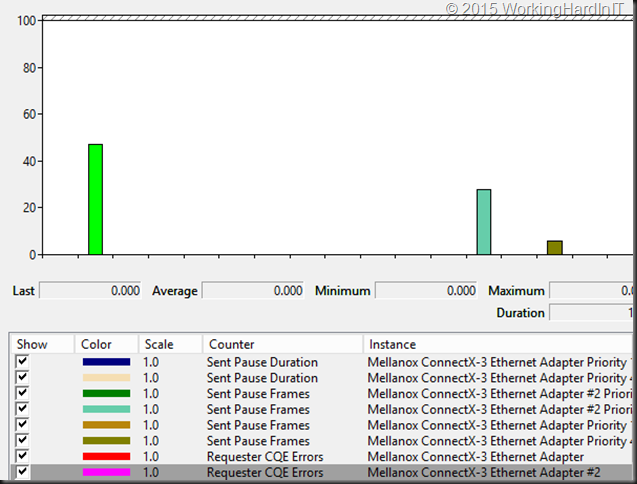

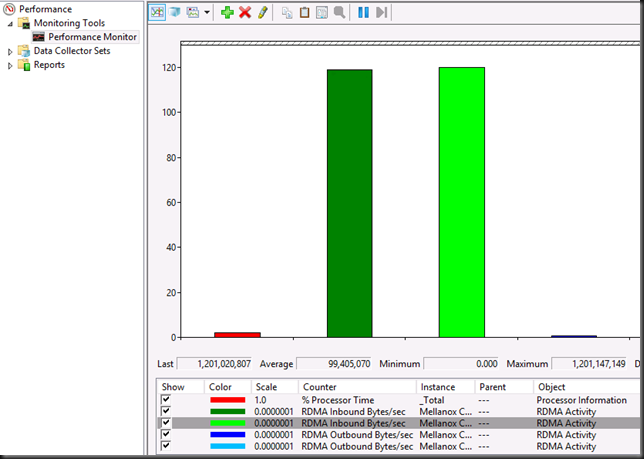
