In this blog post we’ll demo Priority Flow Control. We’re using the demo config as described in SMB Direct over RoCE Demo – Hosts & Switches Configuration Example.

There is also a quick video on Vimeo to illustrate all this. It’s not training-course grade, I know, but my time to put into these is limited.

I’m using Mellanox ConnectX-3 Ethernet cards in a two-node DELL PowerEdge R720 Hyper-V cluster lab. We’ve configured the two ports for SMB Direct and set live migration to leverage them both over SMB Direct. For that purpose we tagged SMB Direct traffic with priority 4 and all other traffic with priority 1. We only made priority 4 lossless, as that’s required for RoCE; the other traffic copes with not being lossless by virtue of being TCP/IP.
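As a minimal sketch of that priority plan, assuming the usual 802.1Qbb/DCBX representation of PFC as an 8-bit enable vector (one bit per 802.1p priority), the snippet below derives the vector from the set of lossless priorities. The function and variable names are hypothetical illustrations, not a Windows or switch API.

```python
def pfc_enable_vector(lossless_priorities):
    """Return the 8-bit PFC enable vector: bit n set means priority n is lossless."""
    vector = 0
    for prio in lossless_priorities:
        if not 0 <= prio <= 7:
            raise ValueError(f"802.1p priority must be 0-7, got {prio}")
        vector |= 1 << prio
    return vector

# Traffic plan from this demo: SMB Direct on priority 4 (lossless),
# everything else on priority 1 (lossy, TCP/IP recovers from drops).
traffic_plan = {"SMB Direct": 4, "Other": 1}
lossless = {traffic_plan["SMB Direct"]}

vector = pfc_enable_vector(lossless)
print(f"PFC enable vector: {vector:08b}")  # expected: 00010000
```

Priority 1 deliberately stays out of the vector, matching the choice to leave non-RoCE traffic lossy.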

Priority Flow Control is about making traffic lossless. Well, some traffic. While we’d love to live by Queen’s lyrics “I want it all, I want it all and I want it now”, we are limited, if not by our budgets, then most certainly by the laws of physics. To make sure we all understand what PFC does, here’s a quick reminder: it tells the sending party to stop sending packets of a given priority (in our case SMB Direct traffic), i.e. to pause a moment, so we can handle the traffic without dropping packets. RoCE is, for all practical purposes, InfiniBand over Ethernet and not TCP/IP, so you don’t have the benefit of a protocol that deals with dropped packets, retransmission, … meaning the fabric has to be lossless*. So no, it DOES NOT tell non-priority traffic to slow down or stop. If you need to tell other traffic to take a hike, you’re in ETS country 🙂

* If any switch vendor tells you not to bother with DCB and to just build (read: buy their switches = $$$$$) a lossless fabric (does that exist?) and rely on the brute-force quality of their products for a lossless experience … that could be an interesting experiment.
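To put “pause a moment” into numbers: a PFC frame carries a per-priority pause time expressed in quanta, where one quantum is 512 bit times and the field is 16 bits wide. The sketch below converts quanta to wall-clock time for a given link speed; the function name is ours, and the 10 Gbps figure is just an assumption matching the lab NICs.

```python
BITS_PER_QUANTUM = 512  # one pause quantum = 512 bit times

def pause_seconds(quanta, link_bps):
    """How long a paused priority stays paused for a given number of quanta."""
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("pause time is a 16-bit field (0-65535 quanta)")
    return quanta * BITS_PER_QUANTUM / link_bps

ten_gig = 10e9  # assumed link speed in bits per second
print(f"1 quantum at 10 Gbps: {pause_seconds(1, ten_gig) * 1e9:.1f} ns")
print(f"max pause at 10 Gbps: {pause_seconds(0xFFFF, ten_gig) * 1e3:.3f} ms")
```

Even the maximum pause is only a few milliseconds at 10 Gbps, and only the priorities flagged in the frame are paused; traffic on other priorities keeps flowing.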


Note: To even be able to start SMB Direct, SMB Multichannel must be enabled, as this is the mechanism used to identify RDMA capabilities, after which an RDMA connection is attempted. If this fails, you’ll fall back to SMB Multichannel over TCP/IP, so you will still have network connectivity.
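The decision flow in that note can be summarised as a simplified model. This is an illustration under our own assumptions (the function and its boolean inputs are hypothetical), not how the SMB client is actually implemented.

```python
def smb_transport(multichannel_enabled, client_rdma, server_rdma, rdma_connect_ok):
    """Simplified model of how an SMB connection ends up on RDMA or TCP/IP."""
    if not multichannel_enabled:
        # Without Multichannel, RDMA capabilities are never discovered.
        return "TCP/IP"
    if client_rdma and server_rdma:
        # Both ends advertise RDMA, so an RDMA connection is attempted.
        if rdma_connect_ok:
            return "RDMA (SMB Direct)"
        # The RDMA attempt failed: fall back to TCP/IP, connectivity remains.
        return "TCP/IP (fallback)"
    return "TCP/IP"

print(smb_transport(True, True, True, rdma_connect_ok=False))
```

The fallback branch is why “it works” is not proof that RDMA works: you still need the counters and event logs to confirm it.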

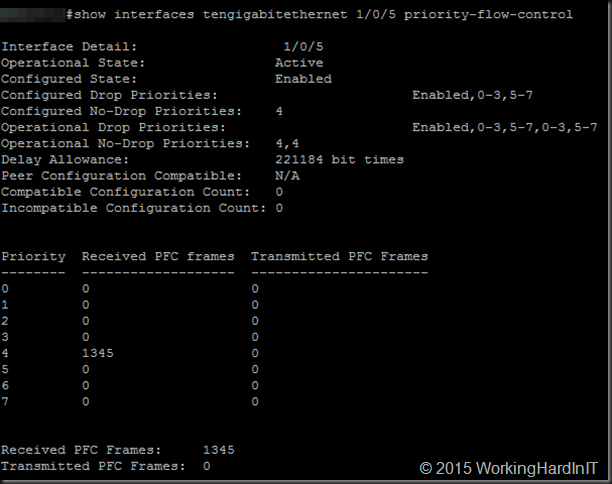

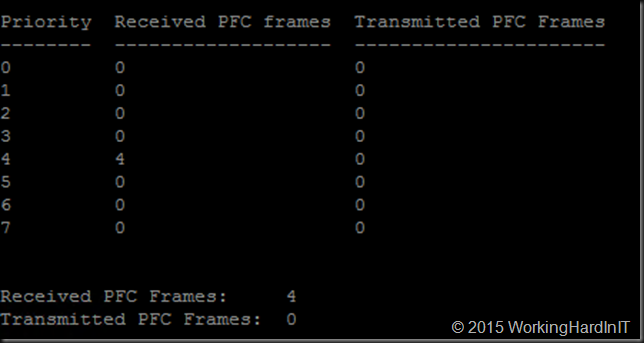

You want RDMA to work and be lossless. To visualize this we can turn to the switch, where we leverage the counter statistics to see PFC frames being sent or received. Below is a lab example from a DELL PowerConnect 8100/N4000 series switch.

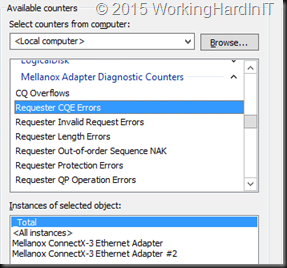

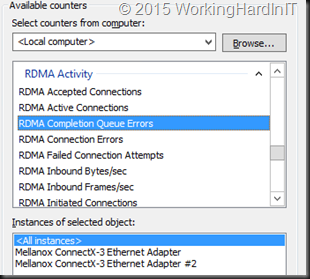

To verify that RDMA is working as it should, we also leverage the Mellanox Adapter Diagnostic and the native Windows RDMA Activity counters. First of all, make sure RDMA is working properly: basically you want the error counters to be zero and stay that way.

On the Mellanox side, these must remain at zero (or not climb after you got it right):

- Responder CQE Errors

- Responder Duplicate Request Received

- Responder Out-Of-Order Sequence Received

- … there are lots of them …

On the Windows RDMA Activity side, these should be zero (or not climb after you got it right):

- RDMA Completion Queue Errors

- RDMA Connection Errors

- RDMA Failed Connection Attempts
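Because the rule is “zero, or not climbing after you got it right”, what matters is the difference between two readings. A minimal sketch, assuming you have already collected the counter values (for example from Performance Monitor) into dictionaries; the snapshot format and the values are hypothetical examples.

```python
# Counter names as listed above; snapshot values below are made-up examples.
WATCHED = [
    "Responder CQE Errors",
    "Responder Duplicate Request Received",
    "Responder Out-Of-Order Sequence Received",
    "RDMA Completion Queue Errors",
    "RDMA Connection Errors",
    "RDMA Failed Connection Attempts",
]

def climbing_errors(before, after, watched=WATCHED):
    """Return {counter: increase} for watched counters that went up between snapshots."""
    return {
        name: after[name] - before[name]
        for name in watched
        if name in before and name in after and after[name] > before[name]
    }

before = {name: 0 for name in WATCHED}
after = dict(before, **{"RDMA Connection Errors": 3})
print(climbing_errors(before, after))  # {'RDMA Connection Errors': 3}
```

An empty result over a busy test window is what you are hoping for.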

The event logs are also your friend, as problems will log entries worth looking out for. PowerShell helps here (adapt the severity levels to your needs!):

Get-WinEvent -ListLog "*SMB*" | Get-WinEvent -ErrorAction SilentlyContinue | Where-Object { $_.Level -lt 4 -and $_.Message -like "*RDMA*" } | Format-List LogName, Id, TimeCreated, Level, Message
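That filter keeps events whose level is below 4 (Windows uses 1 Critical, 2 Error, 3 Warning, 4 Information, 5 Verbose) and whose message mentions RDMA, case-insensitively, as PowerShell’s `-like` is. The same logic, sketched in Python over hypothetical event records, shows what “adapt severity levels” changes.

```python
# Windows event levels: 1 Critical, 2 Error, 3 Warning, 4 Information, 5 Verbose.
LEVEL_NAMES = {1: "Critical", 2: "Error", 3: "Warning", 4: "Information", 5: "Verbose"}

def rdma_problems(events, max_level_exclusive=4):
    """Mirror of the filter: Level -lt 4 and Message -like '*RDMA*' (case-insensitive)."""
    return [
        e for e in events
        if e["Level"] < max_level_exclusive and "rdma" in e["Message"].lower()
    ]

# Hypothetical event records, not real log output.
sample = [
    {"Level": 2, "Message": "The network connection failed. Connection type: Rdma"},
    {"Level": 4, "Message": "RDMA interfaces are available"},
    {"Level": 3, "Message": "Some unrelated TCP warning"},
]

for e in rdma_problems(sample):
    print(LEVEL_NAMES.get(e["Level"], e["Level"]), "-", e["Message"])
```

Raising the threshold to 5 also pulls in Information events, which is noisier but sometimes useful while troubleshooting.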

Entries like these are clear enough: it ain’t working!

The network connection failed.

Error: The I/O request was canceled.

Connection type: Rdma

Guidance:

This indicates a problem with the underlying network or transport, such as with TCP/IP, and not with SMB. A firewall that blocks port 445 or 5445 can also cause this issue.

RDMA interfaces are available but the client failed to connect to the server over RDMA transport.

Guidance:

Both client and server have RDMA (SMB Direct) adaptors but there was a problem with the connection and the client had to fall back to using TCP/IP SMB (non-RDMA).

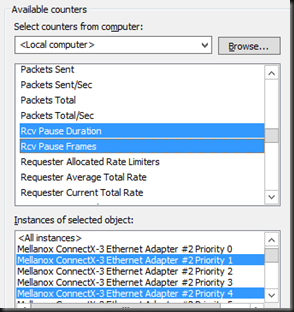

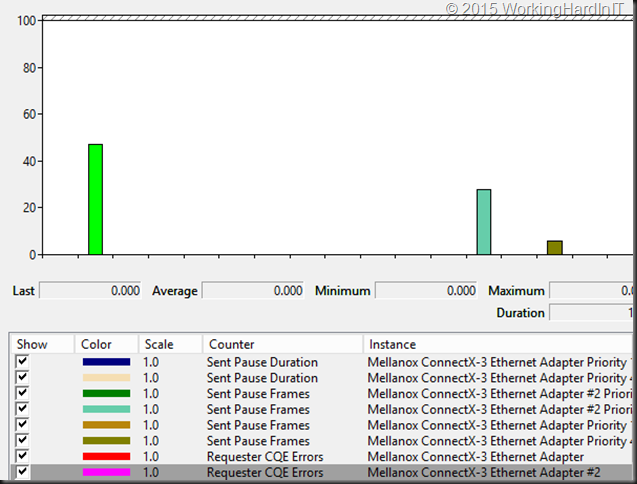

To view PFC in action in Windows, we rely on the Mellanox Adapter QoS Counters, which show the number of pause frames being sent and received on each port.

An important note when trying to make sense of it all: pause frames are sent and received hop to hop. So if you see a pause frame being sent on a server NIC port, you should see it being received on the switch port, not on the Windows host you are live migrating from. The 4 pause frames sent in the screenshot above are received by the switch port, as you can see from the PFC Stats for that port.
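A toy model makes the hop-to-hop point explicit. The port names below are hypothetical; the only rule encoded is that a pause frame is consumed by the directly attached peer and is not forwarded onward to the remote host.

```python
# Hypothetical lab topology: each link joins exactly two ports.
LINKS = [
    ("HostA-NIC1", "Switch-Port1"),
    ("HostB-NIC1", "Switch-Port2"),
]

def pause_frame_receiver(sender_port, links=LINKS):
    """PFC is hop-by-hop: the frame lands on the port at the other end of the sender's link."""
    for a, b in links:
        if sender_port == a:
            return b
        if sender_port == b:
            return a
    raise KeyError(f"{sender_port} is not on any link")

print(pause_frame_receiver("HostA-NIC1"))  # Switch-Port1
```

So when comparing counters, pair each NIC port with its own switch port rather than with the other host.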

People, if you don’t see errors in the error counters and event viewer, that’s good. If you see the PFC pause frame counters move up a bit, that’s (unless excessive) also good and normal: that’s PFC doing its job, making sure the traffic is lossless. If they are zero and stay zero forever, you did not buy a lossless fabric that doesn’t need DCB; it’s more likely your DCB/PFC is not working and you do not have a lossless fabric at all. The counters are cumulative over time, so they don’t reset to zero unless you reset the NIC or reboot.
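Since the counters are cumulative, compare readings taken before and after a test run. The sketch below encodes the rules of thumb from the paragraph above; the function names are hypothetical, and what counts as an “excessive” number of pause frames is a judgment call it deliberately does not encode.

```python
def delta(before, after):
    """Counters only reset on a NIC reset or reboot, so compare two readings."""
    return after - before

def assess(error_delta, pause_delta, under_heavy_load):
    """Rough reading of counter deltas taken over the same test window."""
    if error_delta > 0:
        return "errors climbing: RDMA has a problem"
    if pause_delta > 0:
        return "healthy: PFC is doing its job"
    if under_heavy_load:
        return "suspicious: no pause frames under load, check DCB/PFC"
    return "inconclusive: generate more traffic"

print(assess(delta(0, 0), delta(1200, 1216), under_heavy_load=True))
```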


When testing, feel free to generate lots of traffic all over the place on the involved ports and switches; this helps with seeing all this in action and verifying that RDMA/PFC works as it should. I like to use ntttcp.exe to generate traffic; the most recent version will let you really put a load on 10 Gbps and faster NICs. Hammer that network as hard as you can.


Again, there’s a simple video on Vimeo to illustrate this.

Hi there. I have set up a PFC network with Mellanox SX1012 switches and ConnectX-3 cards, and I’m getting (what appears to be) strange results.

If I copy/paste a 50GB file within a Hyper-V VM to itself, so just using the SMB network for traffic, I get literally hundreds of send pause frames on my SOFS server and hundreds of receive pause frames on my Hyper-V server. If I do a few at the same time, then I actually get other VMs on the host rebooting or going into critical states as they lose connectivity to the SOFS.

Any clues on where to start? I assume my PFC must be set up right, or I wouldn’t be getting any pause frames at all. The SOFS and Hyper-V servers have dedicated 10Gbit ConnectX-3 ports for just SMB/RDMA/RoCE traffic – no other traffic goes down the NICs.

Cheers,

Andy

Just to add to that – I also have bagillions of CQE errors. Not really sure what the heck is going on 🙁

I’m getting “Responder CQE Errors” when SMB sessions close. This blog says the counter should remain zero or not increment if things are configured correctly. I also read this though:

Note: “Responder CQE Error” counter may rise at the end of an SMB Direct tear-down session. Details: RDMA receivers need to post receive WQEs to handle incoming messages; if the application does not know how many messages are expected to be received (e.g. by maintaining high-level message credits), it may post more receive WQEs than will actually be used. On application tear-down, if the application did not use up all of its receive WQEs, the device will issue completion with error for these WQEs to indicate HW does not plan to use them; this is done with a clear syndrome indication of “Flushed with error”.
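A back-of-the-envelope model of that explanation: whatever receive WQEs were posted but never consumed are completed with “Flushed with error” at tear-down, which could show up as Responder CQE Errors. The function and numbers are hypothetical; real WQE counts depend on the SMB Direct credit scheme.

```python
def flushed_on_teardown(posted_receive_wqes, consumed_receive_wqes):
    """Receive WQEs still outstanding at tear-down complete with 'Flushed with error'."""
    if consumed_receive_wqes > posted_receive_wqes:
        raise ValueError("cannot consume more receive WQEs than were posted")
    return posted_receive_wqes - consumed_receive_wqes

# Hypothetical session: 16 receive WQEs posted, 10 used before tear-down.
print(flushed_on_teardown(16, 10))
```

This is why a bump in that one counter when sessions close is not, by itself, a sign of a broken fabric.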

From

So I don’t know whether it is OK to receive Responder CQE Errors or not.

Yes, under certain heavy-use conditions (live migrations, storage traffic, …) it is, with the Mellanox counters, and only the responder ones actually. I’ve written a little article on that recently that will be published soon, and it’s also mentioned in my presentation on the subject. The Windows RDMA Activity counters, however, don’t show this unless you have real issues.

Thanks for the response! Looking forward to reading the article!