The cluster database

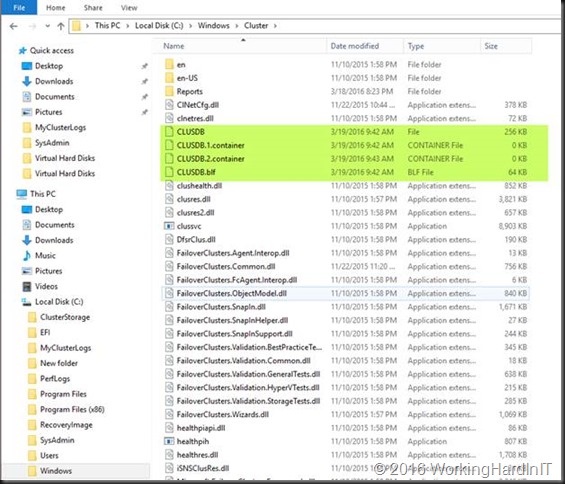

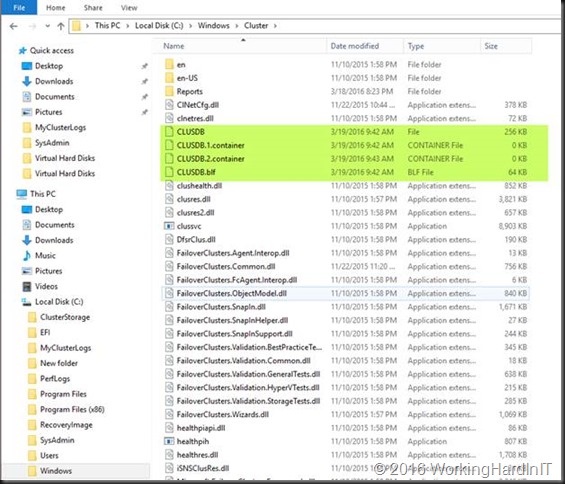

In a Windows Server Cluster the cluster database is where the cluster configuration gets stored. It’s a file called CLUSDB with some assisting files (CLUSDB.1.container, CLUSDB.2.container, CLUSDB.blf) and you’ll find those in C:\Windows\Cluster (%systemroot%\Cluster).

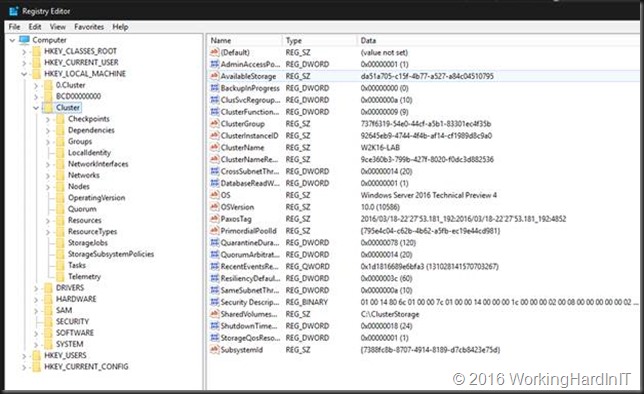

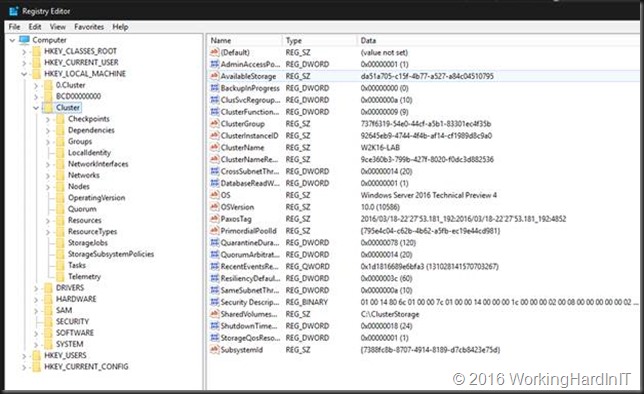

But the cluster database also lives in a registry hive that gets loaded when the cluster service gets started. You’ll find under HKEY_LOCAL_MACHINE and it’s called Cluster. You might also find a 0.Cluster hive on one of the nodes of the cluster.

The 0.Cluster hive gets loaded on a node that is the owner of the disk witness. So if you have a cloud share or a file share witness this will not be found on any cluster node. Needless to say if there is no witness at all it won’t be found either.

On a lab cluster you can shut down the cluster service and see that the registry hive or hives go away. When you restart the cluster service the Cluster hive will reappear. 0.Cluster won’t as some other node is now owner of the disk witness and even when restarting the cluster service gets a vote back for the witness the 0.Cluster hive will be on that owner node.

If you don’t close the Cluster or 0.Cluster registry hive and navigate to another key when you test this you’ll get an error message thrown that the key cannot be opened. It won’t prevent the cluster service from being stopped but you’ll see an error as the key has gone. If you navigate away, refresh (F5) you’ll see they have indeed gone.

So far the introduction about the Cluster and 0.Cluster Registry Hives.

How is the cluster database kept in sync and consistent?

Good so now we know the registry lives in multiple places and gets replicated between nodes. That replication is paramount to a healthy cluster and it should not be messed with. You can see an DWORD value under the Cluster Key called PaxosTag (see https://support.microsoft.com/en-us/kb/947713 for more information). That’s here the version number lives that keep track of any changes and which is important in maintaining the cluster DB consistency between the nodes and the disk witness – if present – as it’s responsible for replicating changes.

You might know that certain operations require all the nodes to be on line and some do not. When it’s require you can be pretty sure it’s a change that’s paramount to the health of the cluster.

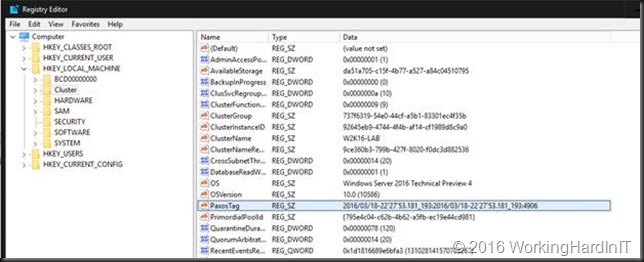

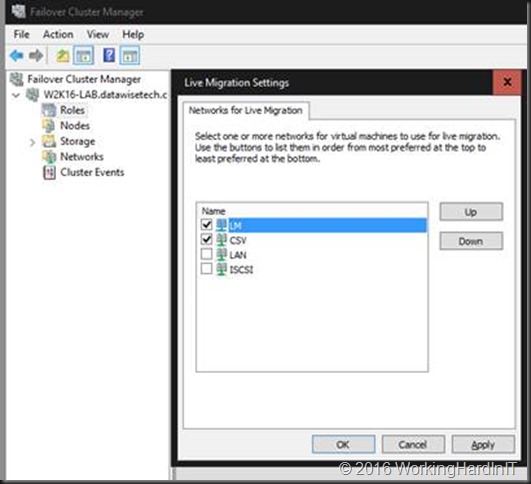

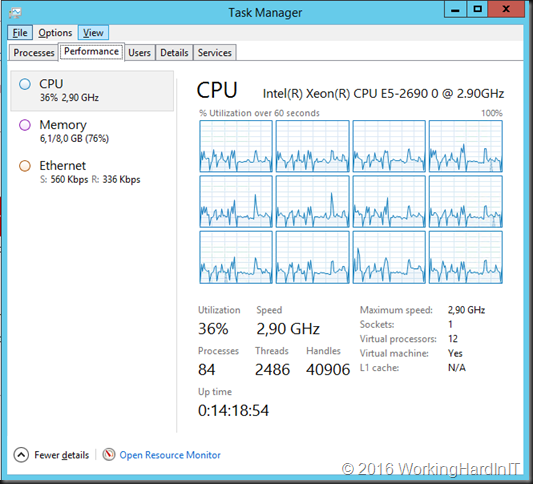

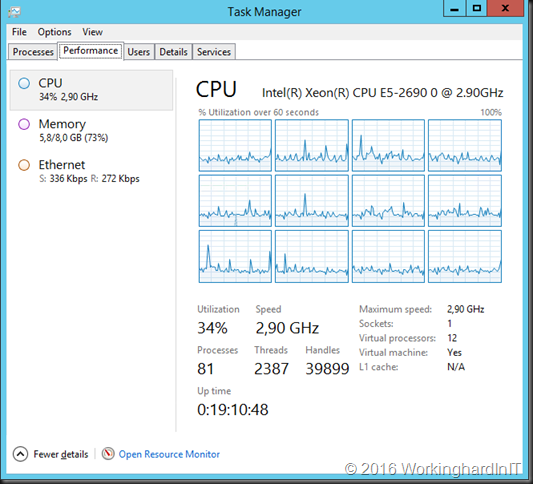

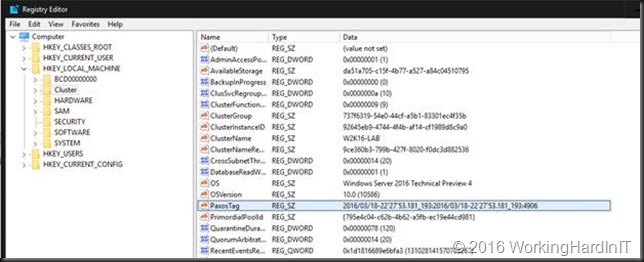

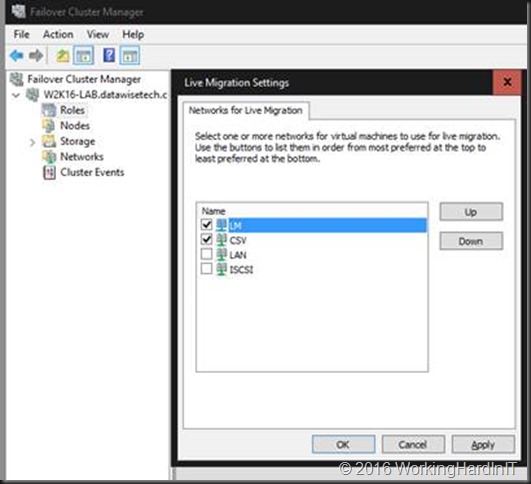

To demonstrate the PaxosTag edit the Cluster Networks Live Migration settings by enabling or disable some networks.

Hit F5 on the registry Cluster/0.cluster Hive and notice the tag has increased. That will be the case on all nodes!

As said when you have a disk witness the owner node of the witness disk also has 0.Cluster hive which gets loaded from the copy of the cluster DB that resides on the cluster witness disk.

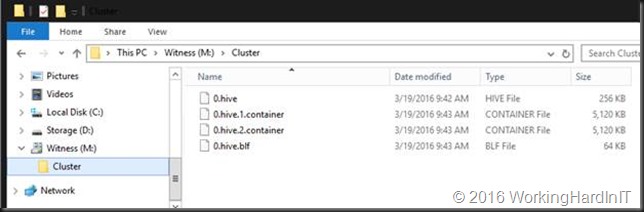

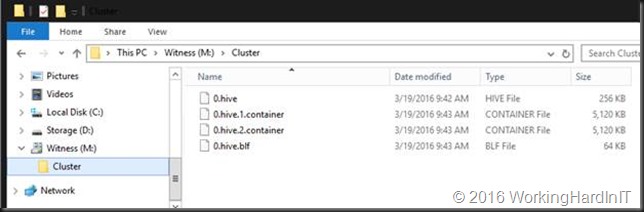

As you can see you find 0.hive for the CLUSTERDB and the equivalent supporting files (.container, .blf) like you see under C:\Windows\Cluster on the cluster disk un the Cluster folder. Note that there is no reason to have a drive letter assigned to the witness disk. You don’t need to go there and I only did so to easily show you the content.

Is there a functional difference between a disk witness and a file share or cloud witness?

Yes, a small one you’ll notice under certain conditions. Remember a file share of cloud witness does not hold a copy of the registry database. That also means there’s so no 0.Cluster hive to be found in the registry of the owner node. In the case of a file share you’ll find a folder with a GUID for its name and some files and with a cloud witness you see a file with the GUID of the ClusterInstanceID for its name in the storage blob. It’s bit differently organized but the functionality of these two is exactly the same. This information is used to determine what node holds the latest change and in combination with the PaxosTag what should be replicated.

The reason I mention this difference is that the disk witness copy of the Cluster DB is important because it gives a disk witness a small edge over the other witness types under certain scenarios.

Before Windows 2008 there was no witness disk but a “quorum drive”. It always had the latest copy of the database. It acted as the master copy and was the source for replicating any changes to all nodes to keep them up to date. When a cluster is shut down and has to come up, the first node would download the copy from the quorum drive and then the cluster is formed. The reliance on that quorum copy was a single point of failure actually. So that’s has changed. The PaxosTag is paramount here. All nodes and the disk witness hold an up to date copy, which would mean the PaxosTag is the same everywhere. Any change as you just tested above updates that PaxosTag on the node you’re working on and is replicated to every other node and to the disk witness.

So now when a cluster is brought up the first node you start compares it’s PaxosTag with the one on the disk witness. The higher one (more recent one) “wins” and that copy is used. So either the local clusterDB is used and updates the version on the disk witness or vice versa. No more single point of failure!

There’s a great article on this subject called Failover Cluster Node Startup Order in Windows Server 2012 R2. When you read this you’ll notice that the disk witness has an advantage in some scenarios when it comes to the capability to keep a cluster running and started. With a file share or cloud witness you might have to use -forcequorum to get the cluster up if the last node to be shut down can’t be started the first. Sure these are perhaps less common or “edge” scenarios but still. There’s a very good reason why the dynamic vote and dynamic witness have been introduced and it makes the cluster a lot more resilient. A disk witness can go just a little further under certain conditions. But as it’s not suited for all scenarios (stretched cluster) we have the other options.

Heed my warnings!

The cluster DB resides in multiple places on each node in both files and in the registry. It is an extremely bad idea to mess round in the Cluster and 0.Cluster registry hives to clean out “cluster objects”. You’re not touching the CLUSDB file that way or the PaxosTag used for replicating changes and things go bad rather quickly. It’s a bad situation to be in and for a VM you tried to remove that way you might see:

- You cannot live or quick migrate that VM. You cannot start that VM. You cannot remove that VM from the cluster. It’s a phantom.

- Even worse, you cannot add a node to the cluster anymore.

- To make it totally scary, a server restart ends up with a node where the cluster service won’t start and you’ve just lost a node that you have to evict from the cluster.

I have luckily only seen a few situations where people had registry corruption or “cleaned out” the registry of cluster objects they wanted to get rid of. This is a nightmare scenario and it’s hard, if even possible at all, to recover from without backups. So whatever pickle you get into, cleaning out objects in the Cluster and/or 0.Cluster registry hive is NOT a good idea and will only get you into more trouble.

Heed the warnings in the aging but still very relevant TechNet blog Deleting a Cluster resource? Do it the supported way!

I have been in very few situations where I managed to get out of such a mess this but it’s a tedious nightmare and it only worked because I had some information that I really needed to fix it. Once I succeeded with almost no down time, which was pure luck. The other time cluster was brought down, the cluster service on multiple nodes didn’t even start anymore and it was a restore of the cluster registry hives that saved the day. Without a system state backup of the cluster node you’re out of luck and you have to destroy that cluster and recreate it. Not exactly a great moment for high availability.

If you decide to do muck around in the registry anyway and you ask me for help I’ll only do so if it pays 2000 € per hour, without any promise or guarantee of results and where I bill a minimum of 24 hours. Just to make sure you never ever do that again.