Introduction

When you perform a cluster OS rolling upgrade of Windows Server 2012 R2 cluster to a Windows Server 2016 Cluster you’ll have two options.

1. You evict the nodes, one after the other, perform a clean OS install and join them to the existing cluster.

2. You do an in-place OS upgrade of the nodes (no need to evict the nodes, you can if you want to). I tested this and blogged about it in In Place upgrades of cluster nodes to Windows Server 2016

Both of these give you the benefits that you can keep your workloads (Hyper-V, SOFS, SQL Server) running and you don’t have to create a new cluster to do so. The moment you have Windows Server 2016 Nodes added to an existing Windows Server 2012 R2 cluster you are running in Mixed mode. Until all your nodes have been upgraded to Windows Server 2016 will remain running in mixed mode.

When there are only Windows Server 2016 nodes you can decide to also upgrade the cluster functional level. This enables all the new capabilities in Windows Server 2016 Failover Clustering and also means you cannot go back to a Windows 2012 R2 cluster anymore. So, only take this step after a final validation of all drivers and firmware to make sure you don’t need to go back and you’re ready to fully commit to a fully functional Windows Server 2016 Failover Cluster.

A cluster operating system rolling upgrade does leave some traces, but that’s OK. Let’s take a look.

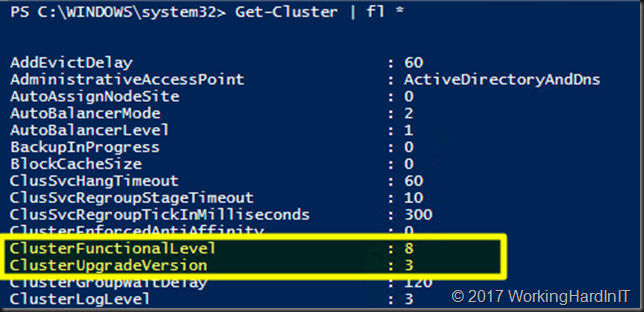

This is what a get-cluster against Windows Server 2016 that was upgraded from Windows Server 2012 R2 looks like.

As you can see the cluster functional level is 8 and not 9 yet. This means that we have not yet run the Update-ClusterFunctionalLevel command on this cluster yet. Which still allows us to roll back all the way to a cluster running only Windows 2012 R2 nodes. The ClusterUpgradeVersion has a value of 3.

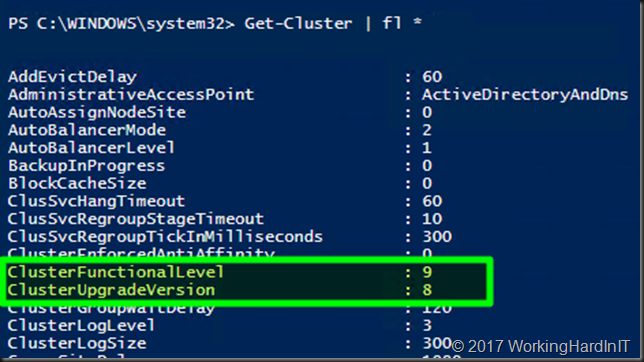

We now execute the Update-ClusterFunctionalLevel command and take a look at Get-Cluster again.

As you can see we are now at cluster functional level 9 which enables all the capabilities offered by Windows Server 2016 Failover Clustering. The cluster Upgrade version is 8. That’s the previous cluster functional level we were at before we executed Update-ClusterFunctionalLevel.

Note that both properties ClusterFunctionalLevel and ClusterUpgradeVersion are only available with Windows Server 2016. You will not find it on a Windows Server 2012 R2 or lower cluster. If you run this command from Windows Server 2016 against a Windows Server 2012 R2 cluster both properties will be empty. If you run it on a Windows Server 2012 R2 host against Windows Server 2012 R2 or lower and even a Windows Server 2016 cluster these properties are not even there. The commandlet is older on those OS versions and didn’t know about these properties yet.

What about if you create a brand-new cluster, perhaps even on freshly installed windows Server 2016 Nodes? What does ClusterUpgradeVersion have as a value then? Well it’s also 8. In the end, there is no difference between an in-place upgrade Windows Server 2016 cluster and a cleanly created one. So where are those traces?

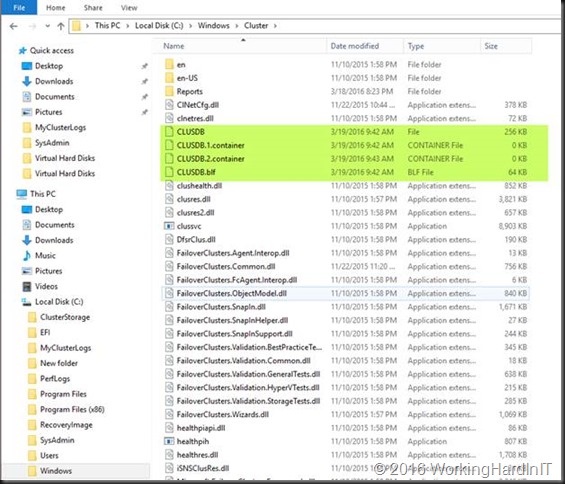

Cluster Operating System Rolling Upgrade Leaves Traces

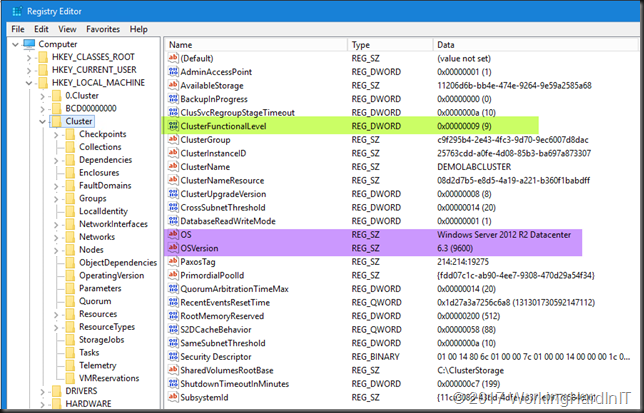

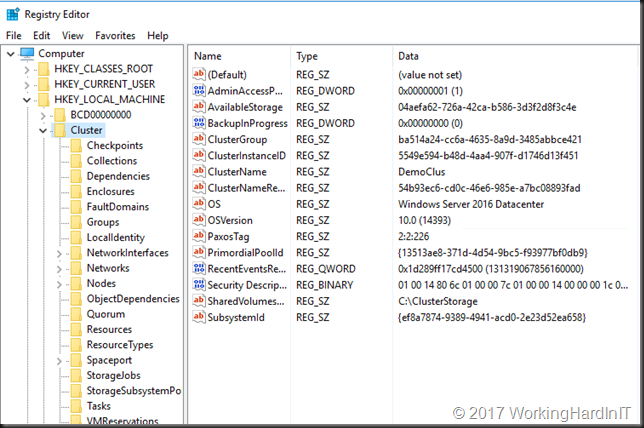

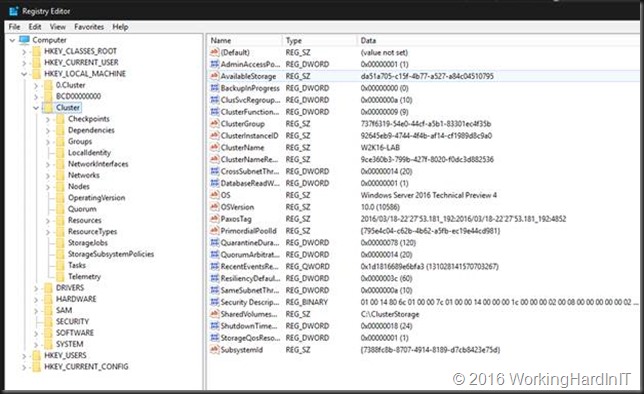

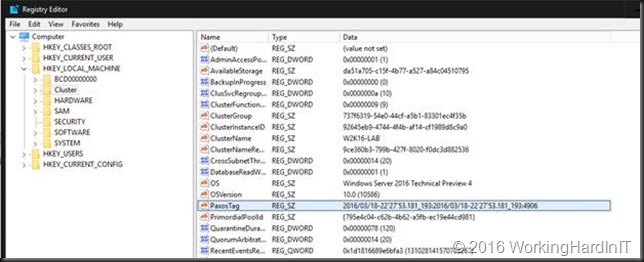

What gives a rolling upgrade away is that in the registry, under the HKLM\Cluster the OS and OSVersion values are not updated (purple in the picture below). This is a benign artifact and I don’t know if this if on purpose or not. I have changed them to Windows Server 2016 Datacenter as an experiment and I have not found any issues by doing so. Now, please don’t take this as recommendation to do so. The smartest and safest thing is to leave it alone. These are not used, so don’t worry about them.

But even if you would change those values a cluster resulting from of a cluster operating system rolling upgrade still has other ways of telling it was not born as a Window Server 2016 Cluster.

Under HKLM\Cluster (and Cluster.0) you’ll find the value CusterFunctionaLevel that does not exist on a cleanly installed Windows Server 2016 Cluster (green in the picture above). As you can see this is a Window Server 2016 cluster running at functional level 9.

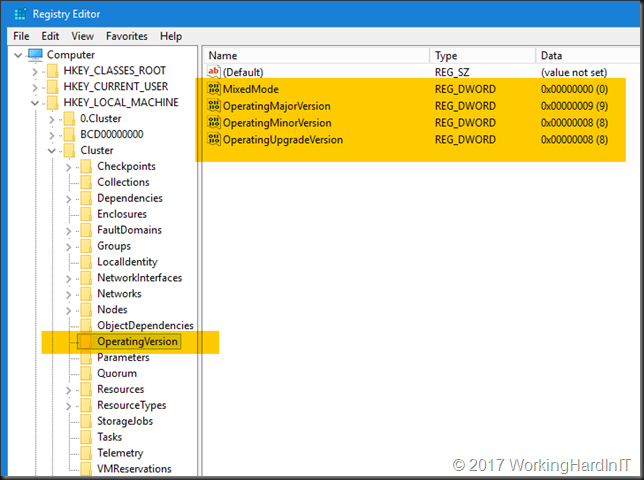

There is even an extra key OperatingVersion under HKLM\Cluster that you will not find on a cleanly installed cluster either. It also has a Mixed Mode value under that key which indicates whether the cluster is still running in mixed mode or not.

Here is a screenshot of newly installed/created Windows Server 2016 cluster. No ClusterFunctionalLevel value, the OS and OSVersion Values are correct and there is no OperatingVersion key to be found.

What if you don’t like traces?

First of all, these traces are harmless. One thing you can do if you want to weed out all traces of a rolling upgrade (as far as the cluster is concerned) is to destroy the cluster and create one with the same CNO (and IP address if that was a fixed one). This might all be a bit more involved when it comes to CSV naming and other existing resources but then these remnants will be gone in a supported way. Now this does defeat one of the main purposes of this feature: no down time. The operating system itself might also contain traces if you did in-place OS upgrades but the cluster will not. Just adapting OS/OSVersion, ClusterFunctionalLevel and deleting the key OperatingVersion from HKLM\Cluster (and HKLM\Cluster.0) are not supported actions and messing around in the cluster registry keys can lead to problems, so don’t! The advice is to just leave it all alone. Microsoft developed cluster operating system rolling upgrade the way they did for a reason and by leaving things as Microsoft has set or left them will make sure you are always in a fully supported condition. So, use it if it fits the circumstances & you comply with all the prerequisites. Look at these traces a flag of honor, not a smudge on your shining armor. When I see these artifacts, I see people who have used this feature to their own benefits. Well done I say.

Learn more about the Cluster OS Rolling Upgrade process

Next to my blogs like First experiences with a rolling cluster upgrade of a lab Hyper-V Cluster (Technical Preview) and In Place upgrades of cluster nodes to Windows Server 2016 there are many resources out there by fellow blogger and Microsoft. A great video on the subject is Introducing Cluster OS Rolling Upgrades in Windows Server 2016 with Rob Hindman, who actually works on this feature and knows it inside out.

An important thing to keep in mind is that this can be automated using PowerShell or by leveraging SCVMM for orchestration for example. 3rd party tools could also support this and help you automate this process in order to scale it when needed.

Finally, the official documentation can be found here Cluster operating system rolling upgrade