IPsec has been around for a while now. In an ever more security-conscious and regulated world you want to, and/or are required to, protect your network communication by authenticating and encrypting the contents of at least some of your network traffic. Think about SOX and HIPAA and you’ll see that trade or government security requirements are not going anywhere but up for us all. This is not restricted to military or intelligence organizations.

We’ve had the ability to offload IPsec traffic to the NIC for a while now. This is great, as IPsec processing is a very CPU-intensive workload. Unfortunately it didn’t work for virtual machines. Until now, with Windows Server 2008 R2, IPsec offload was only available to host/parent workloads. Virtualizing high-volume network workloads that require encryption therefore meant a serious hit on the host’s resources. If you’re willing to pay, you might get by by throwing extra hosts and CPU power at the issue. But what if the load means a single virtual machine with 4 vCPUs can’t hack it? Game over. Sure, Windows Server 2012 Hyper-V allows for 32 vCPUs now, but burning vCPUs on encryption is very costly, so that is not a very cost-effective solution. In some cases this led to those workloads being marked as “unsuited for virtualization”.

But with Windows Server 2012 Hyper-V we get a very welcome improvement: a virtual machine can now also offload IPsec processing to the physical NIC on the host. That frees up a lot of CPU cycles for application-level work, resulting in better virtualization densities, which means lower costs, etc.

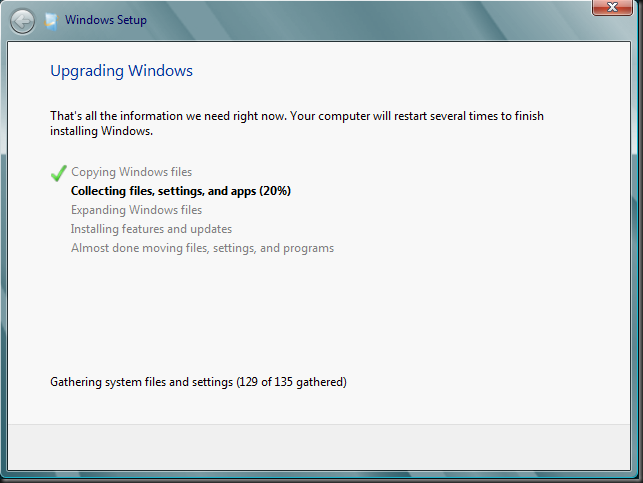

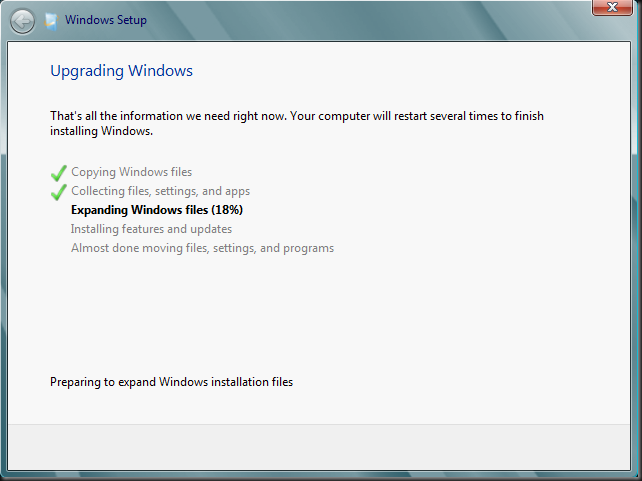

Let’s take a look at where you can set this in the Hyper-V GUI. You’ll find it in the virtual machine settings under Network Adapter / Hardware Acceleration.

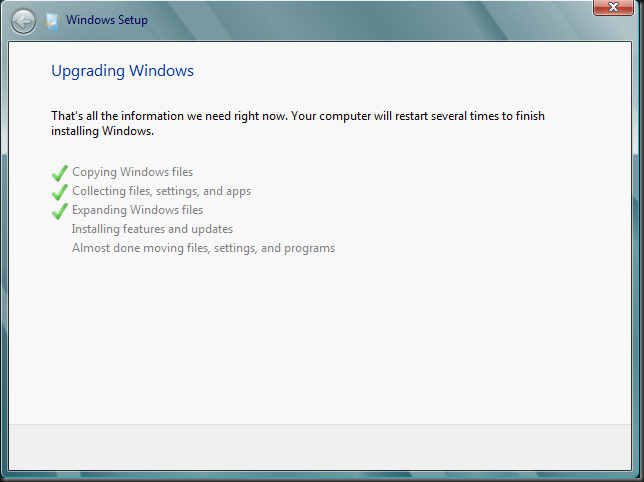

IPsec offload is also managed by the Hyper-V switch, which controls whether the offloading will be active or not. This prevents IPsec offload from stopping services when insufficient offload resources are available. Please do note that when IPsec is required in the guest it will be done anyway, in the guest itself, creating an extra CPU burden. So this does not disable IPsec, just the offloading of it. On top of this, and in the gravest extreme, you can use virtual machine prioritization to guarantee that IPsec servers get the resources they need, sacrificing less important guests if needed. The fact that you can configure the number of security associations helps balance the needs of multiple virtual machines requiring IPsec offload.

To conclude, this wouldn’t be Windows Server 2012 if you couldn’t do all this with PowerShell. Take a look at Set-VMNetworkAdapter and notice the following parameter:

-IPsecOffloadMaximumSecurityAssociation <UInt32>

This specifies the maximum number of security associations that can be offloaded to the physical network adapter that is bound to the virtual switch and that supports IPsec Task Offload. The thing to notice here is that specifying a value of zero disables the IPsec offload feature.
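
As a minimal sketch of how that parameter could be used, the snippet below sets the limit for one virtual machine, then disables offload again with a zero value. The VM name `IPsecVM01` and the value 512 are assumptions chosen purely for illustration, so substitute your own. It needs to run in an elevated session on the Hyper-V host.

```powershell
# Hypothetical VM name, for illustration only; replace with one of your own guests.
$vmName = 'IPsecVM01'

# Allow up to 512 security associations to be offloaded for this VM's network adapter(s).
# (512 is just an example value.)
Set-VMNetworkAdapter -VMName $vmName -IPsecOffloadMaximumSecurityAssociation 512

# Setting the value to zero disables IPsec task offload for the VM.
# IPsec itself keeps working; the guest just does the processing on its own vCPUs.
Set-VMNetworkAdapter -VMName $vmName -IPsecOffloadMaximumSecurityAssociation 0

# List the adapter's IPsec-related properties to verify the current setting.
Get-VMNetworkAdapter -VMName $vmName | Format-List Name, *IPsec*
```

The wildcard in the last line is deliberate: it shows whatever IPsec-related properties the adapter object exposes without depending on their exact names.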