UNMAP & ODX Video

Some things are easier to show using a video so have a look at a video on UNMAP/ODX used with Windows Server 2012 R2 and Compellent SAN:

You can also go directly to the Vimeo page by clicking on the below screen shot

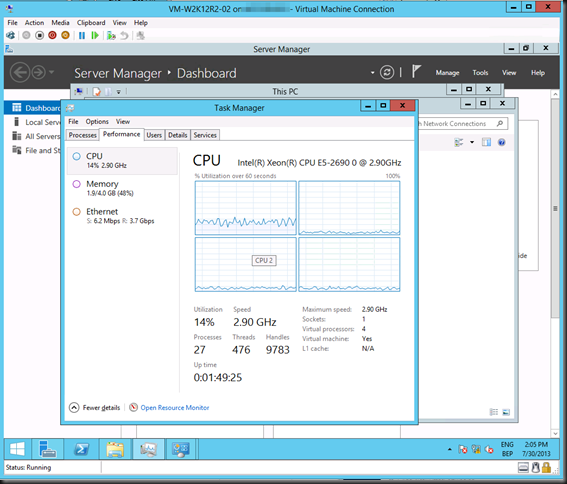

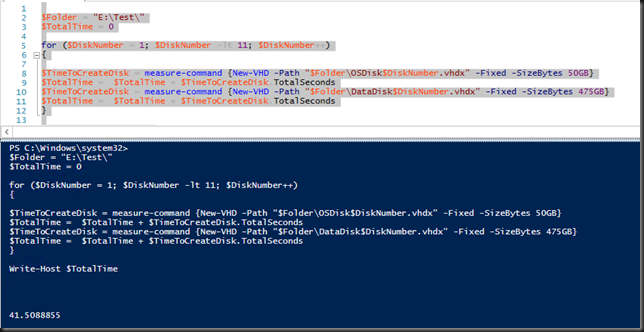

We start out with a 10.5TB large thinly provisioned LUN that has about 203GB of space in use on the SAN. So the LUN on the SAN might be 10.5TB and windows sees a volume that is 10.5TB only the effective data stored consumes storage space on the SAN. That ought to demonstrate the principle of thin provisioning adequately ![]() . The nice PowerShell counter is made possible via the Compellent PowerShell Command Set.

. The nice PowerShell counter is made possible via the Compellent PowerShell Command Set.

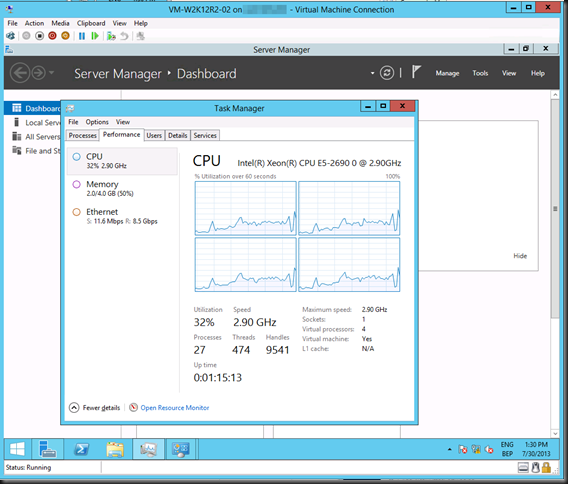

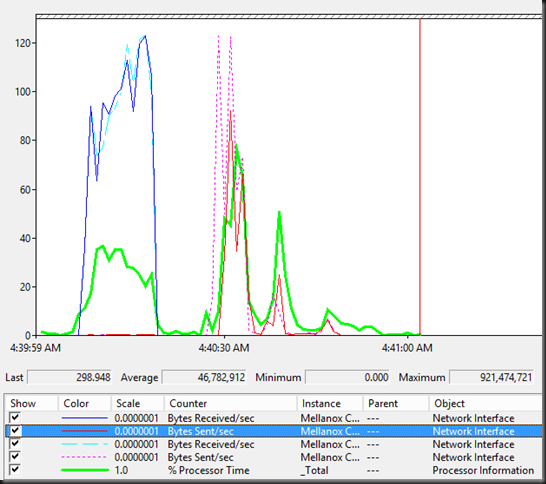

We then copy 42GB worth of ISO files inside a Windows Server 2012 virtual machine from a fixed VHD to a dynamically expanding VDHX. Those are nice speeds. And look at how the size of the VHDX file grows on the CSV volume and how the space used on the SAN is growing. That’s because the LUN is thinly provisioned.

Secondly we copy the same ISO files to a fixed size VHDX. Again, some really nice speeds. As the VHDX is fixed in size you do not see it grow. When looking at the little SAN counter however we do see that the thinly provisioned LUN is using more storage capacity.

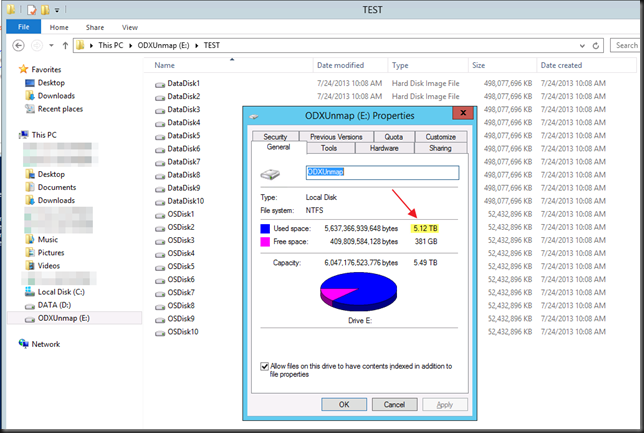

Once that is done we see that the total space consumed on the SAN for that CSV LUN has risen to 284GB. We then delete the data from both dynamically expanding VHDX and are about to run the Optimize-Volume command when we notice that the SAN has already reclaimed the space. So we don’t run the optimize command. Keep that in mind. By the way, this process is done as part of standard maintenance (defrag) and some NTFS check pointing mechanism that’s run every 5 minutes and sends down the info from the virtual layer to the physical layer to the SAN. During demo’s it’s kind of boring to sit around and wait for it to happen ![]() . Just remember that in real life it’s a zero touch feature, you don’t need to baby sit it.

. Just remember that in real life it’s a zero touch feature, you don’t need to baby sit it.

We then also delete the ISO files from the fixed VHDX and run Optimize-Volume G –Retrim and as result you see the space reclaimed on the SAN. As this is a fixed disk the size of the VDHX will not change. But what about the dynamically expanding VHDX? Well you need to shut it down for that. But hey, nothing happens. So we fire it up again and do run Optimize-Volume H –Retrim before shutting it down again and voila.

So what do you need for this?

Rest assured. You don’t need the most high end, most expensive, complex and proprietary SAN hardware to get this done. What you need is good software (firmware) on quality commodity hardware and you’re golden. If any SAN vendor wants to charge you a license fee for ODX/UNMAP just throw them out. If they don’t even offer it walk away from them and just use storage spaces. There are better alternatives than overpriced SANs lacking features.

I’ve found that systems like Equalogic & Compellent are in the sweet point for 90 % of their markets based on price versus capabilities and features. Let’s look at the a Compellent for example. For all practical intend this SAN runs on commodity hardware. It’s servers & disk bays. SAS to the storage & FC, iSCSI or SMB/NFS for access. With capable hardware the magic is in the software. Make no mistake about it, commodity hardware when done right, is very, very capable. You don’t need a special proprietary hardware & processors unless for some specialized nice markets. And if you think you do, what about buying commodity hardware anyway at 50% of the cost and replacing it with the latest of the greatest commodity hardware after 4 years and still come out on top cost wise whilst beating the crap out of that now 4 year old ASIC and reaping the benefits of a new capabilities the technology evolutions offers? Things move fast and you can’t predict the future anyway.