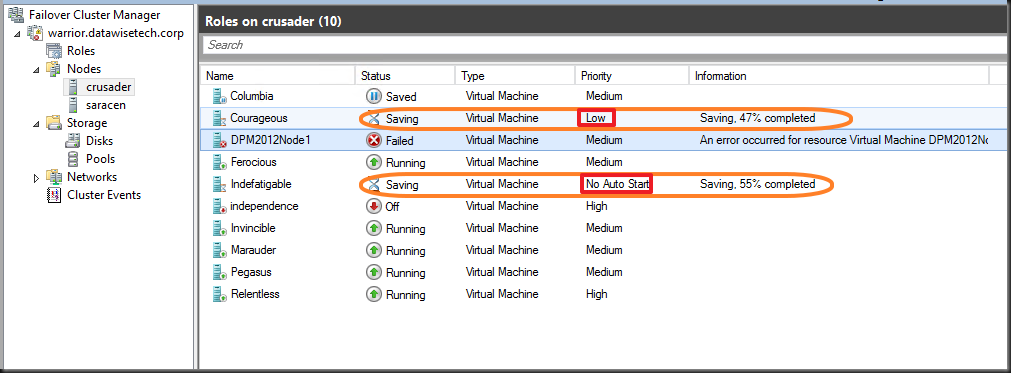

One night I was doing some maintenance on a Hyper-V cluster and wanted to Pause and drain one of the nodes that was up next for some tender loving care. Instead, I was greeted by this message:

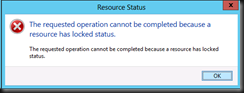

```text
[Window Title]
Resource Status

[Main Instruction]
The requested operation cannot be completed because a resource has locked status.

[Content]
The requested operation cannot be completed because a resource has locked status.

[OK]
```

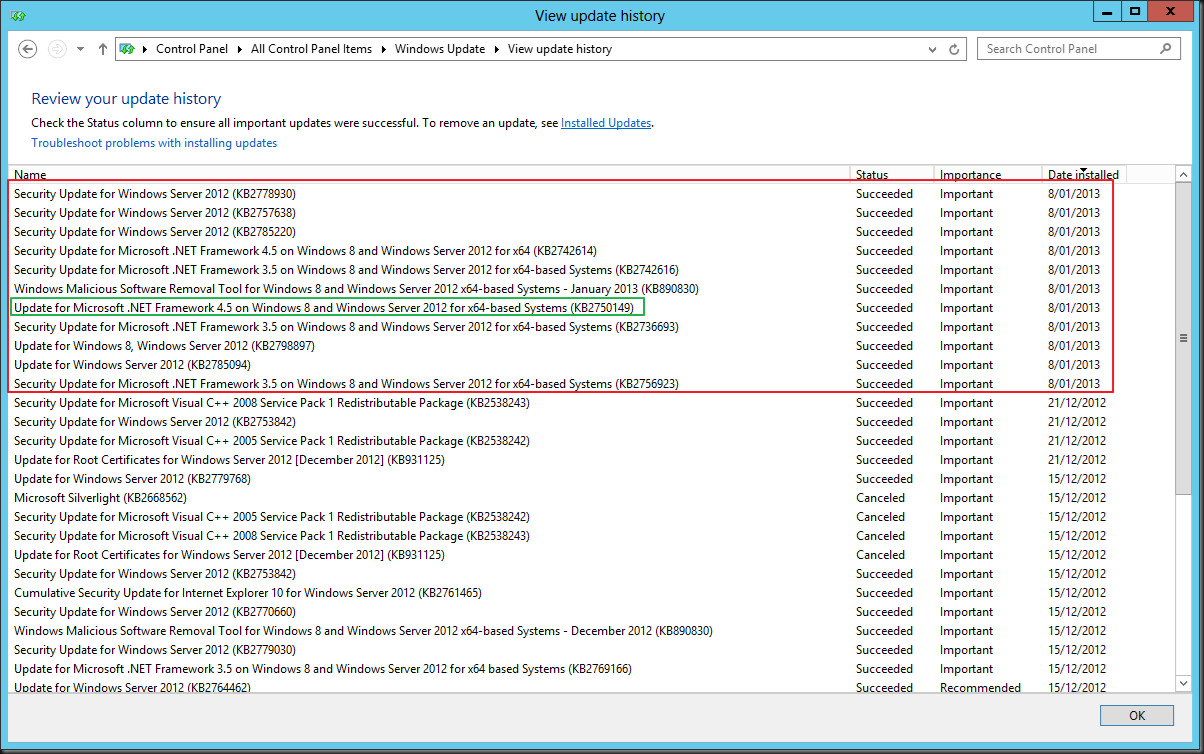

Strange: the cluster is up and running, none of the other nodes have issues and, operation-wise, all VMs are as happy as can be. So what's up? There is not much in the error logs, except for this one related to a backup. Aha… We fire up DiskPart and see some extra LUNs mounted, and using `vssadmin list writers` we find:

```text
Writer name: 'Microsoft Hyper-V VSS Writer'
   Writer Id: {66841cd4-6ded-4f4b-8f17-fd23f8ddc3de}
   Writer Instance Id: {2fa6f9ba-b613-4740-9bf3-e01eb4320a01}
   State: [5] Waiting for completion
   Last error: Unexpected error
```
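To spot this state on a node without eyeballing the output, a small parser can help. Below is a minimal Python sketch, assuming the English-language `vssadmin list writers` output format shown above; `parse_vss_writers`, `unhealthy_writers` and `list_vss_writers` are hypothetical helpers, not part of any Windows tooling. It flags every writer that is not `[1] Stable` with `No error`. A writer can legitimately sit in a non-stable state while a backup is actively running, so a hit is a reason to look closer rather than proof of a fault.

```python
import subprocess
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class WriterStatus:
    name: str
    state: str
    last_error: str


def _field_value(line: str) -> str:
    # Everything after the first colon, e.g. "State: [1] Stable" -> "[1] Stable".
    return line.split(":", 1)[1].strip()


def _to_status(fields: Dict[str, str]) -> WriterStatus:
    return WriterStatus(fields.get("name", ""),
                        fields.get("state", ""),
                        fields.get("error", ""))


def parse_vss_writers(output: str) -> List[WriterStatus]:
    """Turn `vssadmin list writers` text (assumed English locale) into records."""
    writers: List[WriterStatus] = []
    current: Dict[str, str] = {}
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Writer name:"):
            if current:  # a new writer block starts; keep the previous one
                writers.append(_to_status(current))
            current = {"name": _field_value(line).strip("'")}
        elif line.startswith("State:"):
            current["state"] = _field_value(line)
        elif line.startswith("Last error:"):
            current["error"] = _field_value(line)
    if current:
        writers.append(_to_status(current))
    return writers


def unhealthy_writers(writers: List[WriterStatus]) -> List[WriterStatus]:
    # A writer at rest normally reports "[1] Stable" and "No error".
    return [w for w in writers
            if not w.state.startswith("[1]") or w.last_error != "No error"]


def list_vss_writers() -> str:
    # Windows only; vssadmin must be run from an elevated (administrator) prompt.
    result = subprocess.run(["vssadmin", "list", "writers"],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

On a cluster node, something like `for w in unhealthy_writers(parse_vss_writers(list_vss_writers())): print(w)` would list the writers worth a second look before you try to Pause/Drain.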

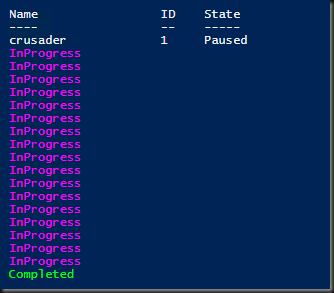

Bingo! Hello old “friend”, I know you! The Microsoft Hyper-V VSS Writer goes into an error state while hardware snapshots of the LUNs are being made when partitions inside the virtual machines are almost or completely full. Take a look at this blog post on what causes this and how to fix it. As a result, we can no longer do live migrations, nor Pause/Drain the node on which the hardware snapshots are being taken.
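Since the trigger is a guest partition running (nearly) out of space, a quick free-space check inside the VMs can catch it before the next hardware snapshot does. Below is a minimal Python sketch, assuming it runs inside each guest; `free_fraction`, `low_space_volumes` and the 10% threshold are hypothetical choices for illustration, not values taken from this post or the one it references.

```python
import shutil
from typing import Iterable, List, Tuple

# Hypothetical threshold: flag a volume once less than 10% of it is free.
# The exact fill level at which the VSS writer fails is not stated; tune as needed.
MIN_FREE_FRACTION = 0.10


def free_fraction(total_bytes: int, free_bytes: int) -> float:
    """Share of a volume that is still free (0.0 for a zero-size volume)."""
    return free_bytes / total_bytes if total_bytes else 0.0


def low_space_volumes(paths: Iterable[str],
                      min_free: float = MIN_FREE_FRACTION) -> List[Tuple[str, float]]:
    """Return (path, free fraction) for every volume below the threshold."""
    flagged: List[Tuple[str, float]] = []
    for path in paths:
        usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
        fraction = free_fraction(usage.total, usage.free)
        if fraction < min_free:
            flagged.append((path, fraction))
    return flagged
```

Inside a Windows guest you might call `low_space_volumes(["C:\\", "D:\\"])` and feed any hits into whatever monitoring you already have.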

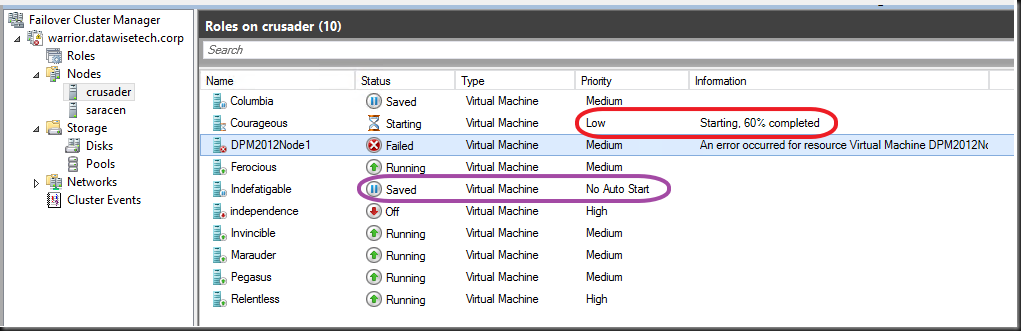

And yes, after fixing the disk space issue on the VM (an SDT who had pumped the VM disks 99.999% full), the Hyper-V VSS writer gets out of its error state and the hardware provider can do its thing. Once the snapshots had completed, everything was fine and I could continue with my maintenance.