What is it?

One of the cool new features that takes scalability in Windows Server 2012 R2 Hyper-V to a new level is virtual Receive Side Scaling (vRSS). Since Windows Server 2012, Receive Side Scaling (RSS) over SR-IOV has been supported, but it’s best suited to specialized environments that require the best possible speeds at the lowest possible latencies. While SR-IOV is great for performance, it’s not as flexible. For example, you can’t team SR-IOV NICs on the host, so if you need redundancy you’ll need to do guest NIC Teaming.

vRSS is supported on the VM network path (vNIC, vSwitch, pNIC) and allows VMs to scale better under heavier network loads. Without RSS support in the guest, a single logical CPU (core 0) has to deal with all the network interrupts. vRSS avoids this bottleneck by spreading network traffic among multiple VM processors, which is great news for data copy heavy environments.
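To make the "spreading" idea a bit more tangible, here is a minimal Python sketch of how RSS-style distribution works in outline: hash each flow, then look up the target processor in an indirection table. This is a toy model, not what Hyper-V actually runs. The function names (`pick_vcpu`, `spread`), the table size of 128 and the sample flows are all made up for illustration, and `zlib.crc32` stands in for the Toeplitz hash that real RSS implementations use.

```python
import zlib
from collections import Counter


def pick_vcpu(flow, num_vcpus, table_size=128):
    """Map a flow to a vCPU: hash the flow tuple, then index an indirection table.

    ASSUMPTION: crc32 is a stand-in for the Toeplitz hash used by real RSS,
    and table_size=128 is an arbitrary illustrative value.
    """
    src_ip, src_port, dst_ip, dst_port = flow
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    flow_hash = zlib.crc32(key)
    # The indirection table maps hash buckets to vCPUs in round-robin order.
    indirection_table = [bucket % num_vcpus for bucket in range(table_size)]
    return indirection_table[flow_hash % table_size]


def spread(flows, num_vcpus):
    """Count how many flows land on each vCPU."""
    return Counter(pick_vcpu(flow, num_vcpus) for flow in flows)


# Hypothetical traffic: 1000 client connections to one file server VM.
flows = [(f"10.0.0.{i % 50 + 1}", 49152 + i, "10.0.1.10", 445) for i in range(1000)]

without_vrss = spread(flows, num_vcpus=1)  # everything lands on vCPU 0
with_vrss = spread(flows, num_vcpus=4)     # flows are shared between 4 vCPUs

print("1 vCPU :", dict(without_vrss))
print("4 vCPUs:", dict(sorted(with_vrss.items())))
```

Note that a given flow always hashes to the same vCPU, so packets within one connection stay in order. It also means a single connection cannot be split over several cores in this model; the benefit shows up when there are multiple flows to spread.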

What do you need?

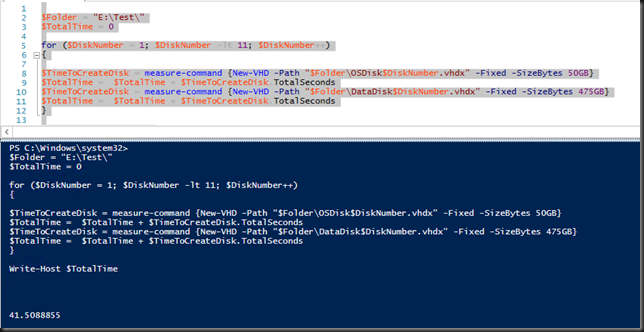

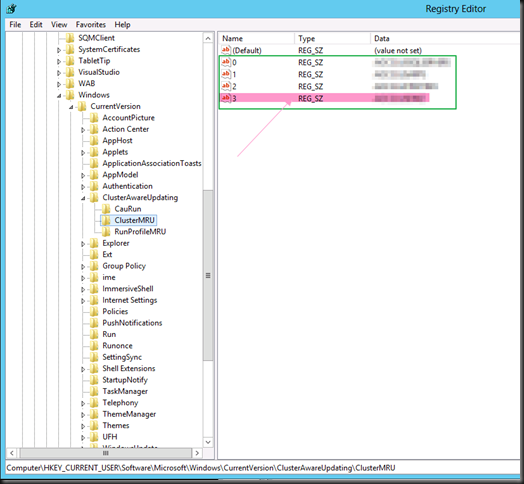

Nothing special. It works with any NIC that supports VMQ, and that’s just about every 10Gbps NIC you can buy or already possess. So no investment is needed. It’s basically the DVMQ capability on the VMQ-capable host NIC that allows vRSS to be exposed inside the VM over the vSwitch. To take advantage of vRSS, VMs must be configured to use multiple cores, and they must support RSS, so turn it on in the vNIC configuration in the guest OS. And don’t try to use a home PC 1Gbps card.

vRSS is enabled automatically when the VM uses RSS on the VM network path. The other good news is that this works over NIC Teaming, so you don’t have to do in-guest NIC Teaming.

What does it look like?

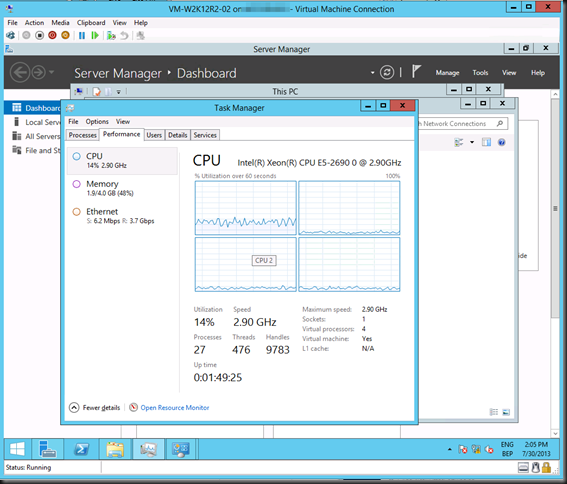

Without SR-IOV it was a serious challenge to push that 10Gbps vNIC to its limit, because all the interrupt handling was dealt with by a single CPU core. Here’s what a VM’s processor looks like under a sustained network load without vRSS. Not too shabby, but we want more.

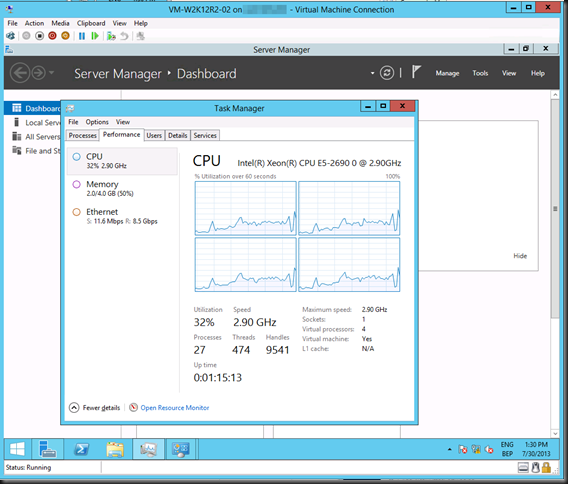

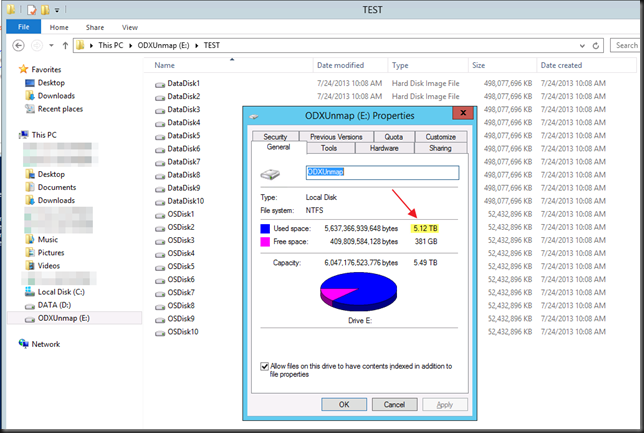

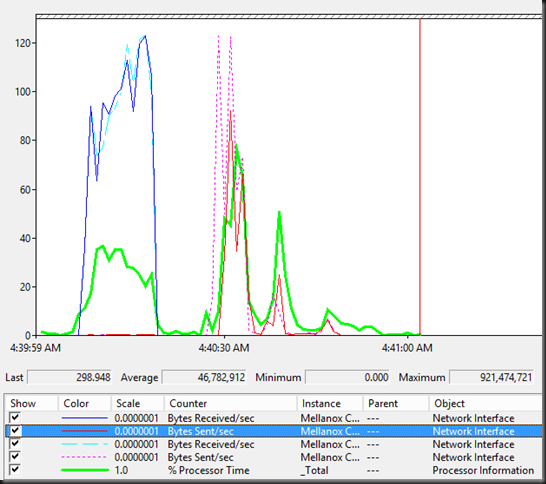

As you can see, the incoming network traffic has to be dealt with by good old vCore 0. While DVMQ allows multiple processors on the host to deal with the interrupts for the VMs, it still means that you have a single core per VM. That one core is possibly a limiting factor (if you can get the network throughput and storage IO, that is). vRSS deals with this limitation. Look at the throughput we got copying a lot of data to the VM below leveraging vRSS. Yeah, that’s 8.5Gbps inside of a VM. Sweet. I’m sure I can get to 10Gbps …
