Disclaimer: These are my musings on the limited info available about Windows Server vNext, based on the Technical Preview bits at the time of writing. So it’s not set in stone & has a time-limited value.

Reading the documentation that’s already available on vNext of Windows, it’s clear that Microsoft is continuing its push towards the software-defined data center. They are also pushing high to continuous availability ever more towards the “continuous” side of things.

It’s early days yet and we’ve only just downloaded the Technical Preview, but what do we read in “What’s New in Storage Services in Windows Server Technical Preview”?

Storage Quality of Service

- They are giving us more Storage Quality of Service, tied into the use of SOFS as storage over SMB3. As way too many NAS solutions don’t support SMB3, or only partially (in a restricted way), it’s clear to me that a self-built SOFS solution on a couple of servers is and remains the best SMB3 implementation on the market, and it has just gotten storage QoS.

Little rant here: when people claim that this is not capable of high performance, I usually laugh. Have you actually built a SOFS or TFFS with 10Gbps networking on modern enterprise grade servers like the DELL R720 or R730? Did you look at the results from that relatively low-cost investment? I think not, really. And if you did and found it lacking, I’ll be very impressed by the workload you’re running. Nowadays you’ll force your storage to its knees earlier than your Windows file server.

- It’s in the SOFS layer, so this does not tie you into Storage Spaces if you’re not ready for that yet but would like the benefits of SOFS. As long as you have shared storage behind the SOFS you’re good.

- It’s policy based and can apply to virtual machines, groups of virtual machines, a service or a tenant.

- The virtual disk is the level where the policy is set & enforced.

- Storage performance will dynamically adjust to meet the policies & when several virtual disks are tied to the same policy, the performance will be fairly distributed among them.

- You can monitor all this.

It’s right there in the OS.
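To get a feel for what “fairly distributed” can mean when several virtual disks share one policy, here’s a minimal sketch in Python. It uses max-min fair sharing, which is my own assumption for illustration and not something the documentation confirms as the actual algorithm. The function name `fair_share`, the disk names and the IOPS numbers are all hypothetical.

```python
def fair_share(limit_iops, demands):
    """Max-min fair split of a shared IOPS limit across virtual disks.

    Toy model only: `limit_iops` stands in for a policy maximum and
    `demands` maps a (hypothetical) virtual disk name to the IOPS it wants.
    This is NOT how Windows Server implements Storage QoS.
    """
    allocation = {}
    remaining = float(limit_iops)
    # Serve the smallest demands first so their unused share flows to the rest.
    pending = sorted(demands.items(), key=lambda item: item[1])
    for index, (disk, demand) in enumerate(pending):
        equal_share = remaining / (len(pending) - index)
        granted = min(demand, equal_share)
        allocation[disk] = granted
        remaining -= granted
    return allocation


if __name__ == "__main__":
    wanted = {"vm1.vhdx": 100, "vm2.vhdx": 400, "vm3.vhdx": 900}
    for disk, iops in fair_share(1000, wanted).items():
        print(f"{disk}: {iops:.0f} IOPS")
```

Light consumers get everything they ask for, and whatever they leave on the table is split among the heavy hitters. Whether the real thing behaves exactly like this is something to verify in the lab once the bits mature.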

Storage Replica

This gives us “storage-agnostic, block-level, synchronous replication between servers for disaster recovery, as well as stretching of a failover cluster for high availability. Synchronous replication enables mirroring of data in physical sites with crash-consistent volumes ensuring zero data loss at the file system level. Asynchronous replication allows site extension beyond metropolitan ranges with the possibility of data loss.”

Look, for Hyper-V we already had Hyper-V Replica (which is also being improved), but for other workloads we still rely on the storage vendors or 3rd party solutions. But now I can have my storage replicas for service protection and continuity out of the box with Windows. WOW!
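The difference between “zero data loss” for synchronous replication and “the possibility of data loss” for asynchronous replication comes down to when a write gets acknowledged. Here’s a toy Python model of that idea. The class `ReplicatedVolume` and its methods are hypothetical names of my own, and it does not attempt to mirror how Storage Replica actually works internally.

```python
class ReplicatedVolume:
    """Toy model of a volume replicated to a second site (illustration only)."""

    def __init__(self, synchronous):
        self.synchronous = synchronous
        self.primary = []    # blocks written at the primary site
        self.secondary = []  # blocks present at the disaster recovery site
        self.pending = []    # acknowledged but not yet replicated (async only)

    def write(self, block):
        self.primary.append(block)
        if self.synchronous:
            # Synchronous: the remote copy exists before the application gets its ack.
            self.secondary.append(block)
        else:
            # Asynchronous: ack right away, ship the block to the other site later.
            self.pending.append(block)
        return "ack"

    def ship_pending(self):
        """Background transfer catches the secondary up (async only)."""
        self.secondary.extend(self.pending)
        self.pending.clear()

    def fail_over(self):
        """Primary site is lost: return the acknowledged blocks that are gone."""
        lost = list(self.pending)
        self.pending.clear()
        return lost


if __name__ == "__main__":
    sync_volume = ReplicatedVolume(synchronous=True)
    sync_volume.write("a")
    sync_volume.write("b")
    print("sync lost:", sync_volume.fail_over())

    async_volume = ReplicatedVolume(synchronous=False)
    async_volume.write("a")
    async_volume.ship_pending()
    async_volume.write("b")
    print("async lost:", async_volume.fail_over())
```

In the synchronous case nothing acknowledged is ever missing on the other side, at the price of every write waiting on the link between the sites. That waiting is why the quote ties synchronous replication to metropolitan distances and leaves anything further away to asynchronous replication.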

And as we read on …

- Provide an all-Microsoft disaster recovery solution for planned and unplanned outages of mission-critical workloads.

- Use SMB3 transport with proven reliability, scalability, and performance.

- Stretch clusters to metropolitan distances.

- Use Microsoft software end to end for storage and clustering, such as Hyper-V, Storage Replica, Storage Spaces, Cluster, Scale-Out File Server, SMB3, Deduplication, and ReFS/NTFS.

- Help reduce cost and complexity as follows:

  - Hardware agnostic, with no requirement to immediately abandon legacy storage such as SANs.

  - Allows commodity storage and networking technologies.

  - Features ease of graphical management for individual nodes and clusters through Failover Cluster Manager and Microsoft Azure Site Recovery.

  - Includes comprehensive, large-scale scripting options through Windows PowerShell.

- Helps reduce downtime, and increase reliability and productivity intrinsic to Windows.

- Provide supportability, performance metrics, and diagnostic capabilities.

I have gotten this to work in the lab with some trial and error, but this is the Technical Preview, not a finished product. If they continue along this path I’m pretty confident we’ll have a functional & operationally viable solution by RTM. Just think about the possibilities this brings!

Storage Spaces

Now I have not read much on Storage Spaces in vNext yet, but I think it’s safe to assume we’ll see major improvements there as well. Which leads me to reaffirm my blog post here: TechEd 2013 Revelations for Storage Vendors as the Future of Storage lies With Windows 2012 R2

Microsoft is delivering more & better software-defined storage in-box. This means cost effective yet very functional storage solutions. On top of that, they put pressure on the market to deliver more value if vendors want to stay competitive. As a customer who wants whatever solution fits my needs best, I welcome that. And as a consumer of large amounts of storage in a world where we need to spend the money where it matters most, I like what I’m seeing.

Tip for Microsoft: configurability, reliability and EASY diagnostics and remediation are paramount to success. Sure, some storage vendor solutions aren’t too great on that front either, but some are awesome. Make sure you’re in the awesome category. Make it a great user experience from start to finish in both deployment and operations.

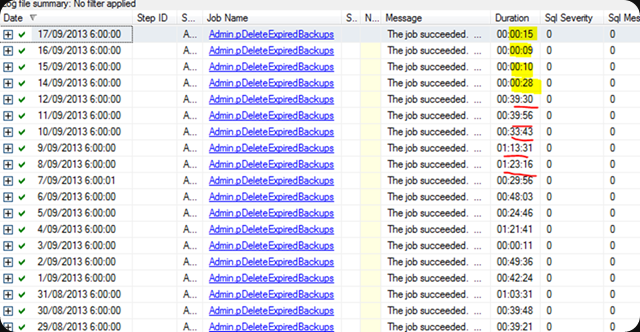

Tip for you: If you’re not ready for prime time with Storage Spaces, SMB Direct etc., do what I’ve done. Use it where it doesn’t kill you if you hit some learning curves. What about Storage Spaces as a backup target where you can now replicate the backups off to your disaster recovery site?