Introduction

I was investigating a very problematic Windows Server 2016 Hyper-V cluster. That cluster was just performing horribly. “Everything” was hanging, stalling, crashing and RHS.exe errors where flying around while WER dumps got created by the dozen. Things were extremely slow up to the points functionality was just failing. The “fun” thing was that the cluster validation wizard while slow gave that cluster a big thumb up and a supported status as all was well.

Prying around

Time to pry around a bit and see if we could find something wrong. We save live migrations stall, fail, last forever in pending or get stuck at a certain percentage, sometimes finally succeeding with ridiculous blackout times. We could not open up virtual machine properties or very slowly. The FCI GUI was highly unresponsive but so was the Hyper-V Manager GUI or even PowerShell. Those were hanging at even loading the virtual machines or enumerating them with Get-VM. Everything was slow to the point it timed out or crashed. Restarting the services (Cluster, Hyper-V) didn’t do anything and restarting VMMS was super slow or just got stuck. It was a depressing sight for which people tended to blame Hyper-V / Microsoft.

As the title gives away it was latency. Not just ordinary high latency. Real bad latency. That kind of latency kills. Extreme latency produces symptoms that are similar to bugs or corrupt components of roles and features. We have a tendency to look at those first in the event logs and then we look at the network and its usual suspects (VMQ, SET, DCB). But nothing pointed to an issue that I could find.

So, storage maybe?Well we did find one Hyper-V host in the cluster with one HBA port producing too many error so we disabled that FC port for testing. No joy the Hyper-V cluster after a clean reboot of all nodes remained problematic. So on to the storage array itself.

Well holy smoke! On the two volumes for CSV in those cluster we saw latencies that were so bad I could not even believe a single VM would boot. It actually made my appreciation for Hyper-V and clustering grow as it managed to do at least a couple of things. With such latencies I would expect the services to just crash & call it a day.

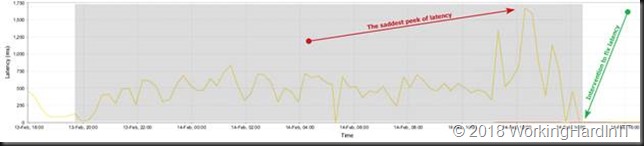

The horrific latency on one of the CSV LUNs.

Looking at the logs we saw that the latencies occurred on the FC HBAs of the controllers. Each one above 50ms, peaking to 150-250ms and one huge peak at almost 500ms. We saw this on all four HBA’s.

The latency on one of the 4 FC HBA’s on one of the controllers. Not a good day. All HBAs had high latencies like this.

The issues were not at the host level (host HBA’s) or not even at the IOPS/bandwidth level of the storage itself. The latency for some reason was spiking. Further investigation lead to the conclusion that the issue was related to synchronous replication going totally wrong. Moving the replication mode to asynchronous fixed that. We’re now investigating why this happened and how to prevent this from happening again. But that’s another story.

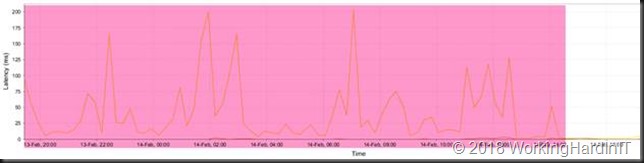

Latency on one of the 4 FC HBAs on one of the controllers after we fixed the issue.

Do not assume anything

So, there you go. Everything depends on everything in some direct or indirect way. It’s all connected and that my friends, is why I’m a proponent of “service resilience engineering” where the responsible team owns the entire stack. That’s is how you can act fast.