Introduction

Hyper-V offers three ways of managing or tweaking the CPU scheduler to provide the best possible configuration for certain scenarios and use cases. The defaults normally work fine, but under certain conditions you might want to tweak them for the best possible outcome. The CPU resource controls at your disposal are:

- Virtual machine reserve – Think of this as the minimum CPU “QoS”

- Virtual machine limit – Think of this as the maximum CPU “QoS”

- Relative Weight – Think of this as the scale defining which VM is more important.

Note that you should understand what these settings are and what they can do. Treat them like spices: select the ones you need and don’t overdo it. They’re there to help you, and if needed you can leverage all three, but it’s highly unlikely you’ll need to do so. Using one or two will serve you best if and when you need them.

In this blog post we’ll look at the virtual machine reserve.

Virtual Machine Reserve

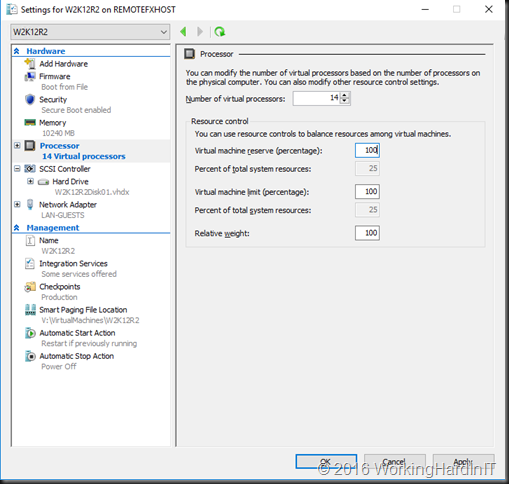

The virtual machine reserve is a minimum CPU guarantee. It is configured at the virtual machine level and applies to all vCPUs of that virtual machine. This means a couple of things. The reserve is guaranteed for the virtual machine that has it configured, so when there is contention for CPU resources the reserve is enforced. Virtual machines with no reserve configured are left to compete for the remaining available resources; they don’t have any “SLA” in the form of minimum guaranteed CPU cycles.

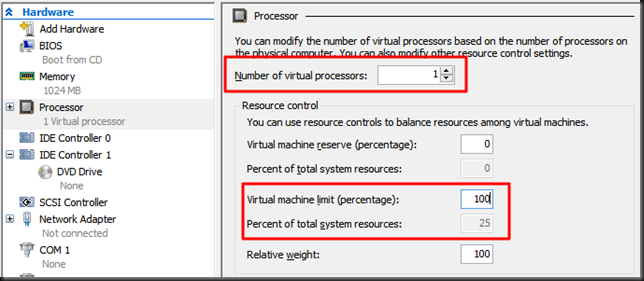

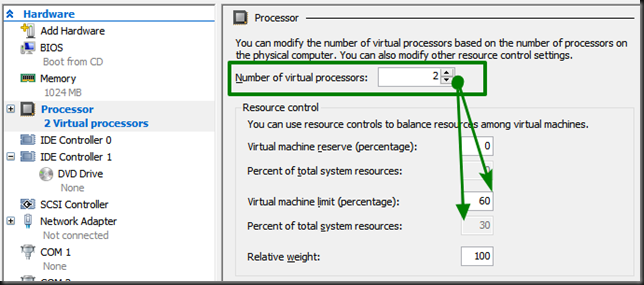

The virtual machine reserve is configured as the minimal % of CPU usage we’ll guarantee a virtual machine to get when there is contention. 0% reserve means that the virtual machine reserve is disabled. This is the default.
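To make the percentage concrete: the reserve is set against the virtual machine's own vCPUs, so what it takes from the host depends on the vCPU count and the number of logical processors. Here is a minimal Python sketch of that arithmetic, assuming the host share is simply reserve × vCPUs / logical processors. The function name and the 56 logical processor host are made up for illustration.

```python
def host_share_percent(reserve_percent, vcpus, host_logical_processors):
    """Percentage of total host CPU that a VM reserve translates to.

    Assumption: share = reserve% * vCPUs / host logical processors.
    """
    if not 0 <= reserve_percent <= 100:
        raise ValueError("reserve must be between 0 and 100")
    return reserve_percent * vcpus / host_logical_processors


# 8 vCPUs with a 100% reserve on a host with 56 logical processors
print(round(host_share_percent(100, 8, 56), 1))   # 14.3
# 56 vCPUs with a 100% reserve on the same host
print(host_share_percent(100, 56, 56))            # 100.0
# A reserve of 0% (the default) claims nothing
print(host_share_percent(0, 8, 56))               # 0.0
```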

So how is this reserve accomplished? When a virtual machine is started, there is a check to see whether the virtual machine reserve can be met. If not, the virtual machine will not be started. This is also the case when you want to quick migrate or live migrate a virtual machine. You cannot migrate a virtual machine to a host that cannot deliver the reserve.

The reserve is guaranteed. When you have started enough virtual machines with a reserve to consume 100% of the host CPU resources, you cannot start another virtual machine that has a reserve configured. This is even the case if all the running virtual machines that together have reserved 100% of the host CPU resources are completely idle. That’s the cost of reserving CPU resources.
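The start-up check can be pictured with a small Python sketch. It is a simplification that only looks at the host-wide total of configured reserves, not at how the hypervisor really places vCPUs, and `can_start` is a hypothetical helper, not a Hyper-V API.

```python
def can_start(running, candidate, host_logical_processors):
    """Return True if the candidate's reserve still fits on the host.

    running is a list of (vcpus, reserve_percent) tuples, candidate is one tuple.
    Assumption: only the configured reserves count, never actual CPU usage,
    so idle VMs block a start just as busy ones do.
    """
    def share(vm):
        vcpus, reserve = vm
        return reserve * vcpus / host_logical_processors

    reserved = sum(share(vm) for vm in running)
    return reserved + share(candidate) <= 100


host_lps = 56
running = [(28, 100), (28, 100)]   # together they reserve 100% of the host

print(can_start(running, (4, 50), host_lps))   # False, even if both VMs are idle
print(can_start(running, (4, 0), host_lps))    # True, no reserve to honor
```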

Also note you can mix virtual machines with and without a reserve configured. That’s perfectly fine.

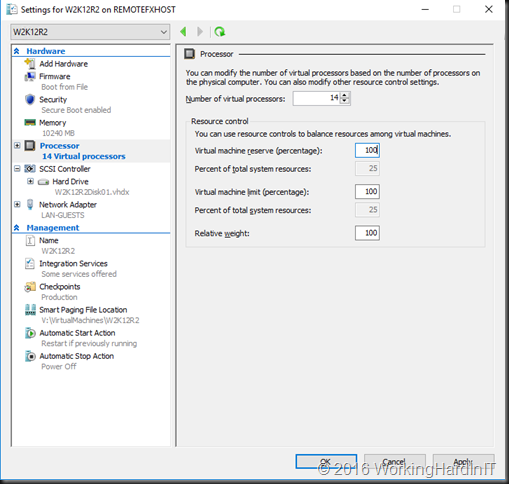

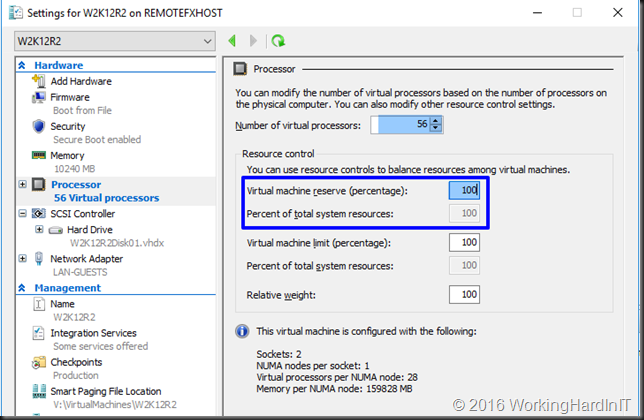

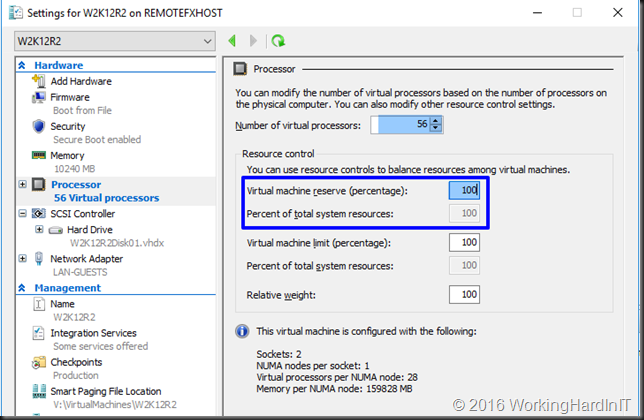

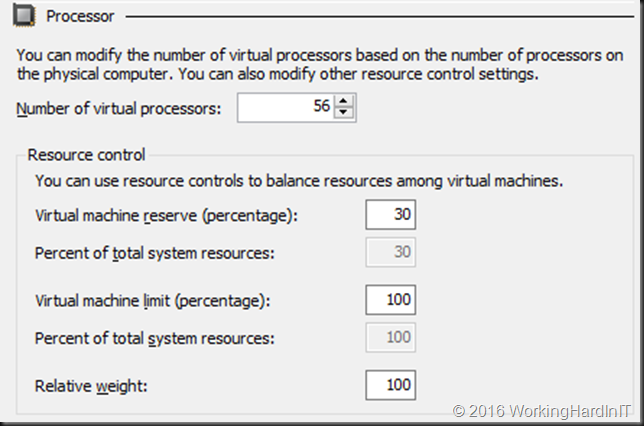

Below is an extreme example. This single virtual machine has 56 vCPUs configured with a 100% reserve. This means that this virtual machine will only start on a host that has no other virtual machines with a reserve running. Virtual machines without a reserve will run, but when this virtual machine requires its minimum to be met they’ll lose their CPU cycles.

So how does this work?

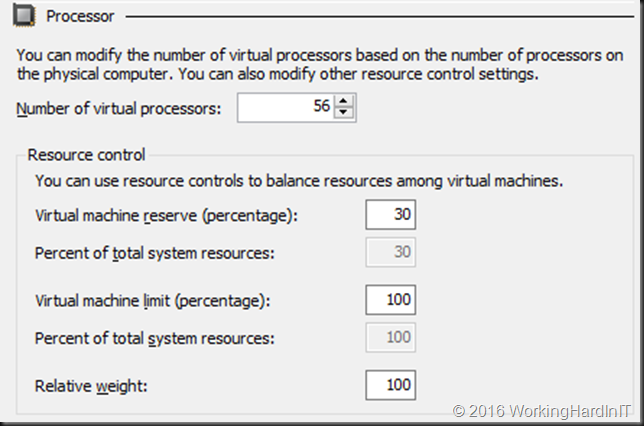

When the virtual machines are running and contention for CPU resources materializes on the host, then the reserve will kick in and guarantee the virtual machines get their guaranteed minimum. If there is no contention this isn’t enforced and all virtual machines can grab CPU resources as long as they are available. Only when a reserve can’t be met due to lack of resources does the CPU scheduler intervene. This means nothing is wasted: other virtual machines are allowed to use what is not needed at any given moment. Yes, virtual machines can use more than their reserve if it’s available and other virtual machines don’t have a need for their reserved CPU cycles. But the moment there is contention, the reserve is guaranteed.

That’s why you cannot reserve more than 100% in total. This, however, makes things a bit more complex in real life. A reserve is not enforced when not needed, but the capacity to honor it does have to be there. This means that if you reserve 30% for a virtual machine with 56 vCPUs, you can’t even reserve 80% for a virtual machine with 8 vCPUs and run those together.

Even though you have 56 cores left with 70% of their CPU unreserved, you cannot find one single core with 80% free, let alone 8 of them. This means you cannot start such a virtual machine when the first one is running, and vice versa. So that’s another caveat you need to consider.
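The 30% versus 80% caveat is easier to see with a toy model. The Python sketch below assumes every vCPU needs its own core with enough unreserved capacity. That is a simplified reading of the behavior described here, not the hypervisor's actual placement logic, and `can_place` is a hypothetical helper.

```python
def can_place(core_reserved, vcpus, reserve_percent):
    """Check whether `vcpus` cores each have `reserve_percent` unreserved.

    core_reserved: list with the percentage already reserved on each core.
    Hypothetical model: every vCPU needs its own core with enough headroom.
    """
    free_enough = [r for r in core_reserved if 100 - r >= reserve_percent]
    return len(free_enough) >= vcpus


# 56 core host running one 56 vCPU VM with a 30% reserve
cores = [30] * 56
print(can_place(cores, 8, 80))    # False: no core has 80% free
print(can_place(cores, 8, 70))    # True: every core still has 70% free

# The other way around: the 8 vCPU VM with an 80% reserve starts first
cores = [80] * 8 + [0] * 48
print(can_place(cores, 56, 30))   # False: only 48 cores have 30% free
```

In this model the host-wide total is well below 100% in both cases, yet the second virtual machine still cannot start.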

Use Cases

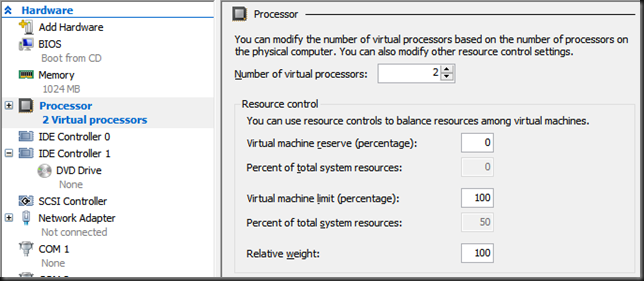

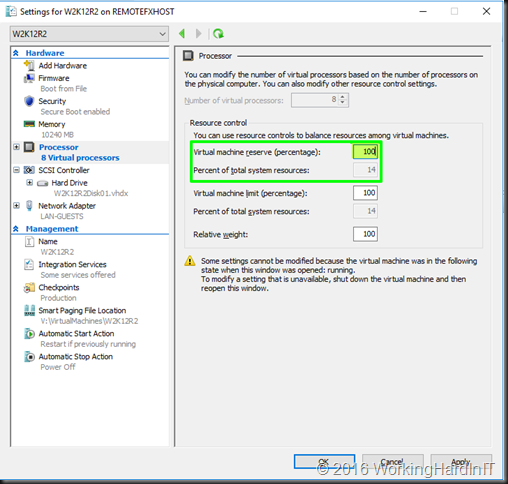

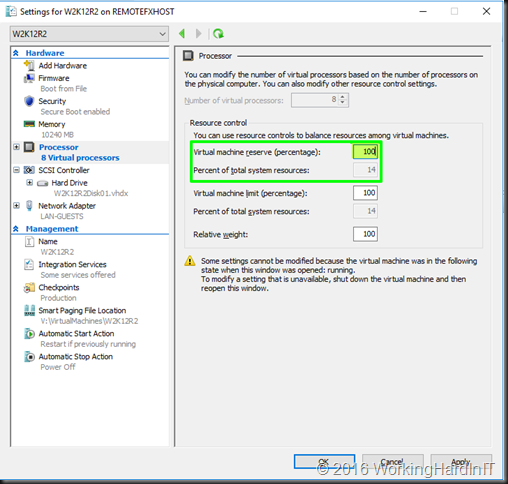

The primary use case of the virtual machine reserve is to enforce an SLA for a virtual machine’s compute needs. When a DBA demands 100% of the 8 vCPUs his SQL Server virtual machine has configured, you can set the reserve to 100%, which is roughly 14% of all CPU resources on the host. On our 56 core host that means at least 8 cores have to have 100% of their resources free, or that SQL Server virtual machine won’t even start as the reserve can’t be guaranteed. When it’s running and there is no contention for resources, other virtual machines can grab whatever they need. The CPU scheduler will only take resources away from the other virtual machines if that’s needed to guarantee the reserve configured for the SQL Server virtual machine.
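To illustrate "only enforced under contention", here is a toy allocation model in Python. It is a sketch under loud assumptions: demand and reserve are expressed as a percentage of total host CPU, and leftover capacity is shared in proportion to unmet demand, whereas the real scheduler also takes weights and limits into account. The VM names and numbers are invented.

```python
def allocate(vms, capacity=100.0):
    """Split host CPU (in % of the host) between VMs.

    vms maps name -> (demand, reserve), both as % of total host CPU.
    Assumptions: reserves sum to at most `capacity`, and leftover capacity is
    shared in proportion to unmet demand (a simplification).
    """
    total_demand = sum(d for d, _ in vms.values())
    if total_demand <= capacity:
        # No contention: reserves are not enforced, everyone gets its demand.
        return {name: d for name, (d, _) in vms.items()}

    # Contention: honor each reserve first (never more than the VM demands).
    grant = {name: min(d, r) for name, (d, r) in vms.items()}
    leftover = capacity - sum(grant.values())
    unmet = {name: d - grant[name] for name, (d, _) in vms.items()}
    total_unmet = sum(unmet.values())
    for name in grant:
        grant[name] += leftover * unmet[name] / total_unmet
    return grant


# No contention: the SQL Server VM is mostly idle, the others take what they need
print(allocate({"sql": (5, 14), "web": (60, 0), "batch": (30, 0)}))
# {'sql': 5, 'web': 60, 'batch': 30}

# Contention: SQL Server gets its reserved 14%, the rest share what is left
print(allocate({"sql": (14, 14), "web": (60, 0), "batch": (60, 0)}))
# {'sql': 14.0, 'web': 43.0, 'batch': 43.0}
```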

Some people get creative and use the virtual machine reserve to allocate 100% of all host CPU resources to the virtual machines running on a host. This means all resources are taken, and they’ll need to rebalance the reservations if they want to add more virtual machines with a certain reserve. This only works if you can enforce that all the virtual machines are created with a reserve. If not, some of the new virtual machines might not get any CPU cycles when there is a lot of contention.

Limitations

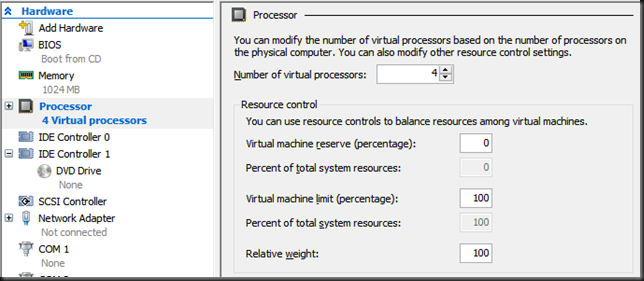

It can become high maintenance. Consider people setting up CPU reserves for all virtual machines (let’s say 14 of them, each with 2 vCPU) on a host with 2*14 cores. That means we can give each virtual machine a reserve of 10%. They all get 2*10% of 28 cores.

When you now add a virtual machine with a reserve to the host, you’ll need to reset the reserves to accommodate the additional virtual machine. As said, this can be used to make sure no VM gets deployed and started without you noticing (someone will complain when a VM doesn’t start), but this is tedious to manage.

Also note that this game of percentages plays out per host, but those hosts can be part of a cluster. When you reserve 100% of the CPU resources on all nodes of a cluster, things will go wrong: live migration and failover will run into issues, as extra virtual machines can’t start on nodes that haven’t got sufficient CPU resources left. You need to account and plan for this.
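A quick way to reason about this is a headroom check per node. The Python sketch below assumes identical nodes and that reserves can be spread freely over the surviving nodes, which is optimistic since real placement happens per virtual machine. The node names and the helper are hypothetical.

```python
def nodes_that_can_fail(reserved_by_node):
    """Return the nodes whose reserved load fits on the remaining nodes.

    reserved_by_node maps node name -> % of that node's CPU already reserved.
    Assumptions: identical nodes, and reserves can be spread freely over the
    survivors (real placement is per VM, so this is an optimistic bound).
    """
    ok = []
    for failed, load in reserved_by_node.items():
        headroom = sum(
            100 - reserved
            for node, reserved in reserved_by_node.items()
            if node != failed
        )
        if load <= headroom:
            ok.append(failed)
    return ok


# Every node fully reserved: no failover or live migration target is left
print(nodes_that_can_fail({"node1": 100, "node2": 100, "node3": 100}))
# []

# 60% reserved per node leaves room to absorb one failed node
print(nodes_that_can_fail({"node1": 60, "node2": 60, "node3": 60}))
# ['node1', 'node2', 'node3']
```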

Conclusion

Virtual machine reserve is a powerful tool to have when needed. You do need to understand its behavior very well and take that into account for startup, quick migrations and live migrations.

If you do that and use it wisely, it can help you achieve SLAs and the best possible guaranteed performance when needed.