So how do you like THEM apples?

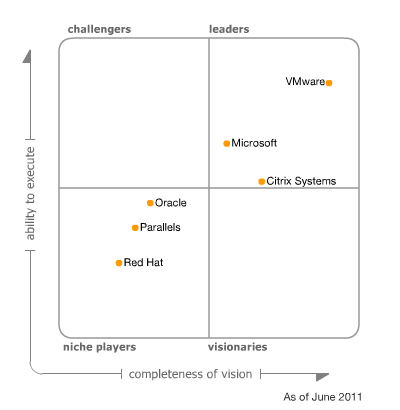

Well, take a look at this, people. On June 30th Gartner published the following Magic Quadrant for x86 Server Virtualization Infrastructure:

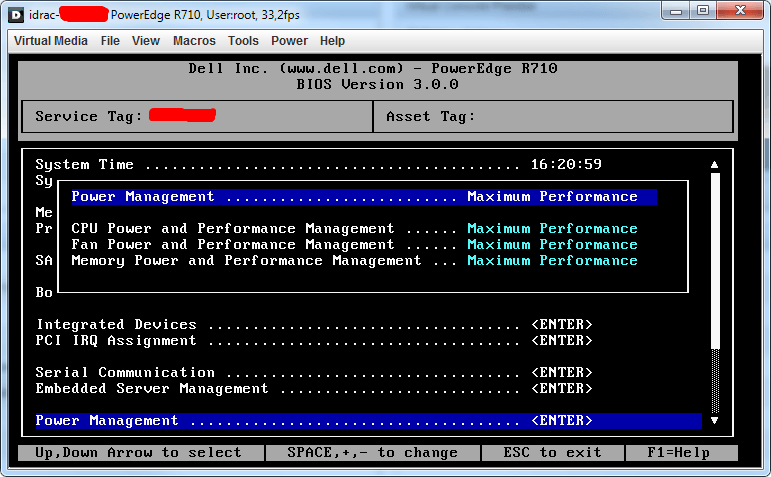

Figure 1: Magic Quadrant for x86 Server Virtualization Infrastructure (Source: Gartner 2011)

That’s not a bad spot if you ask me. And before the “they paid their way up there” remarks flow in: Gartner works how Gartner works, and it works like that for everyone (read: “the other vendors” on there), so that remark could fly right back into your face if you’re not careful. To get there in 3 years’ time is not a bad track record. And if you believe some of the people out there, this can’t be true. Before Hyper-V was available Microsoft only had Virtual Server to offer, and I was not using that anywhere. No, not even for non-critical production or testing, as the lack of x64 VM support made it a “no go” product for me. So the success of Hyper-V is quite an achievement. Back in 2008, I did go with Hyper-V as a highly available virtualization solution, after having tested and evaluated it during the Beta & Release Candidate time frame. Some people thought I was making a mistake.

But the features in Hyper-V were “good enough” for most of the needs I had to deal with. And yes, I knew VMware had a richer offering and was proven technology, something people never forgot to mention to me for some reason. I guess they wanted to make sure I hadn’t been living under a rock for the last couple of years. However, they never mentioned the cost or some of the trends, nor did they look at the customer’s real needs. Hyper-V was a lot better than what most environments I had to serve were running at the time. In 2008 the people I needed to help were using VMware Server or Virtual Server. Both were/are free, but they are not suitable for anything more than lightweight applications on the “not that important” list. If you’re going to do virtualization structurally you need high availability to avoid the risks associated with putting all your eggs in one basket. However, as you might have guessed, these people did not use ESX. Why? In all honesty, the associated cost.

In the 2005-2007 time frame servers were not yet at the cost/performance ratio they reached in 2008, and that was a far cry from where they are now. Those organizations didn’t do server virtualization because of the cost, both in licensing fees for functionality and in hardware procurement. It just didn’t fit in yet. Then the hardware cost barrier came down, and with Hyper-V 1.0 we got a hypervisor that we knew could deliver something that was good enough to get the job done at a cost they could cover. We also knew that Live Migration and Dynamic Memory were in the pipeline and that the product would only become better. Having tested Hyper-V, I knew I had a technology to work with at a very reasonable price (or even for free), and that included high availability.

Combine this with the notion at the time that hypervisors were becoming commodities and that people were announcing the era of the cloud. Where do you think the money needs to go? Management & Applications. Where did Microsoft help with that? The System Center suite: System Center Virtual Machine Manager and Operations Manager. Are those perfect in their current incarnations? Nope. But have you looked at the SCVMM 2012 Beta? Do you follow the buzz around Hyper-V 3.0 or vNext? Take a peek and you’ll know where this is going. Think private & hybrid cloud. The beef of the MS stack lies in the hypervisor & management combination: management tools and integration capabilities that help with application delivery and hence with the delivery of services to the business. Even if you have no desire or need for the public cloud, do take a look. Having a private cloud capability enhances your internal service delivery. Think of it as “Dynamic IT on Steroids”. Having a private cloud is a prerequisite for having a hybrid cloud, which aids in the use of the public cloud when that time comes for your organization. And if it never comes, no problem: you have gotten the best internal environment possible, with no money or time lost.
See my blog for more musings on Private Clouds, Hybrid Clouds & Public Clouds.

Are Hyper-V and System Center the perfect solution for everyone in every case? No sir. No single product or product stack can be everything to everyone. The VMware versus Hyper-V mud-slinging contests are at best amusing when you have the time and are in the mood for them. Most of the time I’m not playing that game. The consultant’s answer is correct: “It depends”. And very few people know all virtualization products very well and have equal experience with them. But when you’re looking to use virtualization to carry your business into the future, you should have a look at the Microsoft stack and see if it can help you. True objectivity is very hard. We all have our preferences and monetary incentives, and there are always those who’ll take it to extreme levels. There are still people out there claiming you need to reboot a Windows server daily and that they have BSODs all over the place. If that is really the case, they should not be blaming the technology. If the technology were that bad, they would not need to try and convince people not to use it; people would run away from it by themselves and I would be asking you if you want fries with your burger. Things go “boink” sometimes with any technology. Really, you’d think it was created by humans, go figure. At BriForum 2011 in London this year it was confirmed that more and more we’re seeing multiple hypervisors in use at medium to large organizations. That means there is a need for different solutions in different areas. It was also noted that Hyper-V was doing particularly well in greenfield scenarios.

Am I happy with the choices I made? Yes. We’re getting ready to do some more Hyper-V projects, and those plans even include SCVMM 2012 & SCOM 2012 together with an upgrade path to Hyper-V vNext. I mailed the Gartner link to my manager, pointing out that my obstinate choice back then turned out rather well.
