Transparent Failover and node fault tolerance with SMB 2.2 in Windows 8 Server is something that caught my attention immediately. The entire effort in infrastructure has been to keep the plumbing as invisible and unnoticed as possible. In some areas we've had great success, in others not so much. Planned and unplanned downtime of file servers has always been an issue: there was always a shorter or longer outage, and any failover meant disconnecting and reconnecting, leading to all kinds of end user problems and confusion. To them, the network is down. The same issues exist on the server side, with apps depending on file shares, or servers like SQL Server that write backups to a remote share or read data from such a share. Often it takes some kind of human intervention to correct the situation. Not even 3rd party clustered file systems and active-active clustering software could achieve this, because the SMB protocol prior to 2.2 did not allow for it.

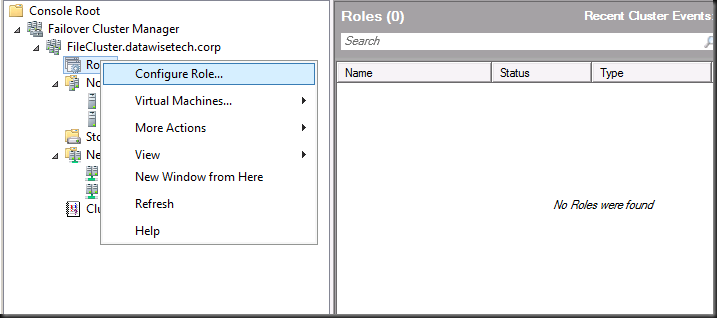

So when one hears it is a possibility now, we want to test this! We throw some virtual machines on the test cluster and build a file cluster with Windows 8 Server, with a 3rd server acting as an SMB 2.2 client. Open the Failover Cluster Manager, right click Roles and choose to configure a role.

You’ll see the familiar wizard, click next

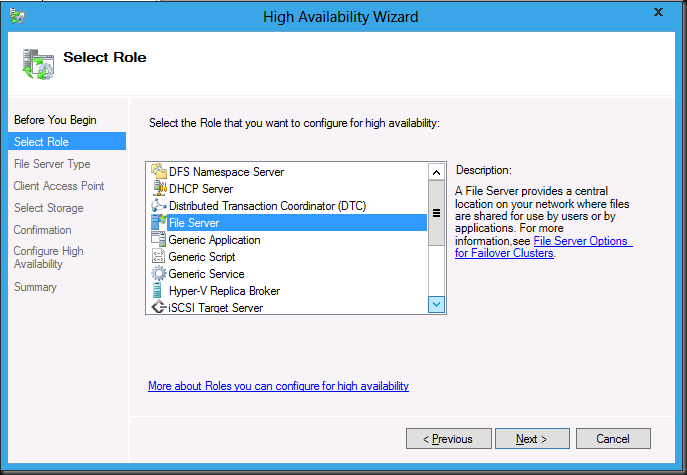

And choose the file server role

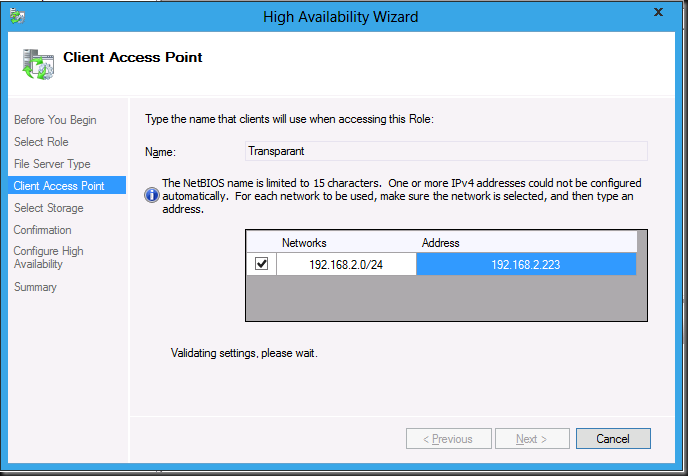

Give the Client Access Point a name and add an IP address.

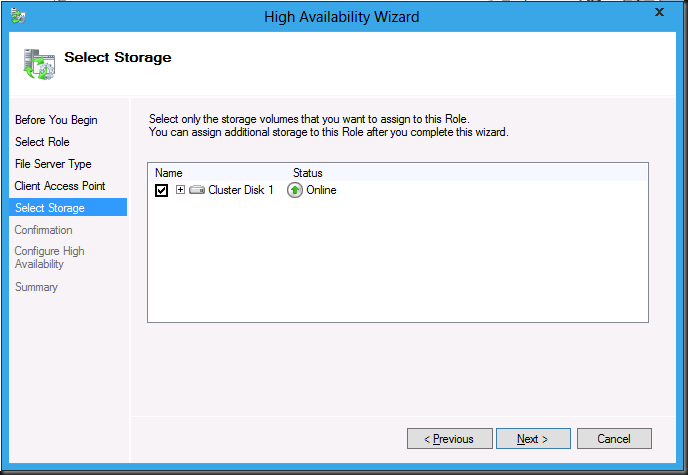

Add some storage

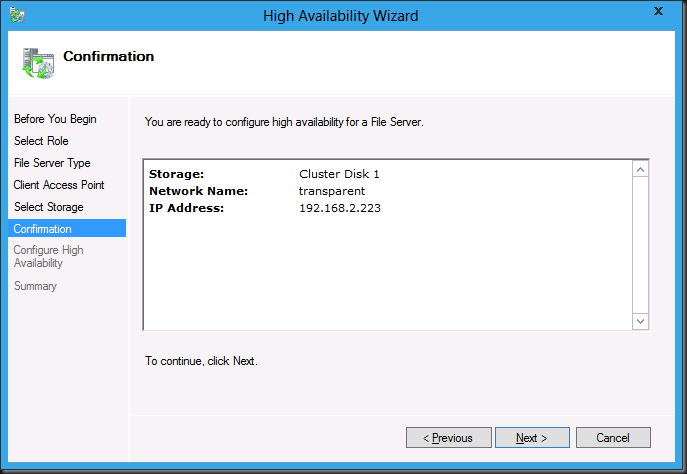

And voila … after the confirmation we’re asked to configure high availability

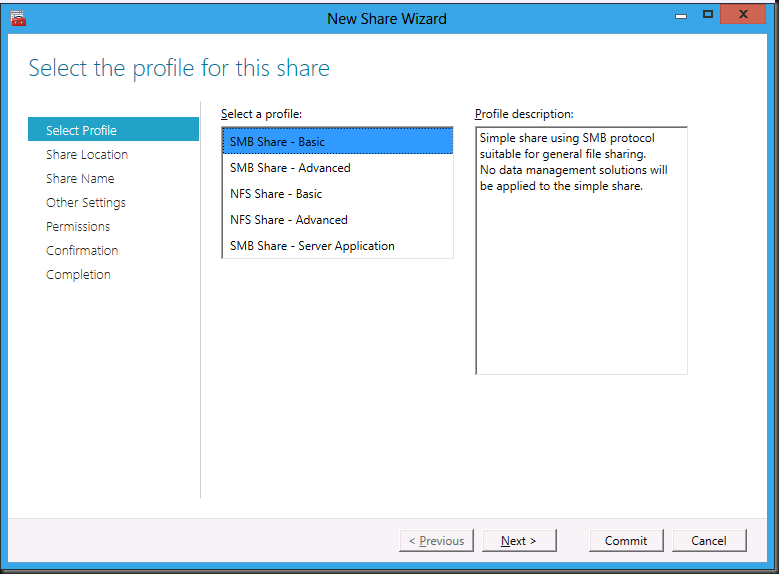

This opens the New Share Wizard

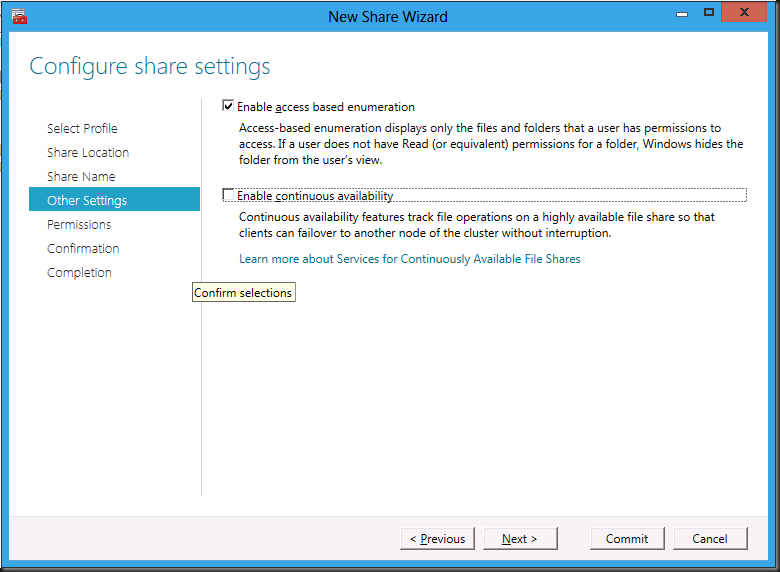

…this is all pretty straightforward, so I’ll leave out the screenshots except for the most important one, where we explicitly uncheck “Enable continuous availability” as we want to first run a test without it.

Continue through the wizard & voila, you have a clustered file server with a Client Access Point as a single namespace. Please note that you can connect to this using that single namespace. No need for \\ServerA\BizzShare & \\ServerB\BizzShare and going fancy with redundant DFS namespaces and the like.

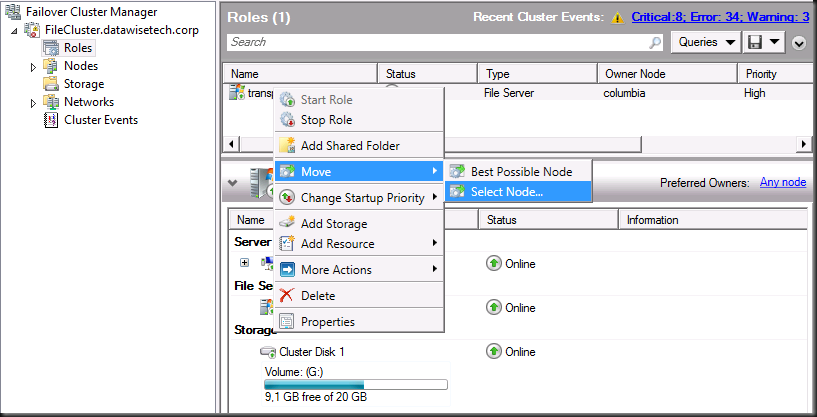

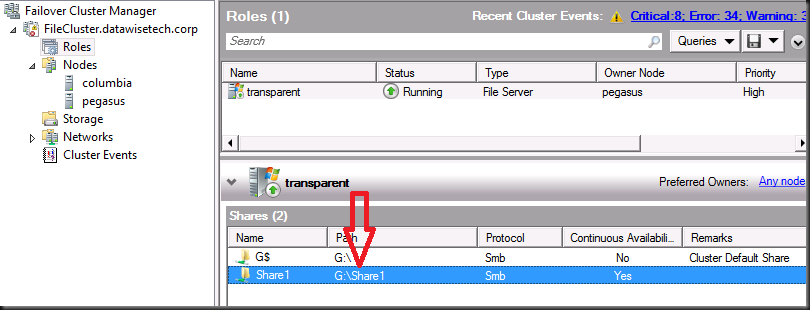

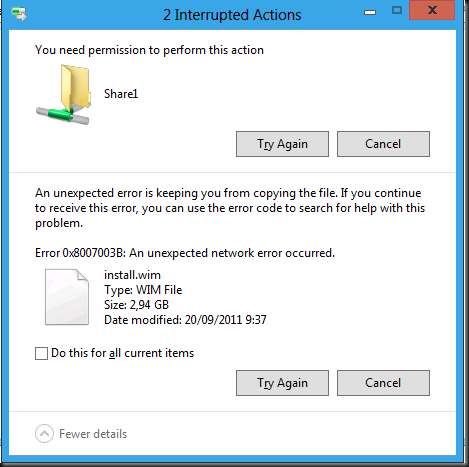

Remember we still need to make this share highly available but let’s do some file copies and fail over the node to see what this looks like without transparent failover. Select the role transparent, right click, choose “Move” and “Select Node”.

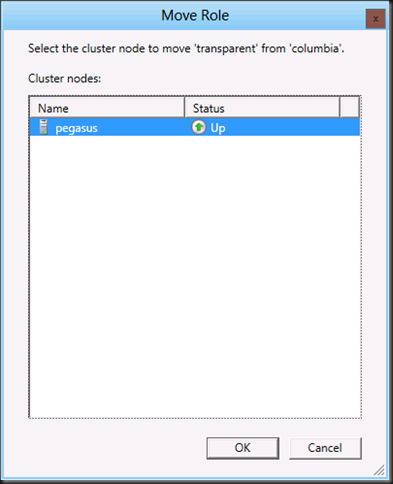

Choose an available node and click OK

As you can see this looks rather familiar.

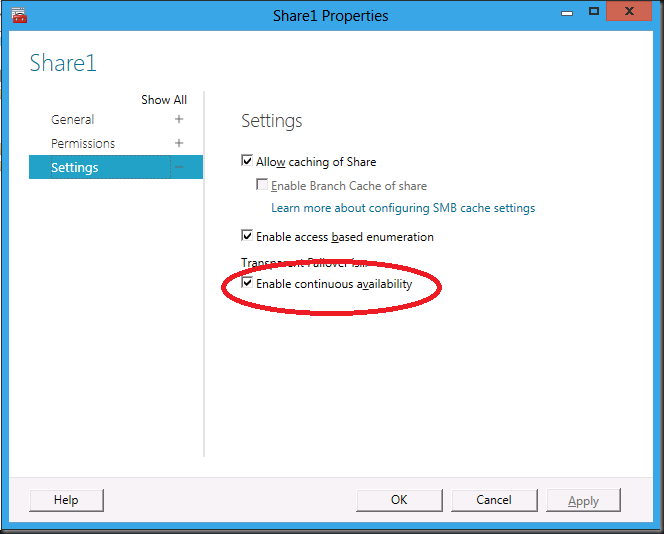

Let’s make that share continuously available. Go to and double click on the share you want to configure.

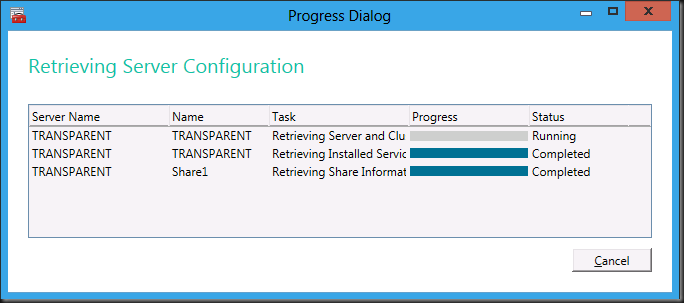

You’ll see a progress dialog whilst information is retrieved …

… and then the share properties are presented, most is familiar stuff but we need the bottom one “Settings”. Select the check box to make the share continuously available.

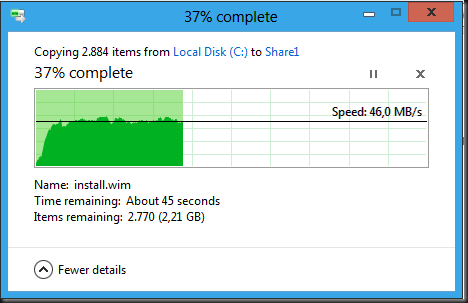

Now let’s try that file copy again whilst failing over the file server role to another node.

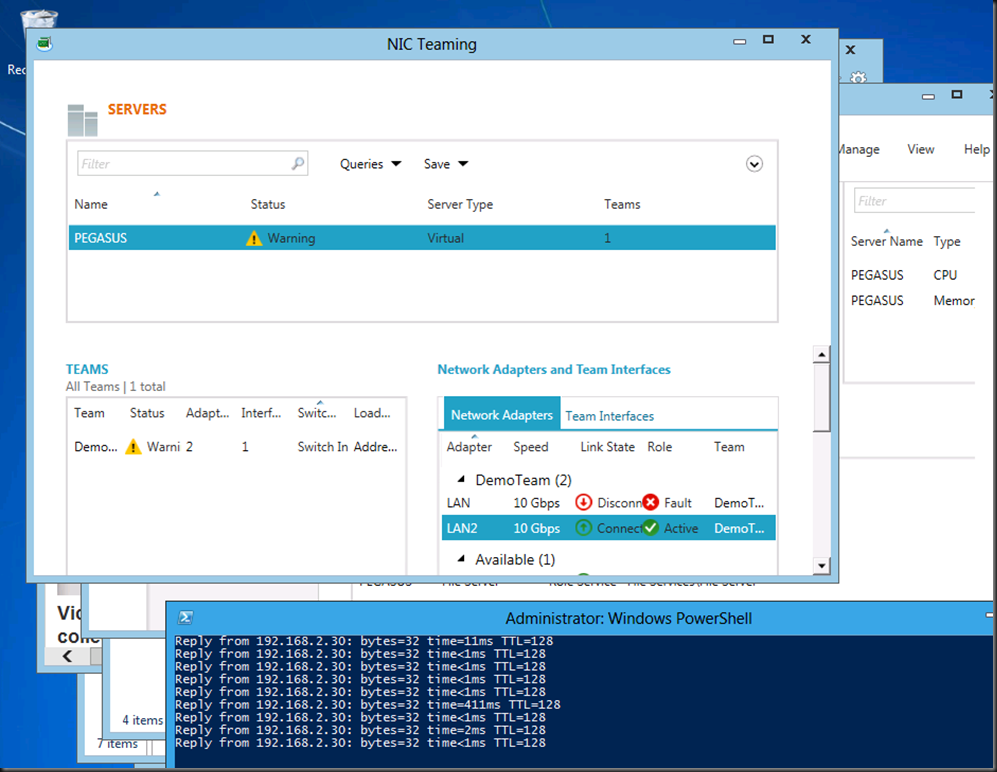

So there is no loss of data, no need for the client to reconnect, and you don’t have to retry, but you do get a freeze that lasts for about 20 seconds in my test lab. I hope this will still improve before RTM.
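If you want numbers instead of eyeballing the copy dialog, a small script on the client can time the freeze and check that nothing was lost. The following is only a rough sketch, not part of my original test: it copies a file in chunks, records the longest time any single chunk took (which should roughly match the freeze during failover), and compares SHA-256 hashes afterwards. The function names, the chunk size and the paths in the usage comment are hypothetical, and the `os.fsync` call is my assumption about how to push each chunk out to the server before the clock stops.

```python
import hashlib
import os
import time


def copy_with_stall_timing(src, dst, chunk_size=1024 * 1024, clock=time.monotonic):
    """Copy src to dst in chunks; return (bytes_copied, longest_chunk_seconds)."""
    copied = 0
    longest = 0.0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            started = clock()
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            # Assumption: flush + fsync so the chunk is handed to the share
            # before we stop timing, rather than sitting in a local buffer.
            fout.flush()
            os.fsync(fout.fileno())
            longest = max(longest, clock() - started)
            copied += len(chunk)
    return copied, longest


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Example usage (hypothetical paths; start the copy, then move the role):
#   copied, longest = copy_with_stall_timing(
#       r"C:\Temp\bigfile.bin", r"\\MyOldFileServer\BizzShare\bigfile.bin")
#   print(f"{copied} bytes copied, longest stall {longest:.1f} s")
#   print("intact:", sha256_of(r"C:\Temp\bigfile.bin")
#         == sha256_of(r"\\MyOldFileServer\BizzShare\bigfile.bin"))
```

With a continuously available share I would expect the copy to finish with matching hashes and a longest stall in the neighbourhood of the freeze you saw; without it, the copy should simply raise an error when the role moves.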

What we learned here is that we can have Transparent (File Share) failover with SMB 2.2 in a virtualized environment, and we can give it a “Client Access Point” name like “MyOldFileServer” so that users are not confused and don’t need to learn another UNC path. There are many options for keeping old namespaces around for end user ease of use, but this is an extra ace up our sleeve. For now, planned (patching, server maintenance) or unplanned (crash) downtime is a 20 second freeze experience as the file share fails over. This freeze is probably due to active-passive clustering. Active-active is currently not recommended/supported for file sharing in an end user scenario. I think they are “worried” about huge file shares with a zillion metadata updates to sync. It is supported for apps like Hyper-V, SQL Server backups or apps needing file data. I’m going to try that next, and for user data too. Things might change before RTM, and with multichannel, RDMA, 10Gbps and NIC teaming in the OS perhaps that active-active scenario might become feasible for user file data? PLEASE? Otherwise here’s another request for “Windows 8 Server R2”.

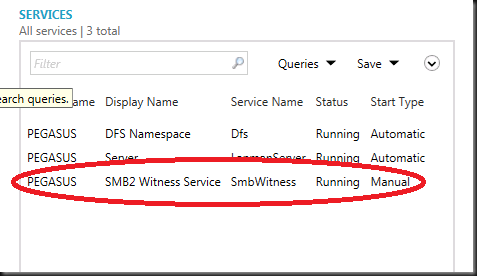

The secret sauce is in:

- SMB 2.2 on both client & server

- Resume Key

- SMB 2.2 Witness service, which is set to running when you make a share continuously available.

Go watch the sessions from the Build conference to hear more on all this. The work they’ve put into this and some of the complexities are quite amazing. http://www.buildwindows.com/Sessions

Things to find out: how to rename a Client Access Point or how to delete it. Adding a new one is easy.

Warning: It’s September 23rd 2011 and the Developer Preview is a little rough around the edges, so don’t run this on anything you need to get your bills paid yet.