Introduction

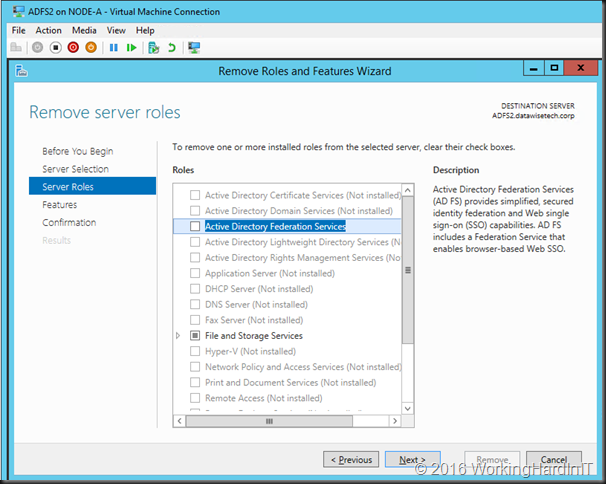

When you have a Windows Server 2016 RD Gateway server and you expect to be able to import a configuration XML file, you might find yourself in a pickle when you are also using local resources. That's because importing an RD Gateway configuration file with policies referencing local resources wipes all policies clean! By local resources I mean local user accounts and groups. These are leveraged more than I imagined at first.

When does it happen?

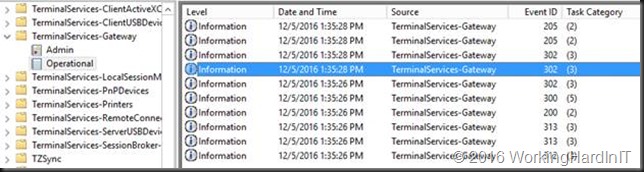

In the past I have blogged about migrating RD Gateway servers that contain policies referencing local resources here: Fixing Event ID 2002 “The policy and configuration settings could not be imported to the RD Gateway server “%1” because they are associated with local computer groups on another RD Gateway server”.

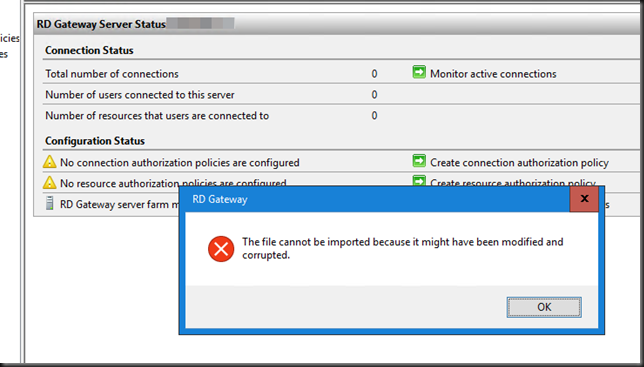

We used to be able to use the trick of making sure the local resources exist on the new server (either by recreating them there via the server migration wizard or manually) and changing the server name in the exported configuration XML file to successfully import the configuration. That no longer works. You get an error.

As far as migrations from older versions go, they work fine as long as you don’t have policies with local resources. Otherwise you’d better do an in-place upgrade or recreate the resources and policies on the new servers. The method described in my earlier blog post no longer works. That’s too bad. But it gets worse.

Import of RD Gateway configuration file with policies referencing local resources wipes all policies clean!

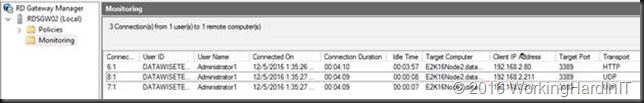

As said, it doesn’t end there. The issue occurs even when you try to import the configuration onto the same server you exported it from. That’s really bad, as an export followed by an import is a quick way to protect against any mistakes you might make and lets you get back to the original configuration.

What’s even worse, when the import fails it wipes ALL the policies on the RD Gateway server. That is dangerous! So yes, the import of an RD Gateway configuration file with policies referencing local resources wipes all policies clean!

Precautions

Only a backup or a checkpoint can save you then (or you recreate them all manually)! Again, this is only the case when the exported configuration file references local resources! The fastest way to clean out an RD Gateway configuration on Windows Server 2016 is actually importing a configuration export that contains a policy referring to a local resource. Ouch! I’m not aware of a fix to date.

For now your only protection is a checkpoint or a backup. Depending on where and how you source your virtual machines, you might not have access to a checkpoint.
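
As an extra precaution, you could scan an export file for references to local accounts and groups before you even think about importing it. Below is a minimal Python sketch of that idea, not a tested tool. It assumes that local resources appear in the exported XML as plain text in the form COMPUTERNAME\name, which you should check against your own export file. The function name `find_local_references` is made up for illustration. It only searches text and never touches the RD Gateway server.

```python
import re


def find_local_references(export_text, computer_name):
    """Return sorted, de-duplicated account names that look local to computer_name.

    Assumption: local accounts and groups are written as COMPUTERNAME\\name
    somewhere in the export. This is a plain text search, not an XML parse.
    """
    pattern = re.compile(
        # Do not match when the computer name is only the tail of a longer name.
        r"(?<![\w-])"
        + re.escape(computer_name)
        # A literal backslash, then the account name up to a likely delimiter.
        + r"\\([^\\<>;,\"'\r\n]+)",
        re.IGNORECASE,
    )
    return sorted({m.group(1).strip() for m in pattern.finditer(export_text)})
```

To use it, read the export file into a string and pass it in together with the name of the server the export came from. If the returned list is not empty, treat the file as unsafe to import and make sure you have a backup or checkpoint first.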

You have been warned, be careful.