Thin Provisioning With Hyper-V

Windows Server 2012 provides thins provisioning at the virtual layer via the VHDX file format. It also provides it at the physical storage layer when your storage supports it. For the later don’t forget that this also means Storage Spaces! So even in environments where budgets are really tight you can leverage this on the physical storage now. So its not just for the feature rich SAN owners anymore  .

.

Even if you use a storage sub system that does not support thin provisioning at the physical layer you will benefit from this mechanism when you use dynamic VHDX files. Not only will these grow less but during shut down they shrink by the size of the empty blocks. Pretty cool! I do however see a potential risk for increased fragmentation. This has a negative impact on performance and needs defragmentation to remediate which also has an impact on IO performance. How much this is a concern depends on your environment and needs. We’ll also have to see in real life how well dynamic VHDX files live up to their performance improvements they got with Windows Server 2012 to entice more people to use this. You have proponents and naysayers. I’m selective and let the circumstances and needs/requirements decide.

Thin Provisioning at the Virtual Layer

You can take a look at the TechEd 2012 session VIR301 by Senthil Rajaram to see how VHD versus VHDX behaves in regards to thin provisioning. I will not repeat all of this here. What I am going to do is look at some other situations.

Important note: You get this UNMAP feature automatically in Windows. There’s no need to manually run the Optimize-Volume command we’ll use in the scenarios below. It’s run automatically for us when the standard Defrag scheduled task runs or during the NTFS check pointing mechanisms that sends the info down every 5 minutes. So these will normally take care of all that. But the defrag “only” runs every week by default you might want to tweak it or create your own scheduled task in your environment if needed. In demos and labs we’re rather inpatient geeks so even the 5 minute interval for the check pointing mechanisms are to long so we run “Optimize-Volume -DriveLetter X –ReTrim” to get immediate gratification while testing. In real life it’s zero touch feature, you don’t need to baby sit it.

Fixed VHDX versus Dynamic VHDX

Apart from the fact that you’ll have no shrink on shutdown this optimization does nothing for the file size. The only benefit here is that the UNMAP can be passed to the physical storage where it can help if that supports it. At the virtual layer it doesn’t matter for a fixed sized VDHX disk.

Dynamic VHDX Disk

You’ll profit from the savings in storage when the dynamically expanding VHDX file doesn’t need to grow as much this. This reduces the overhead of expanding the disk, which is a performance benefit and it even helps your non thin provisioning capable storage go further.

Watch Senthil’s presentation (from around minute 20) to see the benefits in action. With VHDX, If you “shift delete” the files inside the VM, then run “Optimize-Volume -DriveLetter X –ReTrim” or the defrag job and then copy new files you’ll see that there is no additional file growth as long as you don’t exceed the current size of the VHDX. If you don’t do this both the VHD and VHDX file will grow.

But is another potential benefit why this might be important. Even with the block sizes that have been increased to have less overhead when growing dynamic VDHX files we still have to deal with fragmentation of the VHDX files on the storage where they live. The better/more empty blocks are reused, the less the dynamic files will have to grow. This means you’ll have less opportunity for fragmentation. Whether this compensates for potential of more fragmentation due to the shrinking when they are shutdown I don’t know. If all the performance improvements for dynamic disks are good enough will depend on your environment and needs. Defragmentation can help mitigate this but IO performance during the defragmentation process suffers. Do it or better, schedule it, wisely!

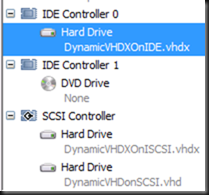

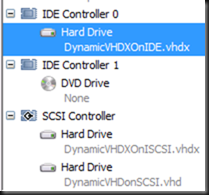

Virtual SCSI controller attached versus virtual IDE controller attached

What about a guest (boot) VHDX disk attached to an IDE controller? I see a lot of one disk virtual machines out there, so it would be a pity if it didn’t work for those and just for the one who have extras vSCSI disk attached.So let’s test this.

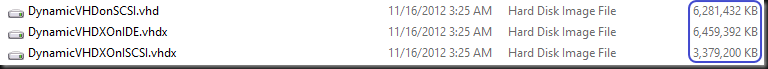

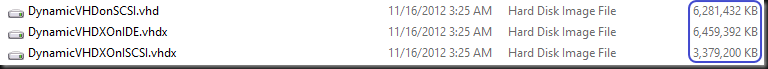

Below you see the disk size of the VHD and VHDX files and what type of controller they are attached to. As you can see this they had one or two 3.3 GB ISO files copied to them and where then “shift deleted”. The size of the VHD(X) files reflects the amount of data that they stored.

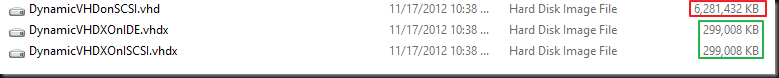

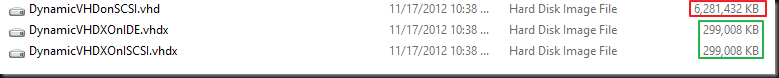

Now after running the defrag job or executing “Optimize-Volume -DriveLetter X –ReTrim” inside the VM you’ll see the results below after you shut down the VM

So as it turns out, the thin provisioning benefits it work with an IDE attached VHDX files as well! Yes inside a Windows Server 2012 virtual machine you get the UNMAP support with IDE attached VHDX disks to. Think of Hosting companies with many thousands single disk virtual machines who can leverage this as well. So this is something you might not expect when having watch the video as there they only talk about virtual SCSI/ FC controllers.

Conclusion

Doing tests like these are a bit artificial but they do demonstrate how the technology works. In real life it will translate into efficiencies over time, based on the data creation and deletion in your VHDX files. Think about hundreds or thousands of virtual machines in your environment leveraging this mechanism. Over time, on that scale, the amount of storage consumed will be reduced which results in better economies. Now leverage that together with thin provisioning support in Storage Spaces and you see that there are some very interesting scenarios to investigate. Some how it’s starting to look like you can have your cookie and eat it to  . You don’t need an expensive SAN to get these efficiencies at the physical storage layer, but if you have and use to have to mess around with sdelete or agents, it’s easy to see the benefit you get from this here as well.

. You don’t need an expensive SAN to get these efficiencies at the physical storage layer, but if you have and use to have to mess around with sdelete or agents, it’s easy to see the benefit you get from this here as well.

![clip_image001[10] clip_image001[10]](https://blog.workinghardinit.work/wp-content/uploads/2012/11/clip_image00110_thumb.png)