Introduction

SMB Direct RoCE Does Not Work Without DCB/PFC. “Yes”, you say, “we know, this is well documented. Thank you.” but before you sign of hear me out.

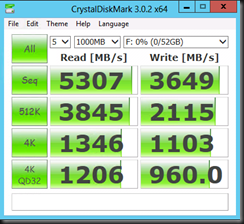

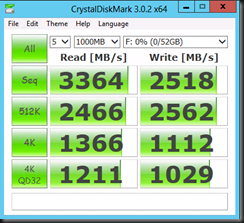

Recently I plugged to RoCE cards into some test servers and linked them to a couple of 10Gbps switches. I did some quick large file copy testing and to my big surprise RDMA kicked in with stellar performance even before I had installed the DCB feature, let alone configure it. So what’s the deal here. Does it work without DCB? Does the card fail back to iWarp? Highly unlikely. I was expecting it to fall back to plain vanilla 10Gbps and not being used at all but it was. A short shout out to Jose Barreto to discuss this helped clarify this.

DCB/PFC is a requirement RoCE

The more busy the network gets the faster the performance will drop. Now in our test scenario we had two servers for a total of 4 RoCE ports on the network consisting of a beefy 48 port 10Gbps switches. So we didn’t see the negative results of this here.

DCB (Data Center Bridging) and Priority Flow Control are considered a requirement for any kind of RoCE deployment. RDMA with RoCE operates at the Ethernet layer. That means there is no overhead from TCP/IP, which is great for performance. This is the reason you want to use RDMA actually. It also means it’s left on it’s own to deal with Ethernet-level collisions and errors. For that it needs DCB/PFC other wise you’ll run into performance issues due to a ton of retries at the higher network layers.

The reason that iWarp doesn’t require DCB/PCF is that it works at the TCP/IP level also offloaded by using a TCP/IP stack on the NIC instead of the OS. So errors are handled by TCP/IP at a cost: iWarp results in the same benefits as RoCE but it doesn’t scale that well. Not that iWarp performance is lousy, far form! Mind you, for bandwidth management reasons,you’d be better of using DCB or some form of QoS as well.

Conclusion

So no, not configuring DCB on your servers and the switches isn’t an option, but apparently it isn’t blocked either so beware of this. It might appear to be working fine but it’s a bad idea. Also don’t think it defaults back to iWarp mode, it doesn’t, as one card does one thing not both. There is no shortcut. RoCE RDMA does not work error free out of the box so you do have the install the DCB feature and configure it together with the switches.