Upgrading MySQL 5.7.21 to 8.0.11 for WordPress 4.9.6

Upgrading MySQL 5.7.21 to 8.0.11 for WordPress 4.9.6 is actually quite easy (see the official MySQL documentation). As I keep my WordPress version and plugins up to date regularly, it’s not a big deal for me.

Prepare the upgrade

- Back up your VM

- Back up your MySQL database

- Verify the restores work

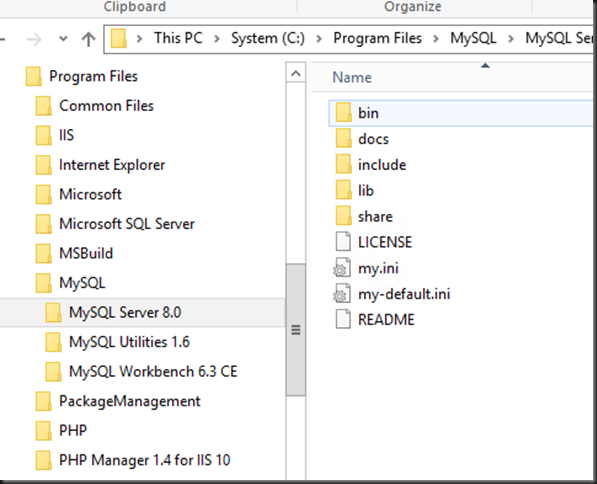

- I also copy the data folder (in my case C:\MySQLDataFolder\Data), which I keep separate from the MySQL installation files as it helps me with upgrades. The only things in the C:\Program Files\MySQL\MySQL Server X.Y folder (I use the 64-bit version) are the MySQL application files and the my.ini file.

- Create the C:\Program Files\MySQL\MySQL Server 8.0 folder (for the 64-bit version of MySQL; otherwise use the C:\Program Files (x86) folder). Copy the content of the zip file with the MySQL files and folders into it.
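The data folder copy from the checklist above can be scripted. This is a minimal sketch in Python, not a full backup tool: the function name `backup_data_folder` is made up for illustration, and it assumes the MySQL service is stopped while the copy runs so that no file changes halfway through.

```python
import shutil
from pathlib import Path


def backup_data_folder(source: str, destination: str) -> int:
    """Copy the whole MySQL data folder and return the number of files copied.

    Assumes the MySQL service is stopped so no file changes mid-copy.
    Refuses to overwrite an existing destination.
    """
    src, dst = Path(source), Path(destination)
    if dst.exists():
        raise FileExistsError(f"{dst} already exists, pick a fresh folder")
    shutil.copytree(src, dst)

    # Compare relative file lists so a partial copy is noticed right away.
    original = sorted(p.relative_to(src) for p in src.rglob("*") if p.is_file())
    copied = sorted(p.relative_to(dst) for p in dst.rglob("*") if p.is_file())
    if original != copied:
        raise RuntimeError("backup is incomplete, file lists differ")
    return len(copied)
```

A call could look like `backup_data_folder(r"C:\MySQLDataFolder\Data", r"D:\Backup\Data-5.7.21")`, where the second path is only a placeholder for wherever you keep your spare copies.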

I then copy the my.ini file from the current installation (C:\Program Files\MySQL\MySQL Server 5.7) into the C:\Program Files\MySQL\MySQL Server 8.0 folder as well. It might be necessary to edit this file a bit more later, but I start out with an exact copy and one change to point to the new basedir: 5.7 becomes 8.0 in this case.

As you notice, I don’t keep the MySQL data and the ini file in the ProgramData folder on Windows. It’s fine to leave it all there if you prefer that.

# Path to installation directory. All paths are usually resolved relative to this.

basedir="C:/Program Files/MySQL/MySQL Server 8.0/"
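If you prefer not to edit the copied my.ini by hand, the basedir change can be applied to the file content with a few lines of Python. This is a sketch under assumptions: the helper name `point_basedir_to` is hypothetical, and it only touches active basedir lines, leaving commented ones alone.

```python
import re

# An active "basedir = ..." line; commented lines start with # or ; and are skipped.
BASEDIR_LINE = re.compile(r"^([ \t]*basedir[ \t]*=[ \t]*)[^\r\n]*", re.MULTILINE)


def point_basedir_to(ini_text: str, new_basedir: str) -> str:
    """Return ini_text with every active basedir line set to new_basedir."""
    # A function is used as replacement so backslashes in paths stay literal.
    return BASEDIR_LINE.sub(lambda m: f'{m.group(1)}"{new_basedir}"', ini_text)
```

You would read the copied my.ini, pass its text through the function with "C:/Program Files/MySQL/MySQL Server 8.0/" and write the result back. Keep the original file around until the service has started successfully.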

Perform the upgrade

- Stop the MySQL service:

NET STOP MYSQL

- I then remove the service from the OS:

mysqld --remove

- Install the MySQL service again, now with the new version path. As you see, I explicitly specify the default name of the service as MySQL and point it to where my ini file lives, so I know which ini file this service uses.

"C:\Program Files\MySQL\MySQL Server 8.0\bin\mysqld.exe" --install MySQL --defaults-file="C:\Program Files\MySQL\MySQL Server 8.0\my.ini"

- Start the MySQL service:

NET START MYSQL

If all goes well, that’s it: your new MySQL version is running. If so, we can jump to the part where you run the upgrade command to upgrade the system and user databases. You can verify that all went well in the error log. Its name (mine is called WORKINGHARDINIT.err) is defined in the my.ini file and it can be found under the data folder. Any problems will be logged there as well. This approach makes it easy to go back if the service won’t start, as all files of the previous MySQL install are still there and you just have to install it as a service again.
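Reading the error log by eye works fine, but a small filter helps when the file is long. The sketch below assumes the MySQL 8.0 log layout in which each entry carries a level, an error code and a subsystem in square brackets, as in the error messages quoted in this post. The name `find_problems` is hypothetical, and lines without an error code are simply skipped.

```python
import re
from typing import List, Tuple

# Matches the "[ERROR] [MY-011071] [Server] message" part of a log line.
LOG_ENTRY = re.compile(r"\[(ERROR|Warning)\]\s+\[(MY-\d+)\]\s+\[(\w+)\]\s+(.*)")


def find_problems(log_text: str) -> List[Tuple[str, str, str, str]]:
    """Return (level, code, subsystem, message) for each error or warning."""
    problems = []
    for line in log_text.splitlines():
        match = LOG_ENTRY.search(line)
        if match:
            problems.append(match.groups())
    return problems
```

Feed it the content of your .err file and print the result; an empty list means no entry in that format was logged as an error or warning.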

Most common issues I have seen

My.ini file mistakes

The thing that goes wrong most often and causes the MySQL service not to start, based on the support I have given to some people (including myself), is this: certain options in your ini file are not compatible with the MySQL version you just installed.

Specifically for MySQL 8.0, make sure you comment out query_cache_size=0 (put a # in front of it) or remove the entry from the my.ini file. The query cache was removed in MySQL 8.0, so the server no longer knows this variable.

#query_cache_size=0

If you don’t, the MySQL service won’t start. The error logged is:

[ERROR] [MY-011071] [Server] unknown variable 'query_cache_size=0'

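Next to that, if you have the sql-mode entry in there, it sometimes causes issues, so comment out that line as well, or at least remove the offending entry, which might take some trial and error. In my line below, NO_AUTO_CREATE_USER is the likely culprit, as MySQL 8.0 no longer supports that mode.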

# sql-mode="STRICT_TRANS_TABLES,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION"

As you can see, the error log points to the cause of many issues with the MySQL service failing to start, so do look there. That’s how you find out what to do.
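The two my.ini problems described above can be spotted before you start the service. The following Python sketch is not a complete compatibility check: it only knows about query_cache_size and about the NO_AUTO_CREATE_USER entry in sql-mode, and the latter is my reading of which entry in that line MySQL 8.0 rejects. The function name `find_incompatible_settings` is hypothetical.

```python
from typing import List

# Settings from this post that stop MySQL 8.0 from starting.
REMOVED_OPTIONS = ("query_cache_size",)
# Assumption: this is the sql-mode entry that 8.0 no longer accepts.
REMOVED_SQL_MODES = ("NO_AUTO_CREATE_USER",)


def find_incompatible_settings(ini_text: str) -> List[str]:
    """Return a human-readable finding for each suspicious active line."""
    findings = []
    for number, raw in enumerate(ini_text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith(("#", ";", "[")):
            continue  # skip blanks, comments and section headers
        name, _, value = line.partition("=")
        # Option names may use dashes or underscores, so normalise them.
        name = name.strip().lower().replace("-", "_")
        if name in REMOVED_OPTIONS:
            findings.append(f"line {number}: {name} is not accepted by MySQL 8.0")
        elif name == "sql_mode":
            modes = [m.strip().upper() for m in value.strip().strip('"\'').split(",")]
            for mode in modes:
                if mode in REMOVED_SQL_MODES:
                    findings.append(f"line {number}: sql_mode contains {mode}")
    return findings
```

Run it on the text of the copied my.ini and fix whatever it lists. An empty result does not guarantee the service will start, so the error log remains the final authority.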

Data file ibdata1 is not writable

Sometimes you can see an error like this:

[ERROR] [MY-012271] [InnoDB] InnoDB: The innodb_system data file 'ibdata1' must be writable

The two most common reasons are that the file is locked because you started mysqld.exe manually and did not close it, or that a process you don’t know about or cannot find is accessing it. A restart is then normally the easiest solution.

Sometimes people run into this due to permission problems (but with an upgrade this should not really happen). The MySQL service account (the default one or the one you created and assigned) needs full control over the data folder. Check that.
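A quick way to see whether ibdata1 can be opened for writing at all is to try it without changing anything. This is only a rough sketch with a hypothetical helper name, and it has a clear limit: it tests the account that runs the script, not the MySQL service account, so a positive result does not prove the service has the permissions it needs.

```python
def can_open_for_writing(path: str) -> bool:
    """Try to open path for read/write without changing its content."""
    try:
        # "r+b" opens an existing file for update and never truncates it.
        with open(path, "r+b"):
            return True
    except OSError:
        # Covers a missing file, a lock held by another process on Windows,
        # and missing permissions for the account running this script.
        return False
```

Run it with the MySQL service stopped, for example on C:\MySQLDataFolder\Data\ibdata1. If it returns False while the file exists, look for a stray mysqld.exe process or check the permissions on the data folder.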

Thinking you lost your blog

Another issue might be that MySQL is running but WordPress can’t find your blog. This can be caused by one or more missing files, such as ibdata1, in the data folder root (hence I always make a copy of the entire data folder for safekeeping before I start an upgrade). In that case you’ll see a lot of warnings similar to this in the error log:

[Warning] InnoDB: Cannot open table wordpress592/wp_options from the internal data dictionary of InnoDB though the .frm file for the table exists.

So if these files are gone or corrupted, place them back from your spare copy or grab them from a backup. Most often these files are gone because of some bad advice to delete them to fix a permission issue, or because of a mistake. If they are not there, they are recreated with the correct permissions, but that causes the above issue.
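To find out which files went missing, you can compare the live data folder with the spare copy. The Python sketch below uses a hypothetical function name and only checks whether each file exists, not whether its content is intact. Treat the output as a hint: an upgrade can legitimately remove or rename files, so the comparison is most useful before the new version has touched the folder.

```python
from pathlib import Path
from typing import List


def missing_from_data_folder(spare_copy: str, data_folder: str) -> List[str]:
    """List files present in the spare copy but absent from the data folder."""
    spare, live = Path(spare_copy), Path(data_folder)
    missing = []
    for path in sorted(spare.rglob("*")):
        if path.is_file():
            relative = path.relative_to(spare)
            if not (live / relative).is_file():
                missing.append(relative.as_posix())
    return missing
```

If ibdata1 shows up in the result, stop the service and restore the file from the spare copy before doing anything else.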

Once you know the basics and you are careful, an upgrade is mostly straightforward for most IT enthusiasts. Once MySQL is up and running and you can access your WordPress database again, it’s time for the last step: upgrading the databases.

Perform the MySQL upgrade run

Finally, whenever you have done an upgrade, don’t forget to run mysql_upgrade.exe. This will take care of any upgrades needed to your system and user databases. Until you do, you’ll see related messages in the error log. If you don’t look there you might not even notice much, but it pays to finish the complete upgrade process.

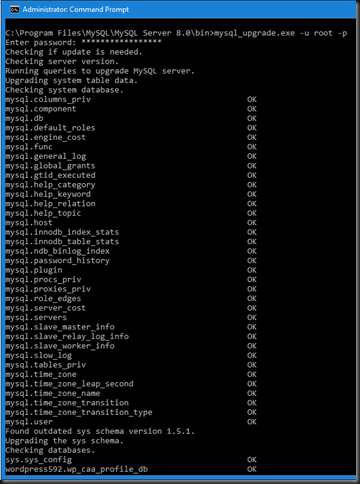

To do so, from an elevated command prompt, navigate to C:\Program Files\MySQL\MySQL Server 8.0\bin and run mysql_upgrade.exe -u root -p

Then enter the password and the upgrade process will kick off. How long this takes depends on the amount of work the script has to do.

It first deals with the system database. When it finds out-of-date items, it takes care of those, like the sys schema in this case.

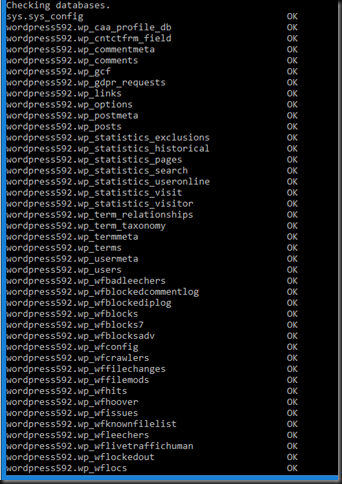

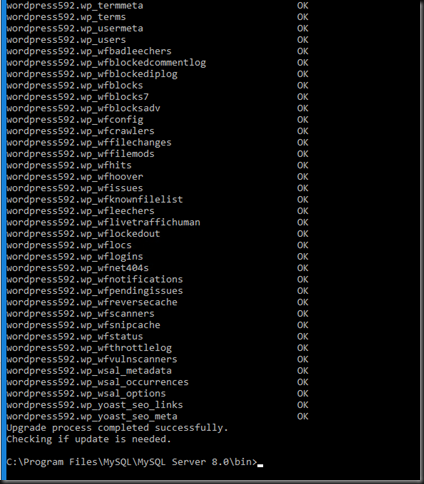

Next it checks the user databases (in my case the WordPress database),

… not much work to do here clearly.

That’s it. You’re all up to date. I normally do this once or twice per year to make sure the blog server is up to date (performance, security, capabilities) and I don’t build up tech debt that leads to more involved and risky upgrade scenarios. With a free community edition, that’s a zero-cost game, bar a little effort on your part.

As a final note, when everything has proven to be up and running as it should, do some housekeeping and clean up the files and folders of previous MySQL installs that you no longer need.