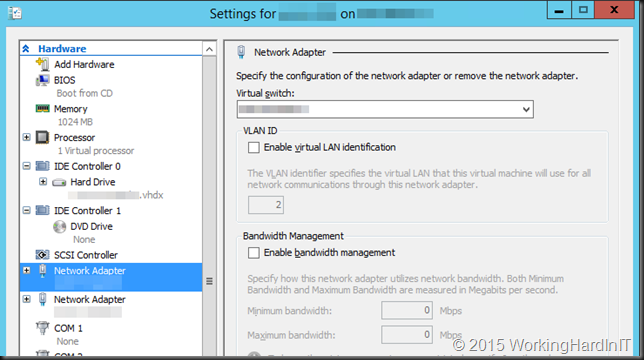

During some troubleshooting recently I needed to find all virtual machines with a duplicate static MAC address on a Hyper-V cluster with PowerShell. I didn’t feel like doing this via the GUI for obvious reasons. I needed this because, while trying to find out why a VM lost connectivity on one of its two NICs, I discovered that NIC had a static MAC address. No one had a good reason for this VM to have a static MAC address, so I stopped the VM, switched that NIC to a dynamic MAC address and started it again. All was well afterwards.

But I still needed to find out what potentially caused the issue. My guess was a duplicate MAC address (what else?). The most likely candidates for holding that duplicate MAC address were one or more other VMs. So here’s some PowerShell that will list all clustered VMs that have a static MAC address.

Get-ClusterGroup | ? {$_.GroupType -eq 'VirtualMachine'} |
Get-VM | Get-VMNetworkAdapter | Where-Object {$_.DynamicMacAddressEnabled -eq $False}

Let’s extend the code a bit and search for MAC addresses that occur more than once:

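# Wrapping the pipeline in @() keeps the result an array, even with zero or one NIC
$AllNicsWithStaticMAC = @(Get-ClusterGroup | ? {$_.GroupType -eq 'VirtualMachine'} |
Get-VM | Get-VMNetworkAdapter | Where-Object {$_.DynamicMacAddressEnabled -eq $False})
# Group the NICs by MAC address and keep only the addresses used more than once
$AllNicsWithStaticMAC | Group-Object MacAddress | ? {$_.Count -gt 1} | ft * -AutoSize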

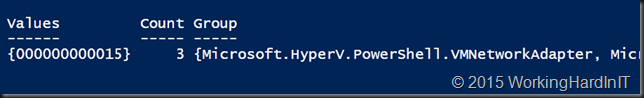

The result is as follows

So in our lab simulation we have found a static MAC address that occurs 3 time!
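If you want to see how the grouping step behaves without touching a cluster, here is a minimal sketch that runs against made-up data. The VM names, adapter names and MAC addresses are hypothetical stand-ins for what `Get-VMNetworkAdapter` returns. Only the `Group-Object` and `Where-Object` logic matches the code above.

```powershell
# Hypothetical sample data standing in for Get-VMNetworkAdapter output
$SampleNics = @(
    [pscustomobject]@{ VMName = 'VM01'; Name = 'Network Adapter'; MacAddress = '00155D010A01' }
    [pscustomobject]@{ VMName = 'VM02'; Name = 'Network Adapter'; MacAddress = '00155D010A01' }
    [pscustomobject]@{ VMName = 'VM03'; Name = 'Network Adapter'; MacAddress = '00155D010A02' }
    [pscustomobject]@{ VMName = 'VM04'; Name = 'Backup';          MacAddress = '00155D010A01' }
)

# Group by MAC address and keep only the groups with more than one member
$Duplicates = @($SampleNics | Group-Object -Property MacAddress | Where-Object { $_.Count -gt 1 })

# Print one line per duplicated MAC address with the VMs that use it
foreach ($Dup in $Duplicates) {
    "{0} is used {1} times by: {2}" -f $Dup.Name, $Dup.Count, ($Dup.Group.VMName -join ', ')
}
```

Each object that `Group-Object` emits carries the MAC address in `Name`, the number of hits in `Count`, and the original NIC objects in `Group`, which is what lets you get back to the VM names.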

If you have 200 VMs running on that cluster, you might not want to go over the list manually. Not that I expect you to have 200 VMs with the same MAC address, but you do want to find the VMs that share a MAC address quickly. For this we adapt the above PowerShell a bit:

# Wrapping the pipeline in @() keeps the result an array, even with zero or one NIC
$AllNicsWithStaticMAC = @(Get-ClusterGroup | ? {$_.GroupType -eq 'VirtualMachine'} |
Get-VM | Get-VMNetworkAdapter | Where-Object {$_.DynamicMacAddressEnabled -eq $False})
# Summary: one row per MAC address that occurs more than once
$AllNicsWithStaticMAC | Group-Object MacAddress | ? {$_.Count -gt 1} | ft * -AutoSize
if ($AllNicsWithStaticMAC.Count -gt 0)
{
  # Detail: every NIC that shares a MAC address, grouped by that MAC address
  ($AllNicsWithStaticMAC | Group-Object MacAddress |
  ? {$_.Count -gt 1}).Group | ft MacAddress,Name,VMName -GroupBy MacAddress -AutoSize
}
else
{
  "No static MAC addresses were found on your cluster"
}

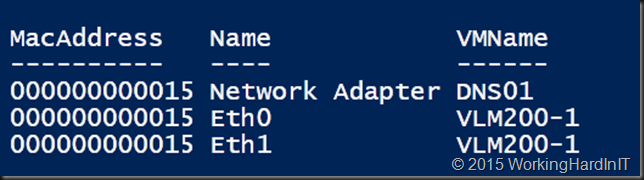

This results in a nice list of the duplicate MAC addresses, showing on which network adapter each one sits and on which virtual machine. The output is grouped by (duplicate) MAC address and lists the network adapter name and VM name for each entry.

The lab demo is a bit fabricated, as I’m not creating duplicate MAC addresses on my lab clusters just for this blog.

I hope this helps some of you when you need to find all virtual machines with a duplicate static MAC address on a Hyper-V cluster with PowerShell. You can adapt the code to look only for duplicate dynamic MAC addresses, or for both static and dynamic MAC addresses. You get the gist. Thank you for reading.
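As a rough sketch of that adaptation, the filter on `DynamicMacAddressEnabled` can be made switchable. The function name `Get-DuplicateMacAddress` and its `-Type` parameter are my own invention for illustration, not part of the Hyper-V module, and the sample objects below are made up so the sketch runs without a cluster.

```powershell
# Hypothetical helper: returns the MAC address groups that occur more than once
function Get-DuplicateMacAddress {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [object[]]$NetworkAdapter,

        [ValidateSet('Static', 'Dynamic', 'All')]
        [string]$Type = 'All'
    )

    # Pick the NICs to inspect based on how their MAC address is assigned
    $Filtered = switch ($Type) {
        'Static'  { $NetworkAdapter | Where-Object { -not $_.DynamicMacAddressEnabled } }
        'Dynamic' { $NetworkAdapter | Where-Object { $_.DynamicMacAddressEnabled } }
        'All'     { $NetworkAdapter }
    }

    # Drop empty entries, group by MAC address, keep only the duplicates
    @($Filtered) | Where-Object { $_ } |
        Group-Object -Property MacAddress | Where-Object { $_.Count -gt 1 }
}

# Made-up sample data standing in for Get-VMNetworkAdapter output
$Sample = @(
    [pscustomobject]@{ VMName = 'VM01'; Name = 'NIC1'; MacAddress = '00155D010A01'; DynamicMacAddressEnabled = $false }
    [pscustomobject]@{ VMName = 'VM02'; Name = 'NIC1'; MacAddress = '00155D010A01'; DynamicMacAddressEnabled = $true }
    [pscustomobject]@{ VMName = 'VM03'; Name = 'NIC1'; MacAddress = '00155D010A02'; DynamicMacAddressEnabled = $true }
    [pscustomobject]@{ VMName = 'VM04'; Name = 'NIC1'; MacAddress = '00155D010A02'; DynamicMacAddressEnabled = $true }
)

$DupAll     = @(Get-DuplicateMacAddress -NetworkAdapter $Sample -Type All)
$DupStatic  = @(Get-DuplicateMacAddress -NetworkAdapter $Sample -Type Static)
$DupDynamic = @(Get-DuplicateMacAddress -NetworkAdapter $Sample -Type Dynamic)

# Show the NICs behind every duplicate, static or dynamic
$DupAll.Group | Format-Table MacAddress, Name, VMName -GroupBy MacAddress -AutoSize
```

On a real cluster you would feed it the NICs instead of the sample data, for example `@(Get-ClusterGroup | ? {$_.GroupType -eq 'VirtualMachine'} | Get-VM | Get-VMNetworkAdapter)`. Note that with `-Type All` a static address that collides with a dynamic one shows up as well, which neither filter on its own would catch.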