In The Mysterious Case of Infrequent Network Connectivity Issues on 2 Hyper-V VMs Out of 40 Guests I share a troubleshooting experience with you. I was asked if I could take a look at a weird but very infrequent network issue with 2 VMs (W2K12R2) on a cluster (W2K12R2) running over 40 guests. Sure! These 2 virtual machines worked well 98% of the time. About 2% of the time they just fell off the network, sometimes both vNICs, sometimes both VMs. When I asked what they meant by that, they said the VMs were unreachable. They couldn’t find anything wrong, as all other VMs ran fine with the same configuration on the same hosts. They told me there was nothing in the event logs of either the hosts or the guests to explain any of this. A reboot or 2, or even a live migration, sometimes fixed the issue. Normally the monthly patch cycle prevented too many problems with connectivity. Pretty weird! Usually bad firmware, bad drivers or bad offload feature support can cause issues like this, but that would not target just 2 out of 40 VMs that have the same settings.

It was only these 2 VMs, no matter what host they were running on in the cluster. As their vNICs shared the same 2 vSwitches (teamed) with all the other VMs that never had issues, I was pretty sure the configuration of the switches, NICs, teams and vSwitches was OK. I verified this for due diligence and it checked out on all hosts as expected. All firmware, drivers and offload settings were in order.

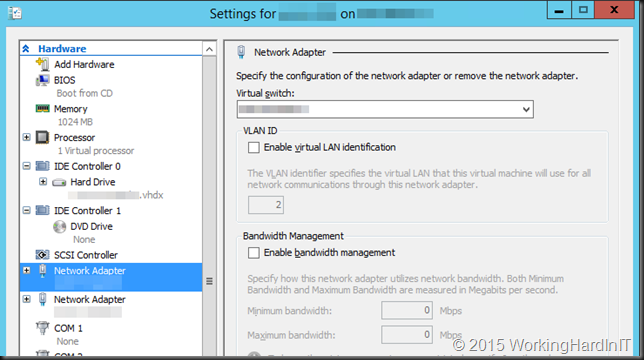

I also checked the VLAN settings of the vNICs themselves for those two VMs and compared them to a couple of VMs that had no issues whatsoever. I found them to be identical.

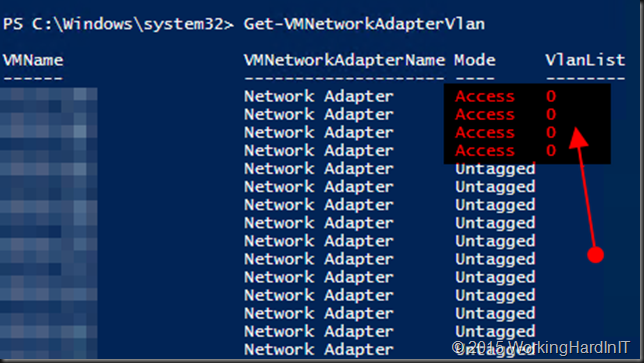

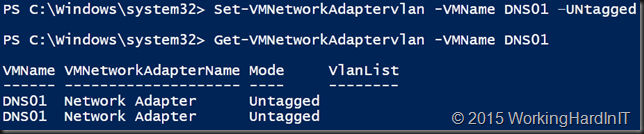

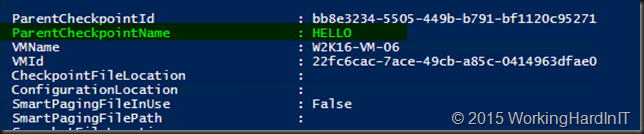

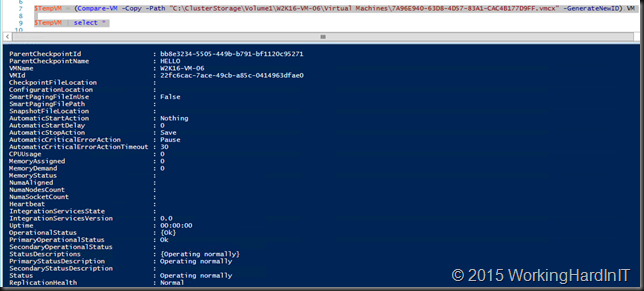

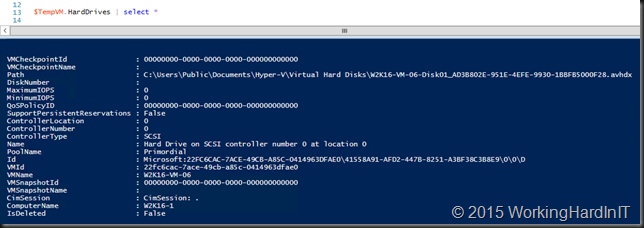

At first everything seemed fine and I was stumped. The event logs, both in the VMs and on the hosts, were squeaky clean. After that exercise I started running some PowerShell cmdlets to take a look at the configuration of the VMs on the hosts. You see, the GUI does not expose all possible configurations and I wanted to look at every configuration option. That’s when I found the following.

The vNICs for the 2 offending VMs were in Access mode while the VlanList had a single value, 0. That basically means untagged: VLAN ID 0 is reserved for priority tagging, and its use is not 100% standard across switch vendors. This just didn’t compute. In the GUI we did not see this; there, things looked normal.
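A check like this can be scripted so it does not depend on spotting the odd value by eye. What follows is a minimal sketch, not the exact commands I ran at the time. It assumes an elevated PowerShell session on each Hyper-V host in turn, with the Hyper-V module loaded. The property names used in the filter (`OperationMode`, `AccessVlanId`, `ParentAdapter`) are the ones I would expect on the objects returned by `Get-VMNetworkAdapterVlan`; confirm them with `Get-Member` on your version before relying on the result.

```powershell
# List every vNIC on this host that is in Access mode with VLAN ID 0,
# a combination the GUI neither shows nor allows you to set.
Get-VM |
    Get-VMNetworkAdapter |
    Get-VMNetworkAdapterVlan |
    Where-Object { $_.OperationMode -eq 'Access' -and $_.AccessVlanId -eq 0 } |
    Select-Object @{ Name = 'VMName';  Expression = { $_.ParentAdapter.VMName } },
                  @{ Name = 'Adapter'; Expression = { $_.ParentAdapter.Name } },
                  OperationMode,
                  AccessVlanId
```

An empty result on a host means no vNIC of a VM currently running there has this combination. Since VMs move around in a cluster, the check needs to be run on every node.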

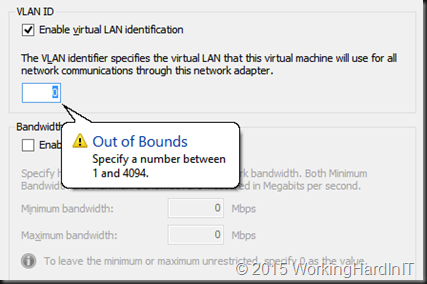

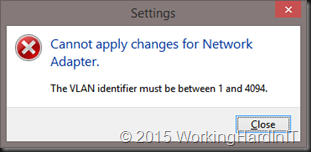

You cannot even set this in the GUI; it won’t allow you.

But PowerShell does allow you to make this configuration with the following command, so maybe that’s what happened.

Set-VMNetworkAdapterVlan -VMName DNS01 -Access -VlanId 0

No one knew, and I can’t tell you either. But I tested it and verified that this command does run and makes that configuration without any complaint. Weird. Anyway, I resolved the issue by running the following command.

Set-VMNetworkAdapterVlan -VMName DNS01 -Untagged
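If more than one vNIC is affected, the same fix can be applied to all of them in one pass. This is only a sketch under the same assumptions as before (elevated session on the Hyper-V host, property names `OperationMode`, `AccessVlanId` and `ParentAdapter` as exposed by `Get-VMNetworkAdapterVlan`). The `$suspect` variable is just an illustrative name. The `-WhatIf` switch is left in so nothing changes until you have reviewed what would be touched.

```powershell
# Collect the vNICs on this host that are in Access mode with VLAN ID 0.
$suspect = Get-VM | Get-VMNetworkAdapter | Get-VMNetworkAdapterVlan |
    Where-Object { $_.OperationMode -eq 'Access' -and $_.AccessVlanId -eq 0 }

foreach ($vlan in $suspect) {
    # Dry run only: remove -WhatIf to actually switch the adapter to untagged.
    Set-VMNetworkAdapterVlan -VMNetworkAdapter $vlan.ParentAdapter -Untagged -WhatIf
}

# Afterwards, check the resulting mode for one of the VMs (DNS01 is the example used above).
Get-VMNetworkAdapterVlan -VMName DNS01
```

Once the change is really applied, the last command should report the adapter in Untagged mode instead of Access.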

The rare connectivity issue disappeared and all was well 100% of the time. That’s how The Mysterious Case of Infrequent Network Connectivity Issues on 2 Hyper-V VMs Out of 40 Guests came to a happy end.