Introduction

In this blog series we’ll walk you through the process of migrating a Windows Server 2008 R2 Hyper-V Cluster to a Windows Server 2012 R2 Hyper-V Cluster in another Active Directory domain. You are now reading part 2.

- Migrating A Windows Server 2008 R2 Hyper-V Cluster To Windows Server 2012 R2 Hyper-V Cluster In Another Active Directory Domain – PART 1

- Migrating A Windows Server 2008 R2 Hyper-V Cluster To Windows Server 2012 R2 Hyper-V Cluster In Another Active Directory Domain – PART 2

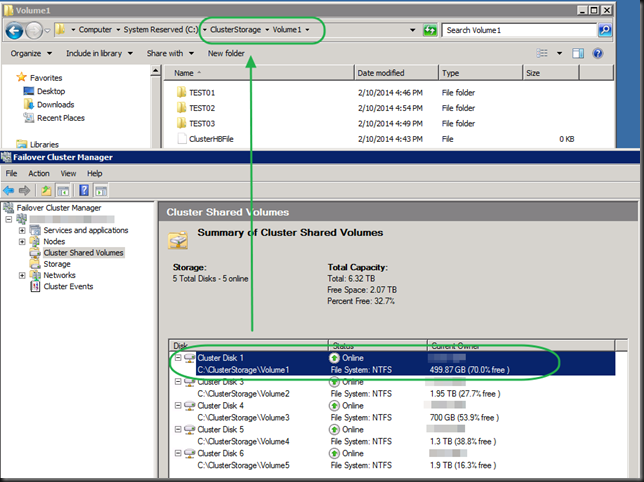

The source W2K8R2 Hyper-V cluster is a production environment. To test the migration procedure we created a new CSV on the source cluster with some highly available test virtual machines that have production-like network configurations (multihomed virtual machines). This allows us to demonstrate the soundness of the process on one CSV before we tackle the 4 production CSVs.

We left off in part 1 with the virtual machines on the CSV LUN we are going to migrate shut down. We’ll now continue the process of moving the CSV LUN from the old Windows Server 2008 R2 SP1 cluster to the new Windows Server 2012 R2 cluster. After that we can import the virtual machines, start them up, test that all is well and finally make them highly available in the cluster. Don’t forget to upgrade the integration components when all is done.

Removing the CSV LUN from the source W2K8R2 Hyper-V Cluster

Just leave the VMs where they are on the LUN, unpresent that LUN from the old source W2K8R2 Hyper-V cluster, and present it to the new W2K12R2 Hyper-V cluster. In our case we’re dealing with a cluster, so we use a CSV. So when the LUN is presented and added to the cluster, don’t forget to add it to the Cluster Shared Volumes.

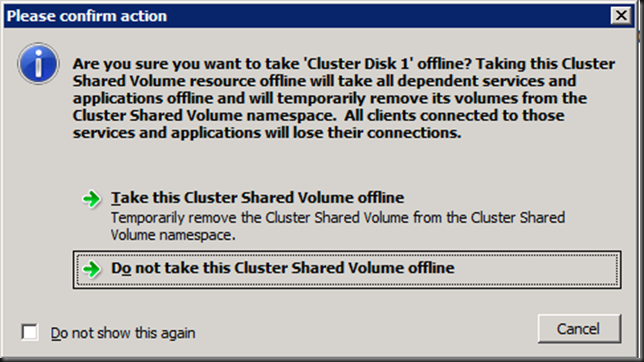

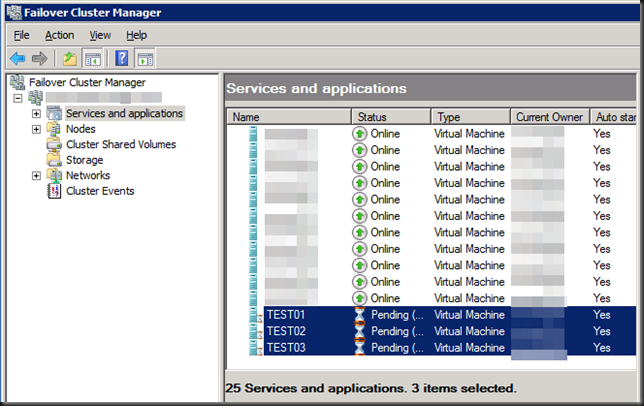

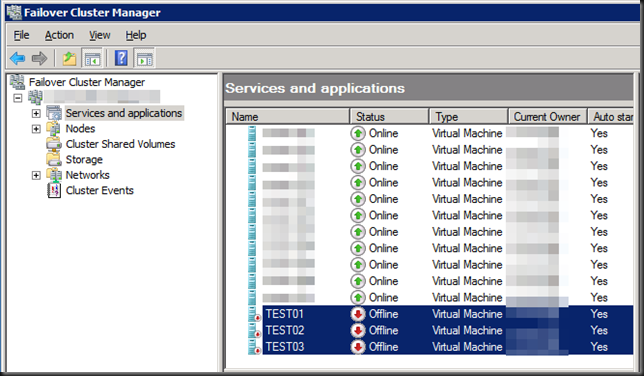

In Failover Cluster Manager, bring the CSV that you are migrating offline. Make sure you have the correct one (green circles/arrow) to avoid downtime in production.

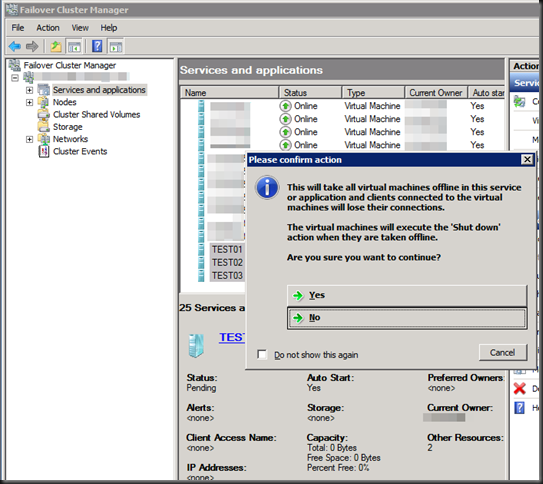

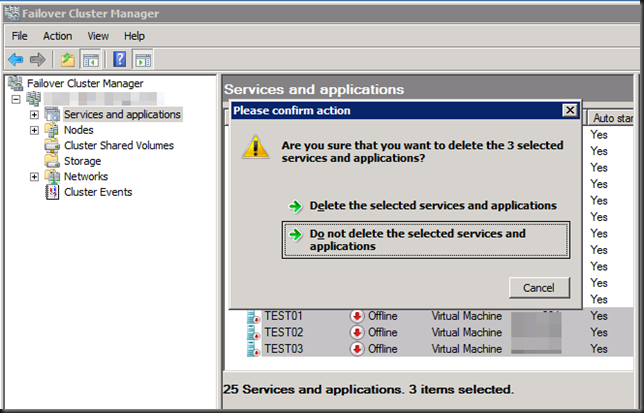

When asked if you’re sure, confirm this.

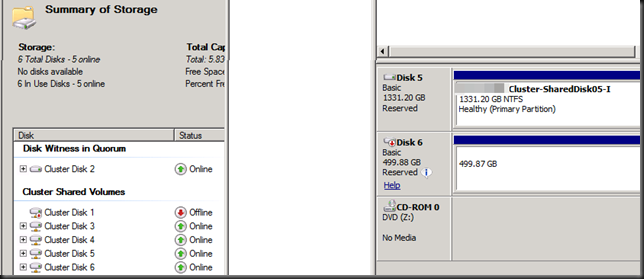

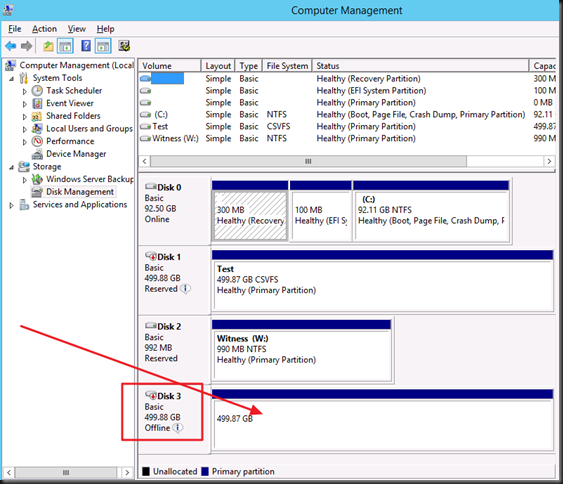

The CSV will be brought offline, which you can verify in Disk Management.

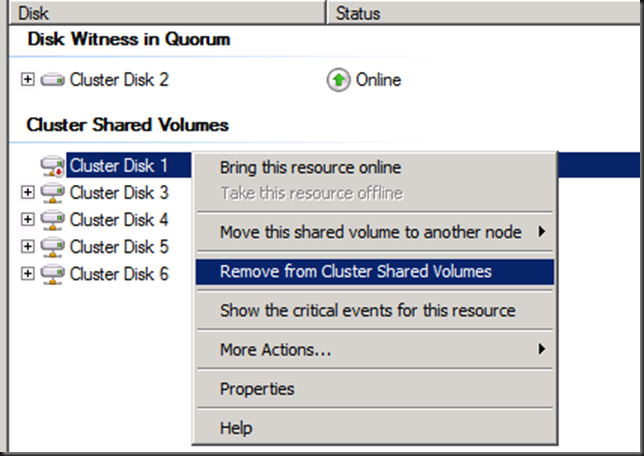

We’re going to do our cleanup right away. You could wait until after the migration, but we want the old cluster to look as clean and healthy as possible for the operations people so they don’t worry. So we go and remove this LUN from Cluster Shared Volumes.

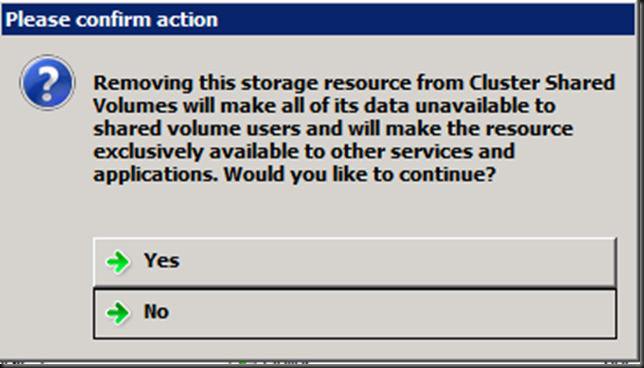

You’ll need to confirm the removal.

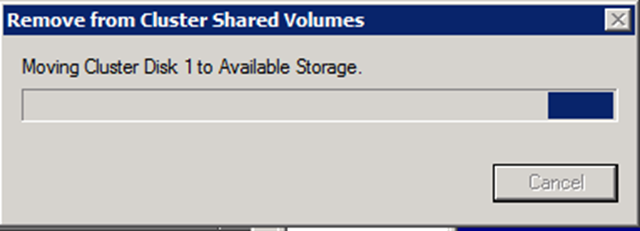

After that, your disk will be moved to Available Storage.

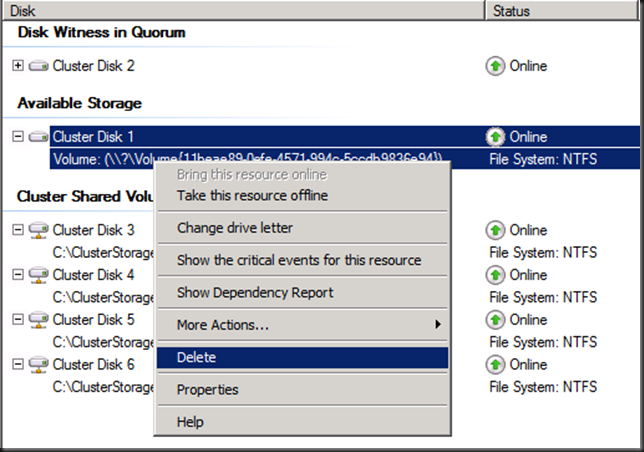

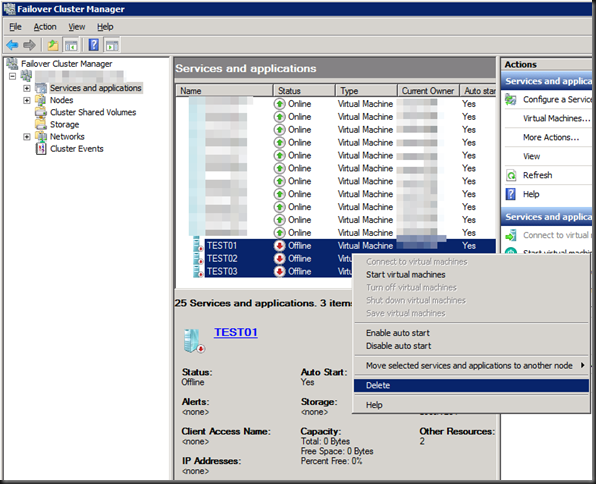

Do note that if you do this, it brings the LUN back online. As it’s still a clustered disk and there is no IO (all VMs are shut down), that’s OK. We’ll remove it from Available Storage (“Delete” isn’t as bad as it sounds in this context).

The storage will be gone from the cluster and offline in Disk Management.

On the SAN / Shared Storage

We create a SAN snapshot for fallback purposes (we throw it away after all has gone well). If you have this option I highly advise you to do so. It’s not easy to move back from Windows Server 2012 R2 to W2K8R2 in the unlikely event you would need to do so. It also protects the VMs against any errors and mishaps that might occur, provided you understand how to use the snapshot to recover.

On the SAN we unmap the CSV LUN from the old cluster. We could wait, but this is an extra protection against two clusters seeing the same storage.

On the SAN we map that CSV LUN to the new cluster. It will appear in Disk Management.
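The "unmap first, then map" order above can be expressed as a small sanity check on a runbook. The following is only a minimal sketch: the function name, the operation tuples and the cluster labels are hypothetical illustrations, not part of any SAN vendor's tooling.

```python
def first_double_mapping(operations, initially_mapped=()):
    """Return the index of the first operation that leaves the LUN mapped
    to more than one cluster, or None if that never happens.

    operations: list of (action, cluster) tuples, action is "map" or "unmap".
    initially_mapped: clusters that see the LUN before the runbook starts.
    """
    mapped = set(initially_mapped)
    for index, (action, cluster) in enumerate(operations):
        if action == "map":
            mapped.add(cluster)
        elif action == "unmap":
            mapped.discard(cluster)
        else:
            raise ValueError(f"unknown action: {action!r}")
        if len(mapped) > 1:
            return index  # two clusters would see the same storage here
    return None


# Hypothetical runbooks for one CSV LUN.
safe_order = [("unmap", "old-cluster"), ("map", "new-cluster")]
risky_order = [("map", "new-cluster"), ("unmap", "old-cluster")]

print(first_double_mapping(safe_order, initially_mapped=["old-cluster"]))
print(first_double_mapping(risky_order, initially_mapped=["old-cluster"]))
```

The safe order never exposes the LUN to both clusters, while the risky order does so at its very first step.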

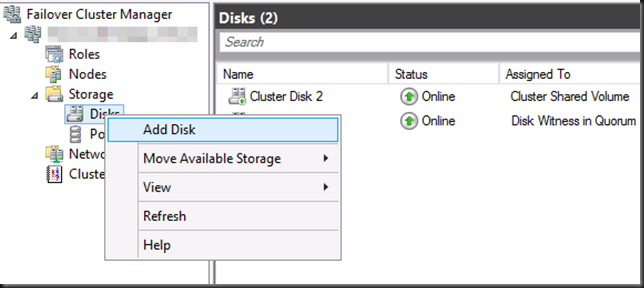

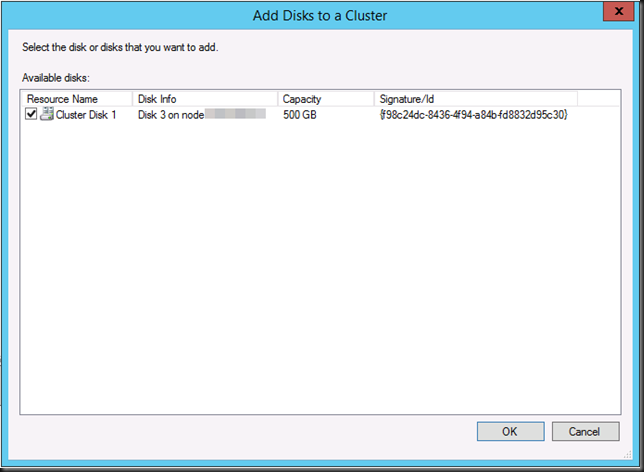

We add this disk to the new cluster.

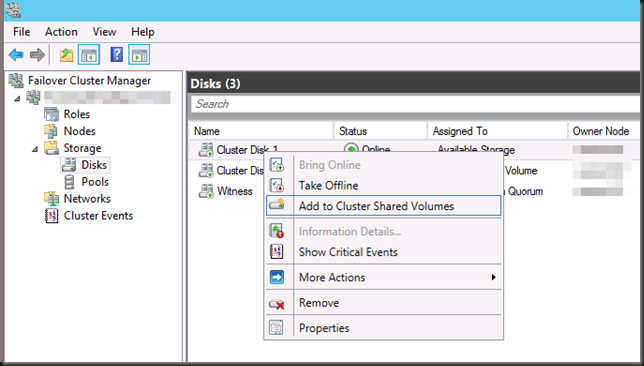

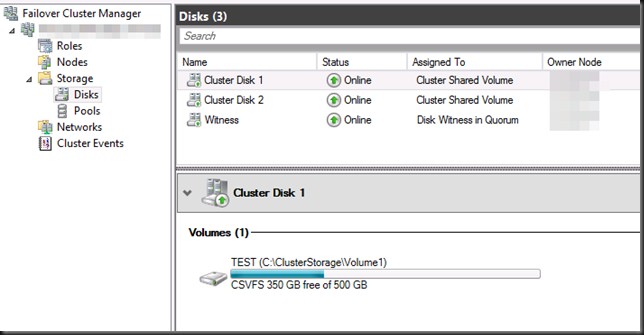

We add it to the Cluster Shared Volumes on the new cluster, which brings it online.

It uses the default naming convention of clustered disks. So this is the moment to change the name if you need or want to do so.

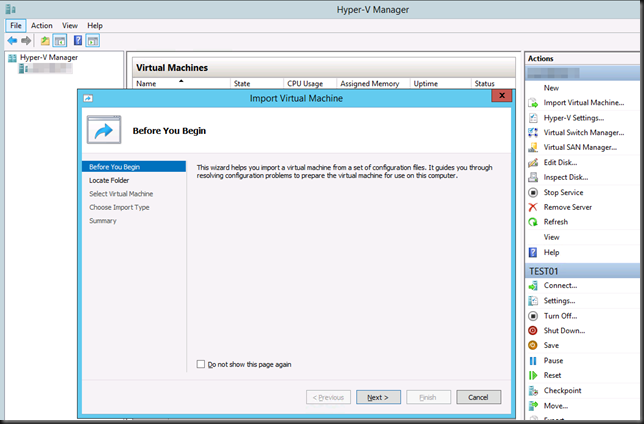

So now it’s time to go to Hyper-V Manager and do the actual import.

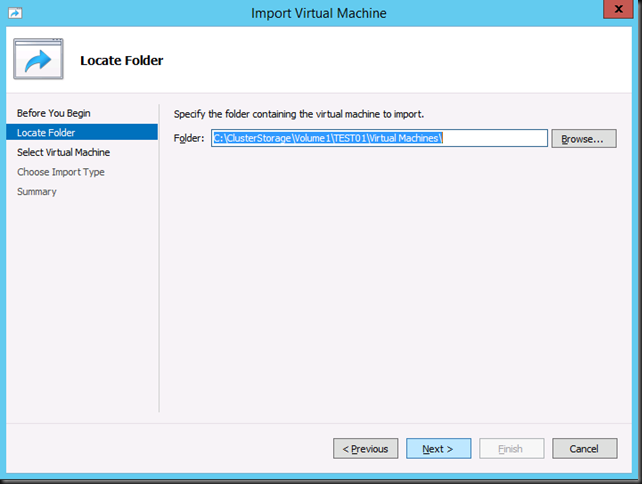

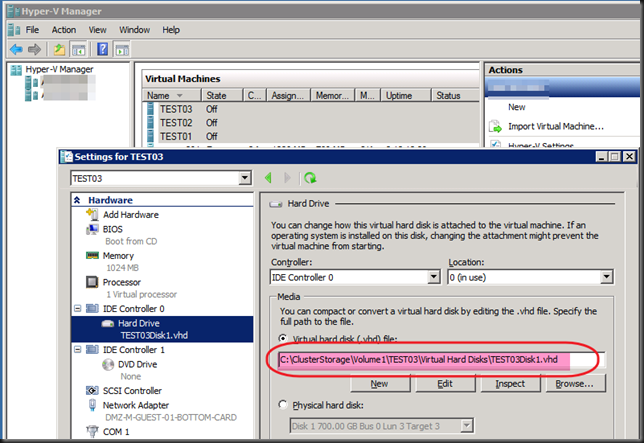

Navigate to the folder where your Hyper-V virtual machine configuration lives. This location can be central for all VMs or individual per VM, depending on how the virtual machines were organized on the old source cluster. In our example it is the latter. Also note that we only have one CSV involved per VM here, so it’s easy. Otherwise you will need to move multiple CSVs across together: all the ones the VM or VMs depend on.
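When VMs do span several CSVs, it helps to work out up front which CSVs have to travel together. The sketch below assumes you have already collected, by whatever means, a mapping from VM name to the CSVs it uses. The function name and the sample inventory are hypothetical.

```python
from collections import defaultdict


def csv_migration_groups(vm_to_csvs):
    """Group CSVs that must move together because at least one VM spans them.

    vm_to_csvs: dict mapping a VM name to an iterable of CSV names it uses.
    Returns a sorted list of sorted CSV-name lists, one list per group.
    """
    parent = {}

    def find(csv):
        # Union-find lookup with path compression.
        parent.setdefault(csv, csv)
        while parent[csv] != csv:
            parent[csv] = parent[parent[csv]]
            csv = parent[csv]
        return csv

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for csvs in vm_to_csvs.values():
        csvs = list(csvs)
        for csv in csvs:
            find(csv)  # register every CSV, even when a VM uses only one
        for other in csvs[1:]:
            union(csvs[0], other)  # all CSVs of one VM belong together

    groups = defaultdict(set)
    for csv in list(parent):
        groups[find(csv)].add(csv)
    return sorted(sorted(group) for group in groups.values())


# Hypothetical inventory: vm-b has disks on two CSVs.
inventory = {
    "vm-a": ["Volume1"],
    "vm-b": ["Volume1", "Volume2"],
    "vm-c": ["Volume3"],
}
print(csv_migration_groups(inventory))
```

With this sample inventory, Volume1 and Volume2 end up in one group because vm-b uses both, and Volume3 can be migrated on its own.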

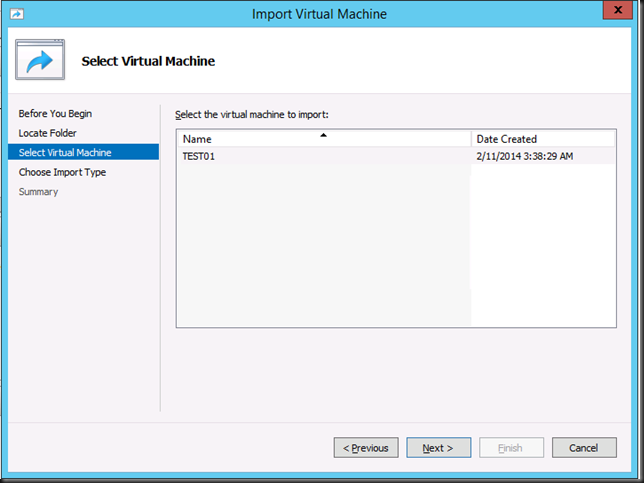

It has found a virtual machine to import.
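With many VMs on a CSV it can be handy to list the configuration files before clicking through the wizard. The sketch below assumes the usual layout for these Hyper-V versions, where each VM's configuration is a GUID-named .xml file directly inside a folder called "Virtual Machines". The function name and the example path are hypothetical.

```python
import re
from pathlib import Path

# A file name such as 01234567-89AB-CDEF-0123-456789ABCDEF.xml
GUID_XML = re.compile(
    r"^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\.xml$"
)


def find_vm_configs(root):
    """Return GUID-named .xml files that sit directly in a 'Virtual Machines' folder."""
    hits = []
    for path in Path(root).rglob("*.xml"):
        in_vm_folder = path.parent.name.lower() == "virtual machines"
        if in_vm_folder and GUID_XML.match(path.name):
            hits.append(path)
    return sorted(hits)


if __name__ == "__main__":
    # Hypothetical mount point of the migrated CSV on a cluster node.
    for config in find_vm_configs(r"C:\ClusterStorage\Volume1"):
        print(config)
```

Files in other folders, such as snapshot configurations, are skipped on purpose, since only the "Virtual Machines" folder holds the configuration you point the import wizard at.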

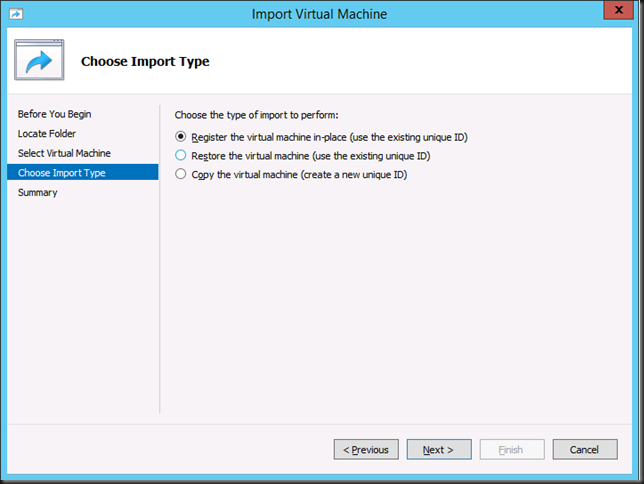

This is important: select “Register the virtual machine in-place (use the existing unique ID)”.

Click “Next” to confirm your actions.

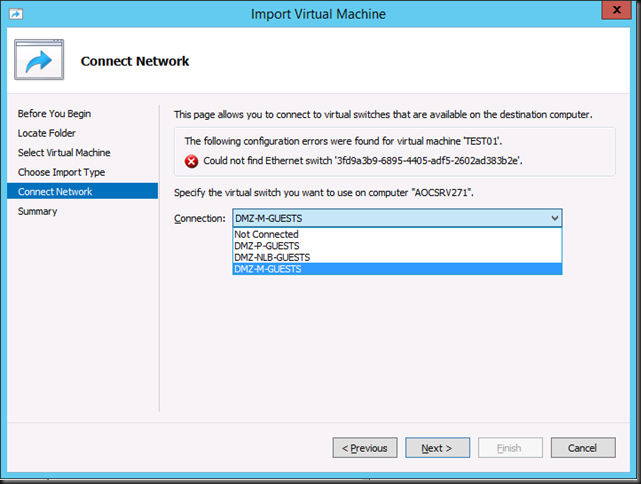

If anything about your virtual machine is not compatible with your host, the GUI allows you to fix this. Here we have to select the correct virtual switch, as the virtual switches on the new host differ from those on the source host.
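For multihomed VMs it pays to write down the switch mapping before you start importing. The following is a minimal planning sketch only: the function, the adapter tuples and all switch names are hypothetical, and it does not talk to Hyper-V at all.

```python
def plan_switch_remap(adapters, switch_map, target_switches):
    """Split adapters into those with a known new switch and those without.

    adapters: list of (vm_name, adapter_name, old_switch) tuples.
    switch_map: dict from old switch name to new switch name.
    target_switches: set of switch names that exist on the new host.
    Returns (plan, unresolved).
    """
    plan, unresolved = [], []
    for vm_name, adapter_name, old_switch in adapters:
        new_switch = switch_map.get(old_switch)
        if new_switch is not None and new_switch in target_switches:
            plan.append((vm_name, adapter_name, new_switch))
        else:
            # No mapping, or the mapped switch is missing on the new host.
            unresolved.append((vm_name, adapter_name, old_switch))
    return plan, unresolved


# Hypothetical example: one multihomed VM with two adapters.
adapters = [
    ("vm-a", "NIC1", "OldProdSwitch"),
    ("vm-a", "NIC2", "OldBackupSwitch"),
]
switch_map = {"OldProdSwitch": "NewProdSwitch"}
plan, unresolved = plan_switch_remap(adapters, switch_map, {"NewProdSwitch"})
print(plan)
print(unresolved)
```

Anything that lands in the unresolved list is an adapter you will have to decide about in the import wizard.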

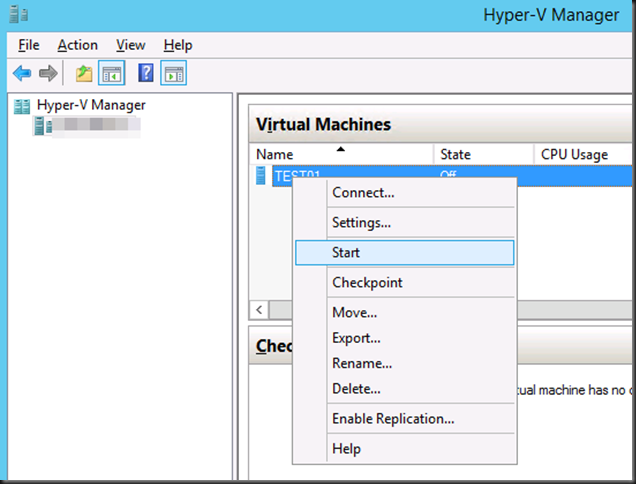

When done, just click “Next” and in the blink of an eye your machine will be imported. You can start it up right now to see if all went well.

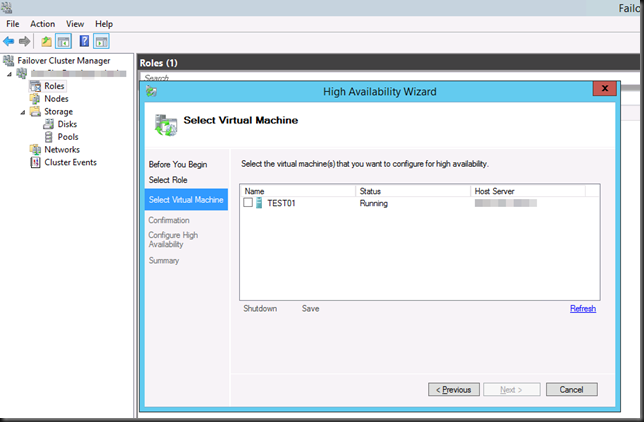

Because Windows Server 2012 (R2) lets us add running virtual machines to the cluster for high availability, that’s the final step.

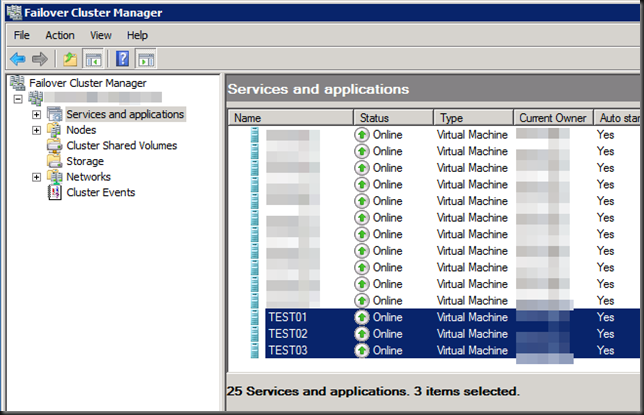

We can import all virtual machines on the demo CSV in the same manner. Congrats: if you set up network connectivity right and performed this manual migration procedure correctly, you have now migrated a first CSV with VMs to the new cluster in another AD domain, and those VMs can talk to the VMs that are still on the old cluster. Cool, huh? In what scenarios is this useful? Well, a hoster that has clusters in a management domain running different workloads for different customers (multiple ADs), or a company consolidating multiple environments on a common Hyper-V cluster or clusters in a management domain, etc.

You need to update the integration components of the virtual machines now running but other than that, you’re all set. Just move along with the next CSVs / Virtual machines until you’re done.

Closing comments

What should you do if you don’t have shared storage? Move the disks to the new host/cluster, copy the data over (do NOT export the VMs, as that will not work in this scenario, see part 1), or use Veeam Replica. It will do the heavy lifting for you and help minimize downtime. For more information, read this blog post by our fellow MVP Silvio Di Benedetto: Veeam Backup & Replication: Migrate VM from Hyper-V 2008 R2 to 2012 R2.

Good luck. And remember, if you need any assistance, there are many highly experienced Hyper-V MVPs/consultants out there. They can always help you with your migration plans if you need it.