In this blog post we’re going to take a quick look at the lightning fast fixed VHDX file creation speed with ReFS on Windows Server 2016. We’ll compare it to creating fixed VHDX files On NTFS with a SAN that supports ODX. Both the NTFS and the CSV volume are CSV disk in a Hyper-V cluster and the test is run on the same node. The ODX cabale SAN is a Dell Compellent with Storage Center 6.5.20.

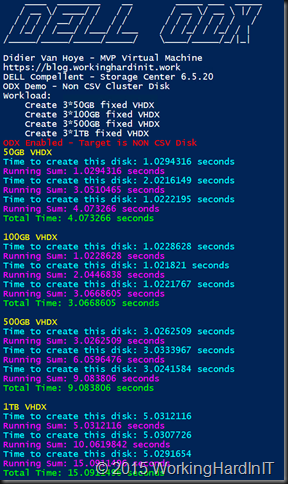

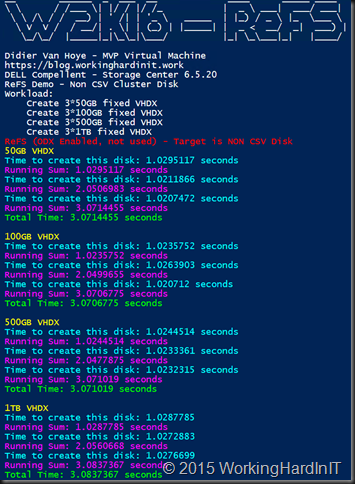

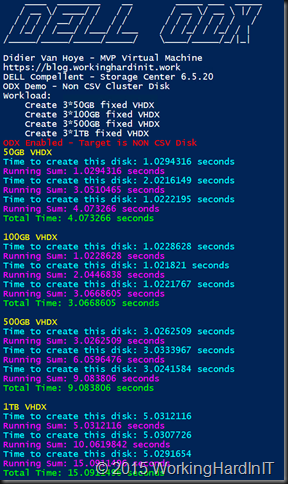

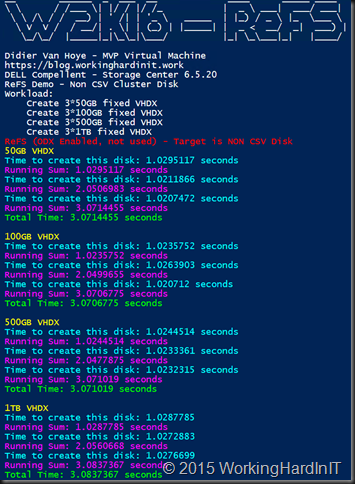

We create on a selection of fixed VHDX files sizes (50GB, 100GB, 500GB and 1TB) on NTFS volume Windows Server 2016 host You can see the quite excellent results in file creation speeds with ODX.

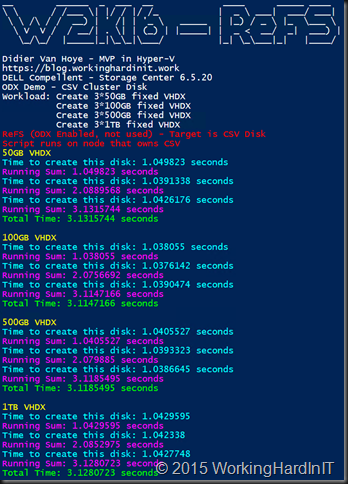

These results are very good (DELL Compellent always did a great job implementing ODX) and the time to create a 1TB fixed VHDX is just over 5 seconds consistently. Impressive by any standard I would say! When we start using CSVs we can see that times double for the larger VHDX sizes but still +/- 12seconds for a 1TB disk is impressive by any standard. There is little difference whether the node where the script runs owns the CSV or not.

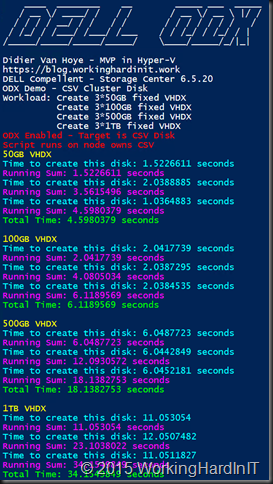

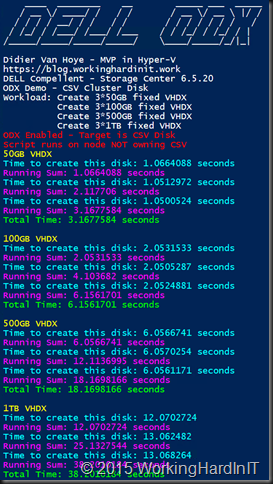

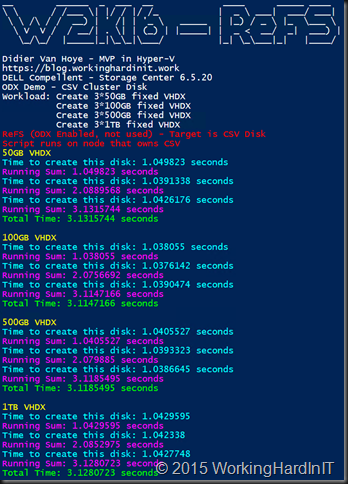

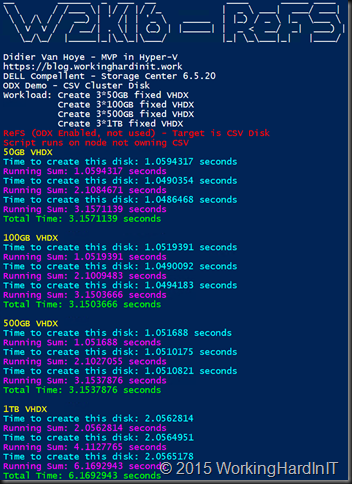

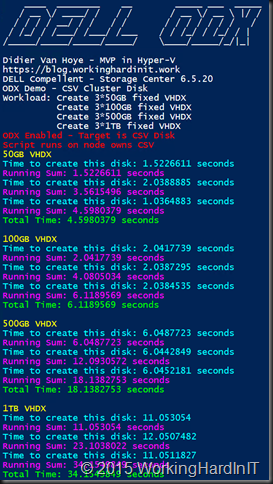

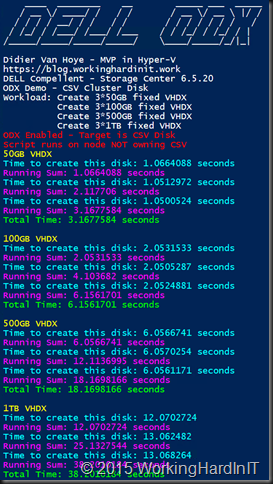

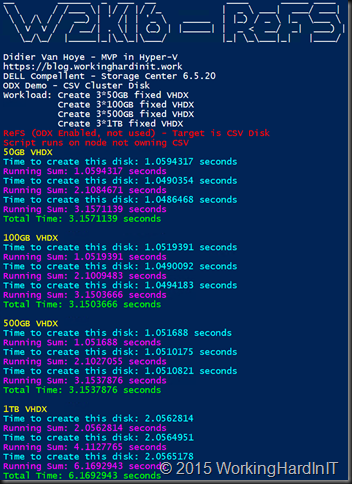

Can things be more impressive? Let’s do the same exercise on a ReFS volume on a Windows Server 2016 host. Same server, same SAN with ODX enabled but note that ReFS does not even support ODX, so it cannot be leveraged.

No matter what the file size of our fixed VHDX files they are created in just over 1 second consistently. This is very impressive.

When we use a CVS LUN we still see the same impressive results. On CSV LUNS not owned by the node where we execute the test script we see a creation time of 2 seconds for VHDX sizes of 1TB. Still lightning fast.

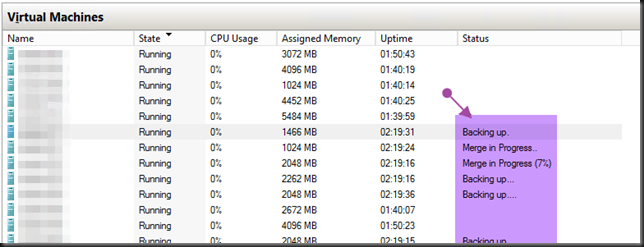

If you do not have a SAN that supports ODX you can see why ReFS might become a very attractive choice for the file system for your Hyper-V virtual machine data volumes in Windows Server 2016. I can see why they mentioned it as the preferred option for Storage Spaces Direct. Do note that ReFS does not support deduplication and/or UNMAP (I see no dedupe support yet for virtual server workloads on the horizon either yet?). If you move large amounts of data around ODX does provide significant assistance with this. So with ReFS go for a large SSD tier. Flash only without deduplication or erasure coding might be cost prohibitive I’m afraid.

But let this not put you off ReFS. It has many benefits in combination with storage spaces and these new VHDX operation capabilities just add to that. So for many environments with commodity based storage this has become an even more interesting choice.