Microsoft recently released another update rollup (aka cumulative update): the July 2016 update rollup for Windows RT 8.1, Windows 8.1, and Windows Server 2012 R2.

This rollup includes improvements and fixes, but more importantly it also contains the ‘improvements’ from the June 2016 update rollup KB3161606 and the May 2016 update rollup KB3156418. For the June rollup KB3161606, it fixes the bugs that caused anything from concerns with the Hyper-V Integration Components (IC) to serious downtime for Scale-Out File Server (SOFS) users. My fellow MVP Aidan Finn discusses this in a blog post. Let’s say it caused a wrinkle in the community.

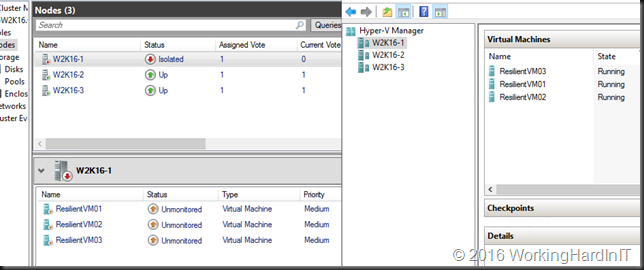

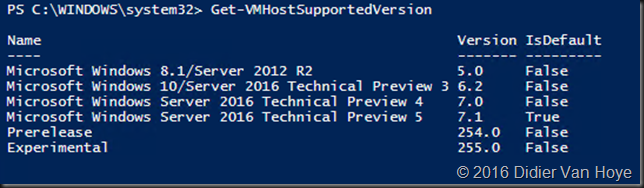

In short, with KB3161606 the Integration Components needed an upgrade (to 6.3.9600.18339), but due to a mix-up with the manifest files this failed. You could leave them in place, but it’s messy. To make matters worse, this cumulative update also messed up SOFS deployments, which could only be dealt with by removing it.

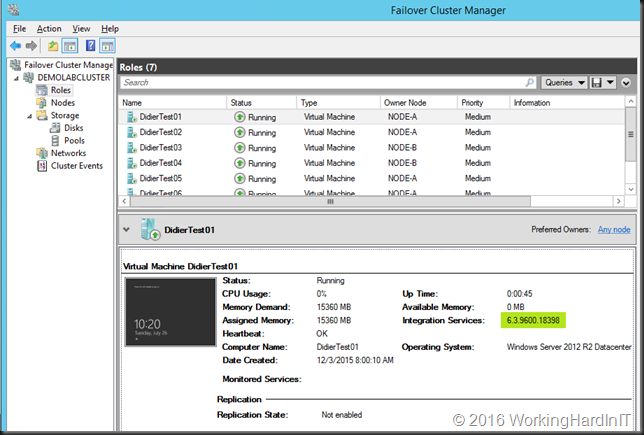

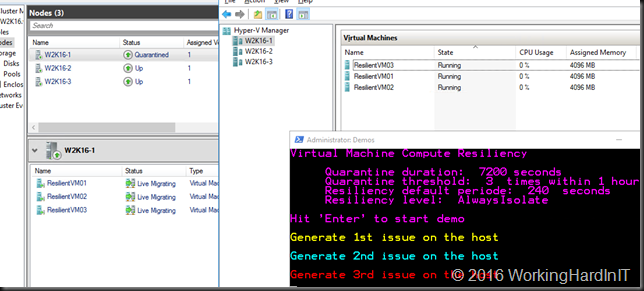

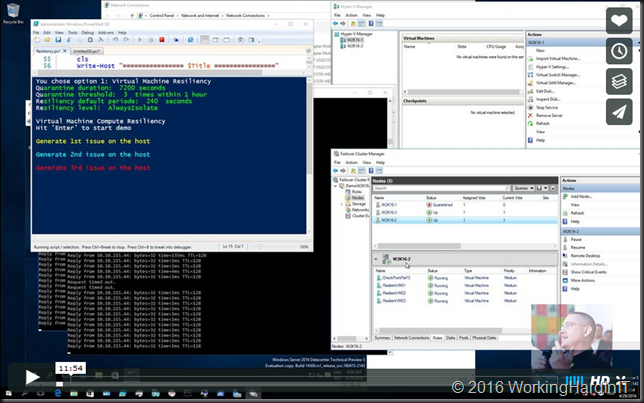

Enter update rollup KB3172614. It will install on hosts and guests whether they already have KB3161606 installed or not, and it fixes these issues. I have now deployed it on our infrastructure and the ICs updated successfully to 6.3.9600.18398. The issues with SOFS are also resolved with this update. We have not seen any issues so far.
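If you want to double-check which guests still run older Integration Components after the rollout, a small comparison like the one below can help. This is only a minimal sketch: it assumes you have already collected guest names and IC version strings by whatever means you normally use, and the guest names, the `inventory` dictionary, the oldest version in it, and both function names are hypothetical. Only the 6.3.9600.18339 and 6.3.9600.18398 version numbers come from this post.

```python
# IC version seen after installing the July 2016 rollup (taken from this post).
FIXED_IC_VERSION = "6.3.9600.18398"


def parse_version(text):
    """Turn a dotted version string such as '6.3.9600.18339' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def guests_needing_update(ic_versions, minimum=FIXED_IC_VERSION):
    """Return the sorted names of guests whose IC version is below the minimum."""
    floor = parse_version(minimum)
    return sorted(
        name
        for name, version in ic_versions.items()
        if parse_version(version) < floor
    )


# Hypothetical inventory: guest name -> reported IC version.
inventory = {
    "vm-app01": "6.3.9600.18398",
    "vm-sql01": "6.3.9600.18339",
    "vm-web01": "6.3.9600.18080",
}

print(guests_needing_update(inventory))
```

The versions are compared as tuples of integers rather than as plain strings, because a string comparison would rank a build such as `9999` above `18398`.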

In short, CU KB3161606 should be gone from Windows Update and WSUS. If it was already installed, you don’t need to remove it. CU KB3172614 will install on those servers (hosts and guests), and this time it does things right.

I hope this leads to better QA in Redmond, as it really is causing a lot of people grief at the moment. It also feeds the conspiracy theories that MSFT is sabotaging on-premises deployments to promote Azure usage even more. Let’s not feed the trolls, shall we?