This arrives in via the feedback option on my blog

Hi. I see through your website that you are an expert in vhdx / avhdx file. I had a system crash with data loss. I think this data is in an avhdx file. When I rename this file in vhdx, I can mount it but I have an error: the file is corrupted. Do you know a procedure to repair this type of file? I thank you in advance for your support!

Oh dear! An expert? While flattery can get you a long way in life with certain people virtual disks are impervious to that sort of thing. Look, MVP, Veeam Vanguard, Dell Rockstar … tip of the spear, edge of the sword, it’s all fine and well but it’s no good to split a granite piece of rock and virtual disks don’t care about titles, jut about how they are designed to work.

Before we dive into some more details please use the comments sections under the relevant blog post to ask questions. That way everyone can benefit form the answer. It’s all quite anonymous if you want it to be. Secondly vendors like Microsoft have great public support forums with many thousand pairs of eyes reading. That might also work better and faster for your needs.

Some details

When you have avhdx your data is stored in the avhdx and in the parent disks (more avhdx but at least always one vhdx). While you can throw away what’s in a avhdx under certain conditions (and lose that data) and mount the vhdx you cannot throw away the vhdx and hope to be able to access the data in the avhdx you rename to vhdx.

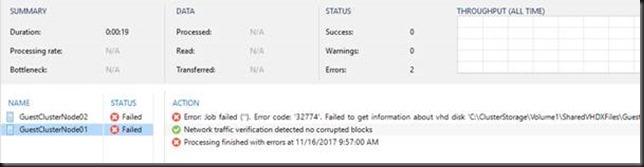

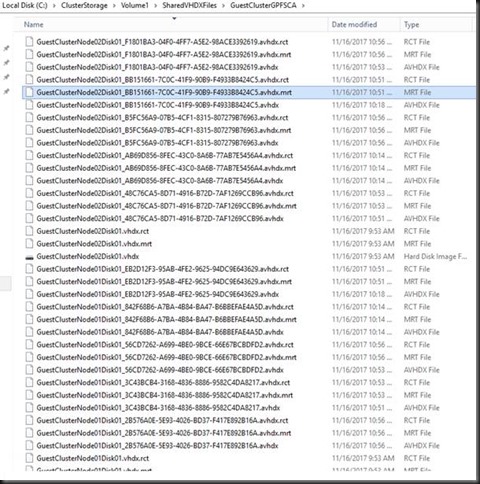

For a case of real data corruption, not just phantom or mixed up VHDX/AVHDX chain, where you can try to intervene, even manually if needed – and if you have the skills – you’ll have to recover or restore data.

If the storage on which the vhdx/avhdx reside is corrupted a good but time-consuming run of chksdk /f /r can do the job. I have done that before with success. But there are no guarantees in this game.

Other than that, or when the storage is gone, it is restore time. This can be leveraging whatever backup solution you use or VSS snapshots on the storage side of things. Those options are your best bet. You can find some more info on manually manipulating vhdx/avhdx files here but that’s not what you’re facing here it seems.

If you don’t have recovery options in place, what can I say?

Stop what you’re doing and contact a good data recovery company. Only damage can come from trying if you don’t know what you’re doing. You can hope trial and error will fix it but that would be the triumph of hope over experience. You’re usually not that lucky. Trust me.

The snarky bit

I’ll fight like hell if I’m in a pickle and the data is valuable. But it’s near to impossible to do it for someone else as it’s hard, time consuming and often it’s a case were the files have been worked on before, so they tend to be messed up. If the data is not that valuable, just eat the loss.

In reality my time always seems less valuable then peoples their data . Now if you say you can help me retire early by trying anyway and are OK with a best effort, no guarantees given deal I might do it. But I’m pretty sure investing in backups and restores is way cheaper and will lead to better results. Your data is important and valuable, even when my time is not. Just saying