Introduction

Following the introduction of the SC7020 hybrid array, we now also have all-flash arrays (AFA) in the SC offerings, one being the SC7020F. I was lucky and got to leverage these with both iSCSI (10Gbps) for replication over IP to remote destinations and FC (16Gbps) fabrics for the main workloads.

As always, storage is decided upon based on needs, contextual limitations, budgets and politics. Given the state of affairs, the SC7020(F) was the best solution we could come up with. In a diverse world there is still a need in certain environments for SAN based storage solutions, despite what some like to promote. I try not to be tribal when it comes to storage architectures but pick the best solution given the conditions as they are, and as they will evolve, in the environment where it will serve the needs of the business.

Some points of interest

When I first heard about and looked at the SC7020, it was to be the “unified” storage solution where block and file level capabilities were both available in the controllers. Given today’s multi socket, multi core systems with plenty of PCIe slots and RDMA capable cards, that was a good idea. If DELL had played this right and made sure the NAS capability provided first class SMB 3 support, this could have been the SOFS offering in a box, without the need for SME customers to set up a SOFS solution with separate DELL PowerEdge servers. I’m not saying that is the best solution for all customers or use cases but it would have been for some or even many. Especially since in real life not many storage vendors offer full SMB 3 support that is truly highly redundant without some small print on what is supported and what is not. But it was not to be. I won’t speculate about the (political?) reasons for this but I do see this as a missed opportunity for a certain market segment. Especially since the SC series has so much to offer to Windows / Hyper-V customers.

Anyway, read on, because when this opportunity’s door got closed it also opened another. Read the release notes for 7.2; the most recent version can be found here. The original SCOS 7.1 on the SC7020 reserved resources for the file level functionality. But that functionality isn’t there, so it’s interesting to read this part of the document:

SC7020 Storage System Update: Storage Center 7.2 performs the reallocation of SC7020 system resources from file and block to block only. The system resources that are reallocated include the CPUs, memory, and front-end iSCSI ports. An SC7020 running Storage Center 7.2 allows access to block storage from all the iSCSI ports on the SC7020 mezzanine cards. NOTE: In Storage Center 7.1, access to block storage was limited to the right two iSCSI ports on the SC7020 mezzanine cards.

Now think about what that means. NICs are in PCIe slots. PCIe slots connect to a CPU socket. This means that more CPU cores become available for block level operations such as dedupe and compression, but also for other CPU intensive operations. The same goes for memory, actually. Think about background scrubbing, repair and data movement operations. That makes sense. Why waste these resources when they are in there anyway? Secondly, this matters for an SC7020 with only flash disks or the purpose designed SC7020F, which is flash only. When you make storage faster and reduce latency you need to make sure your CPU cycles can keep up. So, this is the good news. The loss of unified capabilities leads to more resources for block level workloads. As a Cloud & Datacenter MVP focusing on high availability I can build a SOFS cluster with PowerEdge servers when needed and be guaranteed excellent and full SMB 3 capabilities, backed by an AFA. Not bad, not bad at all.

Hardware considerations

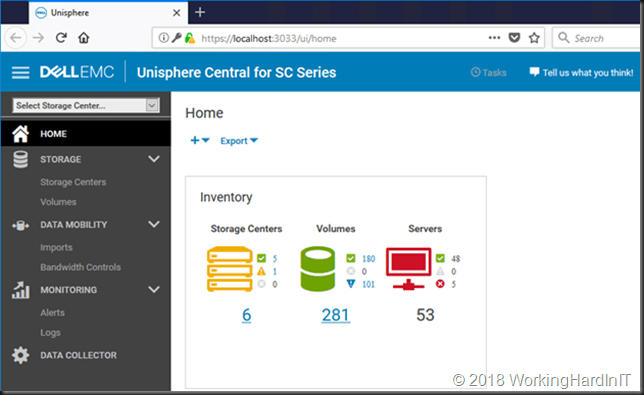

With a complete dual controller SAN in only 3 rack units, with room for 30 2.5” (12Gbps SAS) disks, this form factor packs a lot of punch for its size.

With the newer SC series such as the SC7020(F) you are actually not required to use the “local” drives. You can use only expansion enclosures. That comes at the cost of letting the disk bays go to “waste” and having to buy one or more expansion enclosures. The idea is to leave some wiggle room for future controller replacements. With disks in the 3U chassis that’s another story. In the end, many people run storage as long as they can and then migrate instead of doing mid life upgrades. Still, it is a nice option to have when and where needed. If I had had the budget margin I might have negotiated a bit longer and harder and opted to leverage only disks in external enclosures. But it put too big a dent in the economics and I don’t have a clear enough view of the future needs to warrant that investment in the option. The limit of 500 disks is more than enough to cover any design I’ve ever made with an SC series (my personal maximum was about 220 disks).

We have redundant power supplies and redundant controllers that are hot swappable. Per controller we get dual 8 core CPUs and 128GB of memory. A single array can scale up to 3PB, which is also more than I’ve had to deliver so far in a single solution. For those sizes we tend to scale out anyway, as a storage array is and remains a failure domain. In such cases federation helps break the silo limitations that storage arrays tend to have.

Configuration Options

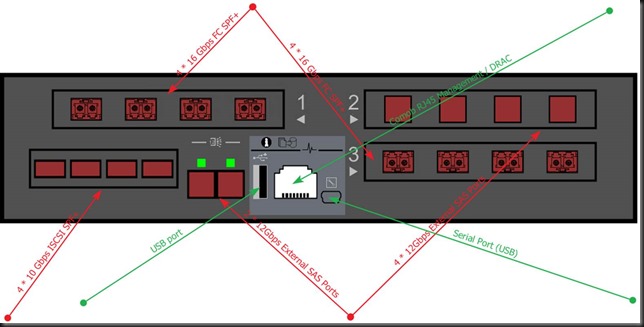

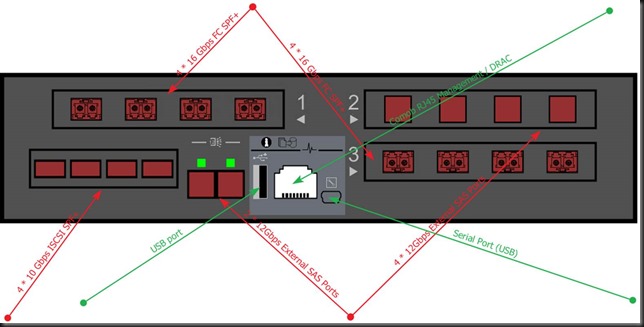

For such a small size a single controller offers ample configuration options. There are 3 slots for expansion modules. It’s up to the designer to determine what’s in the system. You can select:

- 4 port 16Gb FC or 4 port 8Gb FC card

- 2 port 10Gb iSCSI or a 4 port 10Gbps iSCSI card with SFP+/RJ45 options.

- 4 port 12Gb SAS cards

As we’re not using expansion enclosures we’ve gone for the below layout.

We also have 3 indicator lights, with the Info one providing an LED to identify a controller from Dell Storage Manager. When the Cache to Flash indicator (middle) lights up you’re running on battery power. The 3rd is the health status, indicating the controller’s condition (off/starting/running/errors).

DRAC observations

The DRAC on the SC7020 looks pretty decent. It doesn’t have a separate dedicated port but shares the management interface. It does have good DRAC functionality. You can have a VLAN on the management & DRAC logical interfaces if you so desire. For a storage controller, sharing the bandwidth between the management interface and DRAC is no big deal. One drawback is when the port is broken, but hey, we have 2 controllers, right? The other drawback is that during a FW upgrade of the NIC you’ll also lose DRAC access. For customers coming from the old SC40 controllers that’s progress no matter what. And as in reality these units are not in an unmanned Antarctic research facility, I can live with this.

The choice for single tier and 15TB MLC SSD

Based on budget, requirements, the environmental context and politics we opted to go for an All-Flash Array with only 15TB read intensive MLC disks. This was a choice for this particular use case and environment. So, don’t go and use this for just any environment. It all depends, as I have mentioned in many blog posts (Don’t tell me “It depends”! But it does!). Opting to use read intensive MLC SSDs means that the DWPD isn’t as high as with SLC, write intensive SSDs. That’s OK. We have large capacity ones and the capacity is needed. The capacity is not overkill, which would have led us to use too few disks.

If these were systems that had 2 tiers or disk-based caching (SSD or even NVMe) and were focused on ingesting large daily volumes of data, that 1st tier would have been SLC SSD with lower capacity but with a lot higher DWPD. But using larger SSDs gives us some real benefits:

- Long enough lifetime for the cost. Sure, MLC has less durability than SLC but hear me out. 30 * 15TB SSD means that even with a DWPD of 1 we can ingest a lot of data daily within our warranty period. We went for 5 years.

- Space & power savings. The aging systems the SC7020F arrays are replacing consumed a grand total of 92 rack units. Given the monthly cost they pay per RU, this is a yearly saving of over 100K Euros. Over a period of 5 years that’s 500K. Not too shabby.

- The larger drives allow for sufficient IOPS and latency specs for the needs at hand. The fact that with the SC Series UNMAP & ODX work very well (bar their one moment of messing it up) helps with space efficiencies & performance as well in a Windows Server / Hyper-V environment.
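
To put the endurance argument from the list above into numbers, here is a minimal back-of-the-envelope sketch. The drive count, capacity, DWPD of 1 and the 5 year period come from the text. The function names are mine, and the result is the raw rated write volume across all drives: it ignores RAID overhead, spares and write amplification, so the usable ingest figure will be lower.

```python
def daily_write_budget_tb(drives: int, capacity_tb: float, dwpd: float) -> float:
    """Raw rated writes per day (TB) across the whole drive set."""
    return drives * capacity_tb * dwpd


def total_write_budget_tb(drives: int, capacity_tb: float, dwpd: float, years: float) -> float:
    """Raw rated writes (TB) over the warranty period, using 365-day years."""
    return daily_write_budget_tb(drives, capacity_tb, dwpd) * years * 365


# Figures from the text: 30 drives of 15 TB, DWPD of 1, 5 years of warranty.
daily = daily_write_budget_tb(drives=30, capacity_tb=15, dwpd=1.0)
total = total_write_budget_tb(drives=30, capacity_tb=15, dwpd=1.0, years=5)

print(f"Rated writes per day:    {daily:,.0f} TB")
print(f"Rated writes in 5 years: {total:,.0f} TB ({total / 1000:,.2f} PB)")
```

That works out to 450 TB of rated writes per day, or roughly 821 PB over the 5 years, before any overhead is subtracted.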

Then there is the risk of not being able to read fast enough from a single disk, even at 1200 MB/s sequential reads, because it is so large. Well, we won’t be streaming data from these and the data is spread across all disks. So, we have no need to read the entire disk capacity constantly. That should mitigate the risk.
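
For a feel of what “so large” means here, a quick sketch of how long one full sequential pass over a single drive would take. It uses the 15TB and 1200 MB/s figures from the text and assumes decimal units (1 TB = 1,000,000 MB); the function name is just for illustration.

```python
def full_read_seconds(capacity_tb: float, throughput_mb_s: float) -> float:
    """Seconds needed to read the whole drive once at a sustained sequential rate."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return capacity_mb / throughput_mb_s


seconds = full_read_seconds(capacity_tb=15, throughput_mb_s=1200)
print(f"Full read of one drive: {seconds:,.0f} s ({seconds / 3600:.2f} hours)")
```

That is 12,500 seconds, or about 3.5 hours, for a single pass at the best case sequential rate, which is why it matters that this workload never needs to read whole drives.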

Sure, I won’t be bragging about a million IOPS with this config, but guess what, I’m not on some conference floor doing tricks to draw a crowd. I’m building a valuable solution that’s both effective & efficient with equipment I have access to.

One concern that still needs to be addressed

15TB SSDs and the rebuild time when such a large drive fails. Rebuilds for now are many to one. That’s a serious rebuild time, with the consumption of resources that comes with it and the long risk exposure. What we need here is many to many rebuilds, and for that I’m asking/pushing/demanding that DELLEMC changes their approach to a capacity-based redundancy solution. The fact that it’s SSD and not 8TB HDD gives it some speed. But still, we need a change here. 30TB SSDs are on their way …
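
To illustrate why many to many matters, here is a deliberately simplified sketch. It assumes a completely full 15TB drive has to be rebuilt and that the rebuild is limited only by the write rate of the target drives. The 500 MB/s sustained write rate is a hypothetical number I picked for illustration, not a spec of these drives, and the model ignores host I/O, parity calculations and any controller throttling. It is not how SCOS actually schedules rebuilds.

```python
def rebuild_hours(data_tb: float, write_mb_s: float, targets: int) -> float:
    """Hours to rewrite data_tb when the writes are spread evenly over `targets` drives."""
    data_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return data_mb / (write_mb_s * targets) / 3600


FAILED_DRIVE_TB = 15       # from the text, worst case: the drive was full
ASSUMED_WRITE_MB_S = 500   # hypothetical sustained write rate per drive
SURVIVING_DRIVES = 29      # 30 drive bays minus the failed drive

# Many to one: everything lands on a single replacement drive.
many_to_one = rebuild_hours(FAILED_DRIVE_TB, ASSUMED_WRITE_MB_S, targets=1)
# Many to many: writes go to spare capacity spread over all surviving drives.
many_to_many = rebuild_hours(FAILED_DRIVE_TB, ASSUMED_WRITE_MB_S, targets=SURVIVING_DRIVES)

print(f"Many to one:  {many_to_one:.2f} hours")
print(f"Many to many: {many_to_many:.2f} hours")
```

Under these assumptions the many to one rebuild takes over 8 hours, while spreading the writes over 29 drives brings it down to around 17 minutes. The real numbers will differ, but the ratio shows why the risk exposure window shrinks so much with a capacity-based approach.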

Software considerations

7.1 was a pretty important release adding functionality. In combination with the SC7020(F) we can leverage all it has to offer. 7.2 is mandatory for the SC7020F. Dedupe/compression as well as QoS are the two most interesting capabilities that were added. Especially for those use cases where we have no other options.

I deal a lot with image data in the places I work, which means that I don’t bank too much on deduplication. It’s nice to have but I will never rely on an X factor for real world capability. But if your data dedupes well, have a ball!

As stated above, for this particular use case I’m not leveraging tiering. That’s a budget/environment/needs decision I had to make and I think, all things given, it was a good choice.

There is now the option to change between replication types on the fly. This is important. If synchronous replication (high availability or high consistency mode) is not working out, you can swap those settings without having to delete & recreate the replication.

We have the option to leverage Live Volumes / Live Migrations (the SC kind, not Hyper-V kind here) when required.

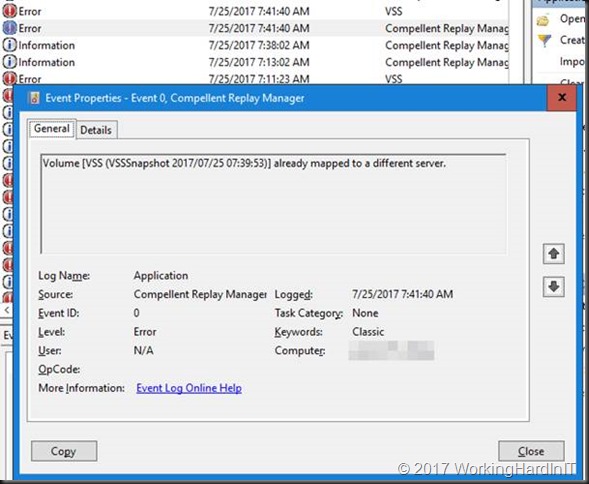

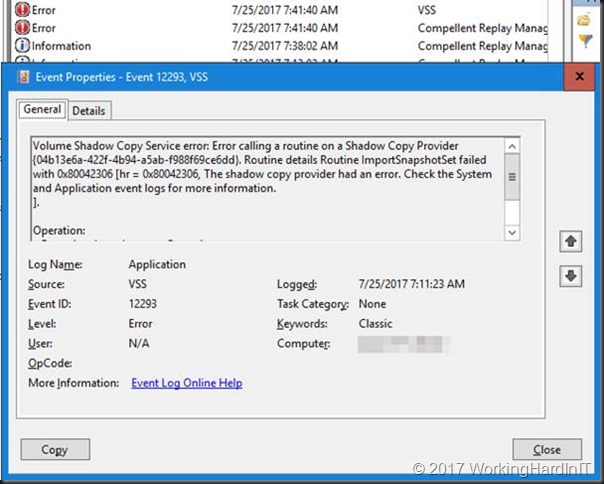

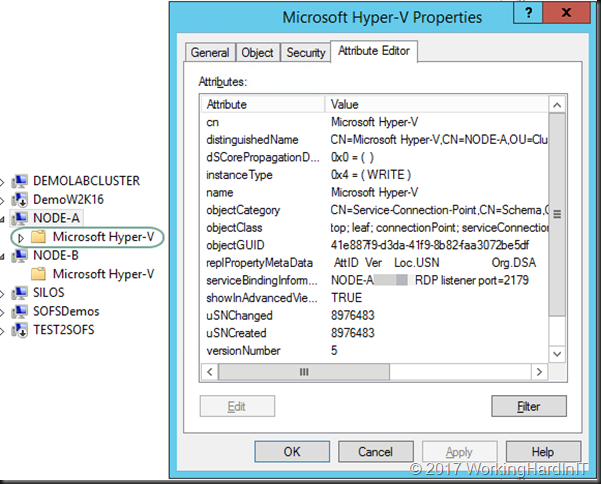

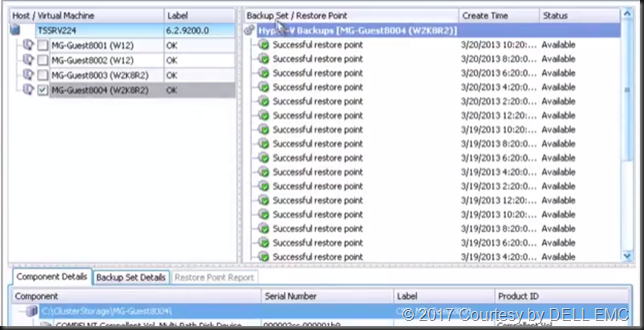

Combine that with functional UNMAP/ODX and a good Hardware VSS provider to complete the picture for a Windows Server / Hyper-V environment.

Cost

With any investment we need to keep the bean counters happy. While I normally don’t care too much how the solution is bought and paid for, I do care about the cost and the ROI/TCO. In the end I’m about high value solutions, which is not the same as very expensive. So it did help that we got a great deal and Dell Financial Services worked with the management and the accounting department to create a lease to buy solution that worked for everyone involved. So there are flexible solutions to be found for any preference between full OPEX and full CAPEX.

Conclusion

Design, budget & time wise we had to make decisions really fast and with some preset conditions. Not an ideal situation, but we found a very good solution. I’ve heard and read some very bad comments about the SC but for us the Compellent SANs have always delivered. And some highly praised kit has failed us badly. Sure, we’ve had to replace disks, one or 2 PSUs, a motherboard once and a memory DIMM. We’ve had some phantom error indications to deal with and once, in 2012, we ran into a memory issue which we fixed by adding memory. Not all support engineers are created equal but overall, over 6 years, the SC series has served us well. Well enough that when we have a need for centralized storage with a SAN we’re deploying SC where it fits the needs. That’s something some major competitors in this segment did not achieve with us. For a Windows Server / Hyper-V environment it delivers the IOPS, latency and features we need, especially if you have other needs than only providing virtualization hosts with storage and HCI might not be the optimal choice.