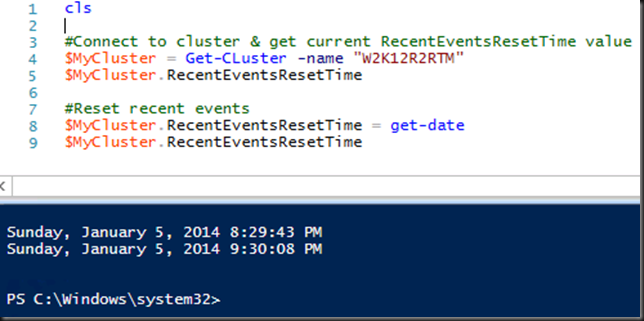

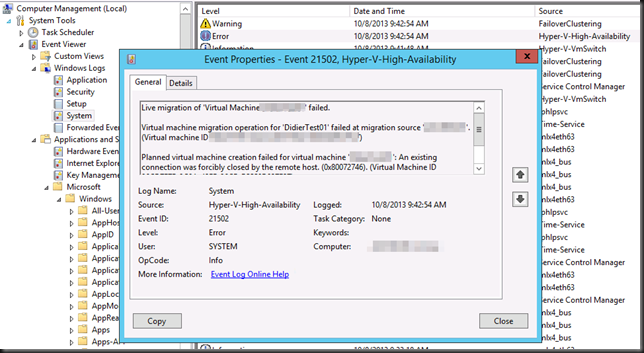

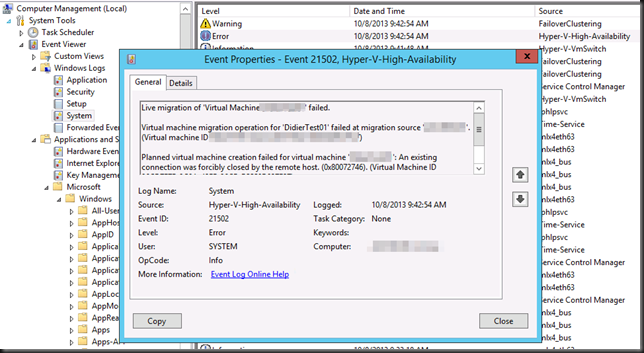

OK, so Live Migration fails and you get the following error in the System event log with event ID 21502:

Planned virtual machine creation failed for virtual machine ‘DidierTest01’: An existing connection was forcibly closed by the remote host. (0x80072746). (Virtual Machine ID 41EF2DB-0C0A-12FE-25CB-C3330D937F27).

Failed to receive data for a Virtual Machine migration: An existing connection was forcibly closed by the remote host. (0x80072746).

There are some threads on the TechNet forums about this, like this one: http://social.technet.microsoft.com/Forums/en-US/805466e8-f874-4851-953f-59cdbd4f3d9f/windows-2012-hyperv-live-migration-failed-with-an-existing-connection-was-forcibly-closed-by-the. Some blog posts point to TCP/IP Chimney settings as the cause, but those causes date back to the Windows Server 2003 / 2008 era.

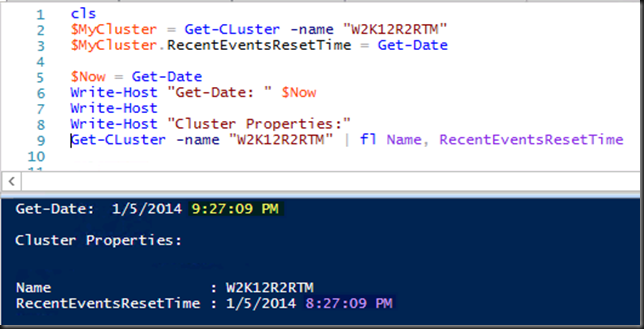

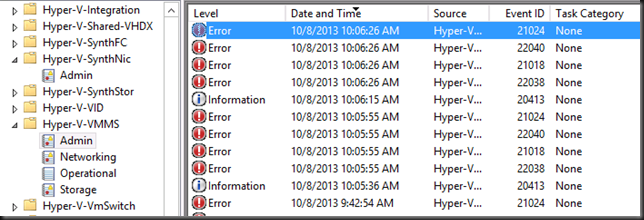

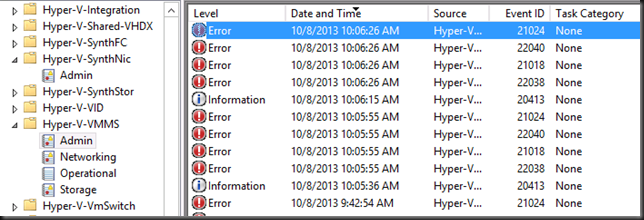

In the Hyper-V event log Microsoft-Windows-Hyper-V-VMMS-Admin you also see a series of entries related to the failed live migration that point to the same issue:

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 10/8/2013 10:06:15 AM

Event ID: 20413

Task Category: None

Level: Information

Keywords:

User: SYSTEM

Computer: SRV1.BLOG.COM

Description:

The Virtual Machine Management service initiated the live migration of virtual machine ‘DidierTest01’ to destination host ‘SRV2’ (VMID 41EF2DB-0C0A-12FE-25CB-C3330D937F27).

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 10/8/2013 10:06:26 AM

Event ID: 22038

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: SRV1.BLOG.COM

Description:

Failed to send data for a Virtual Machine migration: An existing connection was forcibly closed by the remote host. (0x80072746).

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 10/8/2013 10:06:26 AM

Event ID: 21018

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: SRV1.BLOG.COM

Description:

Planned virtual machine creation failed for virtual machine ‘DidierTest01’: An existing connection was forcibly closed by the remote host. (0x80072746). (Virtual Machine ID 41EF2DB-0C0A-12FE-25CB-C3330D937F27).

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 10/8/2013 10:06:26 AM

Event ID: 22040

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: SRV1.BLOG.COM

Description:

Failed to receive data for a Virtual Machine migration: An existing connection was forcibly closed by the remote host. (0x80072746).

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 10/8/2013 10:06:26 AM

Event ID: 21024

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: srv1.blog.com

Description:

Virtual machine migration operation for ‘DidierTest01’ failed at migration source ‘SRV1’. (Virtual machine ID 41EF2DB-0C0A-12FE-25CB-C3330D937F27)

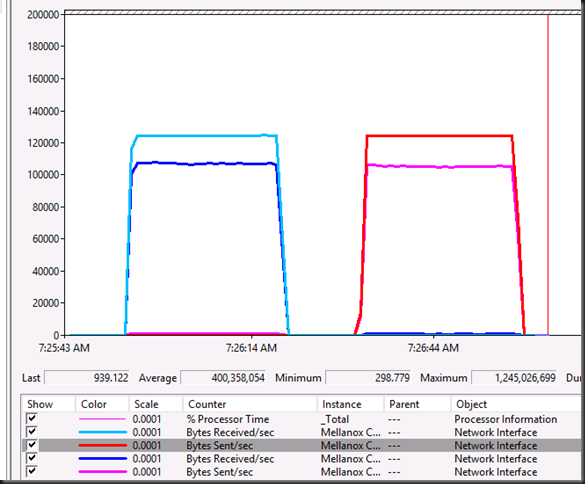

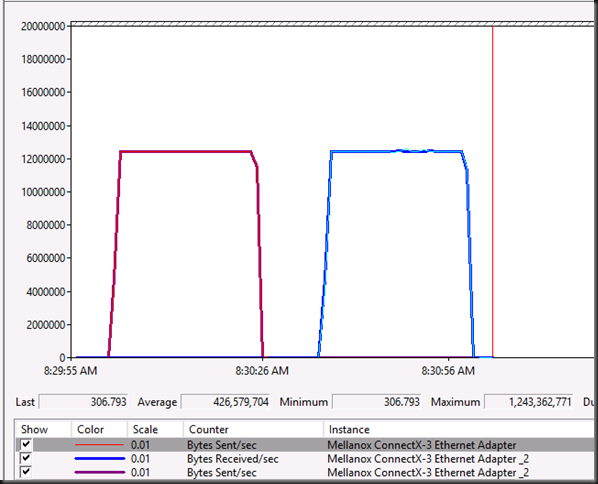

So there is something wrong with the network, and if everything checks out on your cluster & hosts it’s time to look beyond them. As it turns out, it was the Jumbo Frame setting on the CSV and LM NICs.

Those servers had been connected to a couple of DELL Force10 S4810 switches. These can handle an MTU size of up to 12000, and that’s how they are configured. The Mellanox NICs allow MTU sizes of up to 9614 in their Jumbo Frame property. Now, super-sized jumbo frames are all cool until you attach the network cables to another switch, like a PowerConnect 8132, that has a max MTU size of 9216. From that moment on your network won’t do what it’s supposed to and you see errors like those above. If you test via an SMB share things seem OK, and standard pings don’t show the issue. But some ping tests with different MTU sizes and the -f (do not fragment) switch will unmask the issue soon enough. Setting the Jumbo Frame size on the CSV & LM NICs to 9014 resolved the issue.
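The ping test described above can be scripted. What follows is a minimal Python sketch, not the procedure used in this case. The function names are hypothetical. It assumes the Windows `ping` flags `-f` (don't fragment), `-l` (payload size) and `-n` (count), and 28 bytes of IPv4 + ICMP header overhead. It also assumes English-language ping output, where a successful reply contains `TTL=`. The search expects that if a given payload size passes, every smaller size passes too.

```python
import subprocess
from typing import Callable, List

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes


def icmp_payload_for_ip_mtu(ip_mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return ip_mtu - IPV4_HEADER - ICMP_HEADER


def build_ping_command(host: str, payload: int) -> List[str]:
    """Windows ping: -f sets Don't Fragment, -l sets payload size, -n 1 sends one echo."""
    return ["ping", "-f", "-l", str(payload), "-n", "1", host]


def ping_passes(host: str, payload: int) -> bool:
    """Run one ping and report whether a real echo reply came back."""
    result = subprocess.run(
        build_ping_command(host, payload), capture_output=True, text=True
    )
    # Assumption: English output, where only a genuine reply line contains "TTL=".
    return result.returncode == 0 and "TTL=" in result.stdout


def largest_passing_payload(probe: Callable[[int], bool], low: int, high: int) -> int:
    """Binary search for the largest payload in [low, high] that the probe accepts.

    Returns -1 if even the smallest payload fails.
    """
    if not probe(low):
        return -1
    while low < high:
        mid = (low + high + 1) // 2
        if probe(mid):
            low = mid
        else:
            high = mid - 1
    return low
```

Assuming the NIC's Jumbo Frame value of 9014 includes the 14-byte Ethernet header, the IP MTU is 9000 and the largest payload that should pass is `icmp_payload_for_ip_mtu(9000)`, i.e. 8972 bytes. To find where a path actually breaks, you could run something like `largest_passing_payload(lambda n: ping_passes("SRV2", n), 1472, 9572)` from the source host over the CSV or LM network. A result below what the NIC setting implies suggests a device in between has a smaller MTU.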

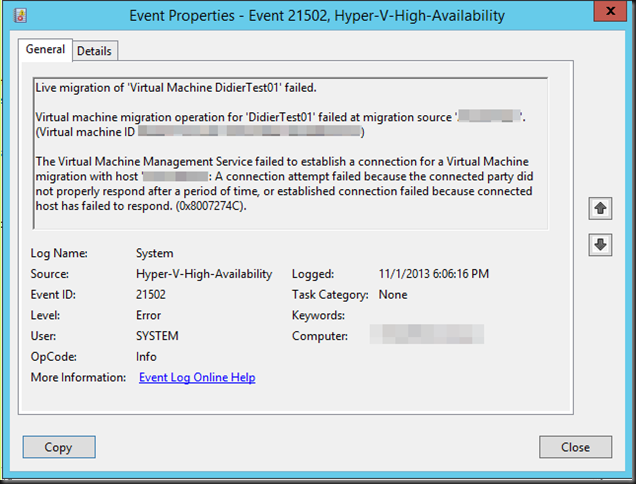

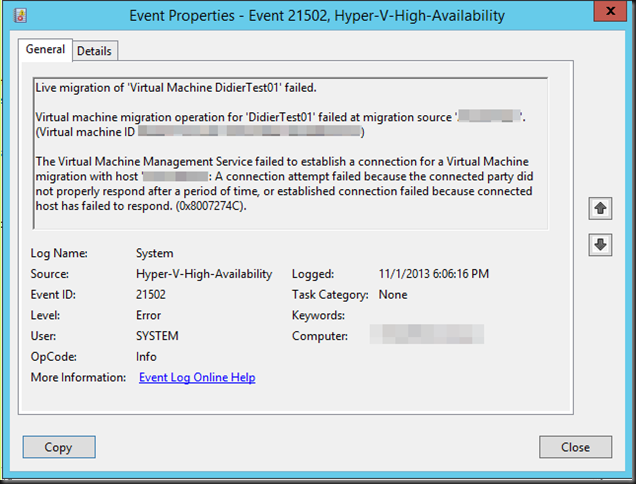

Now, if everything matches up on the server side but not on the switches, you’ll also get an event ID 21502, but with a different error message:

Event ID: 21502 The Virtual Machine Management Service failed to establish a connection for a Virtual machine migration with host XXXX. A connection attempt failed because the connected party did not properly respond after a period of time, or the established connection failed because connected host has failed to respond (0x8007274C).

This is the same message you’ll get for a known cause of shared nothing live migration failing, as described in the Microsoft blog post “Shared Nothing Migration fails (0x8007274C)”.
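The two error codes in this post differ in a useful way once you decode them. Both are HRESULTs that wrap a Win32/Winsock error: 0x80072746 carries code 10054 (WSAECONNRESET, connection reset) and 0x8007274C carries code 10060 (WSAETIMEDOUT, connection timed out). A small Python sketch of that decoding, with a hypothetical function name and a lookup table limited to these two codes:

```python
FACILITY_WIN32 = 7  # HRESULT facility used for wrapped Win32 errors

# Only the two Winsock codes that appear in this post.
WINSOCK_NAMES = {
    10054: "WSAECONNRESET",  # connection forcibly closed by the remote host
    10060: "WSAETIMEDOUT",   # connected party did not respond in time
}


def decode_hresult(hresult: int) -> dict:
    """Split a 32-bit HRESULT into its failure bit, facility and error code."""
    return {
        "failure": bool((hresult >> 31) & 1),
        "facility": (hresult >> 16) & 0x7FF,  # 11-bit facility field
        "code": hresult & 0xFFFF,             # low 16 bits
    }


def describe(hresult: int) -> str:
    """Return the Winsock name for a wrapped Win32 error, if it is one we know."""
    parts = decode_hresult(hresult)
    if parts["facility"] == FACILITY_WIN32:
        return WINSOCK_NAMES.get(parts["code"], "unknown Win32 code %d" % parts["code"])
    return "not a Win32 facility"
```

With this, `describe(0x80072746)` gives `WSAECONNRESET` and `describe(0x8007274C)` gives `WSAETIMEDOUT`: a reset in the first scenario, a timeout in the second.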

So there you go. Keep an eye on those Jumbo Frame settings, especially in a mixed switch environment. Switches all have their own capabilities, rules & peculiarities. Make sure to test end to end and you’ll be just fine.