Introduction

I recently had to prepare the replacement of an aging Aruba Wi-Fi deployment with an effective, more capable and budget-friendly solution. It needed to offer both corporate (RADIUS server) and guest Wi-Fi access for modern workplace needs.

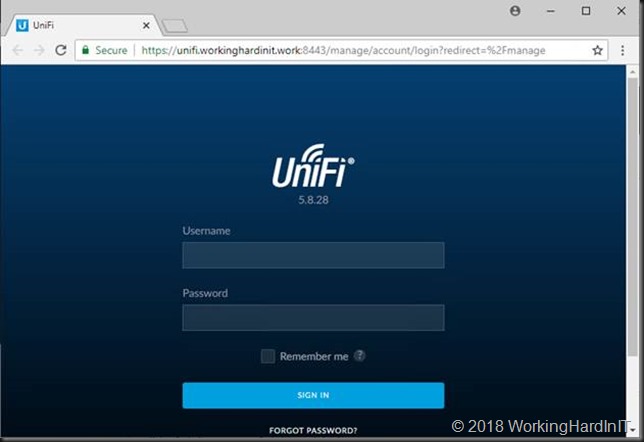

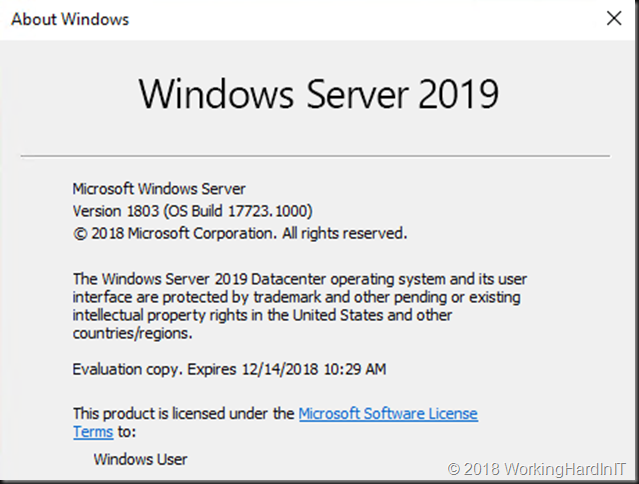

We selected Ubiquiti equipment to meet the requirements. Apart from the WAPs, all gear goes into server and network racks. The controller had to be implemented on-premises (self-managed, not via a service provider). As the customer has a modern Hyper-V environment, we opted to deploy the controller on a Windows Server 2019 virtual machine. By the time the solution is deployed, Windows Server 2019 will have become generally available. A Cloud Key appliance or Raspberry Pi was less attractive in this environment, which has professional racks available in dedicated server and network rooms.

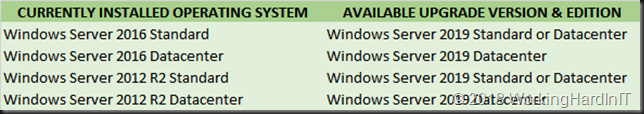

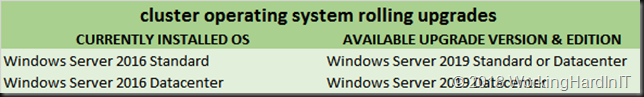

OK, you can use Windows Server 2016 or Windows Server 2012 R2 as well. Note that I don’t like using a client OS for an infrastructure role. I would also not use older server versions, because I like longevity in support; I dislike solutions that go out of support a week after I deploy them. The big takeaway here is that you want to tweak the standard deployment of the controller a bit:

- Change the install so it is not tied to a user profile

- Run the controller as a service rather than an app you need to start manually or add to auto start.

- Configure a certificate for a decent user experience with the UniFi dashboard

Below are my lab notes, as a reference for myself and my readers, on running the Ubiquiti UniFi Controller as a service on Windows Server 2019.

Installation

For some reason, the installer dumps all the files in the user profile of the person running it. That is easy in terms of permissions, but people leave and profiles get deleted. Multiple people need to manage systems, so having the install tied to an individual isn’t that great.

For a UniFi install, first install Java (x64) and a 64-bit browser. Chrome and Firefox are supported; others may be as well, or may simply work. The controller runs on Java, so you obviously need it. You don’t need a browser on the virtual machine per se, but it gives you console access to the controller via the VM in case of network issues. Having multiple options is good. If you don’t need that, Windows Server Core will do.

Step by Step

1. Install the controller with the UniFi-installer.exe installer. It will put the installation under C:\Users\USERNAME\Ubiquiti UniFi

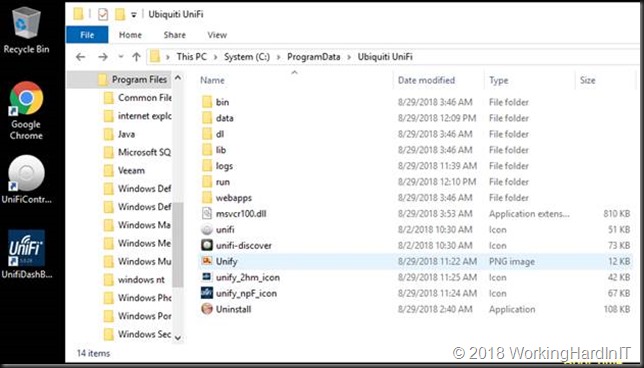

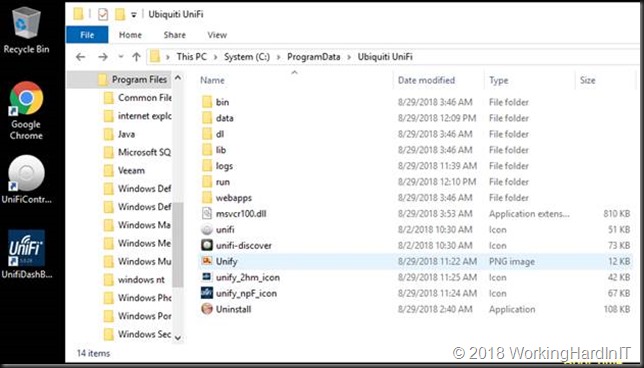

2. To move the UniFi controller app, copy the entire folder to the desired location, such as C:\Program Files or C:\ProgramData. You can even create your own root folder if you don’t want admin permissions to be required for the folder. For this demo I used C:\ProgramData\Ubiquiti UniFi.

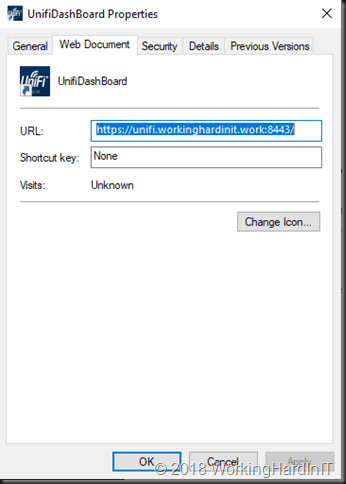

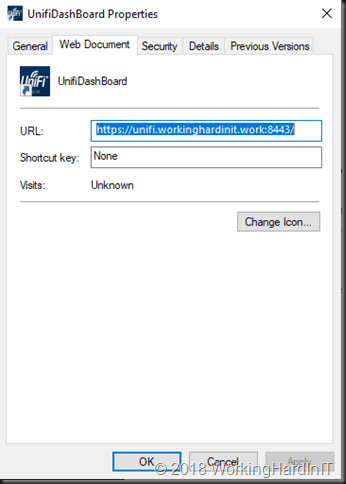

3. I create a shortcut to https://unifi.workinghardinit.work:8443 and change its icon to one I created for this purpose.

4. I then also change the “Target” path of the UniFi shortcut to “C:\ProgramData\Ubiquiti UniFi\lib\ace.jar” ui and the “Start in” path to “C:\ProgramData\Ubiquiti UniFi”. That way the shortcut points to the right location. However, I want my controller to run as a service, so we won’t be using that shortcut much.

Anyway, we now have a nice, clean setup to continue with. Please note that you do not need to install a browser on the server itself. I did this to give people a virtual machine console access option in case they have network issues. If you don’t want that, you can use Windows Server Core.
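If you repeat this on several servers, step 2 (copying the install folder out of the user profile) can be scripted. The Python sketch below is only an illustration: Python is not part of the setup described here, and the function name `move_unifi_install` is hypothetical. It assumes the installer's default location under `%USERPROFILE%`, refuses to overwrite an existing destination, and copies rather than moves, so you can delete the original by hand once you have checked the copy.

```python
import shutil
from pathlib import Path


def move_unifi_install(source: Path, destination: Path) -> Path:
    """Copy the UniFi install tree to a location not tied to a user profile.

    Hypothetical helper: it copies (it does not delete the source) so the
    original can be removed manually after the copy has been verified.
    """
    # Sanity check: a UniFi install contains lib\ace.jar.
    if not (source / "lib" / "ace.jar").is_file():
        raise FileNotFoundError(f"{source} does not look like a UniFi install")
    # Never overwrite an existing folder at the destination.
    if destination.exists():
        raise FileExistsError(f"{destination} already exists; refusing to overwrite")
    shutil.copytree(source, destination)
    return destination


if __name__ == "__main__":
    import os

    # Assumed default installer location and the target folder used in this post.
    src = Path(os.environ["USERPROFILE"]) / "Ubiquiti UniFi"
    dst = Path(r"C:\ProgramData\Ubiquiti UniFi")
    print("Copied to", move_unifi_install(src, dst))
```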

Running as a service

Since we want the controller to always run and behave like a service, we have some extra work to do. Ubiquiti documents this at https://help.ubnt.com/hc/en-us/articles/205144550-UniFi-Run-the-Controller-as-a-Windows-service and I just adapted it to my path.

1. Close any instances of the UniFi software on the computer. If you just installed the UniFi controller, make sure to open it once by using the icon on the desktop or within the start menu. Once it says “UniFi Controller (a.b.c) started.” you can close the controller program. This is needed to generate some required files for the service to work.

2. Open the command prompt as an Administrator. For example, on Windows 10, right click on the Start Menu and choose “Command Prompt (Admin)”.

3. Change directory to the location of UniFi on your computer. With the install moved as described above, that is (adjust the path if you chose a different folder): cd "C:\ProgramData\Ubiquiti UniFi\"

4. Once in the root of the UniFi folder, issue the following (this installs the UniFi Controller service): java -jar lib\ace.jar installsvc

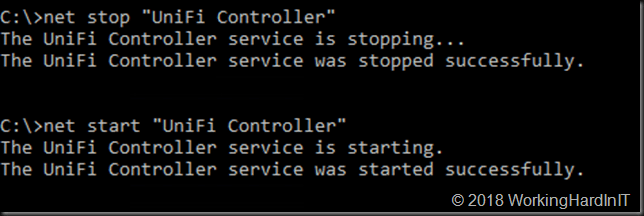

5. Once you’re at a new command prompt line, after it says “Complete Installation…”, issue the following: java -jar lib\ace.jar startsvc
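The two commands from steps 4 and 5 can also be run from a script, for example during a rebuild. This is a minimal Python sketch, assuming Python 3.9 or later and `java` on the PATH; the helper names and the `UNIFI_HOME` constant are hypothetical, and only the `installsvc` and `startsvc` actions come from the procedure above. Step 1 (starting the controller once so it generates its files) must already be done, and the script must run from an elevated prompt, just like the manual steps.

```python
import subprocess
from pathlib import Path

# Hypothetical constant: the folder the install was copied to in this post.
UNIFI_HOME = Path(r"C:\ProgramData\Ubiquiti UniFi")


def ace_command(action: str) -> list[str]:
    """Build 'java -jar lib\\ace.jar <action>', as used in steps 4 and 5."""
    return ["java", "-jar", str(Path("lib") / "ace.jar"), action]


def install_and_start_service(home: Path = UNIFI_HOME) -> None:
    # cwd=home plays the role of the cd in step 3; check=True stops on errors.
    for action in ("installsvc", "startsvc"):
        subprocess.run(ace_command(action), cwd=home, check=True)


if __name__ == "__main__":
    install_and_start_service()  # run from an Administrator prompt
```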

Installing a proper certificate

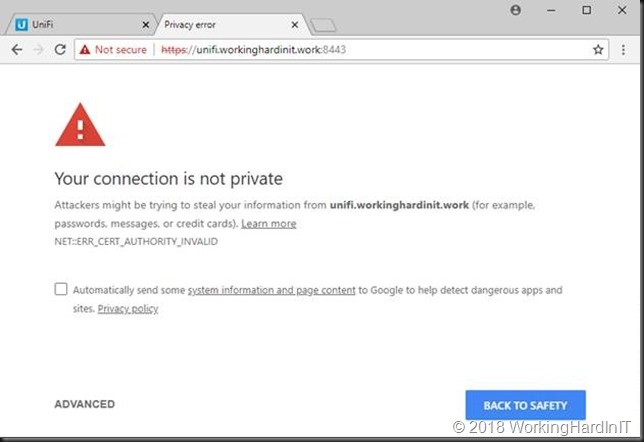

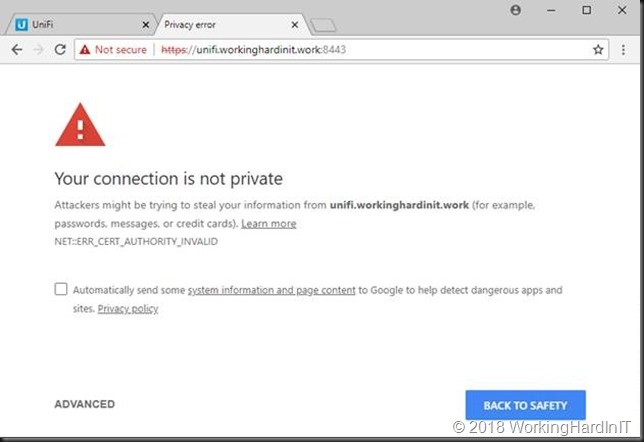

After adding an A record or CNAME for the FQDN to your DNS infrastructure, you will still get a security warning, as we haven’t installed a proper certificate yet.

Let’s fix this unprofessional-looking first view of your controller web application! We’ll use a recent certificate from either a corporate or a public PKI. Take your pick; free public certificates are available if you need one.

I’m using a wildcard certificate and will show you how to implement it with the UniFi controller. The trick is to replace the default keystore with a custom one to which you have added your certificate. There are nice tools for that, and the exact method will vary a bit. This is what I did. Note that you can do this on your workstation; there is no need to do all this on the server running the UniFi controller. Keep that tidy.

Make sure you have your cert available (exported) as a pfx file.

The Windows application method

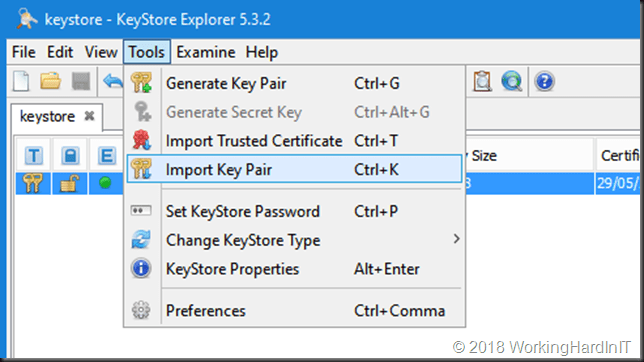

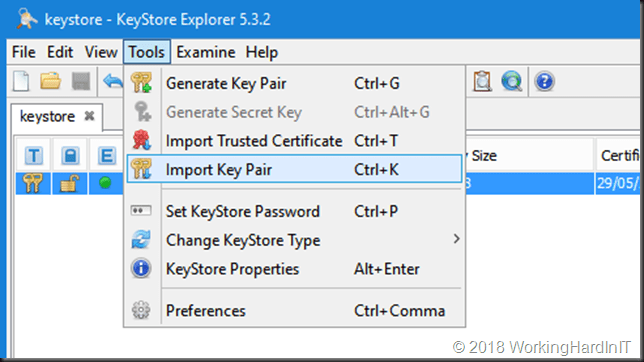

Download KeyStore Explorer (http://keystore-explorer.org/downloads.html) and install it on your PC; the default settings are just fine.

Have your certificate exported as pfx file with private key and the option “Include all certificates in the certification path if possible”.

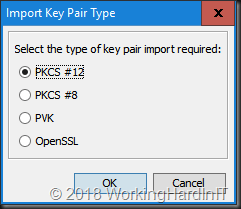

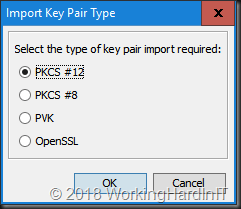

Run KeyStore Explorer and, under Tools, select “Import Key Pair”.

As the type, select PKCS #12.

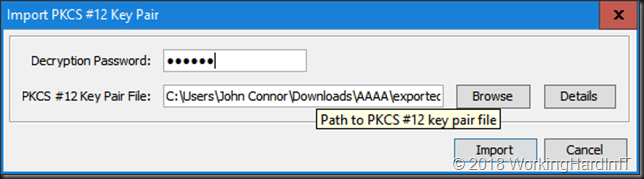

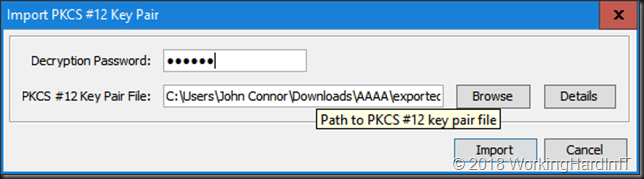

Browse to your pfx cert you created, fill out the correct password and click “Import”

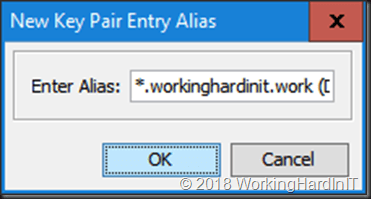

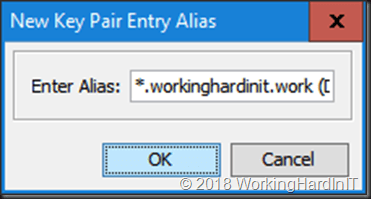

I’m happy with the default alias of * as I have a wildcard cert. To be clear, if you don’t have a wildcard cert, you should use unifi.domain.ext.

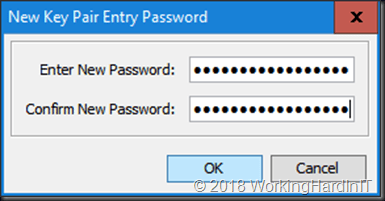

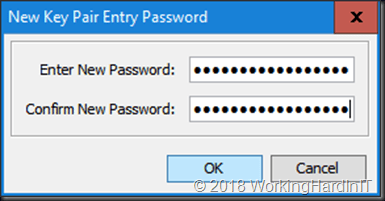

Enter the new key pair password. I use “aircontrolenterprise”, the default UniFi keystore password.

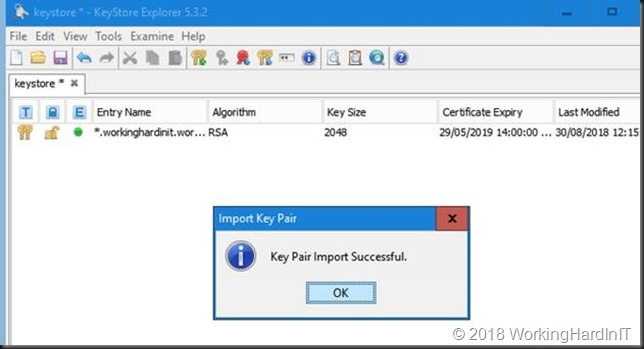

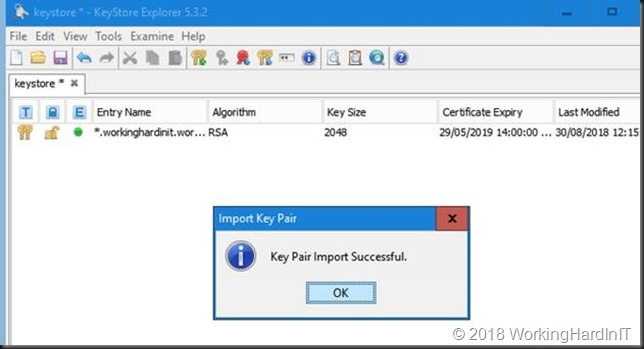

Click OK and you’ll see that your import was successful. Click OK again.

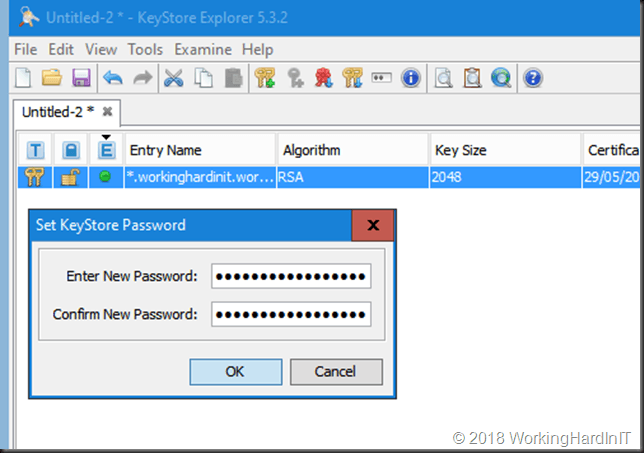

Now select your key pair and, under the File menu, select “Save As”.

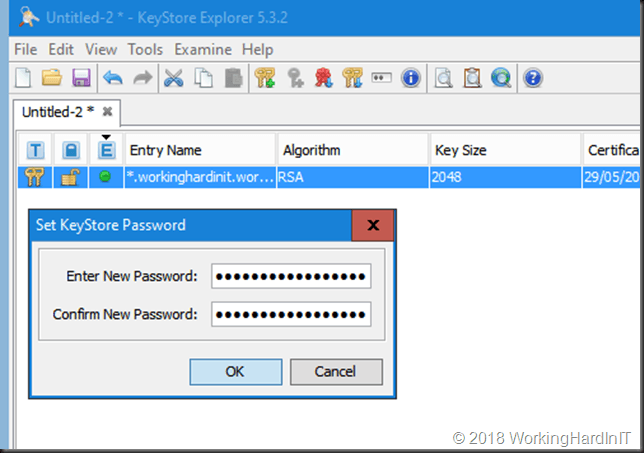

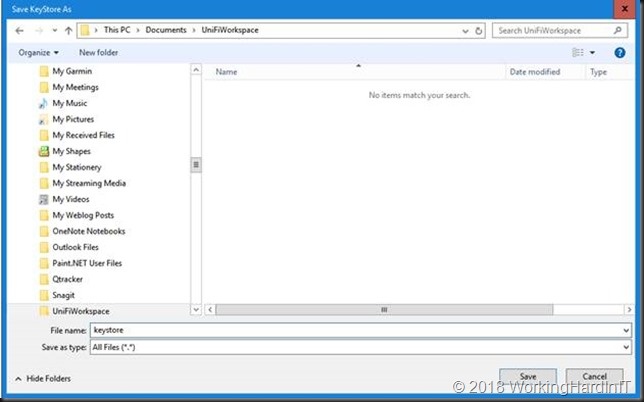

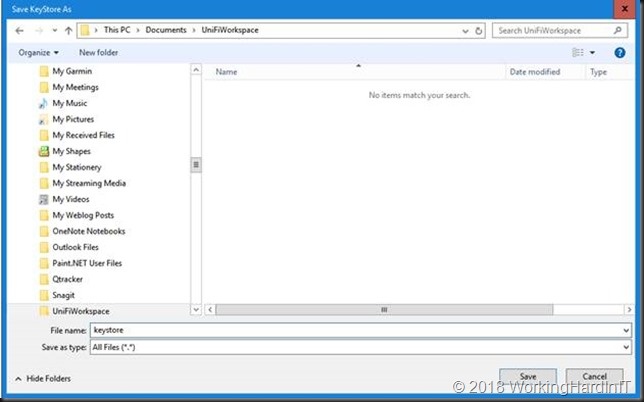

For the password, again, use aircontrolenterprise, click OK and fill out keystore for the file name.

Click Save; you’re done here.

I actually delete the imported key pair from KeyStore Explorer and also Shift+Delete the exported pfx. It’s better not to have these sorts of files lingering around on your workstation, even when using BitLocker. You should have a certificate management process for this.

The results of your work

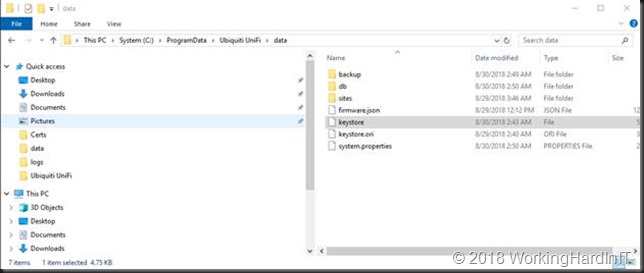

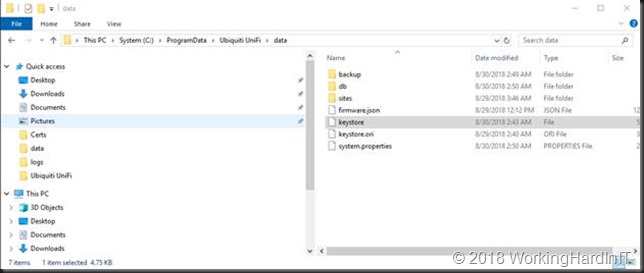

Now, on your controller VM, navigate to your data path; in my case it’s C:\ProgramData\Ubiquiti UniFi\data. Rename the original keystore file to keystore.ori and paste the one you created into this folder.
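The rename-and-copy can also be done with a short script, which makes it harder to forget the backup. This is a minimal Python sketch, assuming the data path used in this post; the function name `swap_keystore` is hypothetical. It refuses to overwrite an existing `keystore.ori`, so an earlier backup of the original keystore is never lost.

```python
import shutil
from pathlib import Path


def swap_keystore(data_dir: Path, new_keystore: Path) -> Path:
    """Rename data\\keystore to keystore.ori, then copy the new keystore in.

    Hypothetical helper; returns the path of the backup file.
    """
    current = data_dir / "keystore"
    backup = data_dir / "keystore.ori"
    # Keep the very first backup: never overwrite an existing keystore.ori.
    if backup.exists():
        raise FileExistsError(f"{backup} already exists; not overwriting it")
    current.rename(backup)                # raises if there is no keystore
    shutil.copy2(new_keystore, current)   # copy2 keeps the file timestamps
    return backup


if __name__ == "__main__":
    data = Path(r"C:\ProgramData\Ubiquiti UniFi\data")
    # Assumed location of the keystore you created on your workstation.
    print("Backup:", swap_keystore(data, Path(r"C:\SysAdmin\Certs\keystore")))
```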

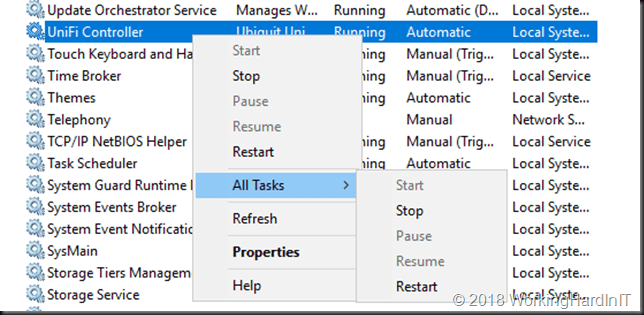

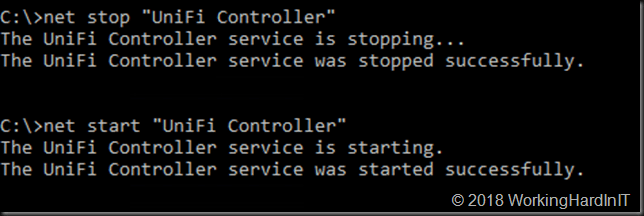

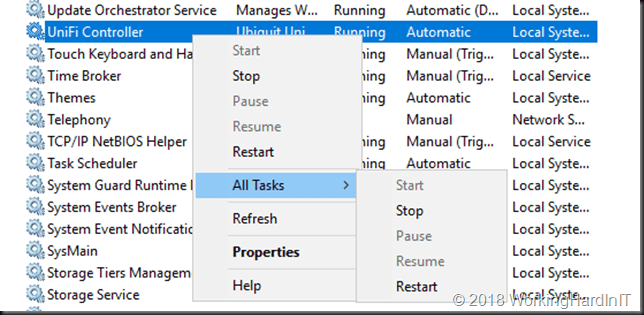

You then need to restart the UniFi Controller service, either in the GUI or via the command prompt.
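If you script the restart, it helps to wait until the controller is listening again instead of guessing a delay. This Python sketch assumes Python 3.9 or later, `java` on the PATH, and that an `ace.jar` `stopsvc` action exists alongside the `startsvc` action used earlier (an assumption, not something shown in this post); the helper names are hypothetical. `wait_for_port` only checks that TCP port 8443 accepts connections, not that the web application is fully initialized.

```python
import socket
import subprocess
import time
from pathlib import Path

# Hypothetical constant: the install folder used in this post.
UNIFI_HOME = Path(r"C:\ProgramData\Ubiquiti UniFi")


def restart_service(home: Path = UNIFI_HOME) -> None:
    # ASSUMPTION: "stopsvc" is the counterpart of the documented "startsvc".
    for action in ("stopsvc", "startsvc"):
        cmd = ["java", "-jar", str(Path("lib") / "ace.jar"), action]
        subprocess.run(cmd, cwd=home, check=True)


def wait_for_port(host: str, port: int = 8443,
                  timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll until host:port accepts a TCP connection or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)  # refused or timed out: try again shortly
    return False


if __name__ == "__main__":
    restart_service()
    up = wait_for_port("unifi.workinghardinit.work")
    print("controller is listening" if up else "controller did not come up in time")
```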

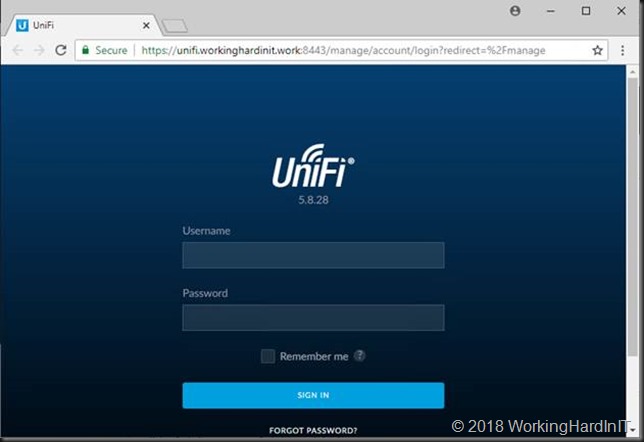

Give the controller 10 seconds to get going properly and click your UniFi Dashboard shortcut to browse to the application. As you can see below, we now have a much better user experience. This is actually the logon screen you get after you’ve run through the initial setup wizard when you first launch the application.

We now have a well-behaved web application to securely access the UniFi controller and manage the Wi-Fi setup.
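To check the result without a browser, you can open a TLS connection to the controller with normal certificate and hostname verification. This Python sketch uses only the standard `ssl` module; the function names are hypothetical, and it assumes Python trusts the same CAs as your browser. If the certificate is not trusted or does not match the FQDN, `wrap_socket` raises `ssl.SSLCertVerificationError`, which corresponds to the browser warning you saw before installing the certificate.

```python
import socket
import ssl


def fetch_peer_cert(host: str, port: int = 8443) -> dict:
    """Connect with full verification and return the server certificate."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def dns_names(cert: dict) -> list[str]:
    """Return the DNS entries from the certificate's subjectAltName."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


if __name__ == "__main__":
    cert = fetch_peer_cert("unifi.workinghardinit.work")
    print("Certificate verified; DNS names:", dns_names(cert))
```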

The native java tools method

If you prefer, you can use the native Java tools to do the same thing as with the KeyStore Explorer app. Replace the KeyStore Explorer steps above with the ones below.

First, list the contents of the exported pfx to find the alias of its key entry:

C:\Program Files\Java\jre1.8.0_181\bin>Keytool.exe -list -keystore C:\SysAdmin\Certs\exported_wildcard_workinghardinit_work.pfx -storetype pkcs12

This prompts for your password and outputs:

Enter keystore password:

Keystore type: PKCS12

Keystore provider: SunJSSE

Your keystore contains 1 entry

1524853e062d1785ac5ebedb44a61065, Aug 30, 2018, PrivateKeyEntry,

Certificate fingerprint (SHA1): 7A:82:FC:6E:2D:4D:79:F2:43:7A:FE:57:48:BE:13:FB:C4:AF:ED:71

Then import the key entry into a new keystore, passing that alias as -srcalias:

C:\Program Files\Java\jre1.8.0_181\bin>keytool -importkeystore -srcstoretype pkcs12 -srcalias 1524853e062d1785ac5ebedb44a61065 -srckeystore C:\SysAdmin\Certs\exported_wildcard_workinghardinit_work.pfx -keystore C:\SysAdmin\Certs\keystore -destalias *.workinghardinit.work

Importing keystore C:\SysAdmin\Certs\exported_wildcard_workinghardinit_work.pfx to C:\SysAdmin\Certs\keystore…

Enter destination keystore password: aircontrolenterprise

Re-enter new password: aircontrolenterprise

Enter source keystore password: aircontrolenterprise (this is the password you used to protect the exported pfx; it can be anything)

Warning:

The JKS keystore uses a proprietary format. It is recommended to migrate to PKCS12 which is an industry standard format using “keytool -importkeystore -srckeystore C:\SysAdmin\Certs\keystore -destkeystore C:\SysAdmin\Certs\keystore -deststoretype pkcs12”.
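The alias printed by `keytool -list` is what goes into `-srcalias`. A small Python sketch can pick it out of the listing and build the import command with the same flags as above. This is an illustration only: Python is not part of the original procedure, the regular expression assumes the entry-line layout shown in the output above, and the function names are hypothetical. It assumes `keytool` is on the PATH; otherwise use the full path under `C:\Program Files\Java\jre1.8.0_181\bin`.

```python
import re
import subprocess

# Entry lines in "keytool -list" output look like:
#   1524853e062d1785ac5ebedb44a61065, Aug 30, 2018, PrivateKeyEntry,
ENTRY_LINE = re.compile(r"^(?P<alias>[^,]+), .*, (?P<kind>\w+Entry),$")


def private_key_aliases(keytool_list_output: str) -> list[str]:
    """Return the aliases of all PrivateKeyEntry lines in keytool -list output."""
    aliases = []
    for line in keytool_list_output.splitlines():
        match = ENTRY_LINE.match(line.strip())
        if match and match.group("kind") == "PrivateKeyEntry":
            aliases.append(match.group("alias"))
    return aliases


def import_command(pfx: str, src_alias: str, keystore: str, dest_alias: str) -> list[str]:
    """Build the keytool -importkeystore call shown above (same flags)."""
    return [
        "keytool", "-importkeystore",
        "-srcstoretype", "pkcs12",
        "-srcalias", src_alias,
        "-srckeystore", pfx,
        "-keystore", keystore,
        "-destalias", dest_alias,
    ]


if __name__ == "__main__":
    # Text copied from the "keytool -list" run above.
    listing = "1524853e062d1785ac5ebedb44a61065, Aug 30, 2018, PrivateKeyEntry,"
    (alias,) = private_key_aliases(listing)  # expect exactly one key entry
    cmd = import_command(
        r"C:\SysAdmin\Certs\exported_wildcard_workinghardinit_work.pfx",
        alias,
        r"C:\SysAdmin\Certs\keystore",
        "*.workinghardinit.work",
    )
    # keytool still prompts for the destination and source passwords.
    subprocess.run(cmd, check=True)
```

Passing the arguments as a list means no shell is involved, so `*.workinghardinit.work` reaches keytool literally instead of being treated as a wildcard.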

Conclusion

Ubiquiti delivers value-for-money Wi-Fi solutions. The gear is good and affordable, with manageability options that serve the majority of needs for the SME. It’s perfect for the more demanding SOHO environment.

Ubiquiti offers flexibility but also requires some “tweaking” to get things just right. This goes for both the software installation (fixing some default installation choices and installing a certificate) and some hardware shortcomings (installing quieter fans).

For many people, a virtual machine with Windows is something they already have the infrastructure for. It fits perfectly into their existing operational processes. A virtual machine also fits well into many customers’ existing backup and restore scenarios. It can also easily be checkpointed, so you can revert to a known good state. This is an extra benefit in case something goes wrong during an upgrade or update. Combined with the Auto Backup configuration of the UniFi controller, this covers most bases for quick recovery. Not many people can restore their Raspberry Pi or appliance that fast.

We chose Windows Server 2019 for this demo because we wanted to future-proof the deployment: we want to deliver the controller on an OS that will serve the customer well for many years to come.

To recap: first I showed you how to improve on the default installation. Then we made the UniFi controller run as a service. Finally, I configured an SSL certificate for the controller app. I hope you liked it and that it helps you out.

![clip_image001_thumb[1] clip_image001_thumb[1]](https://blog.workinghardinit.work/wp-content/uploads/2018/08/clip_image001_thumb1_thumb.png)

![clip_image003_thumb[1] clip_image003_thumb[1]](https://blog.workinghardinit.work/wp-content/uploads/2018/08/clip_image003_thumb1_thumb.png)