VEEAM is also keeping us on our toes here at Ignite in Chicago. They just publicly announced the beta of a new free tool that looks extremely handy, VEEAM FastSCP. It’s a tool that enables you to copy files in and out of Azure virtual machines without the need for a VPN. People who have been working with IaaS in Azure for labs or production know that tasks that are simple on premises can be a bit convoluted in the cloud without a VPN or ExpressRoute to Azure.

Until today, our options without a VPN (which would let us use file shares / SMB) were to use either RDP, which gives us 2 options:

- Direct copy/paste (limited to 2GB)

- Mapped local drives in your VM

or leverage the portability of a VHD.

So why is VEEAM FastSCP a big deal? Well, the virtual hard disk method is painstakingly tedious. Putting data into a VHD and moving that around to get data in and out of a virtual machine is a nice workaround but hardly a great solution. It works and can be automated with PowerShell, but you only do it because you have no other choice.

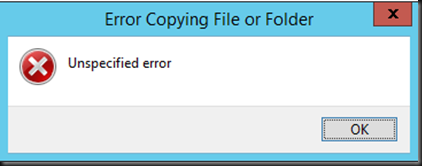

The first RDP method (copy/paste) is fast and easy, but it is hard to automate and it’s a bit silly to launch an RDP session just to copy files. It also has a file size limit of 2GB. Anything bigger will just throw an error.
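If you do rely on copy/paste, it can help to know up front which files will hit that limit. Here is a minimal Python sketch that lists them. The function name `files_over_limit` is just my own illustration, and I am assuming the 2GB limit means 2 GiB (2 * 1024**3 bytes), so treat the threshold as approximate.

```python
from pathlib import Path

# Assumption: the 2GB copy/paste limit is treated as 2 GiB here.
RDP_CLIPBOARD_LIMIT_BYTES = 2 * 1024**3


def files_over_limit(folder, limit=RDP_CLIPBOARD_LIMIT_BYTES):
    """Return the files under folder (recursively) larger than limit, sorted by path."""
    return sorted(
        p for p in Path(folder).rglob("*")
        if p.is_file() and p.stat().st_size > limit
    )
```

Calling `files_over_limit(r"C:\Transfer")` (a hypothetical folder) returns the paths you would have to move some other way.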

Another option is to leverage your mapped local disks in the VM but that’s not a great option for automation either.

Sure you could start running FTPS or SFTP servers in all your VMs but that’s borderline silly as well.

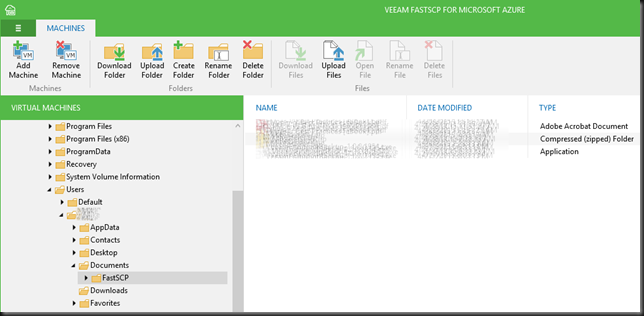

VEEAM FastSCP for Microsoft Azure

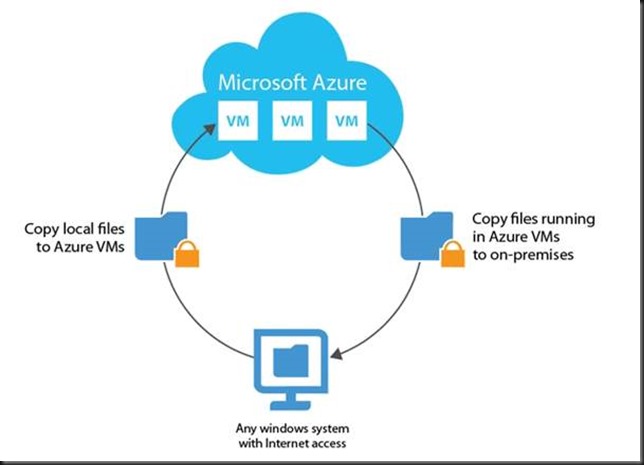

VEEAM is offering this tool as a quick, secure and easy way to copy files in and out of Azure virtual machines without the need for a VPN or turning your virtual machine into an easy target for the bad people of the world. Do note this is not meant for blob storage or anything else but an Azure virtual machine. There are plenty of tools to go around for blob storage already.

The tool connects to the PowerShell endpoint port of your public IP address. No VPN, 3rd party tool or additional encryption setup is required; it’s all self-contained. Inside the VM it’s based on WinRM.
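Since everything hinges on that PowerShell endpoint being reachable from where you sit, a quick check can save some head scratching. The Python sketch below is not part of FastSCP and says nothing about how the tool works internally. It only builds the conventional WinRM HTTPS URL and tests whether a TCP connection to the port succeeds. Port 5986 is the WinRM-over-HTTPS default; the public port mapped to your VM's endpoint may well differ, so treat it and the function names as assumptions.

```python
import socket

# Assumption: 5986 is the WinRM-over-HTTPS default port. The public port
# mapped to your VM's PowerShell endpoint may be different.
DEFAULT_WINRM_HTTPS_PORT = 5986


def winrm_https_url(host, port=DEFAULT_WINRM_HTTPS_PORT):
    """Build the conventional WinRM HTTPS endpoint URL for a host."""
    return f"https://{host}:{port}/wsman"


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS lookup failures.
        return False
```

For a hypothetical VM named `myvm.cloudapp.net`, `is_port_open("myvm.cloudapp.net", 5986)` tells you whether the endpoint is reachable at all before you start blaming credentials or certificates.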

This will not interfere with your normal RDP or PowerShell sessions at all, so no worries there. When using this tool there is also no file size limit to worry about like with copy/paste over RDP.

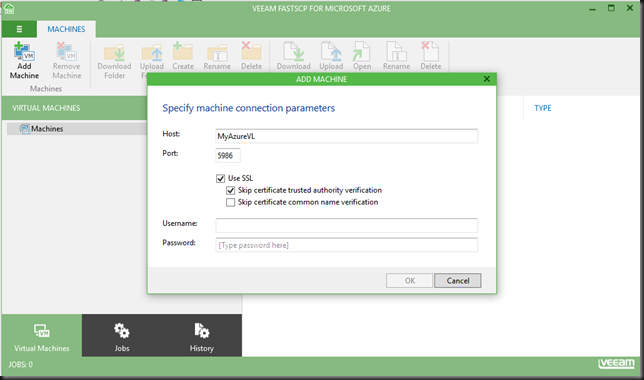

Via the GUI you connect to the Virtual Machine with your credentials. After that you can browse the file system of that VM and copy data in and out. All of this is secured over SSL.

A nice thing is that you don’t need to keep the GUI open after you’ve started the copy. Just close it and things will get done. No babysitting required.

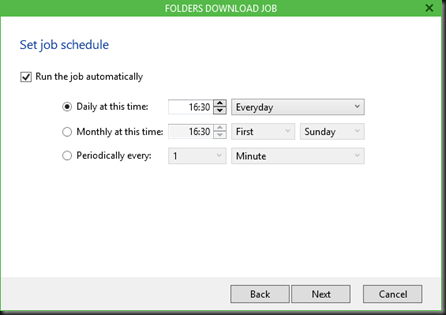

It’s all wizard driven, so it’s very easy, and to top it all off you can schedule jobs, making it a perfect little automation tool that bypasses the limitations we’re facing right now.

Some use cases

Anyone who has an IaaS lab in Azure will appreciate this tool, I think. It’s quick and easy to get files in and out of your VMs and you can schedule this.

Backups. I regularly create a backup of my WordPress blog and the MySQL database to file. While these are protected in the cloud themselves, I love backup in depth and having an extra option in case plan A fails. Using the built-in scheduler I can now easily download a copy of those files just in case Azure goes south longer than I care to suffer. Having an off-cloud copy is just another option to have when Murphy comes knocking.

This is another valuable tool in my toolkit courtesy of VEEAM and all I can say is: thank you! To get it you can register here and download the Beta bits.