Introduction

In our previous two blog posts on Veeam and SMB 3 we’ve seen how and when Veeam Backup & Replication can leverage SMB Multichannel and SMB Direct. See Veeam Backup & Replication leverages SMB Multichannel and Veeam Backup & Replication Preferred Subnet & SMB Multichannel.The benefits of this are more bandwidth, high availability, better throughput and with RDMA low latency and CPU offload. What’s not to like, right? In a world where the compute and networks need keeps rising due to the storage capabilities (flash storage) pushing the limits this is all very welcome.

We have also seen earlier that Veeam B & R 9.5 leverages ReFSv3 in Windows Server 2016. This provides clear and present benefits in regards to space efficiencies and speed with many backup file related operations. Read Veeam Leads the way by leveraging ReFSv3 capabilities

When it comes to ReFSv3 in Windows Server 2016 most of the focus has gone to solutions based around Storage Spaces Direct (S2D). That’s a great solution and it is the poster child use case of these technologies.

But what other options do you have out there to build efficient and effective high available backup targets creatively except for S2D? What if you would like to repurpose existing hardware to build those? Let’s take a look together at how continuous available general purpose file shares & ReFSv3v3 provide high available backup targets

CSV, S2D, ReFSv3 & Archival Data

In Windows Server 2016, traditional shared storage (iSCSI, FC, Shared SAS, Shared RAID) with CSV are not recommend to be used with ReFSv3. Why isn’t exactly clear. The biggest impact you’ll see is the performance difference when not writing to the owner node of the CSV in this use case. Even with a well configured RDMA network that difference is significant. But that doesn’t mean that the performance is bad. It’s just that many of the super-fast meta data operations are relatively and significantly slower when compared each other, not that any of these two are slow.

Microsoft does state that an S2D with ReFSv3 and SOFS shares can be used for archival data. Storage spaces and ReFSv3 also have the benefit of offering automatic repair of corrupt data from a redundant copy on the fly even when needed. So yes, the best know supported scenario is this one.

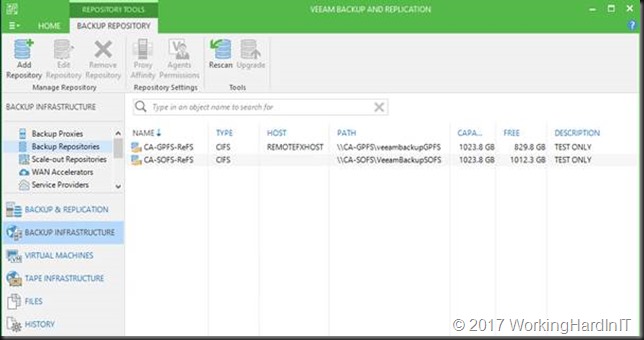

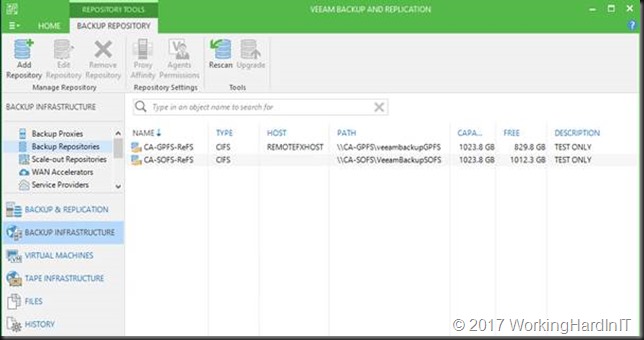

Continuous available general purpose file shares and ReFSv3 provide high available backup targets

But what if we need a high available backup target and would love to leverage ReFSv3 with Veeam Backup & Replication 9.5? Well, you can have 95% of your cookie and eat it to. All this without ignoring the cautions offered.

We could set up SOFS shares on a Windows Server 2016 Cluster with ReFSv3 with traditional shared storage. Some storage vendors do state this is supported actually.

That only means you don’t have the auto repair functionality ReFSv3 combined with storage spaces offers. But perhaps you want to avoid the risk of using ReFSv3 with CSV in a non S2D scenario all together. What you could do is forgo ReFSv3 and use NTFS. How well this will work for archival data or backup is something you’ll need to test and find out how well this holds up. There is not much info is out there, only other cautions and warnings that might keep you up at night.

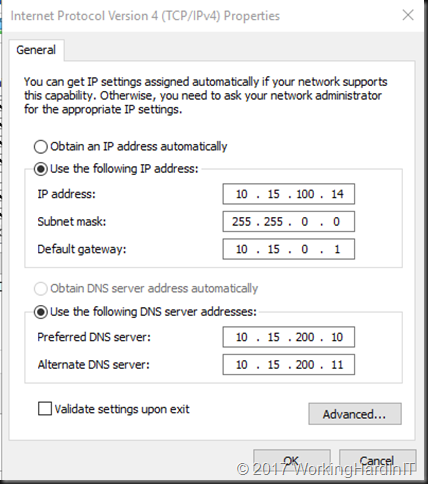

There is another scenario however and that is using Windows Server 2016 failover clustering to set up continuously available general purpose file shares that leverage SMB3 transparent failover.

The good news is that general purpose file shares (no CSV) do work consistently with ReFSv3 because such a share/LUN is only exposed on one cluster node at the time, the owner. By having multiple shares and setting preferred owners we can load balance the workload across all cluster nodes.

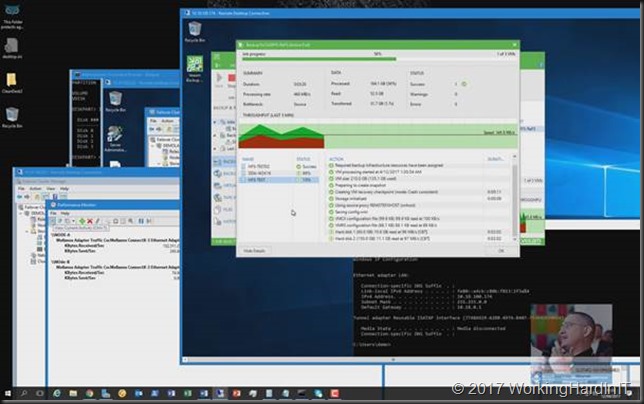

Thank to continuous availability for general file shares and SMB 3 transparent failover we can still get a high available backup target this way. The failover is fast enough to make this happen and all we see with Veeam Backup & Replication is a short pause in throughput before it resumes after failover. To put the icing on the cake, you can leverage SMB multichannel SMB Direct for both backup and restores.

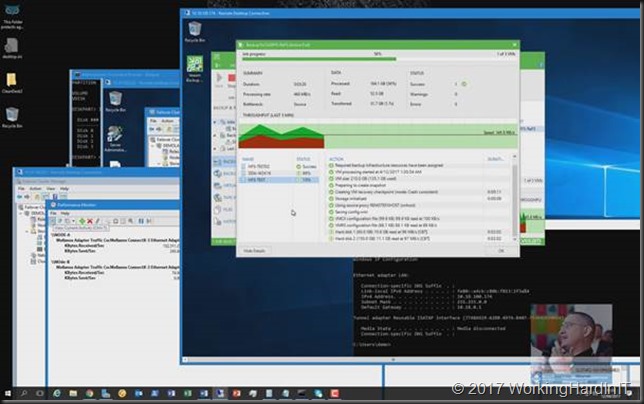

I would take a sizeable whitepaper to walk through the setup so instead I’ll show you a a quick video of a POC we did in the lab here https://vimeo.com/212886392.

If you want to learn more come to the community & other conferences I’m speaking at and will be around for Ask The Experts time opportunities. I’ll be at the German Hyper-V community meet up, The Cloud & Datacenter Conference in Germany 2017, Dell EMC World 2017 and last but not least VeeamON 2017 (see May 2017 will be a travelling month).

Conclusion

What do you lose?

Potentially there is one big loss in regards to the capabilities of ReFSv3 with this solution when you are not using storage spaces. This is that you lose the capability to automatic repair of corrupt data. The ability of ReFSv3 to do so is tied into the redundant copies of Storage Spaces (parity/mirror).

What do you get?

That’s fine, the strength of this design is that you get the speed and space efficiencies of ReFSv3and high available backup targets in way more scenarios than “just” S2D. After all, not everyone is in a position to choose their storage fabric for backup targets green field or at will. But they might be able to leverage existing storage and opt to use SMB 3 for their data transport.

So even if you can’t have it all, you can still build very good solutions. It offers ReFSv3 benefits and high availability for your backup target via transparent failover with SMB transparent failover on continuous available general purpose file shares. This also only requires Windows Server 2016 Standard Edition, which is a cost saving. You get to leverage SMB Multichannel and SMB Direct. All this while not ignoring the cautions of using ReFSv3 in certain scenarios.

On top of that, if you use NTFS with this approach it will also work for Windows Server 2012 (R2) as the OS for the backup target cluster hosts.

Disclaimer

I do not work for or at Microsoft, nor am I perfect or infallible just because I’m an MVP. You’ll have to do your own testing and validation. From our testing and without ReFSv3 bugs ruining the show, to me this is a very valid and cost effective approach.