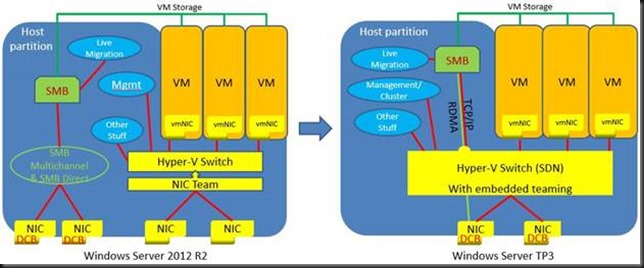

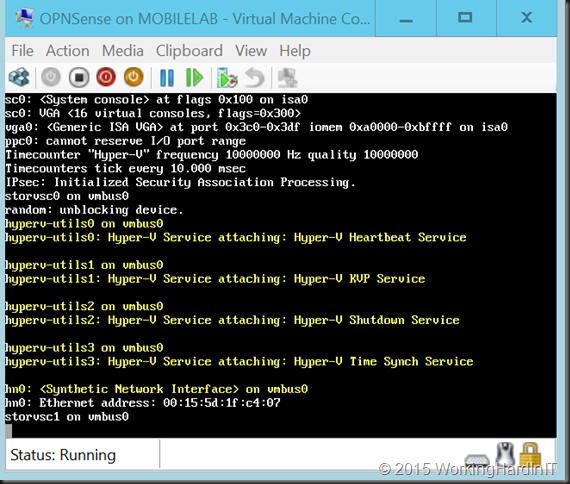

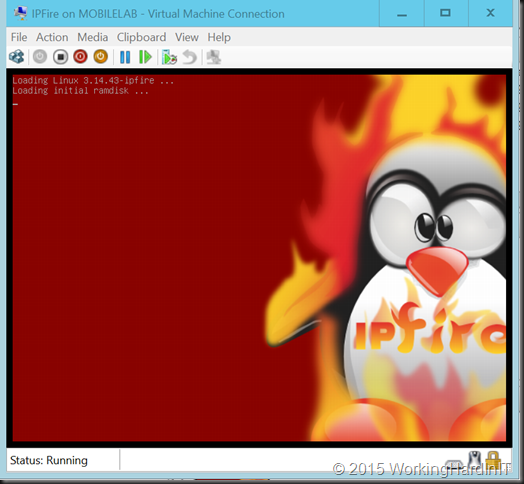

When doing lab work or real-life implementations, you’ll need to go beyond the basic 101 stuff every now and then to build solutions. This is especially true when using virtual network appliances. Networking means you’ll be dealing with Link Aggregation Groups, trunking, MLAG, routing, LACP … in short, the tools of the trade. In my experience, I use trunking in Hyper-V mostly to mimic real-world scenarios where trunking is used (firewalls, routers, load balancers). These devices tend to have a limited number of usable ports in real life. So even before we run out of physical ports on the Hyper-V host, we leverage trunks to mimic the real-life environment. This leads us to trunking with Hyper-V networking.

I for one have used this on 10Gbps ports on both physical and virtual load balancers in the uplink to the switches. As you can imagine, when doing redundant (teamed) cabling with HA load balancers you’re consuming 10Gbps ports, and not all VLANs warrant a dedicated 10Gbps uplink, even if you had them.

Trunking and VLANs are the way we deal with this in the network hardware world, and we can do the same in Hyper-V. In the Hyper-V Manager GUI you will not find a way to define a trunk on a vNIC attached to a vSwitch, but it can be done via PowerShell. So please do not reject Hyper-V as not being up to the job. It is. Let me show you how you can do trunking with Hyper-V networking.

Generally, on a clean install, I dump the default vNIC. DO NOT do this blindly on an already deployed appliance virtual machine.

```powershell
# Delete the default network adapter
Remove-VMNetworkAdapter -VMName VLM200-1 -Name "Network Adapter"
```

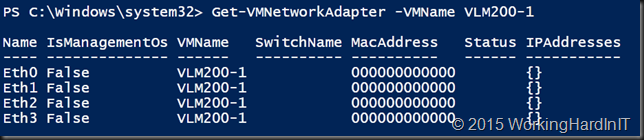

I then add the number of Ethernet ports I need on my Kemp Technologies virtual Load Master.

```powershell
# Create the VLM200 ports (4, like its physical counterpart): Eth0 through Eth3
for ($Count = 0; $Count -le 3; $Count++)
{
    Add-VMNetworkAdapter -VMName VLM200-1 -Name "Eth$Count"
}
```

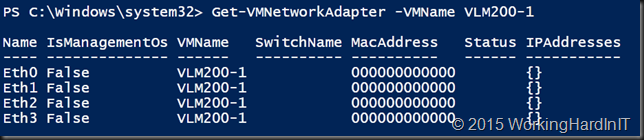

A peek at our handiwork via `Get-VMNetworkAdapter -VMName VLM200-1` shows our 4 ports.

As you can see, I like to give my network adapters distinctive names. In combination with the switch name, this helps me identify the NICs more easily. Combine that with a good naming policy inside the VM if possible. Windows Server 2016 lets you hot add and remove vNICs and introduces new “Device Naming” functionality (see Hot add/remove of network adapters and enabling device naming in Windows Server Hyper-V), which only makes the experience better in terms of uptime and automation.
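As a small illustration of the naming approach, here is a minimal sketch that turns on device naming for the four vNICs created earlier and lists them next to their switch. It assumes the VM name and adapter names used in this post, and, as far as I know, device naming only takes effect on generation 2 virtual machines whose guest OS supports it, so treat it as a starting point rather than something that will work on every appliance.

```powershell
# Assumption: VM and adapter names are the ones used in this post.
$vmName = 'VLM200-1'

foreach ($nicName in 'Eth0', 'Eth1', 'Eth2', 'Eth3') {
    # Expose the vNIC name to the guest OS (where the guest supports it)
    Set-VMNetworkAdapter -VMName $vmName -Name $nicName -DeviceNaming On
}

# Show each adapter with the switch it is connected to and its device naming state
Get-VMNetworkAdapter -VMName $vmName |
    Select-Object Name, SwitchName, DeviceNaming
```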

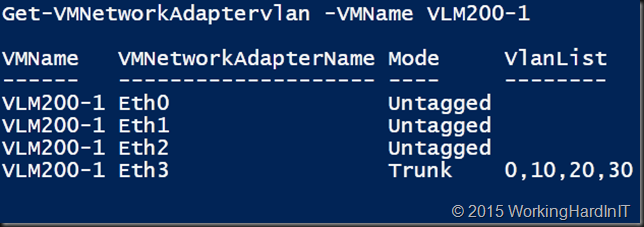

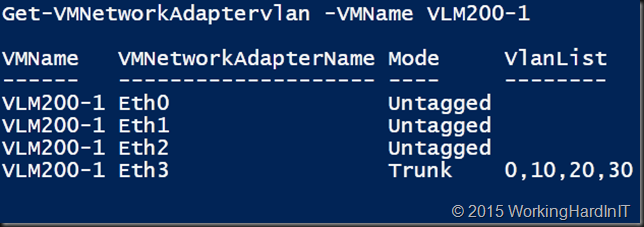

Now let’s say we use Eth0 for management and Eth1 for the HA heartbeat. That leaves Eth2 and Eth3 for workloads. We could even aggregate these (redundancy, heartbeat). In this demo we’ll configure Eth3 as a trunk with a list of allowed VLANs. We keep the native VLAN ID at 0, which is the default. Change it only in the specific situations where the native VLAN has been changed in the network.

```powershell
# Trunk Eth3 and add the required VLAN IDs
Set-VMNetworkAdapterVlan -VMName VLM200-1 -VMNetworkAdapterName "Eth3" -Trunk -AllowedVlanIdList "10,20,30" -NativeVlanId 0
```

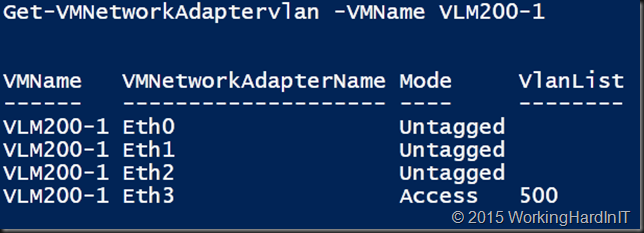

This gives us what we need to get our network appliance going:

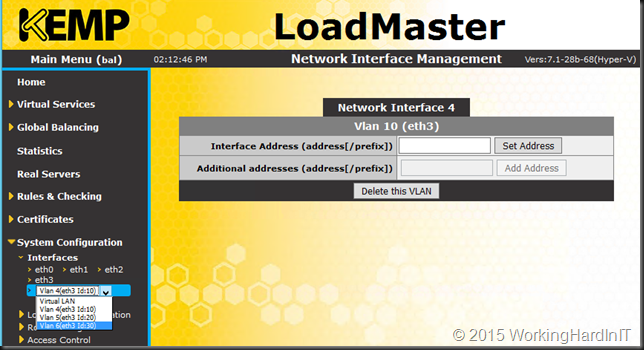

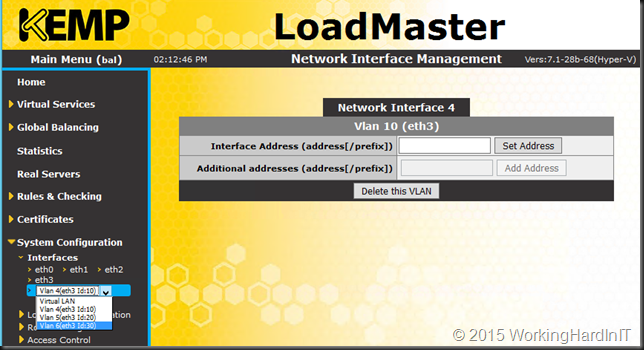
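If you prefer to check the result from the command line, the VLAN settings can be read back with `Get-VMNetworkAdapterVlan`. This is a minimal sketch using the VM and adapter names from this post; the property names in the `Format-List` call are the ones I believe the returned object exposes, so consider them an assumption and use `Format-List *` if your output differs.

```powershell
# Read back the VLAN configuration of the trunked vNIC
Get-VMNetworkAdapterVlan -VMName VLM200-1 -VMNetworkAdapterName "Eth3" |
    Format-List OperationMode, NativeVlanId, AllowedVlanIdList, AccessVlanId

# Or list the VLAN mode of every adapter on the VM in one go
Get-VMNetworkAdapterVlan -VMName VLM200-1
```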

In your virtual appliance you can now create VLANs on Eth3. How this shows up depends on the appliance; this example uses a Kemp Virtual Load Master, where we mimic a 4-port Load Master. Note that we’re not trunking because we ran out of the maximum supported number of NICs we can add to a virtual machine; we do it to mirror the physical appliance.

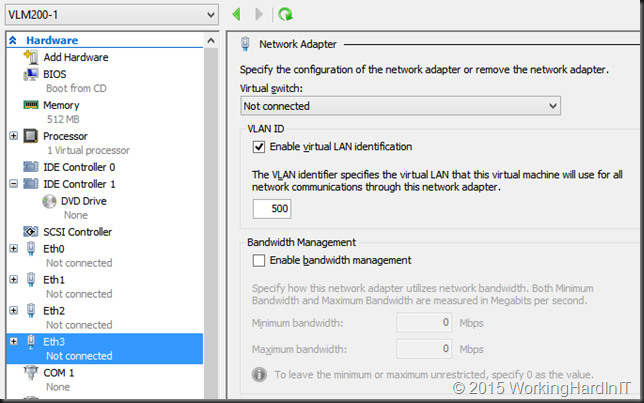

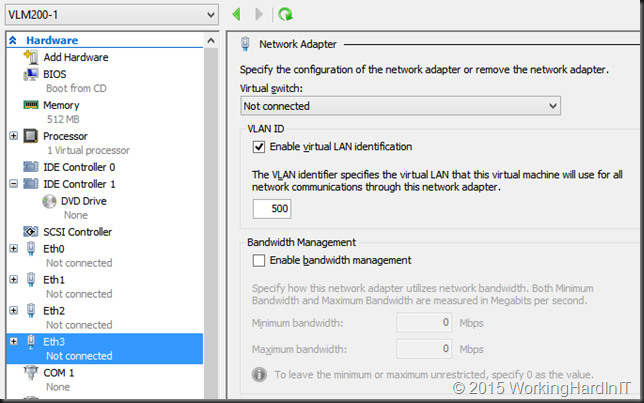

A word of warning: you will not see this trunk configuration in the settings via the GUI. Worse, manipulating the VLAN settings in the GUI will overwrite the trunk settings without a warning.

So be careful with the configuration of your virtual network appliance(s). As an example, I’ll touch the VLAN setting of Eth3 in the GUI and give it VLAN 500.

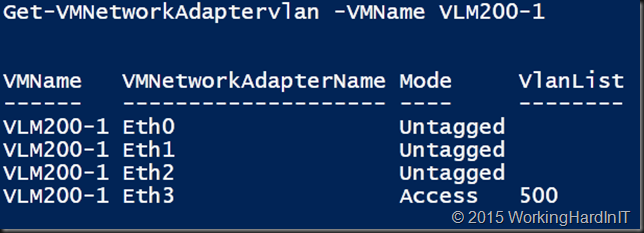

When we look at the VLAN settings of the appliance’s vNIC again, it is now in Access mode with VLAN 500. Ouch, that will seriously ruin your day in production! Be careful!

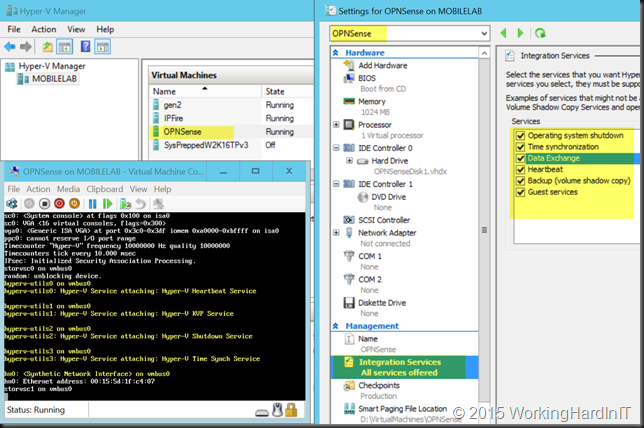
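Because the GUI can silently flip a trunk back to Access mode, it can be worth checking for that kind of drift in a script. The sketch below is one possible approach, not a built-in feature: `Test-TrunkSetting` is a hypothetical helper I made up for illustration, and it assumes the object returned by `Get-VMNetworkAdapterVlan` exposes `OperationMode`, `NativeVlanId` and `AllowedVlanIdList`. The VM name, adapter name and VLAN IDs are the ones from this post.

```powershell
# Hypothetical helper: returns $true when a vNIC VLAN setting still matches the intended trunk
function Test-TrunkSetting {
    param(
        [Parameter(Mandatory)] $VlanSetting,              # object from Get-VMNetworkAdapterVlan
        [Parameter(Mandatory)] [int[]] $ExpectedVlanIds,  # VLAN IDs the trunk should allow
        [int] $ExpectedNativeVlanId = 0
    )
    if ("$($VlanSetting.OperationMode)" -ne 'Trunk') { return $false }
    if ([int]$VlanSetting.NativeVlanId -ne $ExpectedNativeVlanId) { return $false }

    # Compare the allowed VLAN lists independent of their order
    $actual   = @($VlanSetting.AllowedVlanIdList | ForEach-Object { [int]$_ } | Sort-Object)
    $expected = @($ExpectedVlanIds | Sort-Object)
    return (($actual -join ',') -eq ($expected -join ','))
}

# Only query the VM when the Hyper-V cmdlets are available on this machine
if (Get-Command Get-VMNetworkAdapterVlan -ErrorAction SilentlyContinue) {
    $current = Get-VMNetworkAdapterVlan -VMName 'VLM200-1' -VMNetworkAdapterName 'Eth3'
    if (-not (Test-TrunkSetting -VlanSetting $current -ExpectedVlanIds 10, 20, 30)) {
        Write-Warning 'Eth3 is no longer trunked as intended; reapplying the trunk settings.'
        Set-VMNetworkAdapterVlan -VMName 'VLM200-1' -VMNetworkAdapterName 'Eth3' -Trunk -AllowedVlanIdList '10,20,30' -NativeVlanId 0
    }
}
```

Whether to reapply automatically or only raise the warning is a judgment call; in production I would lean towards alerting first, since changing VLAN settings underneath a running appliance has its own risks.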

On top of this, some appliances do not respond well to such misconfigurations on the switch side (both physical and virtual switches). This leads not only to service interruption but can also leave you unable to manage the appliance, requiring a reboot to recover.

Anyway, so yes, you can do trunking with Hyper-V networking on a vNIC, but this normally only makes sense if you have an appliance running that knows what to do with a trunk, such as a virtual firewall, router or load balancer.