Dell EMC Digital Transformation Goals at Dell EMC World

Introduction

As many of my blog readers will know, I am attending Dell EMC World this week in Las Vegas. Today we got our introduction to how they aim to help customers realize the digital transformation goals they have and, quite frankly, that their customers need. It is no secret that I have leveraged DELL hardware very effectively over the years to build highly performant solutions at a great price/value ratio, sometimes to the envy of my peers who saw those results. I’m here because I intend to continue doing so.

It is clear that DELL EMC is still very proud to be privately owned, and they mentioned this once again. This gives them the freedom and flexibility they need to outperform the competition. DELL EMC files SEC records, so there is nothing to hide. I do note they have a couple of publicly owned companies in the business now. That model has gone hybrid as well, it seems.

Given the vast amount of attention digital transformation gets nowadays, it will be no surprise that DELL EMC is focusing its efforts on facilitating this transition for its customers. After all, an unrelenting focus on real customer needs is one of the cornerstones of digital transformation. As such, DELL EMC is drinking its own champagne.

The digital transformation challenge

Things are changing fast, and as a consequence they also do not last as long. That means the need to move and deliver fast is clear and present, because the time span in which to deliver the ROI gets shorter as well. Together with that comes a perhaps more urgent need: the ability to change course fast when needed. However, to ensure digital transformation becomes more than buzz or hype, we need more than just agility and speed.

We need a modern, service-focused “serverless” IT architecture where the cloud model reigns due to its agility and elasticity. When we use cloud as a delivery model and not a location, we get the ability to leverage our architectures in public, hybrid and private environments without making any solution or technology the goal instead of the services we deliver to the customer. I call that “Service Resilience Engineering”, as this encompasses the need to focus on the services we deliver and not on infrastructure, architectures or technologies. This avoids being stuck in a location, a technology or a vendor, at least to the extent that is possible, as (public) cloud is by definition perhaps the biggest attempt at vendor lock-in at scale we have ever seen. This is so despite the valiant and sometimes successful efforts of many to avoid it. They also mention cost efficiency as a public cloud issue for customers. Cost, however, is a limited metric; value is a much better one.

Dell EMC has its sights set on helping customers do exactly that, without making the mistake of leaving existing needs behind or failing to cater to more recent and emerging technologies and trends.

Budgets are limited. This means the old and the new IT architecture have to merge and transform where needed. We often do not have the ability and budget to go 100% greenfield in all or most situations.

One of the ways Dell EMC hopes to facilitate this is via a cloud-like pricing model. That means that even in cases where on-premises infrastructure remains needed, customers can scale up and scale down. This leverages a pay-per-use model for components of their datacenter instead of the entire datacenter (Dell Technologies Rolls Out Flexible Consumption Models for IT from the Desktop to the Data Center). Personally, I would rather see smaller CAPEX efforts made more frequently, but that option is often not available. When it is not, in an OPEX-loving world that is very CAPEX shy, this initiative can only help.

Customers must do more than window dressing and avoid mere lip service to digital transformation. No matter what you call it, this will require a deep and broad understanding of user needs combined with an expert-level understanding of information technologies, architectures and designs. That means that business will need to accept the technology experts into the C-level positions, in the boardrooms and at the helm. The need for speed in delivery is too high and too specialized to waste time on old-school and very flawed models that see technology as a facility. Organizations trying to achieve digital transformation in that way will fail. Technology will lead the effort to deliver what customers require and demand. As DELL EMC puts it: “Every company is an IT company. Technology and your IT capabilities are at the center of driving digital transformation.” Having spoken with some customers here, and having seen how farmers back home are digitizing their business despite a lack of support from government and traditional IT providers, I agree.

There is no one size fits all. Before you get too excited and think that modernizing the data center will free up vast amounts of money for app development, you might be in for a surprise if you already have a decent datacenter environment. For many well-run companies there is not much more money to be saved there. Their costs for services and wages in business operations and app development already exceed their infrastructure costs by an order of magnitude. Optimizing the infrastructure further might not deliver that much. The big savings are not for the more up-to-date companies; those need to leverage their advantage now, not shave off more of ever less. So be ready for some major investments.

Some of the announcements

At Dell EMC World today, we saw announcements on the new 14th generation PowerEdge server models, which add all the tech updates that become available from Intel in the middle of this year (Dell EMC Drives IT Transformation With the New 14th Generation of PowerEdge Servers). Especially when it comes to VDI scalability and local NVMe storage, we will see major improvements. The other areas of improvement are security, ease of automation and manageability in a software-defined world. One excellent improvement is that it is shipping with native 25GbE support! Awesome, 10Gbps becomes the new 1Gbps, so to speak. 40Gbps is on the way out as 50/100Gbps take over. These improvements should keep us fully powered up until we see PCIe 4 arrive in the next server generation.

Storage-wise, we see lots of movement in the hyper-converged segment, where cloud consumption models become more outspoken (see New Dell EMC Hyper-Converged Infrastructure Advancements and Cloud-Like Consumption Model Ease Adoption). I do note that they do not mention Storage Spaces Direct in their HCI offering while they do in their SDS efforts. The lines blur, but while S2D is not limited to HCI, it does deliver that as well.

They continue their efforts towards more software-defined storage capabilities (see Dell EMC Software-Defined Storage Paves Way for Data Center Modernization). Note that this includes Microsoft Storage Spaces Direct on generation 14 servers, available in the second half of this year. That is great news for people not willing to carry the engineering and support effort of building it themselves.

It will be no surprise that there is an ever-stronger move towards all-flash solutions, which seems to be moving faster and more widely across the industry and customers (Dell EMC Introduces New All-Flash Storage Systems to Help Customers Modernize Their Data Centers and Transform IT).

I am happy to see that they also introduced the SC5020 to the great Compellent series of mid-range storage, which punches far above its perceived weight when configured properly. To me, the SC series has always been and remains a gem in the traditional SAN offerings. I do still wish a native, full-featured SMB3 offering were available in them, but for now we will do what we have been doing: build our own scale-out file servers against it where needed.

On the network side, it is clear DELL EMC keeps focusing its efforts on open networking (Dell EMC Powers IT Transformation with New Open Networking Products). Another noticeable fact is that the push towards 25/50 and 100Gbps truly picks up speed.

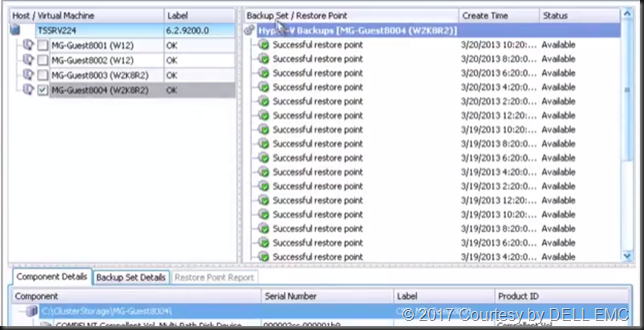

Dell EMC intends to remain a player in the data protection arena (Dell EMC Launches Integrated Data Protection Appliance and Expands Cloud Data Protection Portfolio). As this is an area I also focus on by leveraging commodity hardware, cloud and native in-box capabilities, I’m interested to see if the dedicated appliance vendors can keep up with other players in that field of endeavor when pitted against creative customers.

Conclusion

On top of the above, DELL EMC is highlighting the potential of artificial intelligence and virtual reality in the future. Then there is security. In a world where work is a thing that you do, not a place you go, mobility and security are and remain of paramount importance. Technology has to evolve to make this happen and leave bolted-on security solutions behind.

I hope to dive deeper into some of this as the conference continues and I get the chance to speak with industry experts, both DELL EMC employees and my peers, while here. Next to that, I am attending to provide feedback to DELL EMC on what we need and want in order to achieve our goals, which means delivering services that customers will gladly pay for.

Whether Michael Dell is more right than wrong will be determined by the market, as they say. I do note that if his vision does not materialize, it will not be due to a lack of managerial skills or salesmanship. He can sell a vision and drive a company.

In case you think DELL EMC tells me what or when to write, that is not the case. As my former account manager stated: “I will gladly let Didier provide feedback to management at DELL EMC, but they will have to accept that it will be direct and not always 100% positive. It is honest and that is when they will learn where they can improve.” I always operate on that principle.