How does one keep an IT infrastructure in top form? With care, knowledge, dedication and maintenance. For some this still comes as a surprise. To many, the job is done when a product or software is acquired, sold or delivered. After all, what else is there to be done?

Lots. True analysis, design and architecture require a serious effort. Despite the glossy brochures, the world isn’t as perfect and shiny as it should be. Experience and knowledge go a long way in making sure you build solid solutions that can be maintained with minimal impact on the services.

Maintenance must be one of the least appreciated areas of IT, yet it is valuable and necessary. The number of things we do that management, not even IT management, knows about is large. Let alone that they would understand what we do and why.

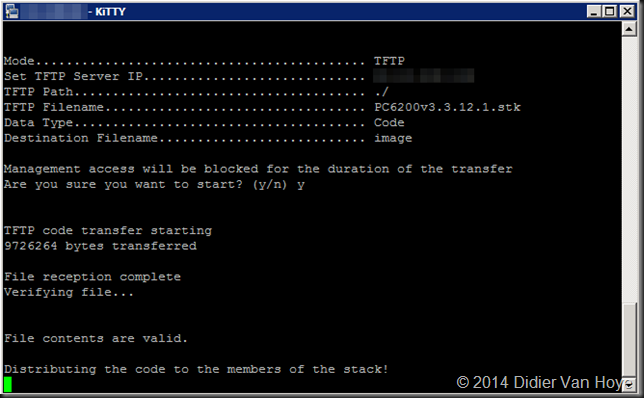

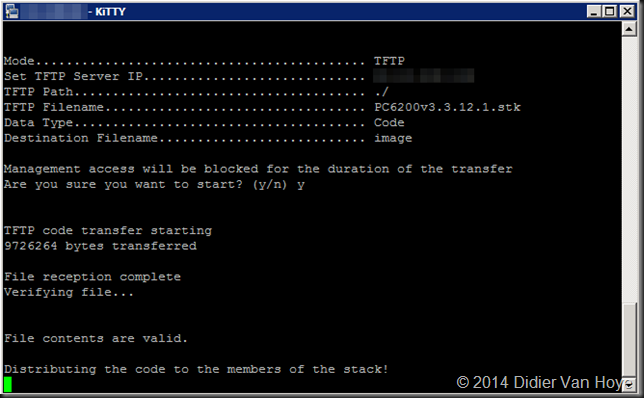

Take firmware upgrades, for example. Switches, load balancers, servers … The right choice of solution and the right design mean you’ll be able to do maintenance without downtime or service impact.

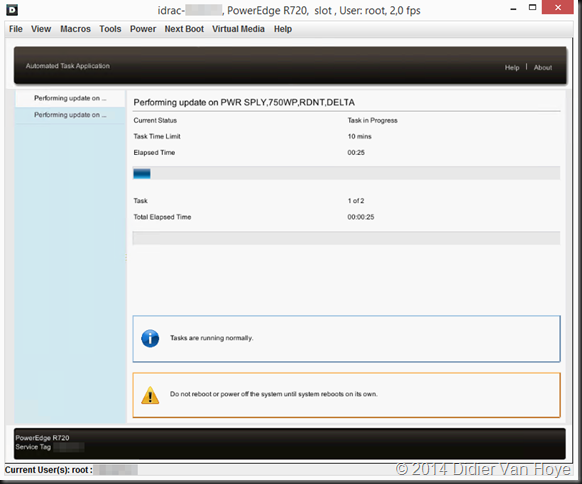

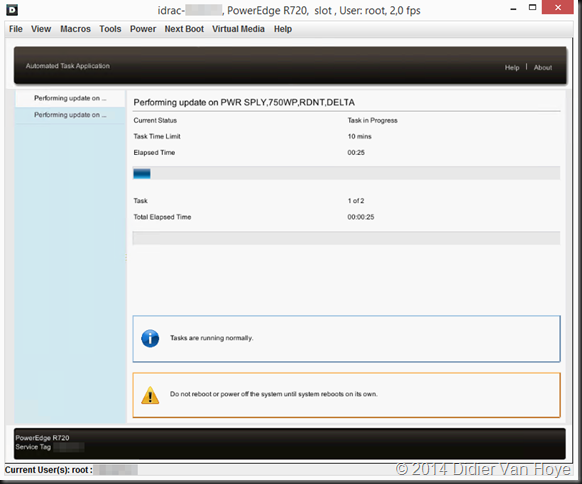

Whose manager knows that even server PSUs need firmware upgrades? Do they realize how much downtime that takes for a server with redundant power supplies?

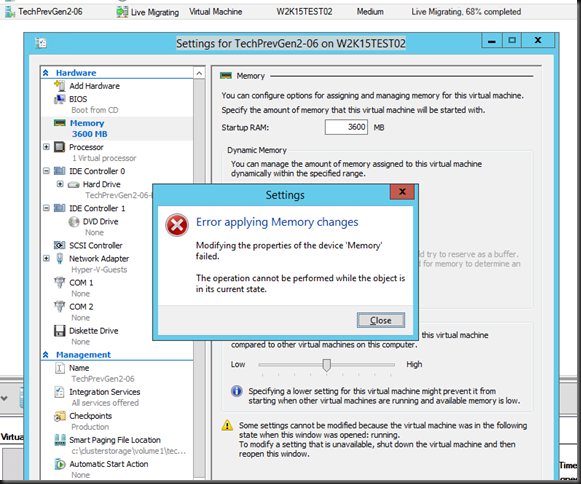

It takes up to 20-25 minutes per node. Yes! So you see, that 10Gbps live migration network has yet another benefit: it cuts down on the total time needed to complete this effort across a cluster. Combine it with Cluster-Aware Updating and it’s fully automated. Just make sure people in ops know it takes this long, or they might start troubleshooting something that’s normal. So you want clusters, and you want independent redundant switches or MLAG/VLT, vPC, …
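Why does a faster live migration network shorten the job? In a rolling update each node is drained (its VMs live migrated to other nodes) before it is flashed and rebooted, and nodes are done one at a time, so the drain time is paid once per node. The sketch below is a back-of-the-envelope estimate only. Apart from the 20-25 minutes per node mentioned above, every figure is a hypothetical assumption: the node count, the 256 GB of VM memory per node, and the 70% link efficiency. Treating migration time as memory divided by bandwidth ignores dirty-page recopying and failback, so real drains will take longer. The names `Node`, `drain_minutes` and `rolling_update_minutes` are illustrative, not part of any product.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    vm_memory_gb: float  # hypothetical: RAM of the VMs that must be moved off this node


def drain_minutes(memory_gb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Rough minutes to live migrate memory_gb over a link_gbps network.

    efficiency is an ASSUMED fraction of line rate actually achieved.
    Memory / bandwidth is a lower bound: dirty pages get recopied in practice.
    """
    gigabits = memory_gb * 8
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 60


def rolling_update_minutes(nodes, link_gbps, firmware_minutes=25.0, efficiency=0.7):
    """Nodes are handled one at a time: drain, then flash and reboot.

    firmware_minutes defaults to the upper end of the 20-25 minutes per node
    quoted in the article. Moving VMs back afterwards is ignored here.
    """
    total = 0.0
    for node in nodes:
        total += drain_minutes(node.vm_memory_gb, link_gbps, efficiency) + firmware_minutes
    return total


if __name__ == "__main__":
    # Hypothetical four-node cluster with 256 GB of VM memory per node.
    cluster = [Node(f"node{i}", 256) for i in range(1, 5)]
    slow = rolling_update_minutes(cluster, link_gbps=1)
    fast = rolling_update_minutes(cluster, link_gbps=10)
    print(f"1 Gbps: ~{slow:.0f} min, 10 Gbps: ~{fast:.0f} min")
```

Under these assumptions the script prints `1 Gbps: ~295 min, 10 Gbps: ~120 min`. The firmware time per node does not shrink; only the drain time does, which is why the gain grows with the amount of memory each node hosts.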

Yes, that’s an older switch model, but it’s the only stack still in use in a data center. At client sites I don’t mind that much; it’s a different workload.

Think about your storage fabrics, load balancers, gateways … all redundant & independent, so that maintenance doesn’t affect the services.

If you do not have a solution & practices in place that keep your business running during maintenance, people might avoid doing it. As a result, you might suffer downtime that gets classified as buggy software or unavoidable hardware failure. But there is another side to that coin: the good old saying “if it ain’t broke, don’t fix it”. On top of that, even hardware maintenance requires care and needs a plan to deal with failure; it too has bugs and can go wrong.

There is a lot of noise about the “hero” culture of IT Ops and a “cowboy mentality” among system administrators. Supposedly this is partly cultivated by the fact that they get rewarded for being heroes, or so I read. In my experience that’s not really the case: you work at night or through the night, and you still have to show up at work and explain what went wrong. No appreciation, money or anything. Basically you, as an admin, pay the price. There is no overtime pay, on-call remuneration or anything. Maybe it’s different elsewhere, but I have not seen many “hero cultures” in real life in IT Ops (as said, we pay personally for our mistakes or misfortunes). Realistically, I have the perception that the “cowboy culture” is a lot more rampant at the white-collar management level. You know, when they decide to buy the latest and greatest solution du jour to fix something that isn’t caused by the existing products or technology. When it blows up, it’s an operational issue. Right?

Well, don’t worry, the cloud will make it all go away! Cloud. Sure, cloud is big and getting bigger. It brings many benefits but also drawbacks, especially when done wrong. There are many factors to consider and it can’t be done just like that. It needs the same care and effort in analysis, design, architecture and deployment as all other infrastructure. You see, the benefit of cloud lies not just in operational ease but in the fact that, when done right, it allows for a whole different way of building & supporting services. That’s where the real value is.

So yes, that’s why we do architecture and why we design with a purpose. So we can schedule regular maintenance. So we can minimize or even avoid any impact. On premises we build solutions that allow this to be done during office hours and that can survive even a failed firmware upgrade. In the cloud we try to protect against cloud provider failure. You might have noticed they too have issues.

Cowboys? Hero culture? Not me, site resilience engineering for the win!

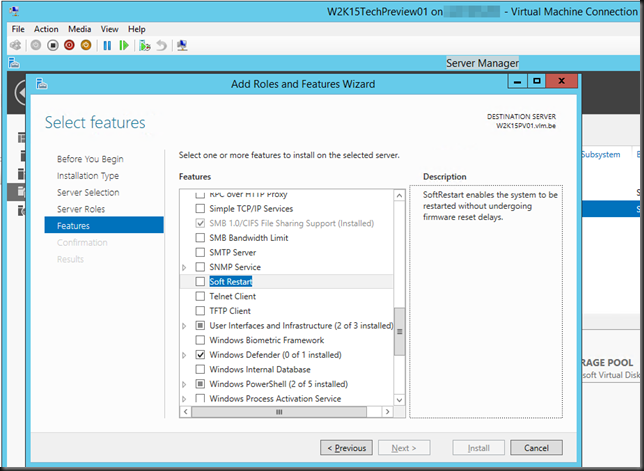

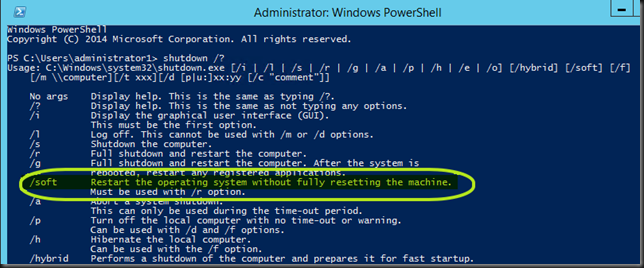

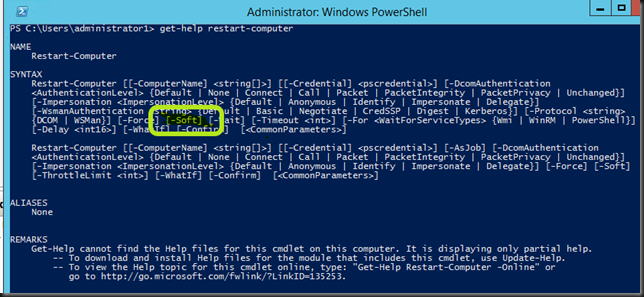

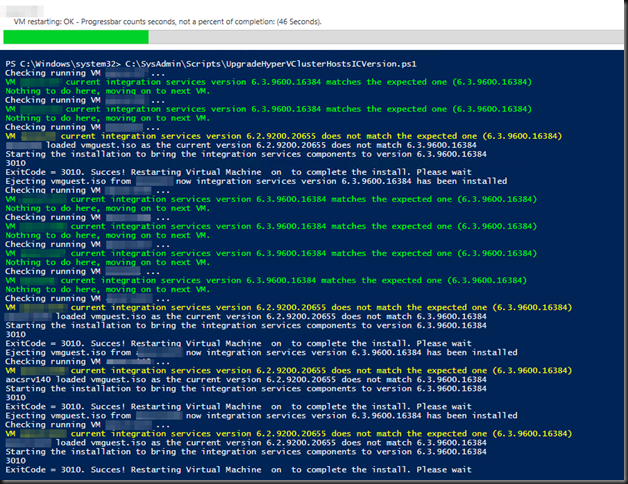
