Introduction

Before I dump the script to add HBAs to SC Series Servers with Add-DellScPhysicalServerHba on you, first some context. I have been quite busy with multiple SAN migrations, moving a bunch of older Dell EMC SC Series (Compellent) arrays to newer All Flash Arrays (see My first Dell SC7020(F) Array Solution). When I find the time I’ll share some more PowerShell snippets I use to make such efforts a bit easier. It’s quite addictive and it allows you to migrate effectively and efficiently.

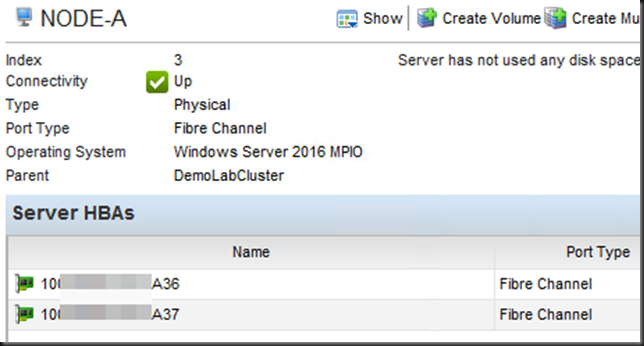

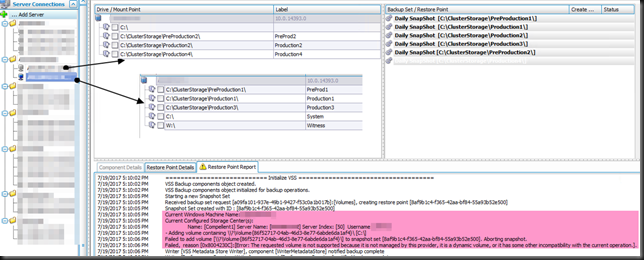

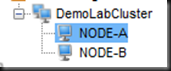

In the SC Series we create cluster objects in which we place server objects. That makes life easier on the SAN end.

Those server objects are connected to the SAN via FC or iSCSI. For this we need to add the HBAs to the servers after we have set up the zoning correctly. Zoning itself is a whole different subject.

This is tedious work in the user interface, especially when there are many WWN entries visible that need to be assigned. Mistakes can happen. This is where automation comes in handy and becomes a real time saver when you have many clusters/nodes and multiple SANs. So we’ll show you how to grab the WWN info you need from the cluster nodes to add HBAs to SC Series Servers with Add-DellScPhysicalServerHba.

Below you see a script that loops through all the nodes of a cluster and gets the HBA WWNs we need. It then adds those WWNs to the SC Series server object. In another blog post I’ll share some snippets to gather the cluster info needed to create the cluster objects and server objects on the Compellent SC Series SAN. In this blog post we’ll assume the server objects have been created.

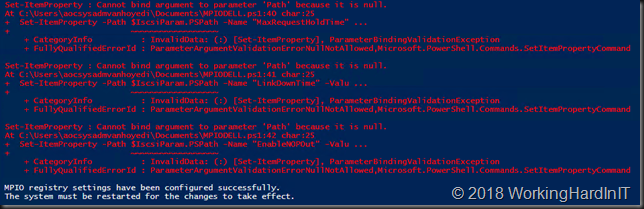

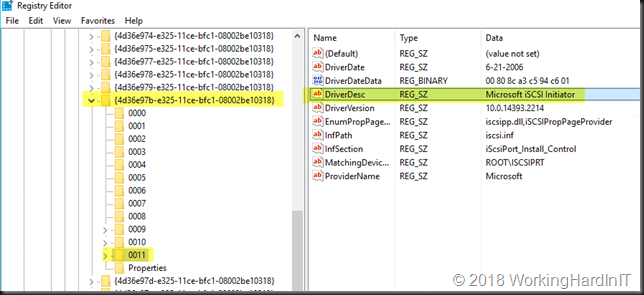

We leverage the Dell Storage Manager – 2016 R3.20 Release (Public PowerShell SDK for Dell Storage API). I hope it helps.
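The WWN handling in the script can be tried on its own, without a cluster or a SAN. WMI hands back each WWN as an array of bytes; the script turns that into a colon-delimited hex string and later strips the colons for the SC Series cmdlets. The sketch below does the same on a made-up byte array. The helper name ConvertTo-WwnString and the sample bytes are assumptions for illustration only; they are not part of the script or the Dell SDK.

```powershell
# Hypothetical helper, not part of the original script or the Dell SDK.
function ConvertTo-WwnString {
    param (
        [Parameter(Mandatory)]
        [byte[]]$Bytes,
        [string]$Delimiter = ':'
    )
    # Format every byte as two hex digits, join them, and upper-case the result.
    (($Bytes | ForEach-Object { '{0:x2}' -f $_ }) -join $Delimiter).ToUpper()
}

# Made-up sample standing in for the PortWWN byte array that WMI returns.
$SampleBytes = [byte[]](0x10, 0x00, 0x00, 0x90, 0xFA, 0x12, 0x34, 0x56)

# Colon-delimited form, as produced by Get-WinOSHBAInfo.
$Delimited = ConvertTo-WwnString -Bytes $SampleBytes

# Bare form, as passed to Add-DellScPhysicalServerHba in the script.
$Bare = $Delimited -replace ':', ''

$Delimited   # 10:00:00:90:FA:12:34:56
$Bare        # 10000090FA123456
```

Keeping the delimited form around is handy for comparing against what the switch or the SAN user interface shows, while the bare form is what the script feeds to the SAN.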

Add HBAs to SC Series Servers with Add-DellScPhysicalServerHba

function Get-WinOSHBAInfo

#Basically add 3 nicely formatted properties to the HBA info we get via WMI

#These are the NodeWWN, the PortWWN and the FabricWWN (from FabricName). The raw attributes

#from WMI are not readily consumable. The formatted WWNs use a ":" delimiter.

#This can easily be replaced or removed depending on the need.

{

param ($ComputerName = "localhost")

# Get HBA Information

$Port = Get-WmiObject -ComputerName $ComputerName -Class MSFC_FibrePortHBAAttributes -Namespace "root\WMI"

$HBAs = Get-WmiObject -ComputerName $ComputerName -Class MSFC_FCAdapterHBAAttributes -Namespace "root\WMI"

$HBAProperties = $HBAs | Get-Member -MemberType Property, AliasProperty | Select -ExpandProperty name | ? {$_ -notlike "__*"}

$HBAs = $HBAs | Select-Object $HBAProperties

$HBAs | % { $_.NodeWWN = ((($_.NodeWWN) | % {"{0:x2}" -f $_}) -join ":").ToUpper() }

ForEach ($HBA in $HBAs) {

# Get Port WWN

$PortWWN = (($Port |? { $_.instancename -eq $HBA.instancename }).attributes).PortWWN

$PortWWN = (($PortWWN | % {"{0:x2}" -f $_}) -join ":").ToUpper()

Add-Member -MemberType NoteProperty -InputObject $HBA -Name PortWWN -Value $PortWWN

# Get Fabric WWN

$FabricWWN = (($Port |? { $_.instancename -eq $HBA.instancename }).attributes).FabricName

$FabricWWN = (($FabricWWN | % {"{0:x2}" -f $_}) -join ":").ToUpper()

Add-Member -MemberType NoteProperty -InputObject $HBA -Name FabricWWN -Value $FabricWWN

# Output

$HBA

}

}

#Grab the cluster name in a variable. Adapt this code to loop through all your clusters.

$ClusterName = "DEMOLABCLUSTER"

#Grab all cluster nodes

$ClusterNodes = Get-Cluster -name $ClusterName | Get-ClusterNode

#Create an array of custom objects to store the cluster name, the cluster nodes and the HBA WWNs

$ServerWWNArray = @()

ForEach ($ClusterNode in $ClusterNodes) {

#We loop through the nodes of the cluster and for each one we grab the HBAs that are relevant.

#My lab nodes have different types installed on and off, so I specify the manufacturer to get the relevant ones.

#Adapt to your needs. You can also use ModelDescription to filter out FCoE versus FC HBAs etc.

$AllHBAPorts = Get-WinOSHBAInfo -ComputerName $ClusterNode.Name | Where-Object {$_.Manufacturer -eq "Emulex Corporation"}

#The SC Series SAN PowerShell takes the WWNs without any delimiters, so we dump the ":" for this use case.

$WWNs = $AllHBAPorts.PortWWN -replace ":", ""

$NodeName = $ClusterNode.Name

#Build a nice node object with the info and add it to the $ServerWWNArray

$ServerWWNObject = New-Object psobject -Property @{

WWN = $WWNs

ServerName = $NodeName

ClusterName = $ClusterName

}

$ServerWWNArray += $ServerWWNObject

}

#Show our array

($ServerWWNArray).WWN

#just a demo to list what's in the array

ForEach ($ServerNode in $ServerWWNArray) {

$Servernode.ServerName

$Servernode.WWN

}

#Now add the HBAs to the servers in the cluster.

#This is part of a bigger script that gathers all HBA/WWN info for all clusters

#and creates the Compellent SC Series cluster object and the servers, and adds the HBAs.

#I'll post more snippets in future blog posts to show how to do that and give you

#some ideas for your own environment.

import-module "C:\SysAdmin\Tools\DellStoragePowerShellSDK\DellStorage.ApiCommandSet.dll"

#region SetUpDSMAccess Variable & credentials

Get-DellScController

$DsmHostName = "MyDSMHost.domain.local"

$DsmUserName = "MyUserName"

# Prompt for the password

$DsmPassword = Read-Host -AsSecureString -Prompt "Please enter the password for $DsmUserName"

# Create the connection

Connect-DellApiConnection -HostName $DsmHostName -User $DsmUserName -Password $DsmPassword -Save MyConnection

#Assign variables

$ConnName = "MyConnection"

$ScName = "MySCName"

# Get the Storage Center

$StorageCenter = Get-DellStorageCenter -ConnectionName $ConnName -Name $ScName

ForEach ( $ClusterNodeWWNInfo in $ServerWWNArray ) {

# Get the server

$Server = Get-DellScPhysicalServer -ConnectionName $ConnName -StorageCenter $StorageCenter -Name $ClusterNodeWWNInfo.ServerName

$PortType = [DellStorage.Api.Enums.FrontEndTransportTypeEnum] "FibreChannel"

ForEach ($WWN in $ClusterNodeWWNInfo.WWN)

{

# Add each WWN of the cluster node as an HBA to the SC Series (Compellent) server object

Add-DellScPhysicalServerHba -ConnectionName $ConnName -Instance $Server -HbaPortType $PortType -WwnOrIscsiName $WWN -Confirm:$false

}

}
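Since mistakes with WWNs are exactly what we want to avoid, it can be worth checking the collected values before the loop above sends them to the SAN. Here is a minimal sketch, assuming the WWNs are Fibre Channel port WWNs (64 bits, so 16 hex digits once the delimiters are gone). The helper name Test-WwnFormat and the sample node are made up for illustration; they are not part of the script or the Dell SDK.

```powershell
# Hypothetical helper, not part of the original script or the Dell SDK.
function Test-WwnFormat {
    param ([string]$Wwn)
    # Assumption: a bare FC WWN is exactly 16 hex digits (64 bits), no delimiters.
    $Wwn -match '^[0-9A-Fa-f]{16}$'
}

# Made-up sample shaped like one entry of $ServerWWNArray.
$SampleNode = New-Object psobject -Property @{
    ServerName = 'NODE01'
    WWN        = @(
        '10000090FA123456',
        '10000090FA123457',
        '10:00:00:90:FA:12:34:58',   # still has delimiters, so it is rejected
        '10000090FA123456'           # duplicate of the first entry
    )
}

# Keep unique, well-formed WWNs and collect the rest for review.
$ValidWWNs   = @($SampleNode.WWN | Select-Object -Unique | Where-Object { Test-WwnFormat $_ })
$InvalidWWNs = @($SampleNode.WWN | Where-Object { -not (Test-WwnFormat $_) })

"{0}: {1} valid, {2} rejected" -f $SampleNode.ServerName, $ValidWWNs.Count, $InvalidWWNs.Count
```

In the real script you would run the same filter over $ClusterNodeWWNInfo.WWN and only loop over the valid entries.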