When configuring live migrations it’s easy to go scrounge on all the features and capabilities we have in Windows Server 2012 R2.

There is no one stopping you configuring 50 simultaneous live migrations. When you have only one, two or even four 1Gbps NICs at your disposal, you might stick to 1 or 2 VMs per available 1Gbps. But why limit yourself if you have one or multiple 10Gbps pipes or bigger ready to roll? Well let’s discuss a little what happens when you do a live migration on a Hyper-V cluster with CSV storage. Initiating a live migrations kicks of a slew of activities.

- First it is establish form where (aka the source host) to where we are migrating (aka the target host).

- Permissions are checked, are we allowed to do this?

- Do we have enough memory on the target to do this? If so allocate that memory.

- Set up a skeleton VM on the target host that is a perfect copy of the source VM’s specifications and configure dependencies on the target host.

- Let’s see if we can get a network connection set up and running. If that works, we’re cool and can now transfer the memory.

- A bitmap is created to track the changes to the memory pages of the source VM’s pages. Each memory page is copied from the source host to the target host VM during which the memory page is marked clean.

- As long as the source VM is running memory is changing, which continues to be tracked in the bitmap and as such that page is mapped as dirty over there. In an iterative process this dirty memory is copied over again and so on. This continues until the remaining dirty memory is minimal. This will take longer if the VM is very memory intensive.

- The tiniest amount of not yet copied dirty memory is that part of a VMs state that is copied during “black out”. For this to happen the VM on the source host is paused, the remaining state is copied.

- A final check is done to confirm all is well and then the virtual machine is resumed on the target host.

- Any remains of the VM on the source host are cleaned up.

That’s actually a lot of work and as you can see copying the state is just part of the process. The more bandwidth & the lower the latency we throw at this part of the process becomes less of the total time spent during live migration.

If you can’t fill of just fill the bandwidth of your 10/40/46Gbps pipe or pipes & you operate at line speed, what’s left as overhead? Everything that’s not actual the copy of VM state. The trick is to keep the host busy so you minimize idle time of the network copies. I.e we want to fill up that bandwidth just right but not go overboard otherwise the work to manage a large number of multiple live migrations might actually slow you down. Compare it to juggling with balls. You might be very good and fast at it but when you have to many balls to attend to you’ll get into trouble because you have to spread you attention to wide, i.e. you’re doing more context switching that is optimal.

So tweaking the number of simultaneous live migrations to your environment is the last step in making sure a node is drained as fast as possible. Slowing things down can actually speed things up. So when you get your 10Gbps or better pipes in production it pays of to test a bit and find the best settings for your environment.

Let’s recap all of the live migration optimization tips I have given over the years and add a final word of advice. Those who have been reading my blog for a while know I enjoy testing to find what works best and I do tweak settings to get best performance and results. However you have to learn and accept that it makes no sense in real life to hunt for 1% or 2% reduction in live migration speeds. You’ll get one off hiccups that slow you down more than that.

So what you need to do is tweak the things that matter the most and will get you 99% results?

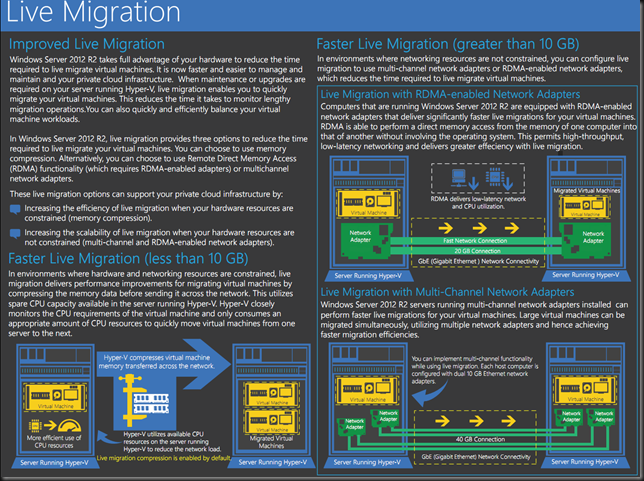

- Get the biggest pipe you need & can afford. Bigger pipes are always better than lots of aggregated smaller pipes when it come to low latency & high throughput.

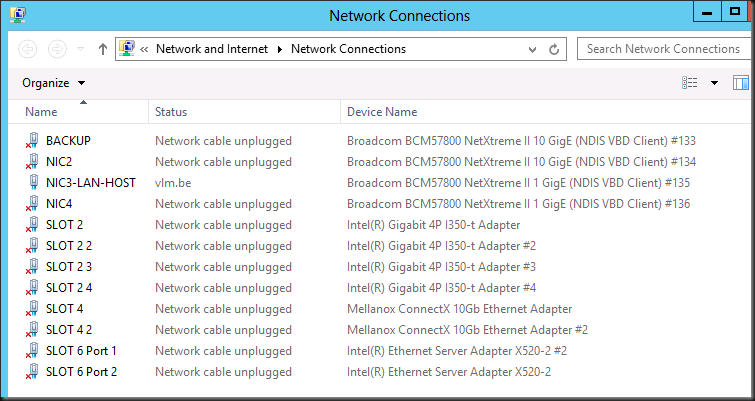

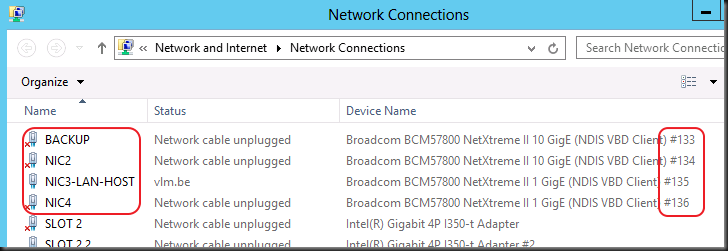

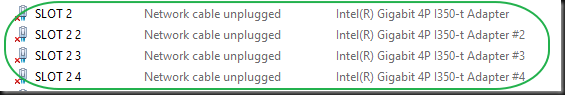

- Choose the best performance settings Hyper-V offers you. You can choose from TCP/IP,Compression, SMB. Ben Armstrong has a blog post on this Faster Live Migration–Which Option Should You Choose? I’d like to add that you can use NIC teaming for live migration as well and prior to Windows Server 2012 R2 that was the only way to aggregate bandwidth. Now you have more options. I prefer SMB but when I don’t have 10Gbps at my disposal I have found that compression really makes a difference. In my home lab where I have only 1Gbps, the horror, it stopped me from going crazy

(being addicted to 10Gbps).

(being addicted to 10Gbps).

- Optimize the power settings for your server BIOS if you want an extra speed & smoothness with 10Gbps (less so with 1Gbps). Look here An Early Look At Live Migration Over TCP/IP & Multichannel In Windows Server 2012 R2 Preview, the network traffic is a lot more stable, i.e. a flat line! In Windows 2008 R2 this was a real need for 10Gbps or you’d be stuck at 16% max.

- Enable Jumbo Frames for another 15-20%. Thanks to Multi Channel I can visualize this now. See also this blog post Live Migration Can Benefit From Jumbo Frames. The pictures say it all!

- Figure out the best number of simultaneous live migrations in your environments. Well you just read this blog, so now you know. Start at 4 and experiment upwards. Tune it back down if the speed deteriorates. The “best” number depends on your environment.

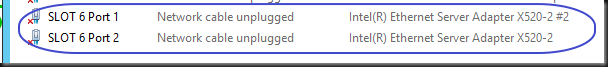

If you do these 5 things you’ll have really gotten the best performance out of your infrastructure that’s possible for live migration. Bar compression, which is not magic either but reducing the GB you need to transport at the cost of CPU cycles, you just cannot push more than 1.25GB/s trough a single 10Gbps pipe and so on. You might keep looking to grab another 1% or 2% improvement left and right but might I suggest you have more pressing issues to attend to that, when fixed are a lot more rewarding? Knocking 1 or 2 seconds of a 100 second host evacuation is not going to matter, it’s a glitch. Stop, don’t over engineer it, don’t IBM it, just move on. If you don’t get top performance after tweaking these 5 settings you should look at all the moving parts involved between the host as the issue is there (drivers, firmware, cables, switch configurations, …) as you have a mistake or problem somewhere along the way.