When the first information about RemoteFX in Windows Server 2008 R2 SP1 Beta became available, a lot of people working on VDI solutions found it pretty cool and good news. It's a much-needed addition in this arena. After that first happy reaction, though, a question soon arises: how will the host provide all that GPU power to serve a rich GUI experience to those virtual machines? In VDI solutions you're dealing with at least dozens and often hundreds of VMs. When you think about it, it's clear that the onboard GPU alone won't cut it. And how many high-performance GPUs can you put into a server? Not many, or even none, depending on the model. So where do the VDI hosts in a cluster get their GPU resources? Well, some servers can hold a lot of GPUs. But in most cases you just add GPU units to the rack and attach them to the supported server models. Such units exist for both rack servers and blade servers. Dell has some information on this here. The specs for the PowerEdge C410x, a 3U external PCIe expansion chassis by Dell, can be found by following this link: C410x. It works just like external DAS disk bays. You can attach one or more 1U/2U servers to a chassis with up to 16 GPUs. Dell also has solutions for blade servers. So that's what building a RemoteFX-enabled VDI farm will look like. Some of the early pictures showed a huge server chassis with room to stuff in all those GPU cards. In reality you will use one or more external GPU chassis, depending on the requirements.

Monthly Archives: November 2010

Exchange 2010 SP1 Public Folder High Availability Returns with Roll Up 2

A lot of people were cheering in the interactive session on Exchange 2010 SP1 High Availability with Scott Schnoll and Ross Smith of the Exchange Team. Between goofing around, they announced that alternate server functionality for public folders will return with Exchange 2010 SP1 Roll Up 2. This feature provides failover to clients so they can select another public folder database to connect to, and it is sadly missing from Exchange 2010. Outlook needs it to connect automatically to an alternate public folder. Its return means that high availability will finally be achievable for public folders in Exchange 2010 SP1. That's great news. Frankly, this was an "oversight" that shouldn't have happened even in Exchange 2010 RTM. The issue is described in the Knowledge Base article "You cannot open a public folder item when the default public folder database for the mailbox database is unavailable in an Exchange Server 2010 environment", which you can find here: http://support.microsoft.com/kb/2409597.

In previous versions of Exchange you made public folders highly available to Outlook clients by having replicas. Outlook clients could access a replica on another server if the default public folder database, as defined in the client settings of the mailbox database, was not available. Clustering in Exchange 2010 does nothing for public folders. In Exchange 2010, Outlook clients connect directly to the mailbox server to get to a public folder, so they do not leverage the CAS or CAS array. The DAG does not support public folders either. Clustering now happens at the database level on DAG members rather than at the server level, so clustering in Exchange 2010 gives clients no high availability for public folders. Sure, if you have multiple replicas the data is highly available. But when you're running Exchange 2010, Outlook doesn't automatically switch to another replica/database/server for public folder access. To make that happen, the client needs to be offered an alternate server to select. This feature is missing in Exchange 2010 up to and including SP1 Roll Up 1. So until now you have needed to keep using Exchange 2003/2007 to get public folder high availability. Exchange 2010 SP1 Roll Up 2 will change that. I call that good news.

New Spatial & High Availability Features in SQL Server Code-Named “Denali”

The SQL Server team is hard at work on SQL Server vNext, code name "Denali". They have a whitepaper out on their web site, "New Features in SQL Server Code-Named 'Denali' Community Technology Preview 1", which you can download here.

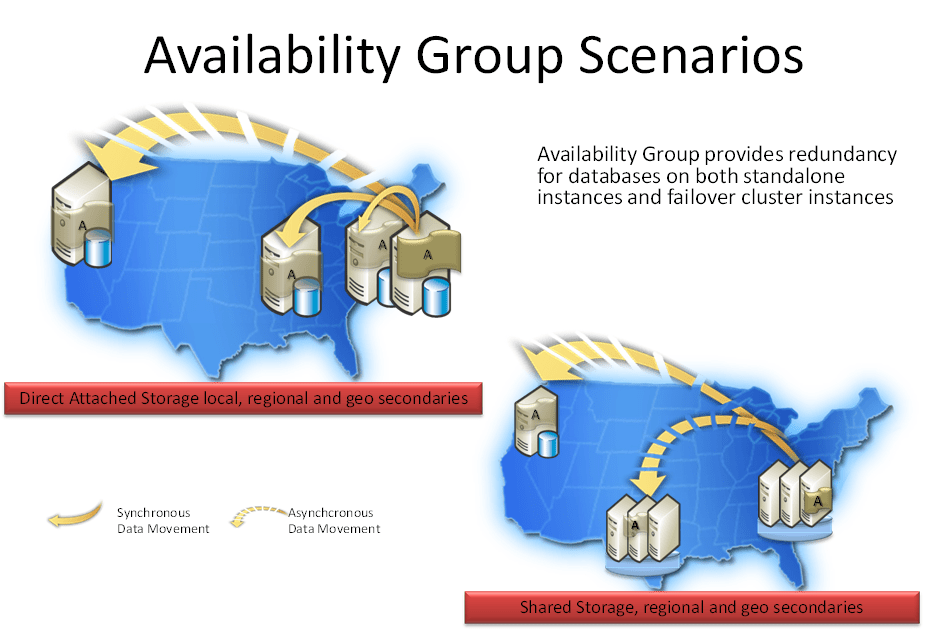

I do a lot of infrastructure work for people who really dig all this spatial and GIS-related "stuff". So I always keep an eye out for information that can make their lives easier and enhance the use of the technology stack they own. Another part of the new features coming in "Denali" is Availability Groups. More information will be available later this year. For now I'll leave you with the key features, as shown in the Microsoft (Tech Ed Europe, Justin Erickson) illustration below. Availability Groups will provide Multi-Database Failover, Multiple Secondaries, Active Secondaries and Fast Client Connection Redirection. They can also run on Windows Server Core and support Multisite (Geo) Clustering.

Availability Groups can provide redundancy for databases on both standalone instances and failover cluster instances. They work with Direct Attached Storage (DAS), Network Attached Storage (NAS) and Storage Area Networks (SAN). That is useful both for physical servers in a high availability cluster and for virtualization. The latter is significant because Microsoft will support Availability Groups with Hyper-V Live Migration, whereas Exchange 2010 Database Availability Groups do not support it. I confirmed this with a Microsoft PM at Tech Ed Europe 2010. Download the CTP here and play all you want. Please note that in CTP 1 a lot of stuff isn't quite ready for show time. Take a look at the Tech Ed Europe 2010 session on the high availability features here. You can also download the video and the PowerPoint presentation via that link.

At first I thought MS might be going the same way with SQL Server as they did with Exchange: less choice in high availability, but easier and covering all needs. But now I don't think they can. SQL Server applications are beyond Redmond's control, whereas Microsoft does control Outlook & OWA. So I think the SQL Server team needs to provide far more backward compatibility and functionality than the Exchange team has. Brent Ozar (Twitter: @BrentO) wrote a blog post on "Denali"/Hadron, which you can read here: http://www.brentozar.com/archive/2010/11/sql-server-denali-database-mirroring-rocks/. What he says about clustering is true. I used to cluster Windows 2000/2003 and suffered some kind of mental trauma. Windows Server 2008 (R2) completely cured that. I'm now clustering Hyper-V, Exchange 2010, file servers, etc. with a big smile on my face. I just love it!

Tech Ed 2010 Europe – After Action Report

I spent the last two days of Tech Ed 2010 doing breakout sessions and interactive sessions. Only one interactive session was a complete disaster. The guy handling it had no clue, and it looked more like a bar discussion, though not for lack of the audience trying to get it going. One breakout session on SCVMM vNext was a mess because the speaker didn't show up, and the improvising that followed didn't help. But on the whole the sessions were good. John Craddock confirmed once more that he is a great scholar. The SCVMM team managed their message well. The info on Lync was good and useful. I do find the explanation for moving the configuration data out of Active Directory a bit weak, though, as the same could be said for Exchange. If they go that way, the entire dream of leveraging Active Directory for applications goes down the drain. The other thing I found a bit negative about Lync is the focus on large enterprises for high availability. Smaller ones need that as well. And small by USA standards is medium over here in Europe.

I also really enjoyed the clustering interactive session. I almost started speaking on the subject myself, but I managed to restrain myself.

The Exchange team's statement that it no longer recommends Windows NLB came as no surprise, and most people welcomed it. In retrospect, though, it's an open secret put into words. Their documentation talks more about WNLB but never recommends it over hardware NLB. They just never really discussed hardware NLB, probably because its configuration is vendor-specific. There is some information available on what needs to be done on the Exchange side when you opt for hardware load balancing, and it's a bit more involved.

The networking aspect of the conference was a success. I had long technical and conceptual talks with the Windows (clustering), Hyper-V and System Center Virtual Machine Manager teams. I spent hours providing feedback, conveying wishes and concerns, and learning how they look at certain issues, and it was all very interesting. Most of the new information on future releases concerned SCVMM vNext. It will manage the fabric (storage, network, cluster) and handle library management and deployment. WSUS/Maintenance Mode integration for automated patching of the hosts also looks cool. I'm looking forward to the public betas. They could not talk about anything in Windows clustering/NLB or Hyper-V vNext. I also signed up for an Office 365 beta account. We'll have to see when one becomes available.

The developers I know who came along got a sobering confirmation of what they already knew about agile, project managers, and time-based planning of implementation versus releases. Now, how will they communicate this back home? Food for thought, I bet!

Then there are the rumors. One said Tech Ed would move back to the summer time frame it used until 2005. This led to the rumor that this would be the last Tech Ed Europe. I don't think so. It's probably the last one in Berlin for now, but not the last one in Europe. The next rumor was that, because of the new timing, Tech Ed Europe would skip 2011, as summer 2011 would be too soon. We'll have to wait and see. If so, I'm eyeballing the Microsoft Management Summit and Tech Ed North America in the USA.

Speaking of the USA, compare the money Microsoft spends on Tech Ed North America and PDC, and this year's European Tech Ed really stood out as a bit "poor". In the end, no one goes to a conference for a bag, freebies or swag. But the atmosphere at the outset of a conference sets the mood for the event, and this year they messed that up. By the end of the week that was mostly forgotten. I do think, however, that Microsoft needs to manage and guard the quality of the sessions. This is very important: it should not become an event for marketing and managerial types. Tech Ed = Technical Education, and that education should be of a high level. Combine that with high-quality architectural and conceptual sessions and you get value for money. As I said above, this year they did very well at providing lots of quality interaction and networking opportunities with MVPs, Microsoft personnel and partners. That is awesome, and I hope they keep working at it.

If you went to the conference, I hope you had a good Tech Ed. If you didn't make it, you can enjoy loads of sessions here: Tech Ed Europe 2010 Online Sessions