Introduction

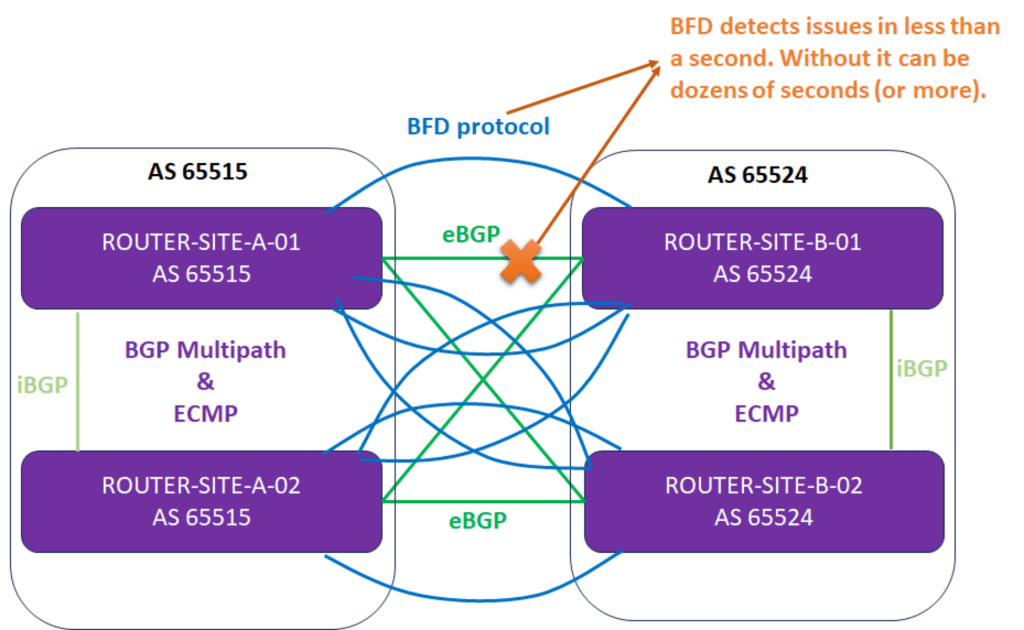

Anyone building redundant, highly available VPN gateways that use BGP (Border Gateway Protocol) has likely encountered BFD (Bidirectional Forwarding Detection). BFD is not limited to BGP; it also works with OSPF and OSPF6. But before answering whether Azure VPN Gateways that use BGP support BFD, I'll briefly discuss what BFD does.

Bidirectional Forwarding Detection

The BFD (Bidirectional Forwarding Detection) protocol provides fast, low-overhead detection of link failures. It works even when the physical link has no failure detection of its own. As such, it helps routing protocols such as BGP fail over much more quickly than they could if left to their own devices.
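To make "much quicker" concrete, here is a minimal sketch of how a BFD peer arrives at its detection time in asynchronous mode, following my reading of RFC 5880 section 6.8.4. The function name and the example timer values are my own assumptions for illustration, and the sketch ignores jitter and echo mode.

```python
def bfd_detection_time_ms(remote_detect_mult: int,
                          local_required_min_rx_ms: int,
                          remote_desired_min_tx_ms: int) -> int:
    """Approximate asynchronous-mode detection time in milliseconds.

    The peer's transmit interval is negotiated as the larger of what we are
    willing to receive and what the peer wants to send. The session is
    declared down after `remote_detect_mult` such intervals pass without a
    control packet arriving.
    """
    agreed_interval_ms = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval_ms


# Hypothetical lab values: 300 ms timers on both sides, multiplier of 3.
print(bfd_detection_time_ms(3, 300, 300))  # 900
```

With those assumed values, a dead path is noticed in under a second, whereas a routing protocol relying only on its own keepalive and hold timers typically needs far longer.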

BFD control packets are sent over UDP from a source port in the range 49152-65535 to destination port 3784 (single-hop, RFC 5881) or 4784 (multi-hop, RFC 5883). The base protocol is defined in RFC 5880, and RFC 5882 describes its generic application. BFD runs over IPv4 as well as IPv6. See Bidirectional Forwarding Detection on Wikipedia for more information. Note that BFD works between directly connected routers (single-hop) as well as between routers that are several hops apart (multi-hop).
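For readers who like to see the bytes, the following is a rough sketch of the 24-byte mandatory section of a BFD control packet as laid out in RFC 5880, plus a helper that maps the destination ports mentioned above to the session type. The function and constant names are hypothetical, nothing is sent on the wire, and authentication is left out.

```python
import struct

BFD_SINGLE_HOP_PORT = 3784               # RFC 5881
BFD_MULTI_HOP_PORT = 4784                # RFC 5883
BFD_SOURCE_PORTS = range(49152, 65536)   # 49152-65535 inclusive

STATE_DOWN = 1  # RFC 5880 states: AdminDown=0, Down=1, Init=2, Up=3


def build_bfd_control_packet(my_disc: int,
                             your_disc: int = 0,
                             state: int = STATE_DOWN,
                             detect_mult: int = 3,
                             desired_min_tx_us: int = 1_000_000,
                             required_min_rx_us: int = 1_000_000) -> bytes:
    """Pack the mandatory section of a BFD control packet (no auth)."""
    version, diag = 1, 0
    byte0 = (version << 5) | diag   # 3-bit version, 5-bit diagnostic code
    byte1 = state << 6              # 2-bit state, all six flag bits cleared
    length = 24                     # total packet length in bytes
    return struct.pack("!BBBBIIIII",
                       byte0, byte1, detect_mult, length,
                       my_disc, your_disc,
                       desired_min_tx_us, required_min_rx_us,
                       0)           # Required Min Echo RX Interval: echo unused


def bfd_mode_for_port(dst_port: int):
    """Return the session type implied by the UDP destination port."""
    if dst_port == BFD_SINGLE_HOP_PORT:
        return "single-hop"
    if dst_port == BFD_MULTI_HOP_PORT:
        return "multi-hop"
    return None


packet = build_bfd_control_packet(my_disc=0x1234)
print(len(packet), bfd_mode_for_port(3784))  # 24 single-hop
```

The intervals are carried in microseconds, and a session that is still Down starts with slow timers of at least one second, which is why the defaults above are 1,000,000.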

Currently, OPNsense and pfSense, with the FRR (Free Range Routing) plugin, support BFD integration with BGP, Open Shortest Path First (OSPF), and OSPF for IPv6 (OSPF6, formally OSPFv3). Most vendors support BFD as well, but I mention OPNsense and pfSense because they offer free, fully functional products that are very handy for demos and lab testing.

Do Azure VPN Gateways that leverage BGP support BFD?

TL;DR: No.

You will not find much when you search for information about BFD in Microsoft Azure networking. Only for Azure ExpressRoute does Microsoft clearly state that BFD is supported and provide documentation for it.

But what about Azure VPN gateways with BFD? Well, no, that is not supported at all. You can try to set it up, but your on-premises VPN gateway will be shouting into a void. The BFD session status with the peers will always be “down.” It just won’t work.