The Need For Speed

My clients or employers are normally into lots of data, large files & number crunching. That means they like CPU power, memory, bandwidth & IOPS. That doesn’t mean they want to spend fortunes, but it does mean I have a standing order to get every last ounce of optimization and efficiency out of commodity hardware.

Today that means we prefer to work with Dell generation 12 servers like the R620 & R720, which are the best possible value for money you can get. They deliver all features in the box at no extra cost / licensing crap and they can be fine tuned and optimized for top performance. Windows Server 2012 R2 can handle loads higher than today's hardware can throw at it, so you want all you can get without breaking the bank. Add Intel X520/540, Mellanox (RoCE) or Chelsio (iWARP) 10Gbps cards and you’re ready to rumble with some nice PowerConnect 81XX Series or even the Force10 S4810 10Gbps switches.

CPU Power is not an issue, just bring money. You have 8-12 core CPUs. You can scale up to 4 socket systems & scale out as well. Licensing is probably your biggest concern here due to cost.

Memory? DDR3 is readily available and, compared to other costs, cheap. DDR4 is on the way. For max performance you possibly won’t use Dynamic Memory, to avoid a NUMA hit, but otherwise it helps with efficiency.

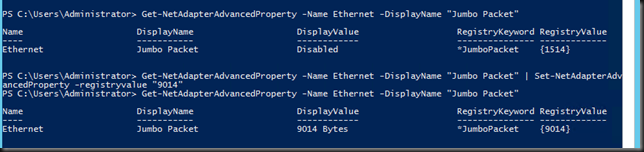

Network. Well, you’ve seen/heard/read me on topics like 10Gbps, RDMA/SMB Direct and (Dynamic) VMQ, but today we’ll look at virtual RSS. In Windows Server 2008 R2, VMQ helped us get beyond the scalability issues of a single core on the host having to handle all interrupts for the traffic going to the virtual machines. In Windows Server 2012 that got optimized with Dynamic VMQ. For workloads that need the absolute best performance & lowest latency we got SR-IOV support. That works fine but it has some potentially important drawbacks on both the manageability front (NIC teaming needs to be done inside the guest) & the security front (it bypasses the virtual switch, so no ACLs or NVGRE).

Today with Windows Server 2012 R2 we have a new optimization for network traffic in a virtual environment. It’s “Virtual RSS” or vRSS. I tell you it’s sweet and you’d do well to investigate this and enable it in your environment, especially if, like us, you move lots of data around & virtualization is the default. We don’t do physical unless for very strict reasons, i.e. it cannot be virtualized. Otherwise … no excuses, the economics and benefits are just too good not to do so. It’s not as super low latency as SR-IOV but depending on your needs you might never notice and … it plays nicely with all other network features in Hyper-V.

Show me vRSS already!

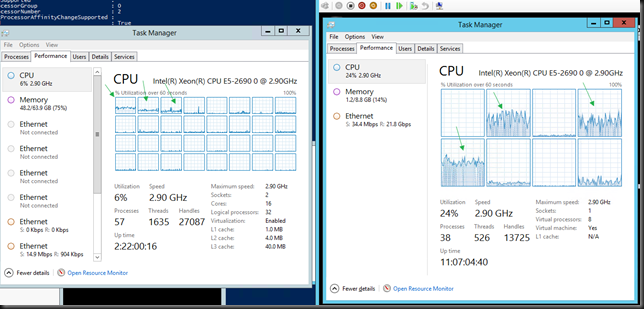

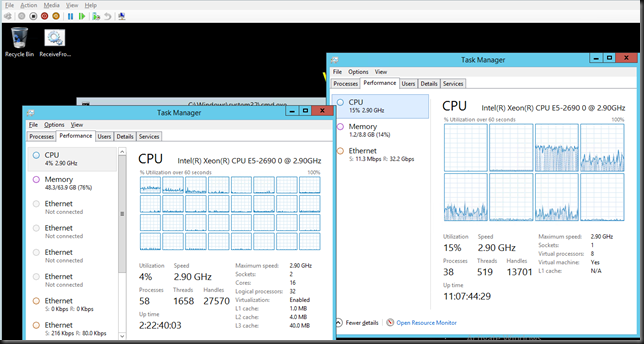

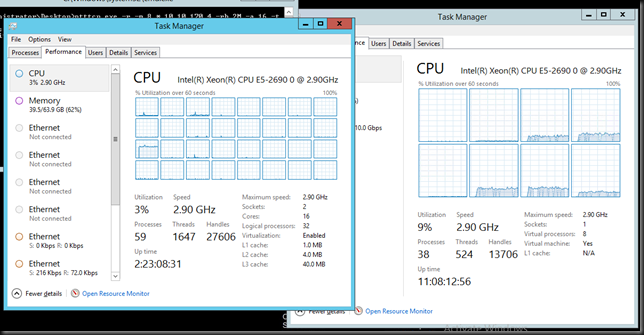

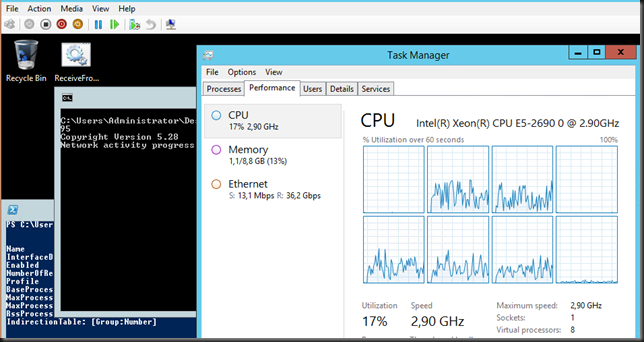

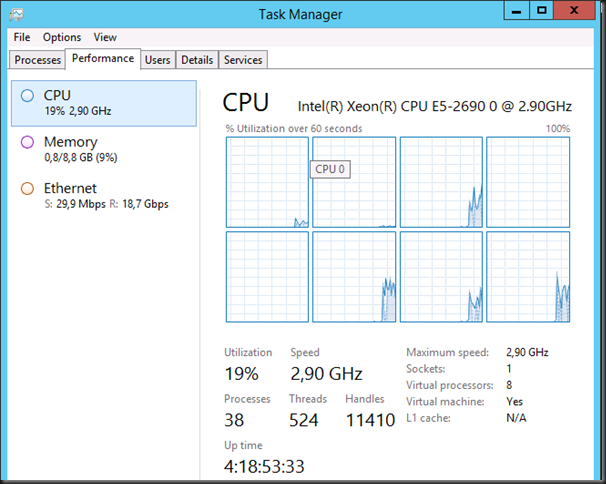

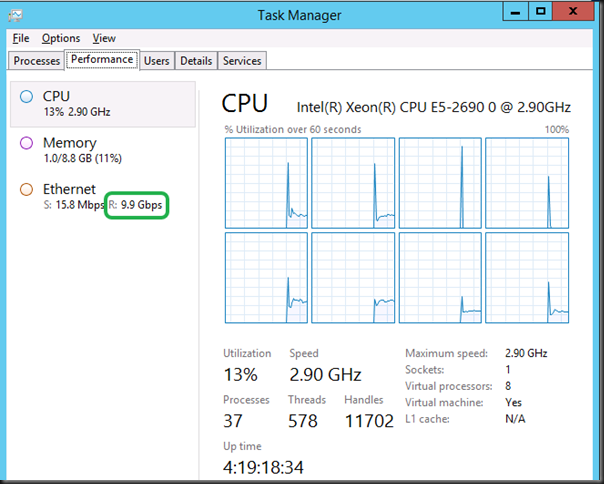

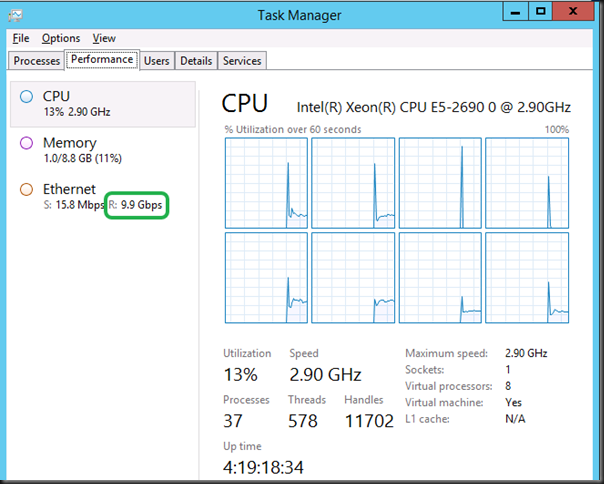

Inside the guest we see vRSS in action as multiple cores are put into action to handle the interrupts of incoming traffic. This takes away the single vCPU bottleneck.
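To make the idea a bit more tangible, here is a minimal Python sketch of RSS-style spreading. It is not how Windows implements it: real RSS uses a Toeplitz hash and an indirection table, while this toy uses `zlib.crc32` as a stand-in hash and a plain modulo. The function names, IP addresses and ports are made up for illustration. The point is only that each flow is hashed on its 4-tuple and the hash decides which vCPU handles it.

```python
import zlib
from collections import Counter

def pick_vcpu(src_ip, src_port, dst_ip, dst_port, vcpu_count):
    """Map a flow to a vCPU index by hashing its 4-tuple.

    crc32 is only a stand-in for the real RSS hash; it is used here
    because it is deterministic and ships with the standard library.
    """
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % vcpu_count

def spread_flows(flows, vcpu_count):
    """Count how many flows land on each vCPU index."""
    return Counter(pick_vcpu(*flow, vcpu_count) for flow in flows)

# Hypothetical workload: 64 flows from VM1 to VM2 that differ only in source port.
flows = [("10.0.0.1", 50000 + i, "10.0.0.2", 445) for i in range(64)]

single_core = spread_flows(flows, vcpu_count=1)  # every flow ends up on vCPU 0
multi_core = spread_flows(flows, vcpu_count=8)   # flows may land on any of 8 vCPUs

print("1 vCPU :", dict(single_core))
print("8 vCPUs:", dict(sorted(multi_core.items())))
```

Because the hash is per flow, a single TCP stream still sticks to one core; it is the mix of many flows that gets spread out.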

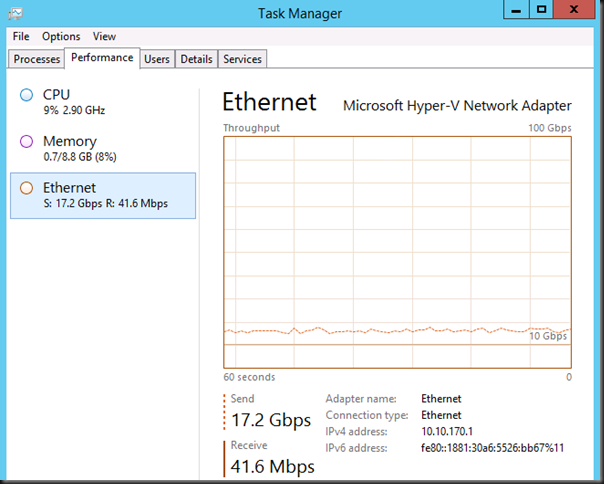

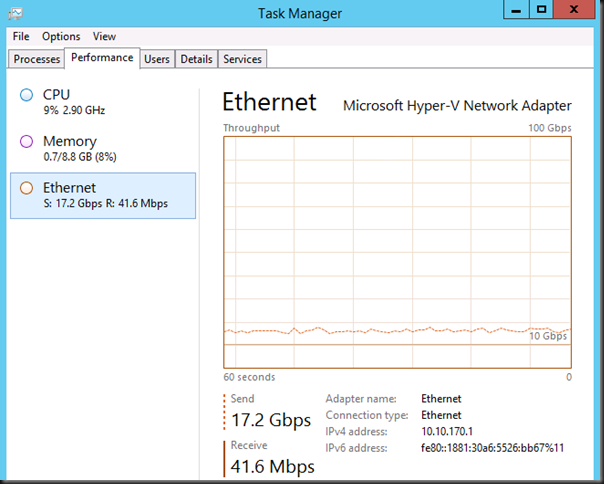

And the result is a sweet 17.2Gbps from VM1 to VM2. For the sharp-eyed among you: they are on the same host, so yes, in this case we get top bandwidth as the traffic doesn’t have to go across the wire over the 2*10Gbps NIC team but stays within the same host or, better, within the vSwitch.

The GUI is very friendly and suggests I can go to 100Gbps networking hardware. Well, not yet I’m afraid, but I’ve taken note.

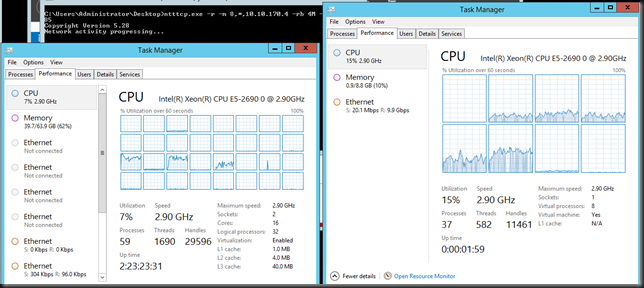

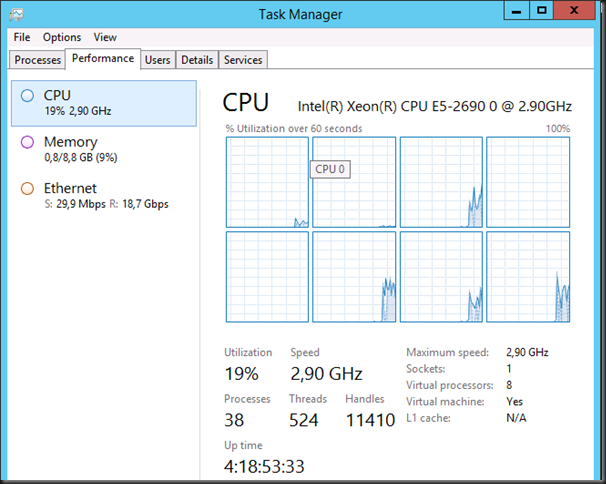

Here’s what it looks like when the sending and receiving VM are on different hosts and the vSwitch is connected to a 2*10Gbps team, switch independent, dynamic mode. You “only” get 10Gbps as the team can send on all members but receive on only one. But that’s still fine.
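As a back-of-the-envelope sketch of that send/receive asymmetry, the little Python model below encodes just the rule stated above: outbound traffic can use every team member, inbound traffic for a given VM lands on one member. The `NicTeam` class and its method names are made up for this illustration and ignore protocol overhead and everything else that happens in real life.

```python
from dataclasses import dataclass

@dataclass
class NicTeam:
    members: int         # number of NICs in the team
    member_gbps: float   # line rate of each team member

    def send_ceiling_gbps(self) -> float:
        # Outbound traffic can be spread over all team members.
        return self.members * self.member_gbps

    def receive_ceiling_per_vm_gbps(self) -> float:
        # Inbound traffic for a given VM arrives on a single member.
        return self.member_gbps

team = NicTeam(members=2, member_gbps=10.0)
print("send ceiling   :", team.send_ceiling_gbps(), "Gbps")
print("receive per VM :", team.receive_ceiling_per_vm_gbps(), "Gbps")
```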

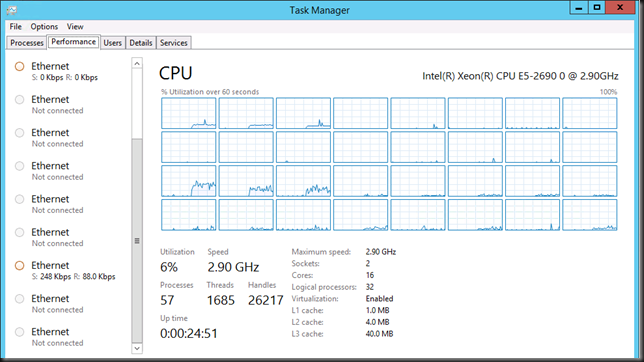

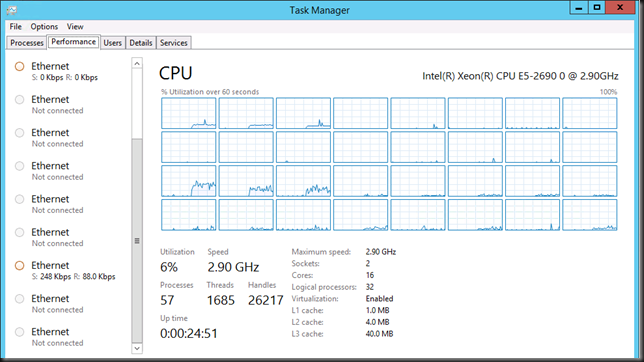

So what does this look like on the host?

Here you see Dynamic VMQ in action. To prevent one core from becoming overloaded, the host puts more of them into service. This depends on the load and it’s dynamic. Hence Dynamic VMQ. While VMQ was great, it was still a limiting factor as you used to be tied to one core per VM.

This means that our network traffic processing is no longer limited by the OS or, better put, by the use of a single core, both inside the VM and on the host. Our bottleneck now is the maximum throughput the NICs can deliver. In our 2 member NIC team that’s 20Gbps max under the right circumstances. Yup. Line speed. Need more? Throw 40Gbps NICs at the problem, or even 100Gbps pretty soon. Windows Server 2012 R2 is ready for this.

Sharing the wealth

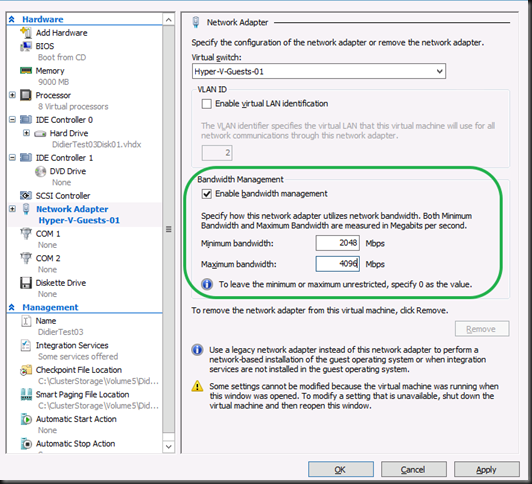

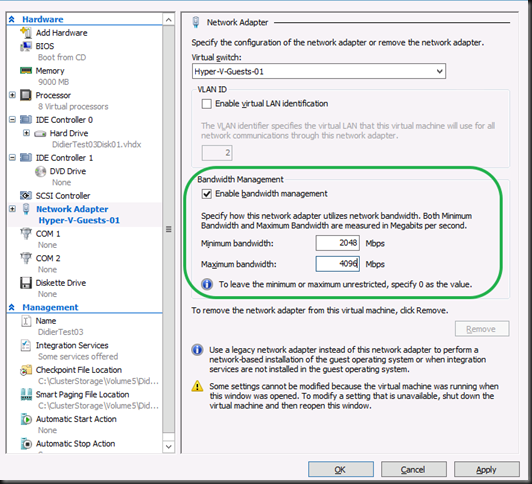

Now to make sure a bunch of these VMs on your cluster don’t starve the rest of the VM population with their greed for and lust after bandwidth you have the option of using Bandwidth management on the VM NIC.

This is one of the cases where this option can be very useful.
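To get a feel for what a maximum bandwidth setting does to a greedy VM, here is a tiny Python sketch. It only models the cap itself (each VM gets the lesser of what it asks for and its maximum); it says nothing about how Hyper-V actually schedules traffic. The VM names, demands and the 4000 Mbps cap are hypothetical numbers.

```python
def apply_max_cap(demands_mbps, max_cap_mbps):
    """Limit each VM's bandwidth to at most max_cap_mbps."""
    return {vm: min(demand, max_cap_mbps) for vm, demand in demands_mbps.items()}

# Hypothetical demands in Mbps: two greedy VMs and one modest one.
demands = {"vm1": 17000, "vm2": 9000, "vm3": 500}

capped = apply_max_cap(demands, max_cap_mbps=4000)
print(capped)                # {'vm1': 4000, 'vm2': 4000, 'vm3': 500}
print(sum(capped.values()))  # 8500
```

The modest VM is untouched, while the two greedy ones can no longer eat the whole team between them.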

VM mobility without boundaries

Also consider this capability and these kinds of heavy workloads combined with SMB Direct. It offloads the processing of CSV traffic, Live Migration, Shared Nothing Live Migration and, under certain conditions, Storage Live Migration to the NIC by leveraging RDMA. In other words, it doesn’t tax your host CPUs. This means you can have these kinds of network traffic loads going on and still live migrate at will. Scalable VM mobility anyone? You’ll understand what tremendous network loads the combination of Windows Server 2012 R2 Hyper-V host & guests can handle.

It’s virtualization, not magic

OK, time for a little reality check, just in case it’s needed. Virtualization is technology, not magic. For all of you “thinking” you can push 20Gbps into 20 VMs simultaneously on a single host over just a single team of 2*10Gbps … ah well, get real. OK?
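The arithmetic behind that reality check, as a quick Python sketch. The function name is made up and the model is a best case: it assumes the team's full aggregate bandwidth is usable and is split evenly, which real traffic rarely manages.

```python
def average_share_gbps(team_members, member_gbps, vm_count):
    """Best-case average bandwidth per VM when all VMs push at once."""
    if vm_count <= 0:
        raise ValueError("vm_count must be positive")
    return team_members * member_gbps / vm_count

share = average_share_gbps(team_members=2, member_gbps=10, vm_count=20)
print(f"{share:.1f} Gbps per VM")  # 1.0 Gbps per VM
```

So with 20 VMs hammering a 2*10Gbps team at the same time, each one averages about 1Gbps at best, not 20Gbps.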

Teaser

I can talk about the benefits of vRSS all day and show you some more screenshots, but I’m working on “vRSS, The Movie”. Perhaps even a sequel already.

To my buddies, acquaintances & connections, I’ll see you soon. We have a lot to learn & discuss. That’s one of the reasons I’m off a bit earlier. It helps with the jet lag but it also gives me time to meet up with friends and acquaintances I’ve made in the Puget Sound area and talk shop. This helps to keep in touch with what’s happening around the world and to understand where their priorities are and what’s keeping them occupied. While I’m a firm believer in remote and teleworking, there is value in getting your boots on the ground every now and then. It prevents tunnel vision and helps avoid teleology in our views while enhancing early detection of anything from small trend changes to wholesale tectonic shifts. This is not to be confused with thinking you have a crystal ball or anything.
