Here’s the deal. While Windows NLB on Hyper-V guests might seem to work OK you can run into issues. Our biggest challenge was to keep the WNLB cluster functional when all or multiple node of the cluster are live migrated simultaneously. The live migration goes blazingly fast via SMB over RDMA nut afterwards we have a node or nodes in an problematic state and clients being send to them are having connectivity issues.

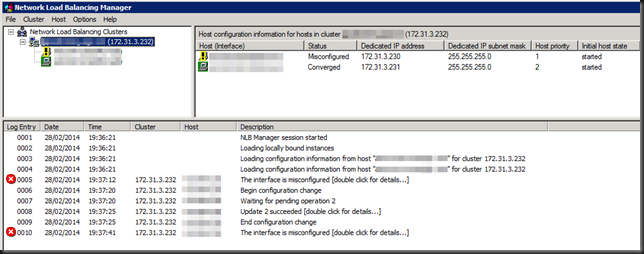

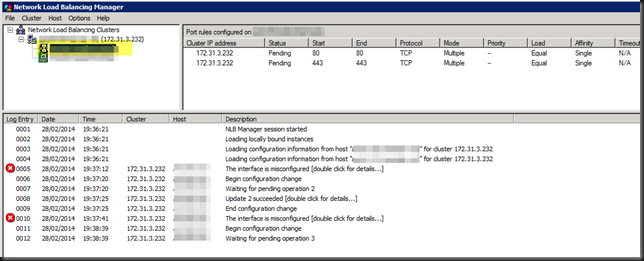

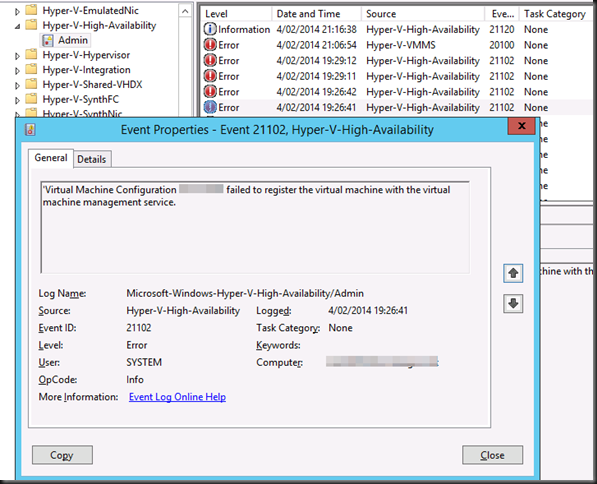

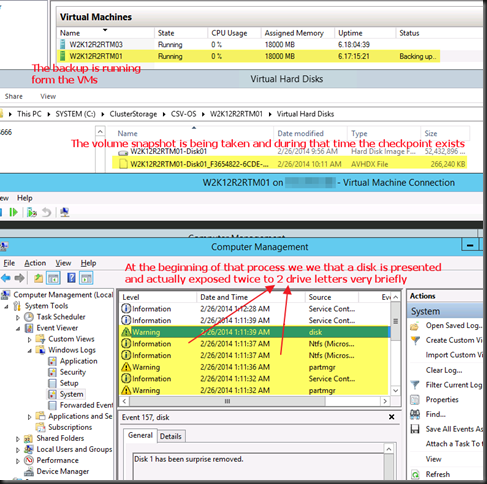

After live migrating multiple or all nodes of the Windows NLB cluster simultaneously the cluster ends up in this state:

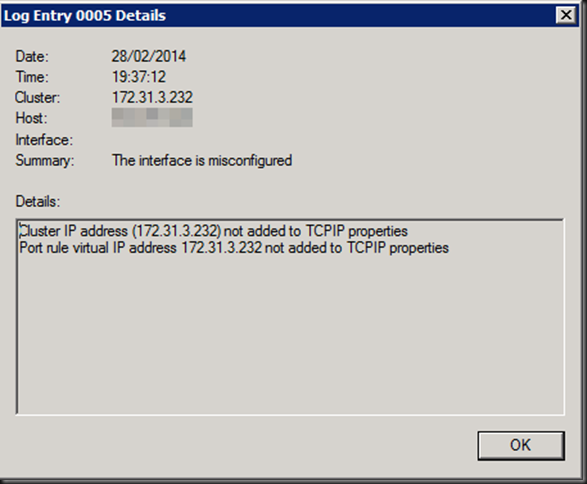

A misconfigured interface. If you click on the error for details you’ll see

Not good, and no we did not add those IP addresses manually or so, we let the WNLB cluster handle that as it’s supposed to do. We saw this with both fixed MAC addresses (old school WNLB configuration of early Hyper-V deployments) and with dynamic MAC addresses. On all the nodes MAC spoofing is enabled on the appropriate vNICs.

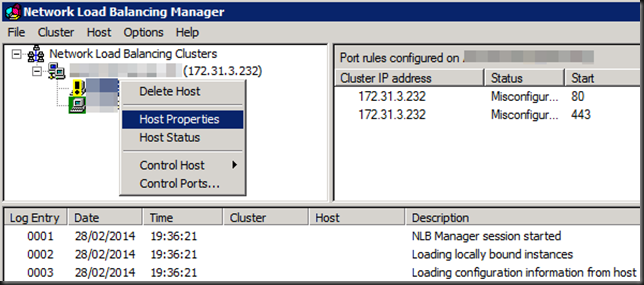

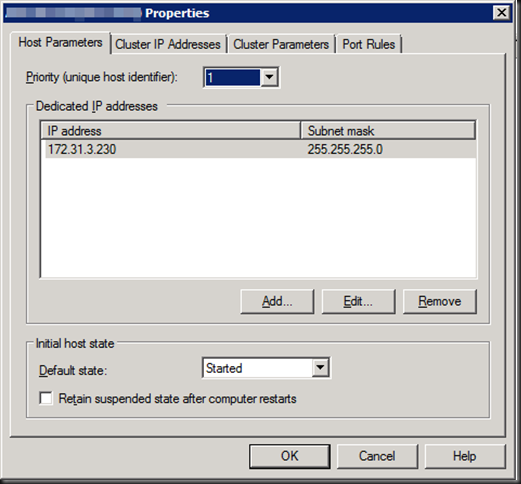

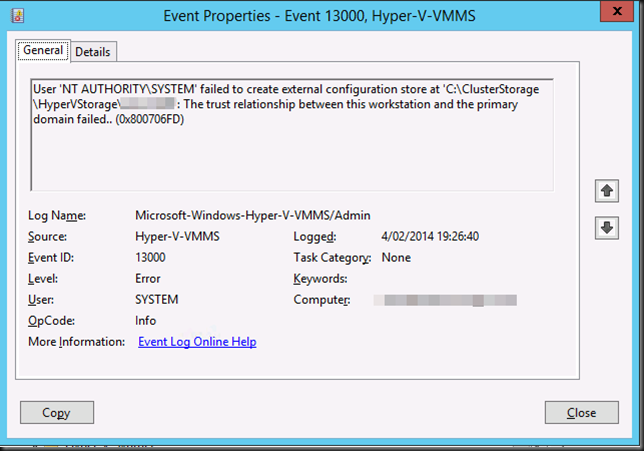

The temporary fix is rather easy. However it’s a manual intervention and as such not a good solution. Open up the properties of the offending node or nodes (for every NLB cluster that running on that node, you might have multiple).

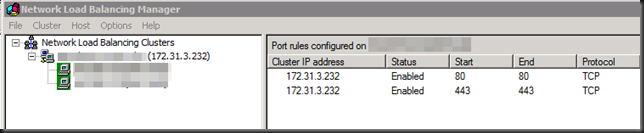

Click “OK” to close it …

… and you’re back in business.

Scripting this out somehow with nlb.exe or PowerShell after a guest gets live migrated is not the way to go either.

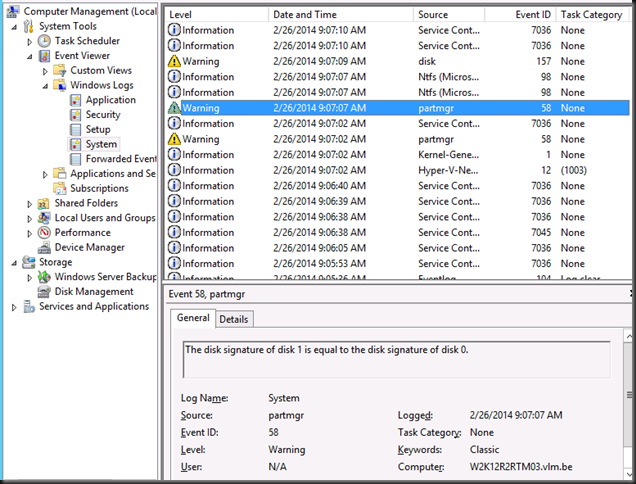

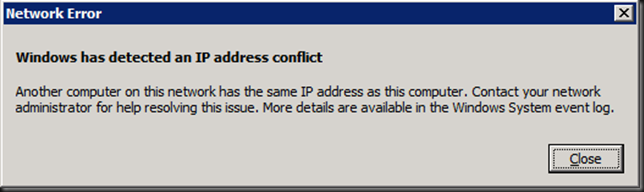

But that’s not all. In some case you’ll get an extra error you can ignore if it’s not due to a real duplicate IP address on your network:

We tried rebooting the guest, dumping and recreating the WNLB cluster configuration from scratch. Clearing the switches ARP tables. Nothing gave us a solid result.

No you might say, Who live migrates multiple WNLB nodes at the same time? Well any two node Hyper-V cluster that uses Cluster Aware Updating get’s into this situation and possibly bigger clusters as well when anti affinity is not configured or chose to keep guest on line over enforcing said anti affinity, during a drain for an intervention on a cluster perhaps etc. It happens. Now whether you’ll hit this issue depends on how you configure and use your switches and what configuration of LBFO you use for the vSwitches in Hyper-V.

How do we fix this?

First we need some back ground and there is way to much for one blog actually. So many permutations of vendors, switches, configurations, firmware & drivers …

Unicast

This is the default and Thomas Shinder has an aging but great blog post on how it works and what the challenges are here. Read it. It you least good option and if you can you shouldn’t use it. With Hyper-V we and the inner workings and challenges of a vSwitch to the mix. Basically in virtualization Unicast is the least good option. Only use it if your network team won’t do it and you can’t get to the switch yourself. Or when the switch doesn’t support mapping a unicast IP to a multicast MAC address. Some tips if you want to use it:

- Don’t use NIC teaming for the virtual switch.

- If you do use NIC teaming for the virtual switch you should (must):

- use switch independent teaming on two different switches.

- If you have a stack or just one switch use multicast or even better IGMP with multicast to avoid issues.

I know, don’t shout at me, teaming on the same switch, but it does happen. At least it protects against NIC issues which are more common than switch or switch port failures.

Multicast

Again, read Thomas Shinder his great blog post on how it works and what the challenges are here.

It’s an OK option but I’ll only use it if I have a switch where I can’t do IGMP and even then I do hope I can do two things:

- Add a static entry for the cluster IP address / MAC address on your switch if it doesn’t support IGMP multicast:

- arp [ip] [cluster multicast mac*] ARPA > arp 172.31.1.232 03bf.bc1f.0164 ARPA

- To prevent switch flooding occurs, as with the unicast configure your switch which ports to use for multicast traffic:

- mac-address-table static [cluster multicast mac] [vlan id] [interface] > mac-address-table static 03bf.bc1f.0164 vlan 10 interface Gi1/0/1

The big rotten thing here is that this is great when you’re dealing with physical servers. They don’t tend to jump form switch port to switch port and switch to switch on the fly like a virtual machine live migrating. You just can’t hardcode all the vSwitch ports into the physical switches, one they move and depending on the teaming choice there are multiple ports, switches etc …it’s not allowed and not possible. So when using multicast in a Hyper-V environment stick to 1). But here’s an interesting fact. Many switches that don’t support 1) do support 2). Fun fact is that most commodity switches do seems to support IGMP … and that’s your best choice anyway! Some high end switches don’t support WNLB well but in that category a hardware load balancer shouldn’t be an issue. But let’s move on to my preferred option.

IGMP With Multicast (see IGMP Support for Network Load Balancing)

This is your best option and even on older, commodity switches like a DELL PowerConnect 5424 or 5448 you can configure this. It was introduced in Windows Server 2003 (did not exist in NT4.0 or W2K). It’s my favorite (well, I’d rather use hardware load balancing) in a virtual environment. It works well with live migration, prevents switch flooding and with some ingenuity and good management we can get rid of other quirks.

So Didier, tell us, how to we get our cookie and eat it to?

Well, I will share the IGMP with Multicast solution with you in a next blog. Do note that as stated above there are some many permutations of Windows, teaming, WNL, switches & firmware/drivers out there I give no support and no guarantees. Also, I want to avoid writing a 100 white paper on this subject?. If you insist you want my support on this I’ll charge at least a thousand Euro per hour, effort based only. Really. And chances are I’ll spend 10 hours on it for you. Which means you could have bought 2 (redundancy) KEMP hardware NLB appliances and still have money left to fly business class to the USA and tour some national parks. Get the message?

But don’t be sad. In the next blog we’ll discuss some NIC teaming for the vSwitch, NLB configuration with IGMP with Multicast and show you a simple DELL PowerConnect 5424 switch example that make WNLB work on a W2K12R2 Hyper-V cluster with NIC teaming for the vSwitch and avoids following issues:

- Messed up WNLB configuration after the simultaneous live migration of all or multiple NLB Nodes.

- You avoid “false” duplicate IP address goof ups (at the cost of IP address hygiene management).

- You prevent switch port flooding.

I’d show you on redundant Force10 S4810 but for that I need someone to ship me some of those with SFP+ modules for the lab, free of cost for me to keep ![]()

Conclusion

It’s time to start saying goodbye to Windows NLB. The way the advanced networking features are moving towards layer 3 means that “useful hacks” like MAC spoofing for Windows NLB are going no longer going to work. But until you have implement hardware load balancing I hope this blog has given you some ideas & tips to keep Windows NLB running smoothly for now. I’ve done quite few and while it takes some detective work & testing, so far I have come out victorious. Eat that Windows NLB!

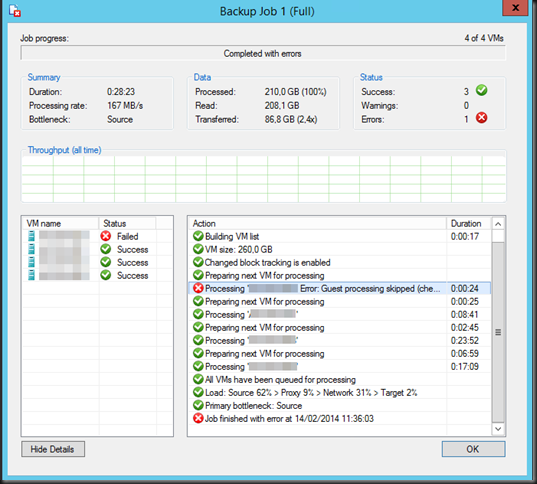

![image_thumb[1] image_thumb[1]](https://blog.workinghardinit.work/wp-content/uploads/2014/02/image_thumb1_thumb.png)

![image_thumb[3] image_thumb[3]](https://blog.workinghardinit.work/wp-content/uploads/2014/02/image_thumb3_thumb1.png)