We had a kind reminder recently that we shouldn’t forget to complete all steps in a Hyper-V cluster node upgrade process. The proof of a plan lies in the execution. We needed to configure a virtual machine with a whopping 50GB of memory for an experiment. No sweat, we have plenty of memory in those new cluster nodes. But when we tried to do so, it failed with a rather obscure error in System Center Virtual Machine Manager 2008 R2:

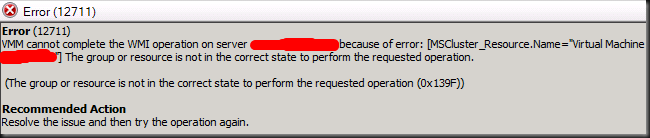

Error (12711)

VMM cannot complete the WMI operation on server hypervhost01.lab.test because of error: [MSCluster_Resource.Name="Virtual Machine MYSERVER"] The group or resource is not in the correct state to perform the requested operation.

(The group or resource is not in the correct state to perform the requested operation (0x139F))

Recommended Action

Resolve the issue and then try the operation again.

One option we considered was that SCVMM 2008 R2 didn’t want to assign that much memory because one of the old hosts was still a member of the cluster and “only” has 48GB of RAM. But nothing that advanced was going on here. A look at the logs revealed the culprit pretty fast: lack of disk space.

We saw the following errors in the Microsoft-Windows-Hyper-V-Worker-Admin event log:

Log Name: Microsoft-Windows-Hyper-V-Worker-Admin

Source: Microsoft-Windows-Hyper-V-Worker

Date: 17/08/2011 10:30:36

Event ID: 3050

Task Category: None

Level: Error

Keywords:

User: NETWORK SERVICE

Computer: hypervhost01.lab.test

Description:

‘MYSERVER’ could not initialize memory: There is not enough space on the disk. (0x80070070). (Virtual machine ID DEDEFFD1-7A32-4654-835D-ACE32EEB60EE)

Log Name: Microsoft-Windows-Hyper-V-Worker-Admin

Source: Microsoft-Windows-Hyper-V-Worker

Date: 17/08/2011 10:30:36

Event ID: 3320

Task Category: None

Level: Error

Keywords:

User: NETWORK SERVICE

Computer: hypervhost01.lab.test

Description:

‘MYSERVER’ failed to create memory contents file ‘C:\ClusterStorage\Volume1\MYSERVER\Virtual Machines\DEDEFFD1-7A32-4654-835D-ACE32EEB60EE\DEDEFFD1-7A32-4654-835D-ACE32EEB60EE.bin’ of size 50003 MB. (Virtual machine ID DEDEFFD1-7A32-4654-835D-ACE32EEB60EE)

Sure enough, a smaller amount of memory, 40GB, which is less than the remaining disk space on the CSV, did work. That made me remember we still needed to expand the LUNs on the SAN to provide the storage space for the large BIN files associated with these kinds of large memory configurations. Can you say "luxury problems"?

The BIN file contains the memory of a virtual machine or snapshot that is in a saved state. It requires the same amount of disk space as the memory assigned to the virtual machine, so it can take up a lot of room. Under "normal" conditions these files don’t get this big, and we keep a reasonable buffer of free space on the LUNs anyway for performance reasons, growth etc. But this was a bit more than that buffer could handle.
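To make the rule of thumb concrete, here is a minimal Python sketch of the check we could have run up front: does a BIN file as large as the assigned memory fit in the free space on the volume? The function names `bin_file_fits` and `check_volume` are our own invention for illustration, and we assume the "MB" in the event log means 1024 × 1024 bytes. It only compares sizes; it does not talk to Hyper-V or SCVMM in any way.

```python
import shutil

# Assumption: the "MB" in the Hyper-V event text is a binary megabyte.
BYTES_PER_MB = 1024 * 1024


def bin_file_fits(memory_mb: int, free_bytes: int) -> bool:
    """Return True if a BIN file for `memory_mb` of VM memory fits in `free_bytes`.

    Hypothetical helper: it relies on the rule that the BIN file is as
    large as the memory assigned to the virtual machine.
    """
    return memory_mb * BYTES_PER_MB <= free_bytes


def check_volume(path: str, memory_mb: int) -> bool:
    """Check the volume that holds `path` using its current free space."""
    free_bytes = shutil.disk_usage(path).free
    return bin_file_fits(memory_mb, free_bytes)
```

Run on the host itself, something like `check_volume(r"C:\ClusterStorage\Volume1", 50003)` would answer the question for the CSV and the 50003 MB file from the event above.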

The planning already stated that we needed to expand the LUNs a bit to deal with these kinds of memory hogs, so the storage to do so was available and the LUN wasn’t maxed out yet. If not, we would have been in a bit of a pickle.
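For the planning side, a rough sketch of the arithmetic might look like the following. The helper name `lun_expansion_needed_mb` and all numbers in the usage note are hypothetical. We assume the list holds only the memory of virtual machines whose BIN files are not yet on the volume, and that the reserve is whatever free-space buffer you want to keep for performance and growth.

```python
from typing import Iterable


def lun_expansion_needed_mb(
    new_vm_memory_mb: Iterable[int],
    free_mb: int,
    reserve_mb: int,
) -> int:
    """Return how many MB the LUN must grow by, or 0 if it is large enough.

    Hypothetical planning helper. Each new VM needs a BIN file as large as
    its assigned memory, and `reserve_mb` of free space must remain afterwards.
    """
    required_mb = sum(new_vm_memory_mb) + reserve_mb
    return max(0, required_mb - free_mb)
```

With made-up figures, two new virtual machines of 50003 MB and 8192 MB, 46080 MB free and a 20480 MB reserve, `lun_expansion_needed_mb([50003, 8192], 46080, 20480)` returns 32595, which is how far the LUN would need to grow before those machines could start.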

So there you go, a real-life example of what Aidan Finn warns about when using dynamic memory. Also see KB 2504962 “Dynamic Memory allocation in a Virtual Machine does not change although there is available memory on the host”, which discusses the scenario where dynamic memory allocation seems not to work due to lack of disk space. Don’t forget about your disk space requirements for the BIN files when using virtual machines with this much memory assigned. They tend to consume considerable chunks of your storage space. And even if you don’t forget about it in your planning, please don’t forget to execute every step of the plan.