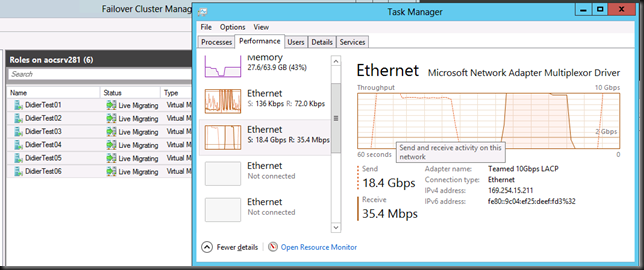

Do you remember my 2011 blog post on optimizing some system settings to get way better Live Migration performance with 10Gbps NICs? It’s over here: Optimizing Live Migrations with a 10Gbps Network in a Hyper-V Cluster. That advice still holds true, but the power optimization settings and the interaction between DELL Generation 12 servers and Windows Server 2012 have improved significantly. Where with Windows Server 2008 R2 we could hardly get above 16% bandwidth consumption out of the box with Live Migration over a 10Gbps NIC, today this just works fine.

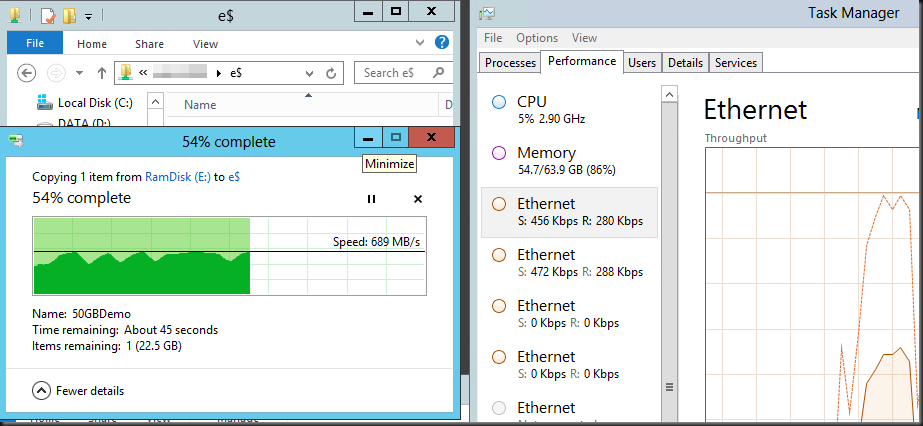

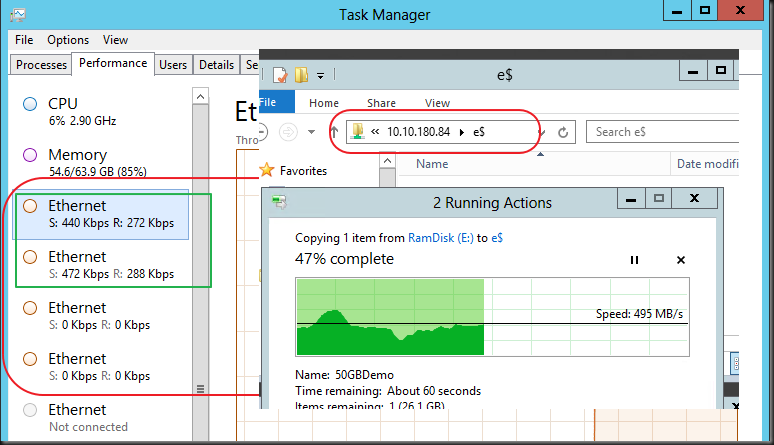

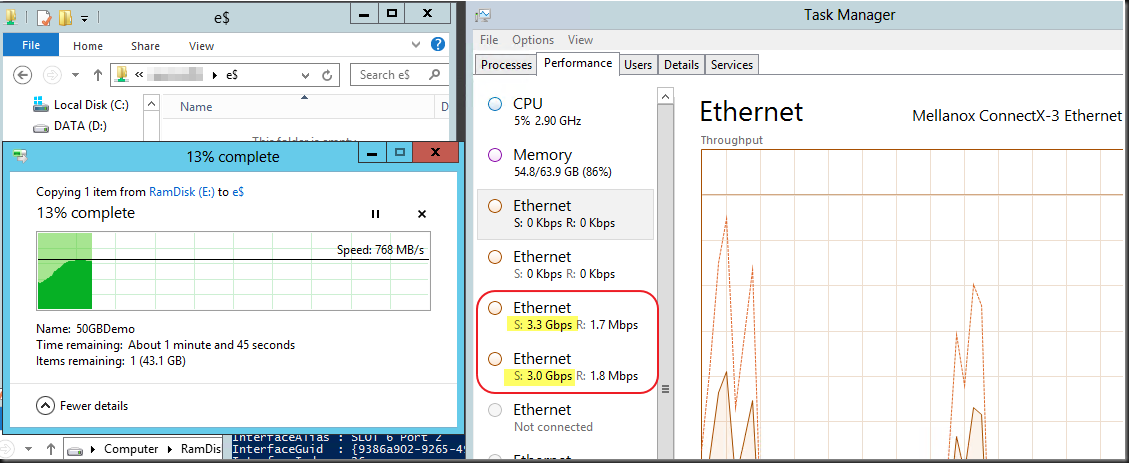

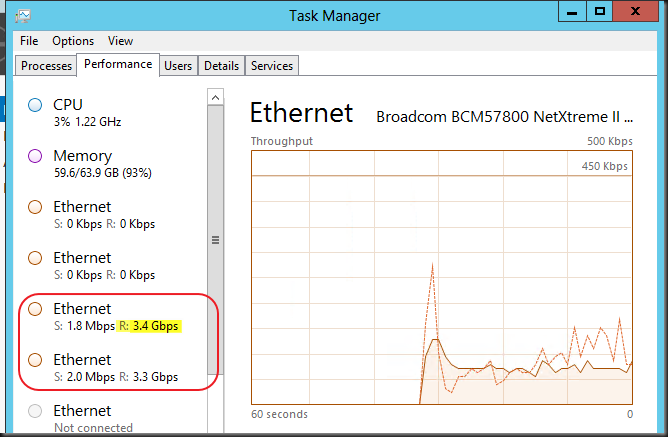

Don’t believe me? You do now?

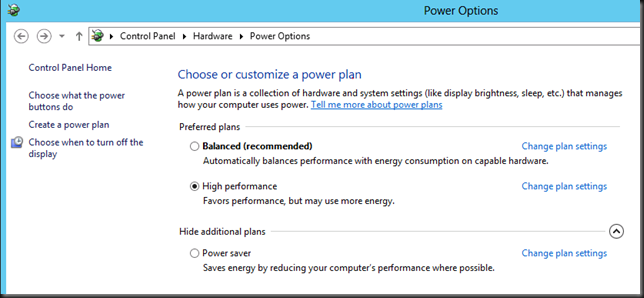

For overall peak system performance you might want to adjust your Windows configuration settings to run the High Performance preferred power plan, if that’s needed.
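If you prefer to script this rather than click through the Control Panel, a minimal sketch could look like the one below. It assumes the built-in High performance plan with its well-known GUID is present on the host and that `powercfg` is on the path. The function names are my own, and by default nothing is executed (dry run), so you can review the command first.

```python
import platform
import subprocess

# Well-known GUID of the built-in "High performance" power plan on Windows.
# Assumption: the plan has not been removed or replaced on your host.
HIGH_PERFORMANCE_GUID = "8c5e7fda-e8bf-4a96-9a85-a6e23a8c635c"


def build_set_active_command(scheme_guid=HIGH_PERFORMANCE_GUID):
    """Return the powercfg command line that activates the given power plan."""
    return ["powercfg", "/setactive", scheme_guid]


def activate_high_performance(dry_run=True):
    """Activate the High performance plan.

    The command is only executed when dry_run is False and we are on Windows;
    in every other case it is just returned so you can inspect it.
    """
    command = build_set_active_command()
    if dry_run or platform.system() != "Windows":
        return command  # nothing executed
    subprocess.run(command, check=True)  # raises if powercfg reports an error
    return command


if __name__ == "__main__":
    # Print the command that would be run, without changing anything.
    print(" ".join(activate_high_performance(dry_run=True)))
```

Run it from an elevated prompt with `dry_run=False` when you actually want to switch plans, and check the result afterwards with `powercfg /getactivescheme`.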

You might no longer need to dive into the BIOS. Of course, if you have issues because your hardware isn’t that intelligent and/or you are still running Windows Server 2008 R2, you do want to go there. And when it comes to speed we want it all and we want it now, in which case you still want to dive into the BIOS and tweak it, even on the DELL 12th Generation hardware. Test and confirm, I’d say, but you should notice a difference, albeit a smaller one than with Windows Server 2008 R2.

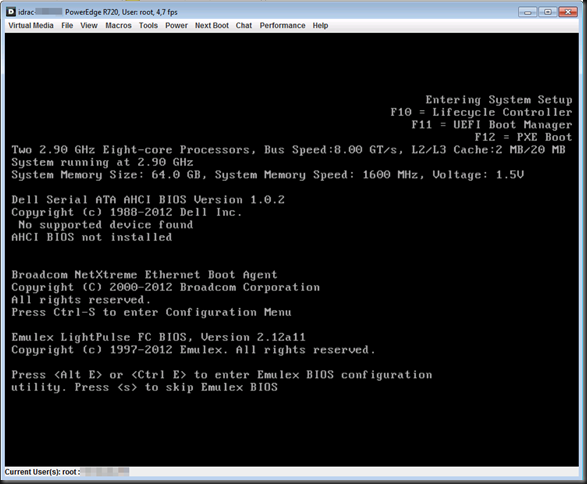

Well, let’s revisit this, as we are no longer working with Generation 10 or 11 servers with an “aged” BIOS. We have decommissioned the Generation 10 servers, upgraded the BIOS of our Generation 11 servers and acquired Generation 12 servers. We also now use UEFI for our Hyper-V host installations. The time has come to become familiar with those and the benefits they bring. It also future proofs our host installations.

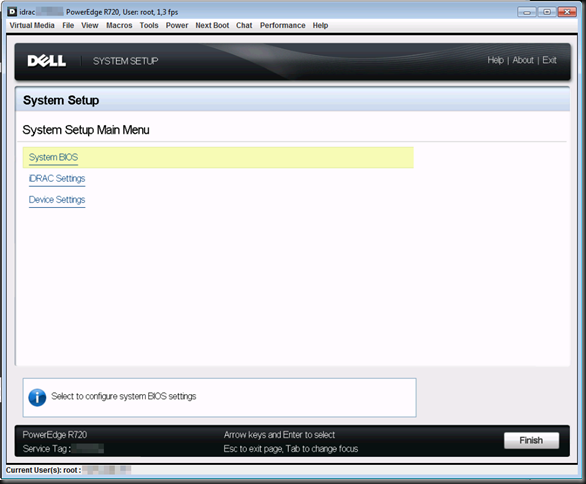

So where and how do I change the power configuration settings now? Let’s walk through one together. Reboot your server and during the boot cycle hit F2 to enter System Setup.

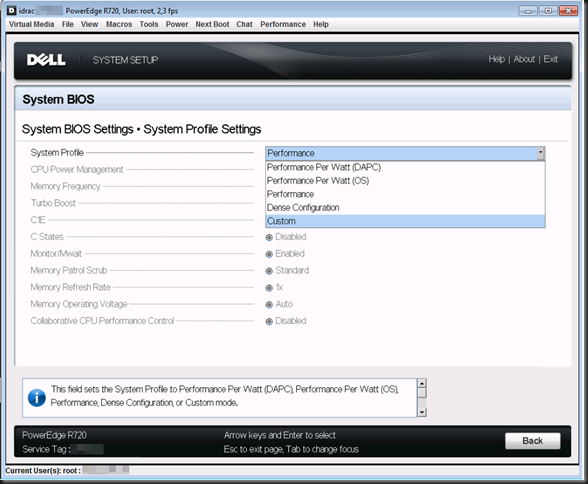

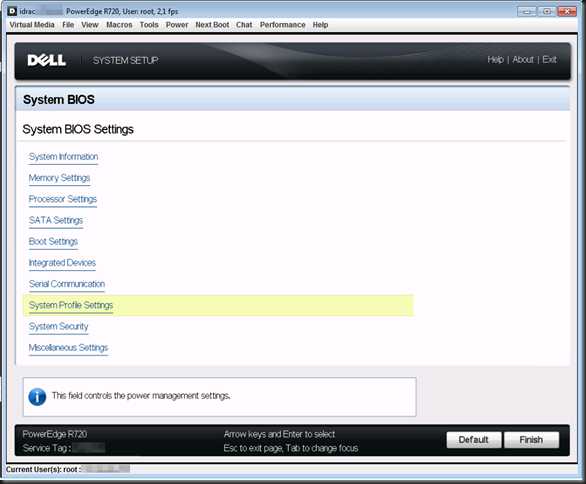

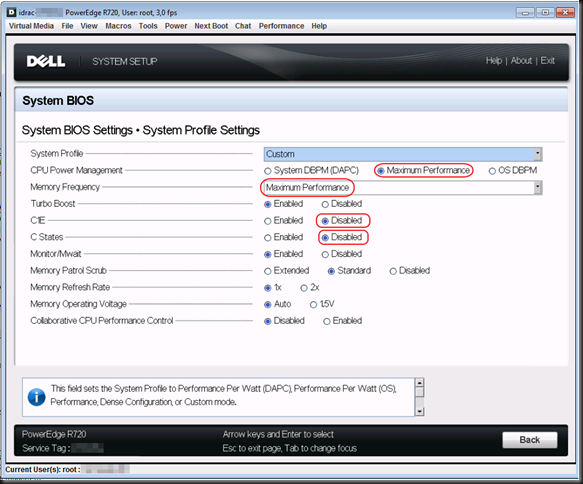

Click on System Profile Settings

The settings you want to adapt are:

- CPU Power Management should be on Maximum Performance

- Memory Frequency should be on Maximum Performance

- C1E states should be disabled

- C states should be disabled

That’s it. The configuration below optimizes the power settings on a DELL Generation 12 server like the R720.

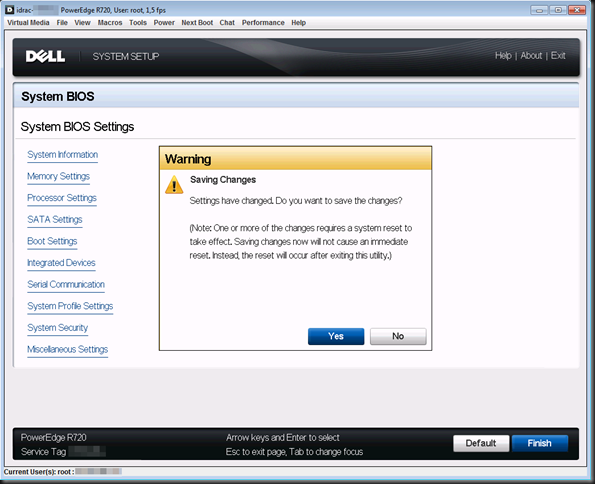
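When you have to do this on several hosts, it helps to note down what each host is actually set to and compare that against the four settings listed above. Below is a minimal sketch of such a check. The setting names are just labels I chose for this example, not real BIOS attribute identifiers, and it assumes you transcribe the current values yourself, for instance from the System Setup screen.

```python
# Desired values from the list above. The keys are illustrative labels,
# not actual BIOS attribute names.
DESIRED_PROFILE = {
    "CPU Power Management": "Maximum Performance",
    "Memory Frequency": "Maximum Performance",
    "C1E": "Disabled",
    "C States": "Disabled",
}


def find_mismatches(current, desired=DESIRED_PROFILE):
    """Return {setting: (current_value, desired_value)} for each setting that differs.

    Settings missing from `current` are reported with a current value of None.
    """
    return {
        name: (current.get(name), wanted)
        for name, wanted in desired.items()
        if current.get(name) != wanted
    }


if __name__ == "__main__":
    # Hypothetical values noted down from one host.
    noted = {
        "CPU Power Management": "Maximum Performance",
        "Memory Frequency": "Maximum Performance",
        "C1E": "Enabled",
        "C States": "Disabled",
    }
    for name, (have, want) in find_mismatches(noted).items():
        print(f"{name}: is {have!r}, should be {want!r}")
```

An empty result means the host matches the list; anything else tells you which setting to revisit in System Profile Settings.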

When done, click “Back” and then “Finish”. A warning will pop up and you need to confirm that you want to save your changes. Click “Yes” if you indeed want to do this.

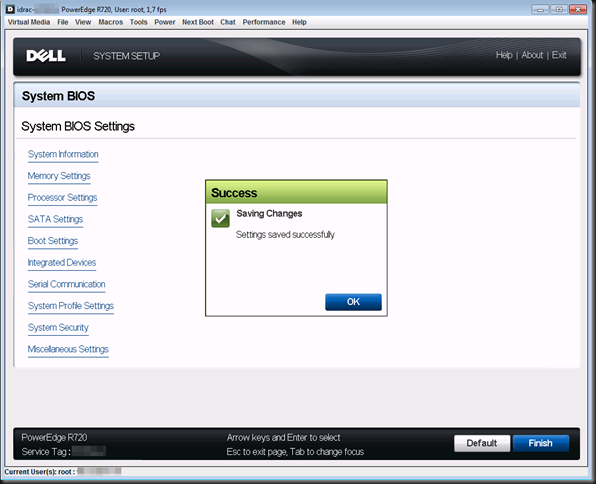

You’ll get a nice confirmation that your settings have been saved. Click “OK” and then click Finish.

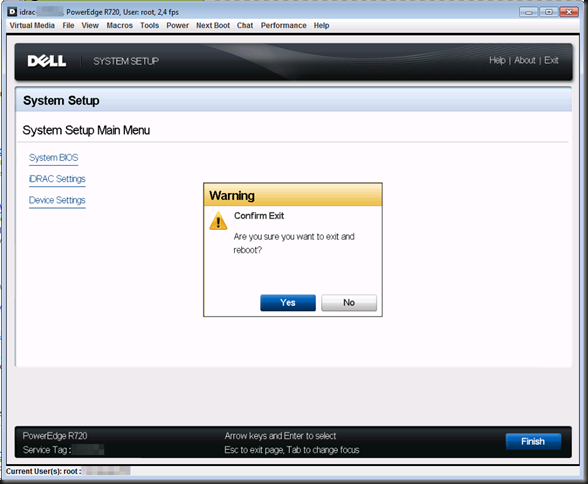

Confirm that you want to exit and reboot by clicking “Yes” and voilà, when the server comes back on it will be running at full speed, at the cost of more power consumption, extra generated heat and cooling.

Remember, if you don’t need to run at full power, don’t. And do consider using Dynamic Optimization and Power Optimization in System Center Virtual Machine Manager 2012. Save a penguin!