The DELL SonicWALL product range supports both policy based and route based VPN configurations. Specifically for Azure they have a configuration guide out there that will help you configure either.

Technically, networking people prefer to use route based configuration. It’s more flexible to maintain in the long run. As life is not perfect and we do not control the universe, policy based is also used a lot. SonicWALL used to be on the supported list for both a Static and Dynamically route Azure VPN connections. According to this thread it was taken off because some people had reliability issues with performance. I hope this gets fixed soon in a firmware release. Having that support is good for DELL as a lot of people watch that list to consider what they buy and there are not to many vendors on it in the more budget friendly range as it is. The reference in that thread to DELL stating that Route-Based VPN using Tunnel Interface is not supported for third party devices, is true but a bit silly as that’s a blanket statement in the VPN industry where there is a non written rule that you use route based when the devices are of the same brand and you control both points. But when that isn’t the case, you go a policy based VPN, even if that’s less flexible.

My advise is that you should test what works for you, make your choice and accept the consequences. In the end it determines only who’s going to have to fix the problem when it goes wrong. I’m also calling on DELL to sort this out fast & good.

A lot of people get confused when starting out with VPNs. Add Azure into the equation, where we also get confused whilst climbing the learning curve, and things get mixed up. So here a small recap of the state of Azure VPN options:

- There are two to create a Site-to-Site VPN VPN between an Azure virtual network (and all the subnets it contains) and your on premises network (and the subnets it contains).

- Static Routing: this is the one that will work with just about any device that supports policy based VPNs in any reasonable way, which includes a VPN with Windows RRAS.

- Dynamic Routing: This one is supported with a lot less vendors, but that doesn’t mean it won’t work. Do your due diligence. This also works with Windows RRAS

Note: Microsoft now has added a a 3rd option to it’s Azure VPN Gateway offerings, the High Performance VPN gateway, for all practical purposes it’s dynamic routing, but a more scalable version. Note that this does NOT support static routing.

The confusion is partially due to Microsoft Azure, network industry and vendor terminology differing from each other. So here’s the translation table for DELL SonicWALL & Azure

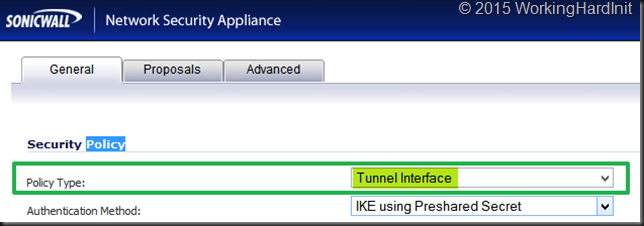

Dynamic Routing in Azure Speak is a Route-Based VPN in SonicWALL terminology and is called and is called Tunnel Interface in the policy type settings for a VPN.

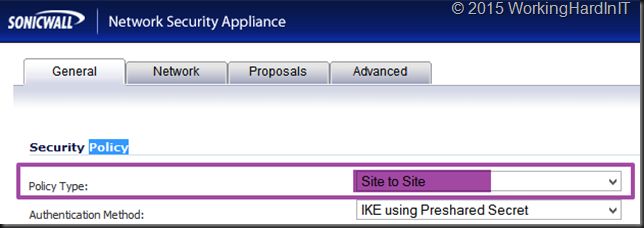

Static Routing in Azure Speak is a Policy-Based VPN in SonicWALL terminology and is called Site-To-Site in the “Policy Type” settings for a VPN.

- You can only use one. So you need to make sure you won’t mix the two on both sites as that won’t work for sure.

- Only a Pre-Shared Key (PSK) is currently supported for authentication. There is no support yet for certificate based authentication at the time of writing).

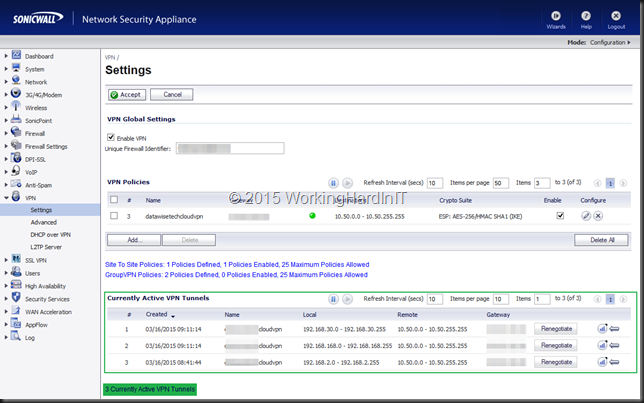

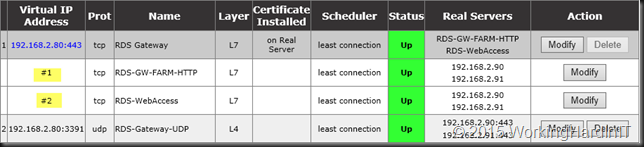

Also note that you can have 10 tunnels in a standard Azure site-to-site VPN which should give you enough wiggling room for some interesting scenarios. If not scale up to the high performance Azure site-to site VPN or move to Express Route. In the screenshot below you can see I have 3 tunnels to Azure from my home lab.