We’ve had snapshots, or rather checkpoints as we call them now for consistency amongst products, for the longest time in Hyper-V. I have always liked them and used them to my benefit. That’s what they are intended for. But you have to use them correctly, in a supported and smart manner. Some people (and not an insignificant number of them) did not read the manual and/or did not test their assumptions before trying something in production. Sometimes that leads to a lesson, sometimes it leads to tears.

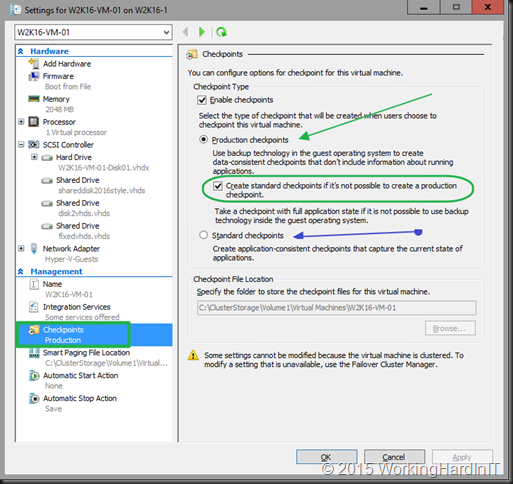

We now have the choice between two types of checkpoints: Production Checkpoints and Standard Checkpoints.

With a standard virtual machine checkpoint, all the memory state of the running applications gets stored, and when you apply the checkpoint it’s magically back. Doing this to a production SQL Server or Exchange Server, for example, causes (huge) problems. With some applications these problems are minor or transient, but it’s not a healthy, consistent state to be in and recovery has to happen. That recovery could happen automatically or it could require disaster recovery, depending on the situation at hand.

Production checkpoints are made in an application consistent manner. For this they leverage Volume Shadow Copy Services (VSS) in Windows guests, or File System Freeze in Linux guests, which puts the virtual machine into a safe state to create a checkpoint that can be restored like a VSS based, application consistent backup or SAN snapshot. This does mean that applying a production checkpoint requires the restored virtual machine to boot from an offline state, just like with a restored backup.
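To make that last point concrete, here is a minimal sketch of creating and applying a checkpoint from PowerShell. The virtual machine name `DemoVM` and the checkpoint name `Before-Patching` are made up for illustration, and the sketch assumes you run it on the Hyper-V host with the Hyper-V PowerShell module available.

```powershell
# Hypothetical names, used for illustration only.
$vmName         = 'DemoVM'
$checkpointName = 'Before-Patching'

# Create a checkpoint. Which type you get follows the VM's checkpoint setting.
Checkpoint-VM -Name $vmName -SnapshotName $checkpointName

# Later on, apply that checkpoint again.
Restore-VMSnapshot -VMName $vmName -Name $checkpointName -Confirm:$false

# After applying a production checkpoint the VM is off, so boot it yourself.
if ((Get-VM -Name $vmName).State -eq 'Off') {
    Start-VM -Name $vmName
}
```

The check on the state is there because a standard checkpoint of a running virtual machine brings it back running, so the explicit start is only needed in the production checkpoint case.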

The choice of checkpoint type can be made on a per virtual machine basis, which makes it flexible, as you can pick the best option for a particular virtual machine and a specific purpose. As you might have guessed, that still requires some insight, reading the manual and testing your assumptions. But you can now have the behavior you want, and that way too many people assumed they already had.

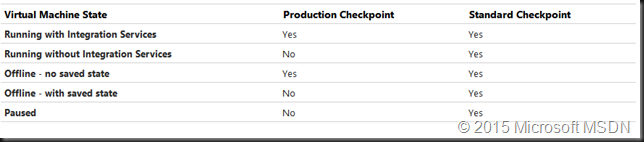

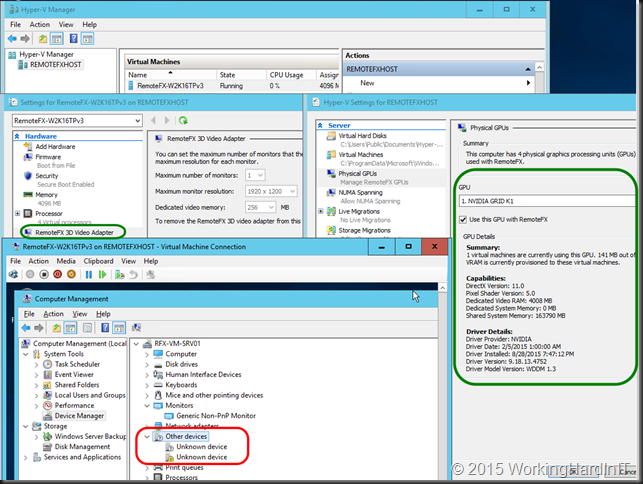

We also have the option of allowing or disallowing a standard checkpoint to be made if, for any reason, a production checkpoint cannot be made (for example because the VSS snapshot in a Windows guest or the file system freeze in a Linux guest does not work or is not available). Here’s the table from MSDN of what type of checkpoint can be used when. I conclude that the chosen default is the best fitting one for most scenarios.

You also have the option of choosing standard checkpoints for a virtual machine. That gives you exactly the same behavior as in all previous versions of Hyper-V.
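For reference, these choices line up with the values accepted by the `-CheckpointType` parameter of `Set-VM`. The sketch below only illustrates that mapping. The virtual machine name `DemoVM` is hypothetical, and you would normally run just one of these lines.

```powershell
# Hypothetical VM name, used for illustration only.
$vmName = 'DemoVM'

# Production checkpoints, with a standard checkpoint allowed as fallback.
Set-VM -Name $vmName -CheckpointType Production

# Production checkpoints only; no fallback to a standard checkpoint.
Set-VM -Name $vmName -CheckpointType ProductionOnly

# Standard checkpoints, the behavior of earlier Hyper-V versions.
Set-VM -Name $vmName -CheckpointType Standard

# No checkpoints at all for this virtual machine.
Set-VM -Name $vmName -CheckpointType Disabled
```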

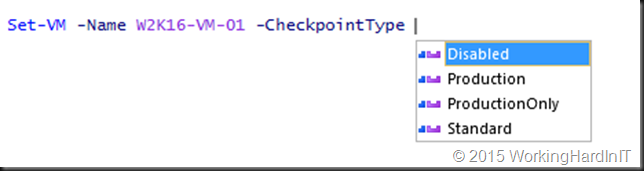

I love the GUI for ad hoc work, but when I need to do this on dozens or hundreds of virtual machines, or potentially tens of thousands when running a larger private cloud, it is not the way to go. PowerShell is your long time trusted friend here!

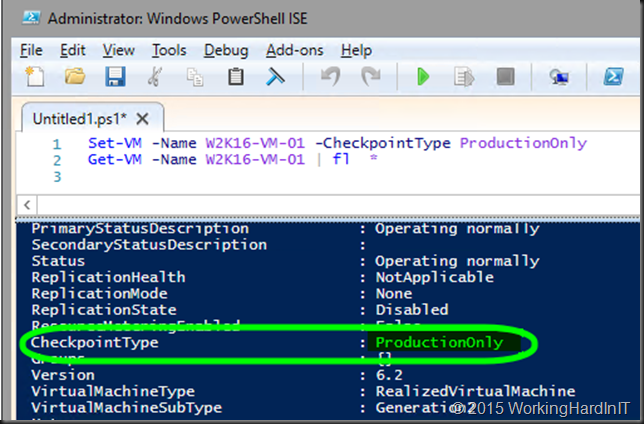

As you can see, with just the “-CheckpointType” parameter you control the checkpointing behavior. And as it is very easy to grab all virtual machines on a host or cluster, setting this for all or a selection of your virtual machines is easy and fast. Let’s set it to “ProductionOnly” and grab the setting for that VM via PowerShell.
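In text form, that could look something like the sketch below. The virtual machine name `DemoVM` and the `SQL*` name filter are assumptions made for illustration, so adapt them to your own environment.

```powershell
# Hypothetical VM name, used for illustration only.
$vmName = 'DemoVM'

# Allow production checkpoints only for this virtual machine.
Set-VM -Name $vmName -CheckpointType ProductionOnly

# Read the setting back to verify it.
Get-VM -Name $vmName | Select-Object Name, CheckpointType

# The same change for a selection of virtual machines on this host.
# The 'SQL*' filter is just an example selection.
Get-VM |
    Where-Object { $_.Name -like 'SQL*' } |
    Set-VM -CheckpointType ProductionOnly
```

Because `Set-VM` accepts virtual machine objects from the pipeline, the selection logic can be whatever fits your naming or tagging scheme.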

When you create a checkpoint on a Windows Server 2016 Hyper-V host you’ll even get a nice notification by default (you can turn it off) telling you that the production checkpoint used backup technology in the guest operating system.

It’s also important to realize that this capability is the basis of the new checkpoint based way of making backups in Windows Server 2016 as well. But that’s a subject for another blog post. Thank you for reading!