A remarkably little-known feature in Windows Server 2012 R2 (and Windows 8.1) is the ability to export one or more running virtual machines.

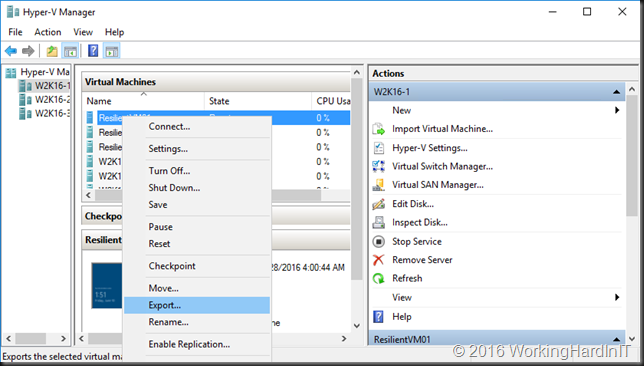

Just right-click the VM in Hyper-V Manager, select Export from the context menu and follow the wizard to pick an export location. Easy. This is also possible via PowerShell, so you can automate it. The result is a VM you can import, which gives you a copy of the original virtual machine in a saved state, as it was at the point in time you exported it.
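As a rough sketch of the PowerShell route, the `Export-VM` cmdlet from the Hyper-V module does the job. The VM name `DemoVM` and the export path `E:\Exports` are placeholders made up for illustration, so substitute your own.

```powershell
# Export a single running VM (VM name and path are hypothetical placeholders).
Export-VM -Name 'DemoVM' -Path 'E:\Exports'

# Export every VM that is currently running on the local host.
Get-VM | Where-Object { $_.State -eq 'Running' } | Export-VM -Path 'E:\Exports'
```

Each VM ends up in its own subfolder under the export path.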

More people seem to know about the capability to export a checkpoint of a running virtual machine than about the capability to export the running VM itself. I noticed this because some people figured the latter was a new feature in Windows Server 2016. No, it’s not. We’ve had this option since Windows 8.1 and Windows Server 2012 R2.

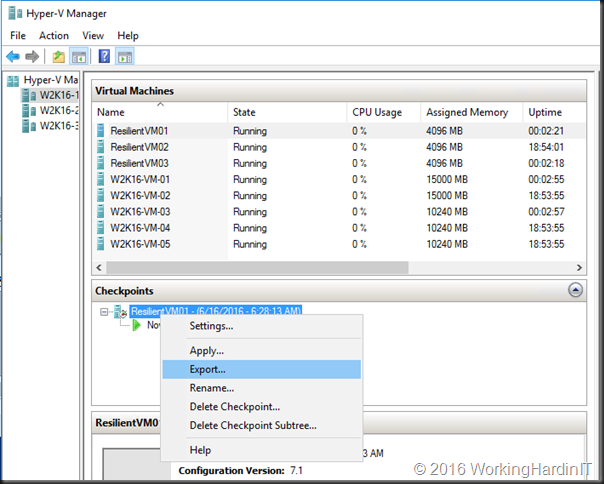

So why even have the option of exporting a checkpoint of a running VM? Because this enables you to have exports from various points in time, which is pretty cool and handy during test and development, troubleshooting or lab work. As a standard checkpoint of a running VM includes its running state in Windows Server 2012 R2, I prefer to shut down the VM, create a checkpoint and start the VM again. When I then export that checkpoint I don’t have to worry about the state in the VM at that point in time, as it was shut down.
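The shut down, checkpoint, start, export sequence could be scripted along these lines. Treat it as a sketch: the VM name, checkpoint name and export path are placeholders, not anything prescribed by Hyper-V.

```powershell
$vmName         = 'DemoVM'          # hypothetical VM name
$checkpointName = 'Clean-Baseline'  # hypothetical checkpoint name

# Shut down the guest so the checkpoint holds no running state.
Stop-VM -Name $vmName

# Create the checkpoint while the VM is off, then bring the VM back up.
Checkpoint-VM -Name $vmName -SnapshotName $checkpointName
Start-VM -Name $vmName

# Export the checkpoint while the VM is running again.
Export-VMSnapshot -VMName $vmName -Name $checkpointName -Path 'E:\Exports\Checkpoints'
```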

For some workloads this isn’t a big deal, but for others it is not a great experience, hence the fact that checkpoints are “not supported” in production, only for test and dev.

In Windows Server 2016 we now have production checkpoints. That means that when we apply such a checkpoint we have a consistent state, just like when we restore a VM from a backup. You’ll have to boot the VM after applying the checkpoint; it does not come up running with the state it had when the checkpoint was taken. Well, not unless you opt to create standard checkpoints. In many cases this reduces the need for me to shut down a VM before I create a checkpoint to export.

When you export a running VM in Windows Server 2016 you’ll have a copy of it in saved state. Just like you did in Windows Server 2012 R2, no change there. When you import that you’ll have a VM in saved state that you need to start up. If you want an application consistent copy, create a production checkpoint first and export that one.
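For the application-consistent route on Windows Server 2016, something like the following should work. It is a sketch under assumptions: the names and paths are placeholders, and locating the exported `.vmcx` configuration file by searching the export folder is my own shortcut, so check the folder contents before relying on it.

```powershell
$vmName         = 'DemoVM'               # hypothetical VM name
$checkpointName = 'Prod-Export'          # hypothetical checkpoint name
$exportPath     = 'E:\Exports\Production' # hypothetical export location

# Ask Hyper-V to take production checkpoints for this VM.
# 'Production' falls back to a standard checkpoint if a production one
# cannot be created; 'ProductionOnly' does not fall back.
Set-VM -Name $vmName -CheckpointType Production

# Create the checkpoint on the running VM and export it.
Checkpoint-VM -Name $vmName -SnapshotName $checkpointName
Export-VMSnapshot -VMName $vmName -Name $checkpointName -Path $exportPath

# Import the export as a copy with a new ID (assumes exactly one .vmcx
# configuration file exists under the export folder).
$config = Get-ChildItem -Path $exportPath -Recurse -Filter '*.vmcx' |
    Select-Object -First 1
Import-VM -Path $config.FullName -Copy -GenerateNewId
```

Importing with `-Copy -GenerateNewId` lets the copy live next to the original on the same host, which is handy for lab work.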

So there you go. The feature to live export a running virtual machine was here before and it’s still here. The real extra capability with live exports comes from leveraging the live export of a checkpoint of a running virtual machine and the fact that we now have production checkpoints.