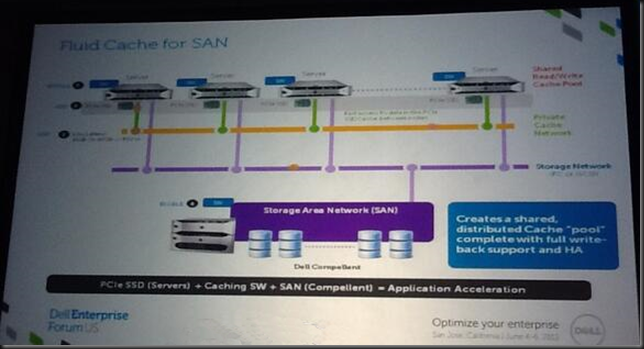

Fluid Cache For SAN

At Dell World 2013 in Austin, Texas I spent some time talking to engineers & managers about Fluid Cache For SAN. The demo in the keynote was enough to grab my full attention, especially as a Compellent customer.

What is it?

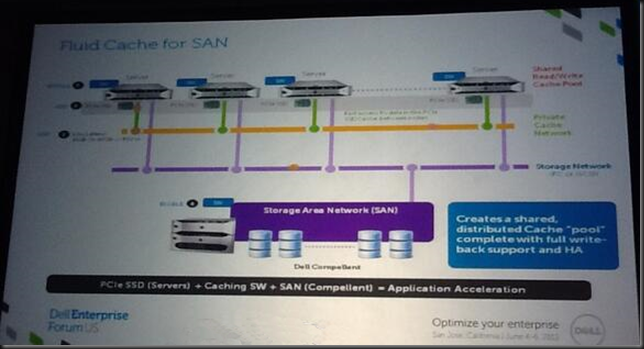

Dell already offers Fluid Cache for DAS in its PowerEdge servers. Now it’s time to bring this to their best SAN offering, the Compellent, and make Fluid Cache suitable for shared storage clustering. The way to do that cost effectively and with high performance is to build on the success of on-board (local to the server) high performance storage and share it through software in a physically shared-nothing replication/sync model. To make this happen they use a 10/40Gbps Ethernet solution leveraging RoCE (RDMA over Converged Ethernet). Yes, that very technology I have been investing time & effort in for SMB Direct, which we leverage for CSV & Live Migration traffic and with SOFS in Windows Server 2012 R2.

Basically the super low latency and high throughput enable the flash memory to be synced across all nodes in a cluster, so each node sees all of the cluster’s cache memory. For redundancy you will need at least 3 nodes in a cluster. Dell will scale Fluid Cache For SAN to 128 nodes. Windows Server 2012 R2 can handle 64 nodes, which some think is ridiculously high, but then again, Dell aims even higher so it’s not as weird as you think. Some people have really huge computing needs. Just remember that 10 years ago you probably found 16GB of RAM extravagant.

Why this architecture?

Dell uses server based “shared nothing flash storage” & high speed, low latency synchronization to create a logical cluster wide shared pool of flash memory. This means they achieve stellar low latency, as the flash storage is inside the servers, close to the processors, and as such delivers excellent performance for the workloads. Way better than a “just” flash only SAN can. For data integrity they commit the data only when it is written to the Express Flash drive(s) of one server, then also to another, and verified. This needs to happen very fast and that’s where the RoCE network comes into play. Later, at less speed critical times, the data is pushed out to the Compellent SAN for storage. If that SAN is a flash based setup, think about the capabilities this gives you in performance. Likewise, highly active data read from the Compellent SAN is pulled in and cached (also in multiple copies) on the Express Flash modules.

While two servers, each with a copy of the data on Express Flash modules, would suffice, Dell requires at least three. This is just a plain common sense N+1 redundancy design to have high availability even when a node fails. A cool thing to note is that you can build larger clusters with 3 nodes each having one or more Express Flash modules, and additional nodes don’t need them as long as they can read the cache of those 3. So the cost of this can be managed. The drawback is that you don’t read & write to a local Express Flash module on those extra nodes. If you want that you’ll need to put more $ on the table.
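To make the write path described above a bit more concrete, here is a minimal toy sketch in Python. It is purely my own illustration, not Dell’s implementation: the class names `CacheNode` and `ReplicatedWriteBackCache` are hypothetical, plain dictionaries stand in for the Express Flash modules and the SAN, and node recovery and the RoCE transport are not modelled at all.

```python
from dataclasses import dataclass, field


@dataclass
class CacheNode:
    """A server with a local flash cache (hypothetical model)."""
    name: str
    flash: dict = field(default_factory=dict)
    alive: bool = True


class ReplicatedWriteBackCache:
    """Toy model: a write is committed only once two nodes hold a verified copy."""

    def __init__(self, nodes, san):
        if len(nodes) < 3:
            raise ValueError("at least three cache nodes are required")
        self.nodes = nodes
        self.san = san        # dict standing in for the SAN
        self.dirty = set()    # blocks cached but not yet flushed to the SAN

    def write(self, block, data):
        # Place the data on the first two nodes that are still alive.
        targets = [n for n in self.nodes if n.alive][:2]
        if len(targets) < 2:
            raise RuntimeError("cannot place two copies; write not committed")
        for node in targets:
            node.flash[block] = data
        # Verify both copies before acknowledging the write.
        if not all(node.flash.get(block) == data for node in targets):
            raise RuntimeError("verification failed; write not committed")
        self.dirty.add(block)
        return True

    def read(self, block):
        # Serve from any surviving cached copy, else fall back to the SAN.
        for node in self.nodes:
            if node.alive and block in node.flash:
                return node.flash[block]
        return self.san[block]

    def flush(self):
        # Later, at a less speed critical time, push dirty blocks to the SAN.
        for block in sorted(self.dirty):
            self.san[block] = self.read(block)
        self.dirty.clear()


nodes = [CacheNode("node-a"), CacheNode("node-b"), CacheNode("node-c")]
san = {}
cache = ReplicatedWriteBackCache(nodes, san)
cache.write("block-1", b"payload")
nodes[0].alive = False            # simulate losing one node
print(cache.read("block-1"))      # still served from the second copy
cache.flush()
print("block-1" in san)
```

The point of the sketch is only the ordering: two copies, verification, acknowledgement, and the SAN write happening afterwards. That ordering is why losing a single node does not lose a committed write.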

The thing to note here is that the servers/SAN are connected over RoCE/RDMA. Well, this looks familiar. What technology can also leverage RDMA? SMB Direct in Windows Server 2012 R2! And where do we use this, amongst other things? Storage IO in Scale Out File Server, CSV traffic, Live Migration …

The big benefit of this design is not just that it takes your SAN to the next level but also that, if Dell does this right, they won’t break any of the good stuff like VSS aware snapshots with Replay Manager, Automatic Data Tiering, Live Volumes, Live Migration etc. A lot of the high IOPS/low latency solutions out there based on fast local flash break a lot of the good stuff and reduce centralized storage management. What if you can have your cookie and eat it too?

Demo Time at Dell World

Dell demonstrated an Oracle database load on an eight node cluster of PowerEdge R720 servers with Intel Xeon E5 processors, running Linux (no Windows Server 2012 R2 support yet). These servers each used 350GB PCI-Express flash cards (“only” PCI-Express 2.0 capable, by the way). This cluster, using a Compellent SAN, managed to get a result of more than 5 million IOPS at 6 millisecond response times, delivering 12,000 tps for 14,000 client connections. This was read only. If they dropped the Fluid Cache for SAN they could “only” achieve 2,000 clients (6 times fewer clients, with 4 times fewer transactions and 99% slower responses). See this movie for more info: http://www.youtube.com/watch?v=uw7UHWWAtig and watch the keynote from Dell World 2013 here

Where would I use this?

Cost will determine use cases and this is unknown for now. We can only look at what Fluid Cache for DAS costs right now and speculate. I for one hope/bet that Dell won’t price itself out of the market (they have a lot of competition from big & small players in a “good enough is good enough” world with a cloud mindset all around). Make it too expensive and we might be happy with “just” 500,000 IOPS at much less cost. It’s a fine line. Price it right, support it well and you might win the bulk of sales in the storage wars. Based on the DAS solution we’re looking at at least $8,000 per server: the license ($3,500 for DAS, see http://www.theregister.co.uk/2013/03/05/dell_fluid_cache_server_acceleration/), the cost of a PCI-Express flash module (> $5,000, see http://en.community.dell.com/techcenter/extras/chats/w/wiki/4480.3-5-2013-techchat-fluid-cache-for-das.aspx) & a yearly maintenance fee. Then we need to factor in the cost of the RDMA/RoCE capable NICs, the (dedicated) Force10 switches (2 for redundancy) that are at least 10Gbps (S4810?) or probably 40Gbps (S6000?) & cabling. So this is not a cheap solution and you won’t just “throw it in” on a quiet afternoon to see what it does for you. Not that there will be a DIY “throw it in” kit, I think; it’s a step above plug and play. If they keep it affordable and do some other things for Windows Server 2012 R2 / Hyper-V they can be the absolute number one SAN vendor for any Microsoft customer. But that’s another blog topic.
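As a back-of-the-envelope aid for the speculation above, here is a small Python sketch that adds up the pieces. Only the license (3,500) and flash module (5,000, a lower bound) figures come from the Fluid Cache for DAS sources linked above; the function name `cache_tier_cost` is my own, the NIC and switch prices are unknown and default to 0 as placeholders you would fill in yourself, and the yearly maintenance fee and cabling are left out.

```python
def cache_tier_cost(
    cache_nodes,
    license_per_node=3500,   # Fluid Cache for DAS license, per the linked article
    flash_module=5000,       # lower bound for one PCI-Express flash module
    modules_per_node=1,
    nic_per_node=0,          # placeholder: RoCE capable NIC price is unknown
    switches=2,              # two switches for redundancy
    switch_price=0,          # placeholder: Force10 switch price is unknown
):
    """Rough acquisition cost in dollars; maintenance and cabling excluded."""
    per_node = license_per_node + flash_module * modules_per_node + nic_per_node
    return cache_nodes * per_node + switches * switch_price


# Minimum supported cluster of three cache nodes, DAS based prices only.
print(cache_tier_cost(3))

# Same cluster with hypothetical NIC and switch prices plugged in.
print(cache_tier_cost(3, nic_per_node=1000, switch_price=10000))
```

With the defaults this works out to 8,500 per node, in line with the “at least $8,000 per server” estimate, before any networking cost is added.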

Cost is indeed something that might make it a show stopper for us. I just can’t tell yet. One of the key factors is that, if affordable, it could give the point solutions we now see pop up more and more in storage a run for their money. While those are cheap and workable in “good enough is good enough” scenarios, they take some of the centrally shared storage advantages away. But if we ever do a stateful VDI project in an environment with high end physical desktops (500GB or more local storage, SSD disks, 8 core CPU, 8-32GB DDR3, dual or more screens) that run ArcGIS, AutoCAD, Visual Studio, SQL Server, Outlook with 5GB mailboxes, large documents & huge files (images), this might be the enabler we need to make VDI happen & work as desired with current all-purpose Compellent SANs. If the price is right it could enable VDI in what are now “NO GO” scenarios. And those are plentiful … Another use case I see is a virtualized SQL Server environment on Hyper-V with general purpose shared storage. We’re doing very well but the day might arrive that we need those IOPS in order to take it even further. Don’t laugh, but realize how many IOPS an SSD delivers to a workstation today; that’s what your users expect & demand. Want to fail at VDI? Have it outperformed by a 4 year old physical PC that you slapped an SSD into.

Could it help keep excessive IOPS away from the SAN, making it capable of doing more over a longer lifetime? In other words, can it play a part in addressing the storage QoS issue across servers/clusters/storage systems for non workload aware storage solutions?

So I might have some homework to do. For our next SQL Server cluster we’ll look at the next generation of servers & start counting our PCI-Express slots. We already consume 4 PCI-Express slots (2*FC & 2*dual port 10Gbps) in our Hyper-V design. That’s another discussion, but they are built purposely for performance under any condition & to be highly redundant. A health check / improvement track by Microsoft for our SQL Server environment has proven this to be an outstanding setup (a nice e-mail to see your bosses get, by the way). I digress; free PCI-Express slots should not be an issue, as we also don’t need the FC cards in the Fluid Cache nodes. The storage IO uses the RoCE network, to which the Compellent SAN attaches.

Cost is very important in determining if we’ll ever get to deploy it. The cloud is here, and while that is far from cheap either, it’s a lot easier to sell than internal IT for various reasons. That’s just how the powers that be roll right now & how things are.

What we’ll get in our hands

There was a lot of love between Dell & Samsung at Dell World. Talking to Dell at the server/storage/networking booths I understood that Samsung is going to produce flash modules for this that support PCI-Express 3.0 and the industry backed NVM Express host interface for solid state drives, which will reduce latency by a third compared to now. It seems they will also produce higher capacity cards (800 GB and 1.6 TB) than those used in the demos. So capacity will increase & latency will drop even more. They leverage the Force10 10Gbps or 40Gbps switches for the RoCE network. As Dell & Mellanox are cooperating heavily (Mellanox Collaborates with Dell to Deliver 10/40GbE Solution for Mainstream Servers and Networking Solutions) my bet is on Mellanox for the cards. Broadcom is not there yet for it to happen in time and Intel has no RoCE cards afaik. They seem to be playing the waiting game before they jump in.

Magic Ball Time, Speculation & Questions.

I’m not a Dell server/storage designer or architect, and those who are don’t tell me things for me to plaster all over the internet, so this really is magic ball time …

I’ll show my ignorance of what Samsung does under the hood here. When I hear that the next generation of Dell servers can have 6TB of RAM, I can only speculate that with the advent of DDR4 in servers & ever dropping cost the path is open to leverage NV-RAM disks for the read/write cache in Fluid Cache for SAN as well, a bit like what IDT did: http://us.generation-nt.com/idt-announces-world-first-pci-express-gen-3-nvme-nv-dram-press-3732872.html. The persistence comes from writing the DRAM content to NAND at shutdown. Can we do that fast enough with 1.6 TB sized caches? Can we fit enough of those modules on a card? What would that do for IOPS & latency? Does that even make sense at this moment in time?
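To get a feel for the “fast enough” question, the arithmetic is simply cache size divided by sustained write bandwidth to NAND. The Python sketch below is only that division; the bandwidth figures of 1, 2 and 4 GB/s are assumptions I picked for illustration, not specifications of any Samsung or IDT product, and `flush_seconds` is a name I made up.

```python
GB = 10**9  # decimal gigabyte, in bytes


def flush_seconds(cache_bytes, nand_write_bytes_per_s):
    """Time needed to dump a DRAM cache to NAND at a sustained write rate."""
    return cache_bytes / nand_write_bytes_per_s


cache_size = 1600 * GB  # a 1.6 TB cache

# Assumed sustained NAND write rates; purely illustrative values.
for gb_per_s in (1, 2, 4):
    seconds = flush_seconds(cache_size, gb_per_s * GB)
    print(f"{gb_per_s} GB/s -> {seconds:.0f} s ({seconds / 60:.1f} min)")
```

At an assumed 1 GB/s that is 1,600 seconds, or close to 27 minutes of power that has to be held up after a shutdown is triggered, which is why the question is not a trivial one.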

What if we could leverage the DDR4 DIMMs in the server itself? This would perhaps cut costs and also save us some valuable PCI-Express 3.0 slots for our 10Gbps or better addiction. Sure, there is no persistence then, but the content is distributed redundantly over the cluster anyway. Is that safe enough to make it feasible? What if we need to shut down the cluster? I guess it’s not that easy and perhaps we just need to make sure future motherboards have 8 or more PCI-Express 3.0 slots & not worry about that. Or move to 40/100Gbps & have less need for NICs. Yeah, that’s what was said of 10Gbps in the early days …

Support for Windows?

While it’s not there yet, I have absolutely no doubt that they will bring it to Windows Server 2012 R2 and higher. After all, Windows is a huge on-premises market for native workloads like SQL Server, VDI and Hyper-V. The number of sales opportunities in the Microsoft ecosystem is growing (despite cloud) while others are stagnant or dropping. On top of that, the low cost of Hyper-V leaves money to be spent on Fluid Cache for SAN. As Dell is in business to make money, they will not leave that big chunk of cash on the table.

When can we get our hands on this technology?

Timing wise, my best guesstimate is early to late Q2 2014. Interesting times, people, interesting times.