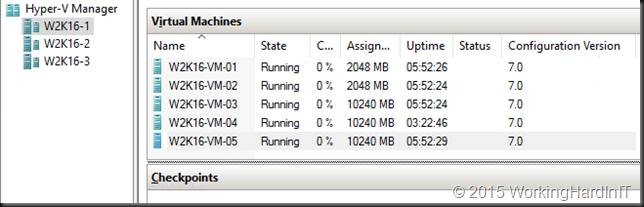

When building a Windows Server 2016 TPv4 Hyper-V cluster this weekend I noticed that we now have a new version of the virtual machine configuration.

When we migrate (rolling cluster upgrade, move to new cluster or host, import on new cluster or host) virtual machines to Windows Server 2016 Hyper-V from Windows Server 2012 R2, the virtual machine’s configuration file isn’t automatically upgraded. In the past it was, which blocked moving back to a previous edition of Hyper-V. Now we can do this until we manually update the virtual machine configuration version. This block going back but it enables our new virtual machine features. Version 5.0 is the one that’s compatible with Windows Server 2012 (R2) Windows Server 2016. Version 6.2 was what we had in TPv3 and could only run on Windows Server 2016. Windows Server 2016 TPv4 Hyper-V brings virtual machine configuration version 7.

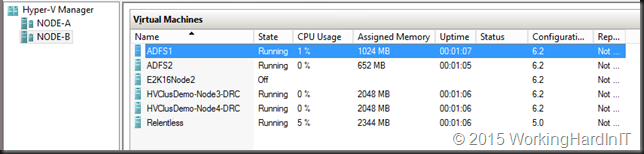

When you have virtual machines that come from Technical Preview v3 and you had updated the virtual machine configuration of your virtual machines or created brand new ones these would be at version 6.2. Since I do not consider it wise to keep testing these on a version of a previous preview I updated them all to version 7.

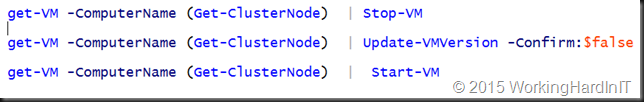

The code below grabs all VMs on all cluster nodes (even the none clustered VMs), shuts them down, updates the configuration version and starts them again. It’s just a quick example.

Now do NOT do this to virtual machines with configuration version 5 that you might want to move back / import to a Windows Server 2012 R2 Hyper-V host. But if you know you’ll be testing with the new features, have a blast, like me here on the TPv4 lab cluster.

I’m still looking for the features version 7.0 enables, probably nested virtualization is one of those features I’m guessing. Happy testing!