Introduction

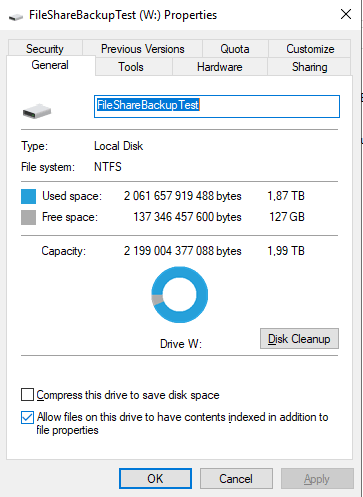

In this blog, we will demonstrate one of the things that can go wrong when someone gets hold of the administrative credentials for your Veeam Backup & Replication server. They can do more than "just" delete all your backups, replicas, etc. When they can log on to the Veeam Backup & Replication server itself, they can also grab all the credentials from the Veeam configuration database. Those credentials normally have privileges that you do not want to fall into the wrong hands. They are quite literally the keys to the kingdom. Hence, protecting your Veeam Backup & Replication server is critical.

Protecting your Veeam Backup & Replication Server is critical

Security is not about one feature, technology or action. It requires a holistic approach, starting with physical security. You also need to adhere rigorously to the principle of least privilege, while locking down access, reducing the attack surface, leveraging segmentation, etc.

A key element lies in prevention: you must prevent those credentials from being harvested. For this reason, you absolutely must practice privileged credential hygiene. Today you also want to leverage multi-factor authentication to protect access even better. Layering all these measures, and more, helps prevent unauthorized access in the first place, even when one measure fails. Read Veeam Backup & Replication 9.5 Update 3 — Infrastructure Hardening for more details on this.

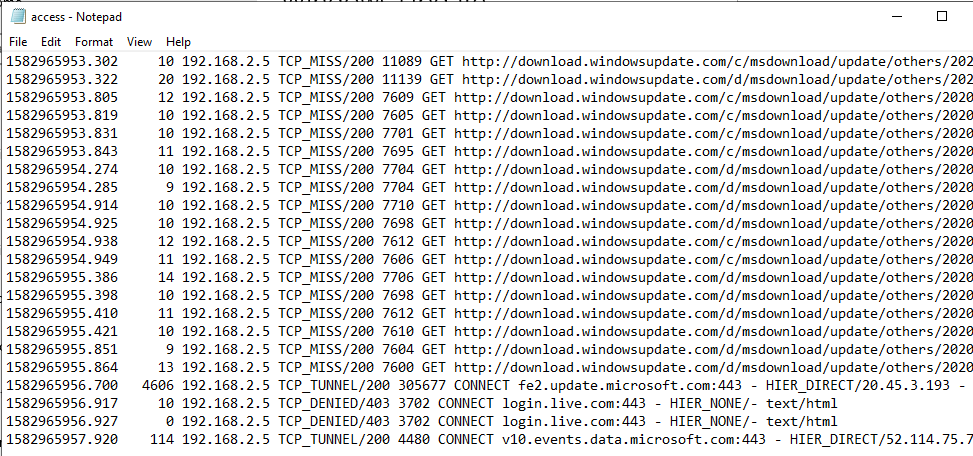

Veeam Backup & Replication itself requires credentials to do its work of protecting data and workloads. Access to servers, proxies and repositories, interaction with virtual machines, etc. cannot happen without them. Veeam stores the passwords for these accounts using strong encryption: it uses the Microsoft CryptoAPI (FIPS certified) with a machine-specific encryption key.

As a side note, you might have seen the big fuss in January 2020 around the critical CryptoAPI vulnerability (CVE-2020-0601). It is a reminder of why you need to keep your systems patched.

Because the key is machine-specific, any attempt to decrypt those passwords on a host other than the one that encrypted them fails. This means that even if someone steals the configuration database, or in some other shape, way or form gets hold of the encrypted passwords, they cannot decrypt them elsewhere. This is industry-standard practice and quite safe. What you need to know is that when someone gains access to your machine with local administrative rights, all bets are off.

What can happen?

The moment an attacker logs on to the Veeam Backup & Replication server with administrative rights, it is game over.

They will be able to grant themselves access to SQL Server and query it for the credentials. With that information, all they need to do is load and use a Veeam DLL to decrypt them. When this runs on the server where the passwords were encrypted, it succeeds. If someone copies the encrypted passwords and tries to decrypt them on another host, this fails because that host does not have the right machine-specific key.

Let me emphasize once more that this is not an insecure implementation by Veeam. When you store encrypted passwords for a service, that service must be able to decrypt them. Otherwise, it could never use them. You cannot retrieve the passwords via the GUI or the Veeam PowerShell commands. Via code, however, it is quite possible.

Sample code to prove that protecting your Veeam Backup & Replication Server is critical

I assembled a little PowerShell script that grabs the data from the Veeam configuration database. It filters out the entries whose password is an empty string, then loops through the remaining ones and decrypts their passwords. In the end, I decided not to post the script, as it might help people with bad intentions. I know that won't stop determined bad actors in their tracks, and maybe I will update this post later. For now, though, I have left it out.

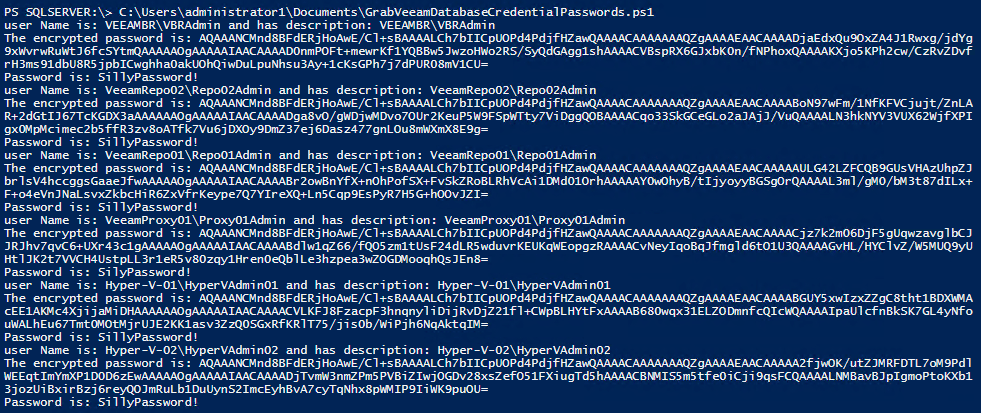

In the screenshot below you can see the results. This is a demo lab with demo credentials, so there is no harm in showing them to you. Remember that you can only decrypt the passwords on the Veeam Backup & Replication server where they were encrypted.

There they are, the users with the encrypted and decrypted passwords

To prove the point, we grab the encrypted passwords and try to decrypt them on another VBR server that we have around for this purpose. This fails with the error: Exception calling "GetLocalString" with "1" argument(s): "Key not valid for use in specified state."

As you can see, even if you get hold of the encrypted strings, they cannot be decrypted on another machine. Decryption only works on the machine that encrypted them.

Conclusion

While this might come as a shock to some when they first learn of it, it is not a gaping security hole. It simply shows that security is more than encryption. It takes multiple measures on multiple levels to protect assets. I repeat: protecting your Veeam Backup & Replication server is critical. For many people, this is indeed an eye-opener. The lesson is that you must protect your assets adequately. Do not bank on any single feature to hold off any and all threats by itself. That is asking for the impossible.

I do hope that Veeam will add MFA support across all its software in the future. That would also help protect access via the Veeam Backup & Replication console.