The Problem

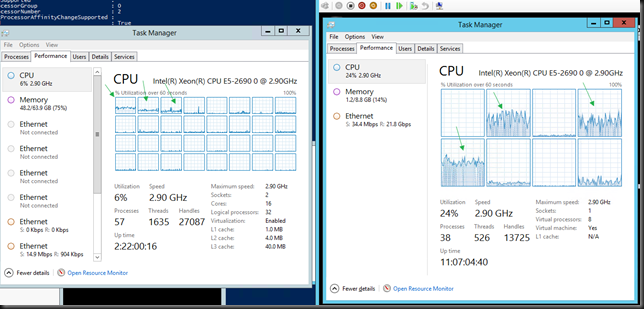

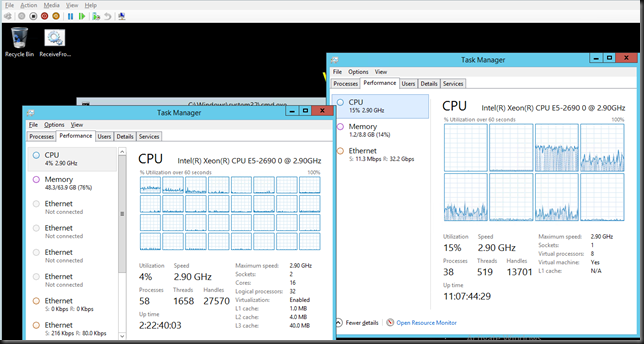

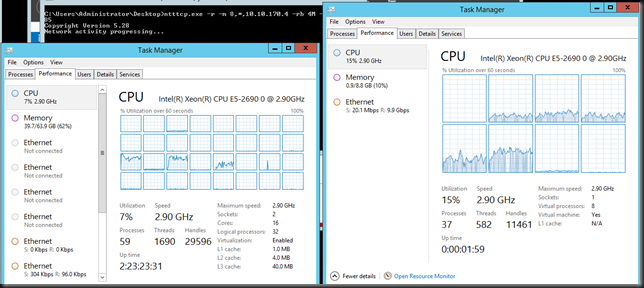

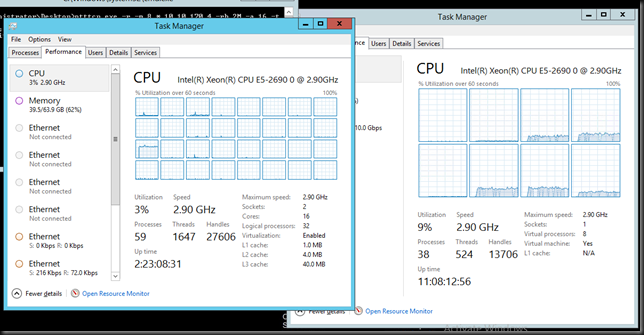

I recently had to troubleshoot a Windows Server 2012 R2 Hyper-V cluster where SMB Direct is leveraged for live migration. It seemed to work, sometimes perfectly, but at times it ran in “slow” motion. The VMs got queued for live migration, it took some time for the migration to start, and then it would sometimes finish and sometimes fail. This did not happen between all the nodes. I diligently checked out the SMB Direct network but that was OK on all nodes. Basically the LM network was perfectly fine.

To me this indicated that the hosts potentially had issues communicating with each other to coordinate the live migration. But pings and such looked good, there was connectivity, on the surface all seemed well. In the event log details we saw indications that this was indeed the case. Unfortunately I did not get the opportunity to take screenshots or copies of the events in this particular situation.

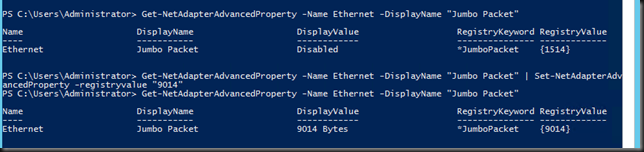

The nodes had a separate 2*1Gbps native team for LAN access and backups. But diving deeper I noticed that Jumbo Frames had been set on some of those member NICs and not on others. So these settings differed from node to node, and that was leading to the symptoms described above.
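To make that kind of check less dependent on clicking through NIC properties, here is a minimal Python sketch of the comparison itself. It assumes you have already collected the Jumbo Frame value of every team member NIC on every node by whatever means you prefer. The node names, NIC names and values in `inventory` are hypothetical, as is the helper `find_jumbo_mismatches`; this is not what I ran on the cluster in question.

```python
from collections import defaultdict


def find_jumbo_mismatches(settings):
    """Group NICs by their Jumbo Frame value.

    settings: {node_name: {nic_name: jumbo_value}}
    Returns {jumbo_value: [(node, nic), ...]} when more than one
    distinct value is in use, or an empty dict when all NICs agree.
    """
    by_value = defaultdict(list)
    for node, nics in sorted(settings.items()):
        for nic, value in sorted(nics.items()):
            by_value[value].append((node, nic))
    # A single distinct value means the setting is consistent everywhere.
    return dict(by_value) if len(by_value) > 1 else {}


# Hypothetical inventory: one member NIC on node1 has Jumbo Frames enabled.
inventory = {
    "node1": {"LAN-NIC1": 9014, "LAN-NIC2": 1514},
    "node2": {"LAN-NIC1": 1514, "LAN-NIC2": 1514},
}

mismatches = find_jumbo_mismatches(inventory)
if mismatches:
    print("Inconsistent Jumbo Frame settings found:")
    for value, nics in sorted(mismatches.items()):
        print(f"  {value}: {nics}")
else:
    print("Jumbo Frame settings are consistent on all nodes.")
```

An empty result means every NIC reports the same value. Anything else lists which NICs sit in which group, so the odd ones out are easy to spot.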

Conclusion

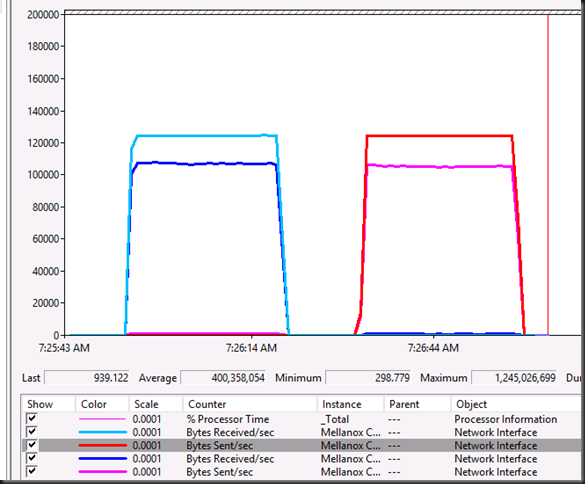

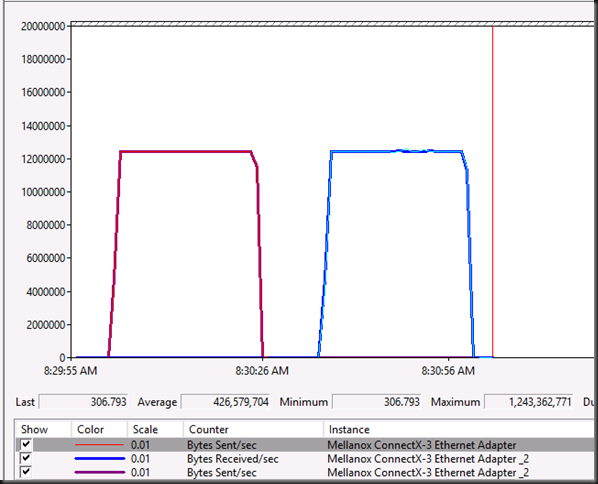

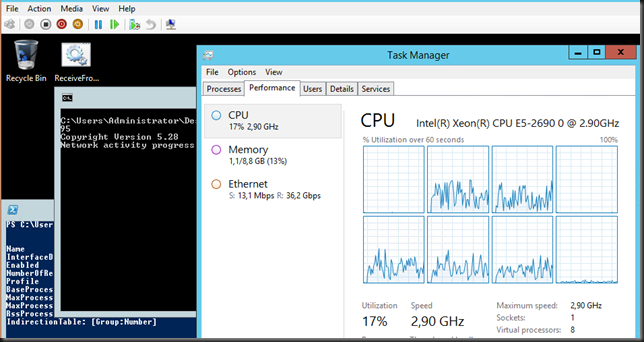

You can use Jumbo Frames on your live migration network. Testing has shown this to be beneficial. When you’re doing SMB Direct it won’t make such a big difference but it does not hurt. When SMB Direct fails you’ll fall back to SMB with Multichannel and there it helps more! See Live Migration Can Benefit From Jumbo Frames. While SMB Direct (InfiniBand, RoCE & iWARP) supports Jumbo Frames, the limited testing I have done there indicates only a small increase (2%) in throughput, so I’m not sure it’s even worthwhile when doing RDMA.

When you use Jumbo Frames on your host LAN NIC or team of NICs (handy if you use it to do backups as well) you need to be consistent end to end. Meaning ALL hosts, ALL NICs & ALL switches / switch ports. Being inconsistent in this on the cluster nodes was what caused the slow to failing live migrations. You need to have good communications between the hosts themselves and AD. Just unplug the LAN from a Hyper-V cluster host to demo this => live migration to and from that node and the rest of the cluster won’t work. Mismatched Jumbo Frames or potentially other network settings make this less obvious. Another “fun” example to troubleshoot is a NIC team where the member NICs are in different VLANs.
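A common way to verify end-to-end consistency is a ping with the "don't fragment" flag set and a payload that exactly fills the MTU. The sketch below only does the arithmetic and builds the Windows command line; it assumes IPv4 with a 20-byte header (no options) plus the 8-byte ICMP header. The target name `node2` and the helper names are hypothetical.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def max_ping_payload(mtu):
    """Largest ICMP payload that fits in one unfragmented IPv4 packet."""
    overhead = IPV4_HEADER_BYTES + ICMP_HEADER_BYTES
    if mtu <= overhead:
        raise ValueError(f"MTU must be larger than {overhead} bytes")
    return mtu - overhead


def windows_ping_command(target, mtu):
    """Build a Windows ping command: -f sets don't fragment, -l sets payload size."""
    return f"ping -f -l {max_ping_payload(mtu)} {target}"


# Hypothetical target; run the printed command from each host to every other host.
print(windows_ping_command("node2", 9000))  # ping -f -l 8972 node2
print(windows_ping_command("node2", 1500))  # ping -f -l 1472 node2
```

If the large ping fails between two hosts while the standard-size one succeeds, something on that path (a NIC, a team member or a switch port) is not configured for Jumbo Frames.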